I am using ARKit to collect the 3D vertices of the face mesh. I have read: Mapping image onto 3D face mesh and Tracking and Visualizing Faces.

I have the following struct:

struct CaptureData {
    var vertices: [SIMD3<Float>]

    var verticesformatted: String {
        let verticesDescribed = vertices.map({ "\($0.x):\($0.y):\($0.z)" }).joined(separator: "~")
        return "<\(verticesDescribed)>"
    }
}

I have a Start button that begins capturing the vertices:

@IBAction private func startPressed() {
    captureData = [] // Clear data
    currentCaptureFrame = 0 // Initial capture frame
    // Capture self weakly so the repeating timer does not keep the view controller alive.
    fpsTimer = Timer.scheduledTimer(withTimeInterval: 1 / fps, repeats: true) { [weak self] _ in
        self?.recordData()
    }
}

private var fpsTimer = Timer()
private var captureData: [CaptureData] = [CaptureData]()
private var currentCaptureFrame = 0

And a Stop button that stops capturing and saves the data:

@IBAction private func stopPressed() {
    do {
        fpsTimer.invalidate() // Turn off the timer
        let capturedData = captureData.map { $0.verticesformatted }.joined(separator: "")
        let dir = FileManager.default.urls(for: .documentDirectory, in: .userDomainMask).last!
        let url = dir.appendingPathComponent("facedata.txt")
        // appendLineToURL(fileURL:) is not a standard String method; it is assumed to be a custom extension defined elsewhere.
        try capturedData.appendLineToURL(fileURL: url)
    } catch {
        print("Could not write to file: \(error)")
    }
}

Function for recording the data:

private func recordData() {
    guard let data = getFrameData() else { return }
    captureData.append(data)
    currentCaptureFrame += 1
}

Function for getting the frame data:

private func getFrameData() -> CaptureData? {
    // Avoid force-unwrapping currentFrame and indexing into a possibly empty anchors array.
    guard let arFrame = sceneView?.session.currentFrame,
          let anchor = arFrame.anchors.first as? ARFaceAnchor
    else { return nil }
    return CaptureData(vertices: anchor.geometry.vertices)
}

ARSCNViewDelegate extension:

extension ViewController: ARSCNViewDelegate {
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let faceAnchor = anchor as? ARFaceAnchor else { return }
        currentFaceAnchor = faceAnchor
        if node.childNodes.isEmpty, let contentNode = selectedContentController.renderer(renderer, nodeFor: faceAnchor) {
            node.addChildNode(contentNode)
        }
        selectedContentController.session = sceneView?.session
        selectedContentController.sceneView = sceneView
    }

    /// - Tag: ARFaceGeometryUpdate
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard anchor == currentFaceAnchor,
            let contentNode = selectedContentController.contentNode,
            contentNode.parent == node
            else { return }
        selectedContentController.session = sceneView?.session
        selectedContentController.sceneView = sceneView
        selectedContentController.renderer(renderer, didUpdate: contentNode, for: anchor)
    }
}

I am trying to use the sample code from Tracking and Visualizing Faces:

// Transform the vertex to the camera coordinate system.
float4 vertexCamera = scn_node.modelViewTransform * _geometry.position;

// Camera projection and perspective divide to get normalized viewport coordinates (clip space).
float4 vertexClipSpace = scn_frame.projectionTransform * vertexCamera;
vertexClipSpace /= vertexClipSpace.w;

// XY in clip space is [-1,1]x[-1,1], so adjust to UV texture coordinates: [0,1]x[0,1].
// Image coordinates are Y-flipped (upper-left origin).
float4 vertexImageSpace = float4(vertexClipSpace.xy * 0.5 + 0.5, 0.0, 1.0);
vertexImageSpace.y = 1.0 - vertexImageSpace.y;

// Apply ARKit's display transform (device orientation * front-facing camera flip).
float4 transformedVertex = displayTransform * vertexImageSpace;

// Output as texture coordinates for use in later rendering stages.
_geometry.texcoords[0] = transformedVertex.xy;
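For reference, the same steps can be written on the CPU in plain Swift. This is only a minimal sketch of the math in the shader modifier above, not Apple's implementation: `Matrix4` and `normalizedImagePoint(of:modelView:projection:)` are hypothetical names introduced here, `Matrix4` is a stand-in for `simd_float4x4` so the snippet runs without Apple frameworks, and the final `displayTransform` step is deliberately left out.

```swift
/// Hypothetical column-major 4x4 matrix built only on standard-library SIMD types.
/// It stands in for simd_float4x4 so this sketch has no framework dependencies.
struct Matrix4 {
    var columns: (SIMD4<Float>, SIMD4<Float>, SIMD4<Float>, SIMD4<Float>)

    static let identity = Matrix4(columns: (
        SIMD4<Float>(1, 0, 0, 0),
        SIMD4<Float>(0, 1, 0, 0),
        SIMD4<Float>(0, 0, 1, 0),
        SIMD4<Float>(0, 0, 0, 1)))

    /// Matrix-vector product: a linear combination of the columns.
    static func * (m: Matrix4, v: SIMD4<Float>) -> SIMD4<Float> {
        var result = m.columns.0 * v.x
        result += m.columns.1 * v.y
        result += m.columns.2 * v.z
        result += m.columns.3 * v.w
        return result
    }
}

/// Mirrors the shader steps up to (but not including) the display transform:
/// model-view transform, projection, perspective divide, [-1,1] -> [0,1], Y flip.
func normalizedImagePoint(of vertex: SIMD3<Float>,
                          modelView: Matrix4,
                          projection: Matrix4) -> SIMD2<Float> {
    // Transform the vertex to the camera coordinate system.
    let vertexCamera = modelView * SIMD4<Float>(vertex, 1)
    // Camera projection followed by the perspective divide.
    let clip = projection * vertexCamera
    let ndc = clip / clip.w
    // Map XY from [-1,1] to [0,1] and flip Y for an upper-left origin.
    let u = ndc.x * 0.5 + 0.5
    let v = 1 - (ndc.y * 0.5 + 0.5)
    return SIMD2<Float>(u, v)
}

// With identity matrices, the vertex (0.5, 0.5, 0) lands at UV (0.75, 0.25).
let uv = normalizedImagePoint(of: SIMD3<Float>(0.5, 0.5, 0),
                              modelView: .identity,
                              projection: .identity)
print(uv.x, uv.y) // prints "0.75 0.25"
```

In a real session the two matrices would have to come from ARKit and SceneKit (the node's model-view transform and the camera's projection), and the result would still need the display transform applied to match the shader output.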

I have also read about projecting points (but I am not sure which approach is more applicable):

func projectPoint(_ point: SCNVector3) -> SCNVector3

My question is: how can I use the sample code above to convert the collected 3D face mesh vertices into 2D image coordinates?

I want to get the 3D mesh vertices together with their corresponding 2D coordinates.

Currently, I can capture the face mesh points like this: <mesh_x: mesh_y: mesh_z: ...>

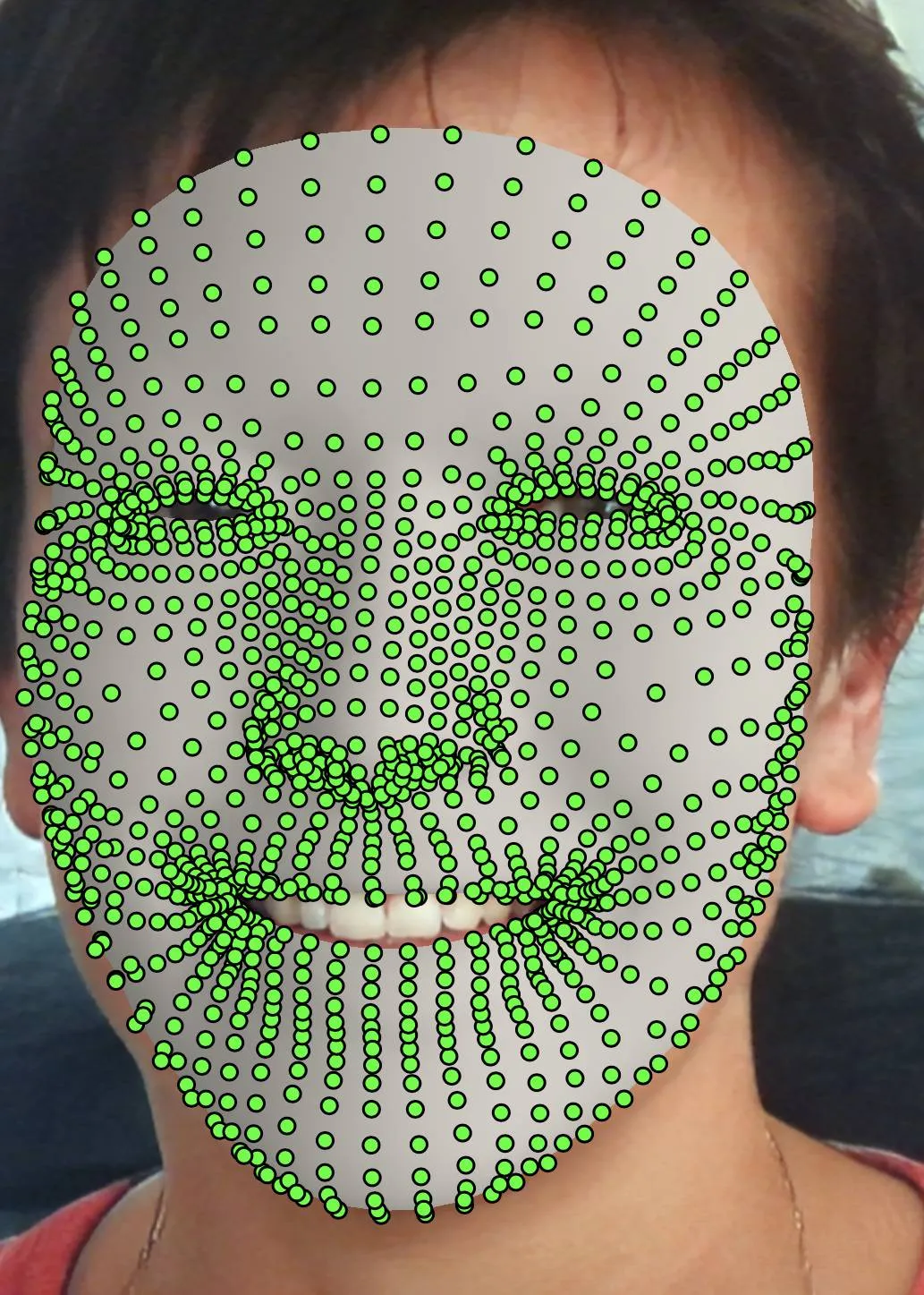

I would like to convert my mesh points to image coordinates and show them together, like this:

Expected result: <mesh_x: mesh_y: mesh_z: img_x: img_y ...>
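One way the expected output could be produced is sketched below. Everything named here is hypothetical and not part of the original code: `ProjectedCaptureData`, its `project` closure, and `captureProjectedData(anchor:frame:)`. The ARKit part assumes that `ARCamera.projectPoint(_:orientation:viewportSize:)` called with `.landscapeRight` and the camera's `imageResolution` yields pixel coordinates in the captured image; that assumption is worth verifying on a device, especially for the front-facing camera.

```swift
#if canImport(ARKit)
import ARKit
#endif

/// Hypothetical variant of CaptureData that stores one 2D point per 3D vertex.
struct ProjectedCaptureData {
    var vertices: [SIMD3<Float>]
    var imagePoints: [SIMD2<Float>]

    /// Builds the 2D points by running `project` on every vertex.
    init(vertices: [SIMD3<Float>], project: (SIMD3<Float>) -> SIMD2<Float>) {
        self.vertices = vertices
        self.imagePoints = vertices.map(project)
    }

    /// Serializes as "<x:y:z:u:v~x:y:z:u:v~...>".
    var formatted: String {
        let described = zip(vertices, imagePoints)
            .map { vertex, point in
                "\(vertex.x):\(vertex.y):\(vertex.z):\(point.x):\(point.y)"
            }
            .joined(separator: "~")
        return "<\(described)>"
    }
}

#if canImport(ARKit)
/// ARKit-backed sketch: face-local vertex -> world space -> captured-image pixels.
/// Assumption: .landscapeRight plus imageResolution maps into the captured image.
func captureProjectedData(anchor: ARFaceAnchor, frame: ARFrame) -> ProjectedCaptureData {
    let camera = frame.camera
    return ProjectedCaptureData(vertices: anchor.geometry.vertices) { vertex in
        // Face mesh vertices are in the anchor's local space; move them to world space.
        let world = anchor.transform * SIMD4<Float>(vertex, 1)
        let pixel = camera.projectPoint(SIMD3<Float>(world.x, world.y, world.z),
                                        orientation: .landscapeRight,
                                        viewportSize: camera.imageResolution)
        return SIMD2<Float>(Float(pixel.x), Float(pixel.y))
    }
}
#endif

// Usage with a stand-in projection, so the output format can be checked without ARKit.
let sample = ProjectedCaptureData(vertices: [SIMD3<Float>(1, 2, 3)]) { v in
    SIMD2<Float>(v.x * 10, v.y * 10)
}
print(sample.formatted) // prints "<1.0:2.0:3.0:10.0:20.0>"
```

Passing the projection in as a closure keeps the formatting logic independent of ARKit, so the same struct could also be fed by `sceneView.projectPoint` if view coordinates are wanted instead of image pixels.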

Any suggestions? Thanks in advance!

let vect = view.projectPoint(SCNVector3(pworld.position.x, pworld.position.y, pworld.position.z)): with this I get the error "Value of type 'float4x4' (aka 'simd_float4x4') has no member 'position'". - swiftlearneer

/// Get the position of the transform matrix. public var position: SCNVector3 { get{ return SCNVector3(self[3][0], self[3][1], self[3][2]) } } } - oliver

The 2D point returned by the projectPoint function is defined in image space. - oliver
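The extension fragment in the comment above arrived without its opening line, so the following is only a guess at what a complete version might look like. It assumes the property was meant to live on `simd_float4x4` and to return the translation column; `viewPoint(of:in:)` is a hypothetical helper added for illustration. The code is wrapped in `#if canImport(SceneKit)` because it only makes sense on Apple platforms.

```swift
#if canImport(SceneKit)
import SceneKit
import simd

extension simd_float4x4 {
    /// Translation component (fourth column) of the transform matrix.
    /// Assumed reconstruction of the `position` property from the comment.
    var position: SCNVector3 {
        SCNVector3(columns.3.x, columns.3.y, columns.3.z)
    }
}

/// Hypothetical helper: projects the position of a world transform through
/// any SCNSceneRenderer (ARSCNView conforms to this protocol).
func viewPoint(of worldTransform: simd_float4x4, in view: SCNSceneRenderer) -> SCNVector3 {
    view.projectPoint(worldTransform.position)
}
#endif
```

With this in place, the failing line would reduce to `let vect = view.projectPoint(pworld.position)`. As far as I understand, the x and y of the result are expressed in the renderer's 2D coordinate system and z carries depth, so a further mapping may be needed if coordinates in the captured camera image are required.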