I'm using Windows 11 x64 with Python 3.11.0.
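When pip looks for a prebuilt wheel, it matches the interpreter's Python version and platform. The check below prints those details for the interpreter you run it with. It is a minimal sketch that assumes CPython. The helper name `interpreter_wheel_tags` is hypothetical, and the tag construction is simplified: pip, through the `packaging` library, also considers ABI tags and older compatible tags.

```python
from __future__ import annotations

import platform
import struct
import sys
import sysconfig


def interpreter_wheel_tags() -> tuple[str, str]:
    """Return a simplified (python_tag, platform_tag) pair for this interpreter.

    Assumes CPython, whose wheels are tagged like 'cp311'. The platform tag is
    derived from sysconfig.get_platform(), e.g. 'win-amd64' -> 'win_amd64'.
    """
    python_tag = f"cp{sys.version_info.major}{sys.version_info.minor}"
    platform_tag = sysconfig.get_platform().replace("-", "_").replace(".", "_")
    return python_tag, platform_tag


if __name__ == "__main__":
    python_tag, platform_tag = interpreter_wheel_tags()
    print("Python version:", platform.python_version())
    # Pointer size in bits: 64 for a 64-bit interpreter, 32 for a 32-bit one
    print("Interpreter bits:", struct.calcsize("P") * 8)
    print("Wheel tags (simplified):", python_tag, platform_tag)
```

On the setup described here, this would be expected to report `cp311` and `win_amd64`, the same tags as the `cp311-cp311-win_amd64` wheels pip picked for PyYAML and regex in the log below.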

I tried to install transformers with `pip install transformers`:
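On Windows, the `pip` found on PATH is not always the one tied to the `python` you just ran. A common precaution is to invoke pip as a module of the target interpreter (`python -m pip ...`). The sketch below builds that command from `sys.executable`. The helper names `pip_command` and `run_pip` are hypothetical, and the example only prints the command rather than running it.

```python
from __future__ import annotations

import subprocess
import sys


def pip_command(*args: str) -> list[str]:
    """Build a pip invocation bound to the interpreter running this script."""
    return [sys.executable, "-m", "pip", *args]


def run_pip(*args: str) -> int:
    """Run pip for this interpreter and return its exit code."""
    completed = subprocess.run(pip_command(*args))
    return completed.returncode


if __name__ == "__main__":
    # Equivalent of `pip install transformers`, pinned to this interpreter.
    # Joined with spaces for display only.
    print(" ".join(pip_command("install", "transformers")))
```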

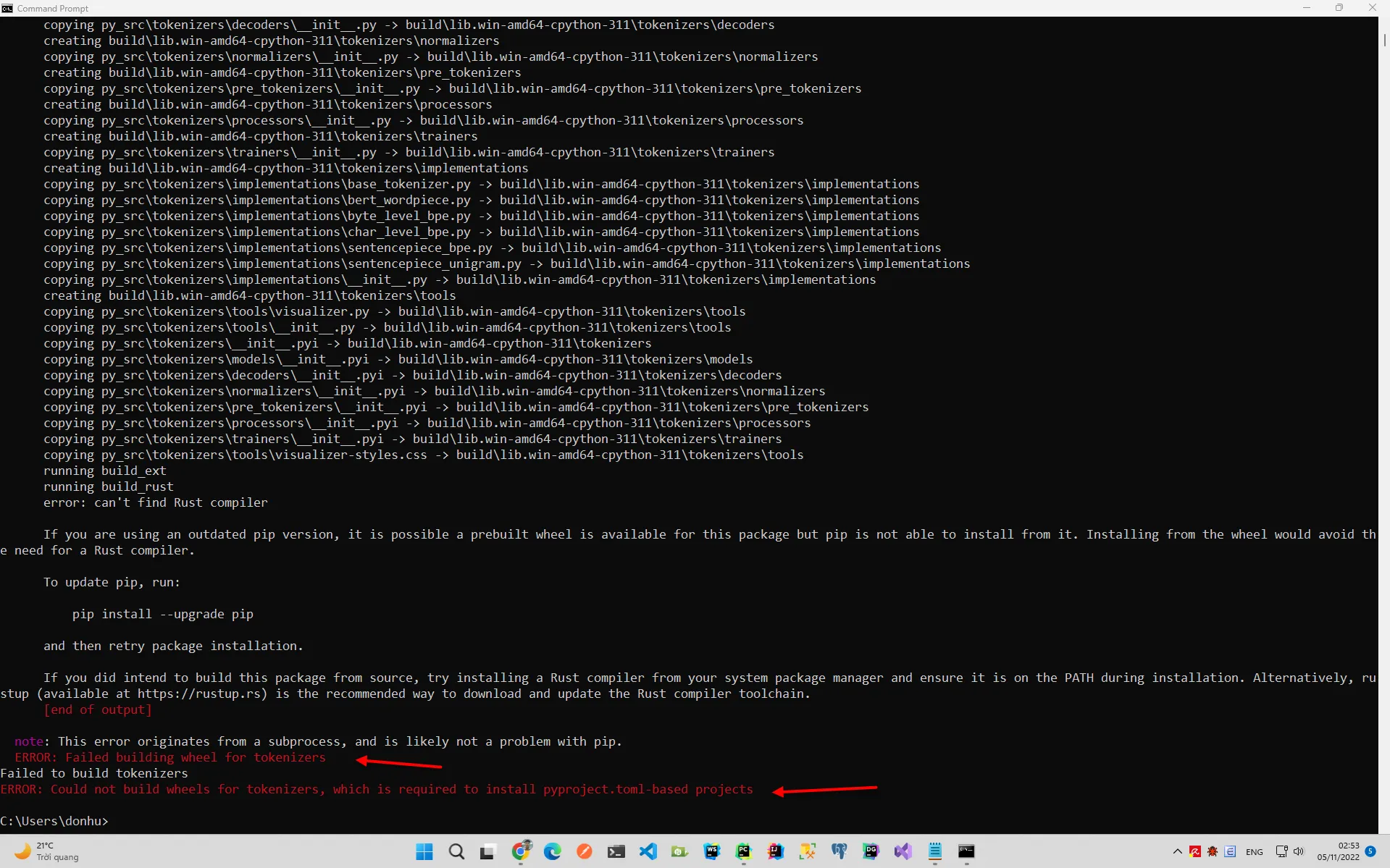

Error:

Microsoft Windows [Version 10.0.22621.674]

(c) Microsoft Corporation. All rights reserved.

C:\Users\donhu>python

Python 3.11.0 (main, Oct 24 2022, 18:26:48) [MSC v.1933 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> exit()

C:\Users\donhu>conda install -c huggingface transformers

'conda' is not recognized as an internal or external command,

operable program or batch file.

C:\Users\donhu>pip install transformers

Collecting transformers

Downloading transformers-4.24.0-py3-none-any.whl (5.5 MB)

---------------------------------------- 5.5/5.5 MB 12.5 MB/s eta 0:00:00

Collecting filelock

Using cached filelock-3.8.0-py3-none-any.whl (10 kB)

Collecting huggingface-hub<1.0,>=0.10.0

Using cached huggingface_hub-0.10.1-py3-none-any.whl (163 kB)

Requirement already satisfied: numpy>=1.17 in c:\users\donhu\appdata\local\programs\python\python311\lib\site-packages (from transformers) (1.23.4)

Requirement already satisfied: packaging>=20.0 in c:\users\donhu\appdata\local\programs\python\python311\lib\site-packages (from transformers) (21.3)

Collecting pyyaml>=5.1

Using cached PyYAML-6.0-cp311-cp311-win_amd64.whl (143 kB)

Collecting regex!=2019.12.17

Using cached regex-2022.10.31-cp311-cp311-win_amd64.whl (267 kB)

Requirement already satisfied: requests in c:\users\donhu\appdata\local\programs\python\python311\lib\site-packages (from transformers) (2.28.1)

Collecting tokenizers!=0.11.3,<0.14,>=0.11.1

Downloading tokenizers-0.13.1.tar.gz (358 kB)

---------------------------------------- 358.7/358.7 kB 21.8 MB/s eta 0:00:00

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing metadata (pyproject.toml) ... done

Collecting tqdm>=4.27

Using cached tqdm-4.64.1-py2.py3-none-any.whl (78 kB)

Collecting typing-extensions>=3.7.4.3

Using cached typing_extensions-4.4.0-py3-none-any.whl (26 kB)

Requirement already satisfied: pyparsing!=3.0.5,>=2.0.2 in c:\users\donhu\appdata\local\programs\python\python311\lib\site-packages (from packaging>=20.0->transformers) (3.0.9)

Requirement already satisfied: colorama in c:\users\donhu\appdata\local\programs\python\python311\lib\site-packages (from tqdm>=4.27->transformers) (0.4.6)

Requirement already satisfied: charset-normalizer<3,>=2 in c:\users\donhu\appdata\local\programs\python\python311\lib\site-packages (from requests->transformers) (2.1.1)

Requirement already satisfied: idna<4,>=2.5 in c:\users\donhu\appdata\local\programs\python\python311\lib\site-packages (from requests->transformers) (3.4)

Requirement already satisfied: urllib3<1.27,>=1.21.1 in c:\users\donhu\appdata\local\programs\python\python311\lib\site-packages (from requests->transformers) (1.26.12)

Requirement already satisfied: certifi>=2017.4.17 in c:\users\donhu\appdata\local\programs\python\python311\lib\site-packages (from requests->transformers) (2022.9.24)

Building wheels for collected packages: tokenizers

Building wheel for tokenizers (pyproject.toml) ... error

error: subprocess-exited-with-error

× Building wheel for tokenizers (pyproject.toml) did not run successfully.

│ exit code: 1

╰─> [51 lines of output]

running bdist_wheel

running build

running build_py

creating build

creating build\lib.win-amd64-cpython-311

creating build\lib.win-amd64-cpython-311\tokenizers

copying py_src\tokenizers\__init__.py -> build\lib.win-amd64-cpython-311\tokenizers

creating build\lib.win-amd64-cpython-311\tokenizers\models

copying py_src\tokenizers\models\__init__.py -> build\lib.win-amd64-cpython-311\tokenizers\models

creating build\lib.win-amd64-cpython-311\tokenizers\decoders

copying py_src\tokenizers\decoders\__init__.py -> build\lib.win-amd64-cpython-311\tokenizers\decoders

creating build\lib.win-amd64-cpython-311\tokenizers\normalizers

copying py_src\tokenizers\normalizers\__init__.py -> build\lib.win-amd64-cpython-311\tokenizers\normalizers

creating build\lib.win-amd64-cpython-311\tokenizers\pre_tokenizers

copying py_src\tokenizers\pre_tokenizers\__init__.py -> build\lib.win-amd64-cpython-311\tokenizers\pre_tokenizers

creating build\lib.win-amd64-cpython-311\tokenizers\processors

copying py_src\tokenizers\processors\__init__.py -> build\lib.win-amd64-cpython-311\tokenizers\processors

creating build\lib.win-amd64-cpython-311\tokenizers\trainers

copying py_src\tokenizers\trainers\__init__.py -> build\lib.win-amd64-cpython-311\tokenizers\trainers

creating build\lib.win-amd64-cpython-311\tokenizers\implementations

copying py_src\tokenizers\implementations\base_tokenizer.py -> build\lib.win-amd64-cpython-311\tokenizers\implementations

copying py_src\tokenizers\implementations\bert_wordpiece.py -> build\lib.win-amd64-cpython-311\tokenizers\implementations

copying py_src\tokenizers\implementations\byte_level_bpe.py -> build\lib.win-amd64-cpython-311\tokenizers\implementations

copying py_src\tokenizers\implementations\char_level_bpe.py -> build\lib.win-amd64-cpython-311\tokenizers\implementations

copying py_src\tokenizers\implementations\sentencepiece_bpe.py -> build\lib.win-amd64-cpython-311\tokenizers\implementations

copying py_src\tokenizers\implementations\sentencepiece_unigram.py -> build\lib.win-amd64-cpython-311\tokenizers\implementations

copying py_src\tokenizers\implementations\__init__.py -> build\lib.win-amd64-cpython-311\tokenizers\implementations

creating build\lib.win-amd64-cpython-311\tokenizers\tools

copying py_src\tokenizers\tools\visualizer.py -> build\lib.win-amd64-cpython-311\tokenizers\tools

copying py_src\tokenizers\tools\__init__.py -> build\lib.win-amd64-cpython-311\tokenizers\tools

copying py_src\tokenizers\__init__.pyi -> build\lib.win-amd64-cpython-311\tokenizers

copying py_src\tokenizers\models\__init__.pyi -> build\lib.win-amd64-cpython-311\tokenizers\models

copying py_src\tokenizers\decoders\__init__.pyi -> build\lib.win-amd64-cpython-311\tokenizers\decoders

copying py_src\tokenizers\normalizers\__init__.pyi -> build\lib.win-amd64-cpython-311\tokenizers\normalizers

copying py_src\tokenizers\pre_tokenizers\__init__.pyi -> build\lib.win-amd64-cpython-311\tokenizers\pre_tokenizers

copying py_src\tokenizers\processors\__init__.pyi -> build\lib.win-amd64-cpython-311\tokenizers\processors

copying py_src\tokenizers\trainers\__init__.pyi -> build\lib.win-amd64-cpython-311\tokenizers\trainers

copying py_src\tokenizers\tools\visualizer-styles.css -> build\lib.win-amd64-cpython-311\tokenizers\tools

running build_ext

running build_rust

error: can't find Rust compiler

If you are using an outdated pip version, it is possible a prebuilt wheel is available for this package but pip is not able to install from it. Installing from the wheel would avoid the need for a Rust compiler.

To update pip, run:

pip install --upgrade pip

and then retry package installation.

If you did intend to build this package from source, try installing a Rust compiler from your system package manager and ensure it is on the PATH during installation. Alternatively, rustup (available at https://rustup.rs) is the recommended way to download and update the Rust compiler toolchain.

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

ERROR: Failed building wheel for tokenizers

Failed to build tokenizers

ERROR: Could not build wheels for tokenizers, which is required to install pyproject.toml-based projects

C:\Users\donhu>
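Two details in this log point at the cause. pip downloaded `tokenizers-0.13.1.tar.gz`, a source archive, instead of a `.whl` file, which indicates it found no prebuilt wheel it considered compatible with this interpreter and therefore tried to compile the package; that build then stopped at `error: can't find Rust compiler`. The sketch below checks both conditions: it classifies distribution file names taken from the log and looks for Rust tools on PATH with `shutil.which`. The helper names are hypothetical, the wheel-name parsing is simplified (it ignores ABI and platform tags, including `abi3` wheels), and checking for `rustc` and `cargo` specifically is an assumption based on the error's reference to a Rust compiler and rustup.

```python
from __future__ import annotations

import shutil
import sys


def artifact_kind(filename: str) -> str:
    """Classify a distribution file name as 'wheel', 'sdist', or 'unknown'."""
    if filename.endswith(".whl"):
        return "wheel"
    if filename.endswith((".tar.gz", ".zip")):
        return "sdist"
    return "unknown"


def wheel_python_tags(filename: str) -> set[str]:
    """Return the python tag(s) of a wheel file name.

    Wheel names end in -{python tag}-{abi tag}-{platform tag}.whl, and a
    compressed tag set such as 'py2.py3' lists several tags separated by '.'.
    """
    stem = filename[: -len(".whl")]
    python_tag = stem.split("-")[-3]
    return set(python_tag.split("."))


def wheel_matches_python(filename: str, version: tuple[int, int]) -> bool:
    """Simplified check: does the wheel's python tag cover this Python version?

    Ignores the ABI and platform tags, so abi3 wheels that also install on
    newer CPython versions are reported as non-matching.
    """
    tags = wheel_python_tags(filename)
    major, minor = version[0], version[1]
    return f"cp{major}{minor}" in tags or f"py{major}" in tags


def find_rust_tools() -> dict[str, str | None]:
    """Map each Rust tool name to its full path on PATH, or None if missing."""
    return {tool: shutil.which(tool) for tool in ("rustc", "cargo")}


if __name__ == "__main__":
    here = (sys.version_info[0], sys.version_info[1])
    for name in (
        "transformers-4.24.0-py3-none-any.whl",
        "regex-2022.10.31-cp311-cp311-win_amd64.whl",
        "tokenizers-0.13.1.tar.gz",
    ):
        kind = artifact_kind(name)
        if kind == "wheel":
            print(name, "-> wheel, python tag matches:", wheel_matches_python(name, here))
        else:
            print(name, "->", kind, "(pip has to build it locally)")
    for tool, path in find_rust_tools().items():
        print(f"{tool}: {path or 'not found on PATH'}")
```

If both Rust tools report as not found, the error message itself lists the options: upgrade pip so it can use a prebuilt wheel if one exists for this Python version, or install a Rust toolchain via rustup (https://rustup.rs) and make sure it is on PATH during installation. Using a Python version for which `tokenizers` publishes Windows wheels would also avoid the source build; which versions those are is worth checking on the package's PyPI page rather than assuming.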