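I need to get the rotation difference between the model and the camera, convert the values to radians/degrees, and pass them to the fragment shader.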

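To do that, I need to decompose the model rotation matrix, and possibly the camera view matrix as well. I can't seem to find a suitable mechanism for doing the decomposition inside a shader.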

The rotation details go to the fragment shader, where they are used to calculate the UV offset.
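To make "rotation difference" concrete, here is a minimal CPU-side sketch that avoids a full Euler decomposition. It assumes the model matrix's upper 3x3 is a pure rotation (no scale), so its inverse is its transpose. The helper name `viewAnglesInModelSpace` and the plain-array matrix layout are hypothetical and not part of three.js; the same arithmetic could be moved into the vertex shader.

```javascript
// Hypothetical helper, not part of three.js.
// rotation: 3x3 row-major pure rotation matrix (assumed to carry no scale).
// modelPos, cameraPos: [x, y, z] world-space positions.
function viewAnglesInModelSpace(rotation, modelPos, cameraPos) {
  // World-space direction from the model toward the camera.
  const d = [
    cameraPos[0] - modelPos[0],
    cameraPos[1] - modelPos[1],
    cameraPos[2] - modelPos[2],
  ];
  const len = Math.hypot(d[0], d[1], d[2]);
  if (len === 0) return { azimuth: 0, elevation: 0 };
  const v = [d[0] / len, d[1] / len, d[2] / len];

  // The inverse of a pure rotation is its transpose: local = R^T * v.
  const local = [0, 1, 2].map(
    (i) => rotation[0][i] * v[0] + rotation[1][i] * v[1] + rotation[2][i] * v[2]
  );

  // Azimuth around the local Y axis (0 when the camera is on local +Z),
  // elevation above the local XZ plane. Both are in radians.
  const azimuth = Math.atan2(local[0], local[2]);
  const elevation = Math.asin(Math.max(-1, Math.min(1, local[1])));
  return { azimuth, elevation };
}

// Usage: an unrotated model at the origin, camera on the +X axis.
const identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]];
const angles = viewAnglesInModelSpace(identity, [0, 0, 0], [10, 0, 0]);
console.log(angles.azimuth, angles.elevation);
```

Working with the view direction in the model's local frame sidesteps the ambiguity of Euler angle orders, since only two angles are needed to pick a sprite.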

original_rotation + viewing_angles is used to compute the sprite offset into the following texture, which is displayed as a billboard.

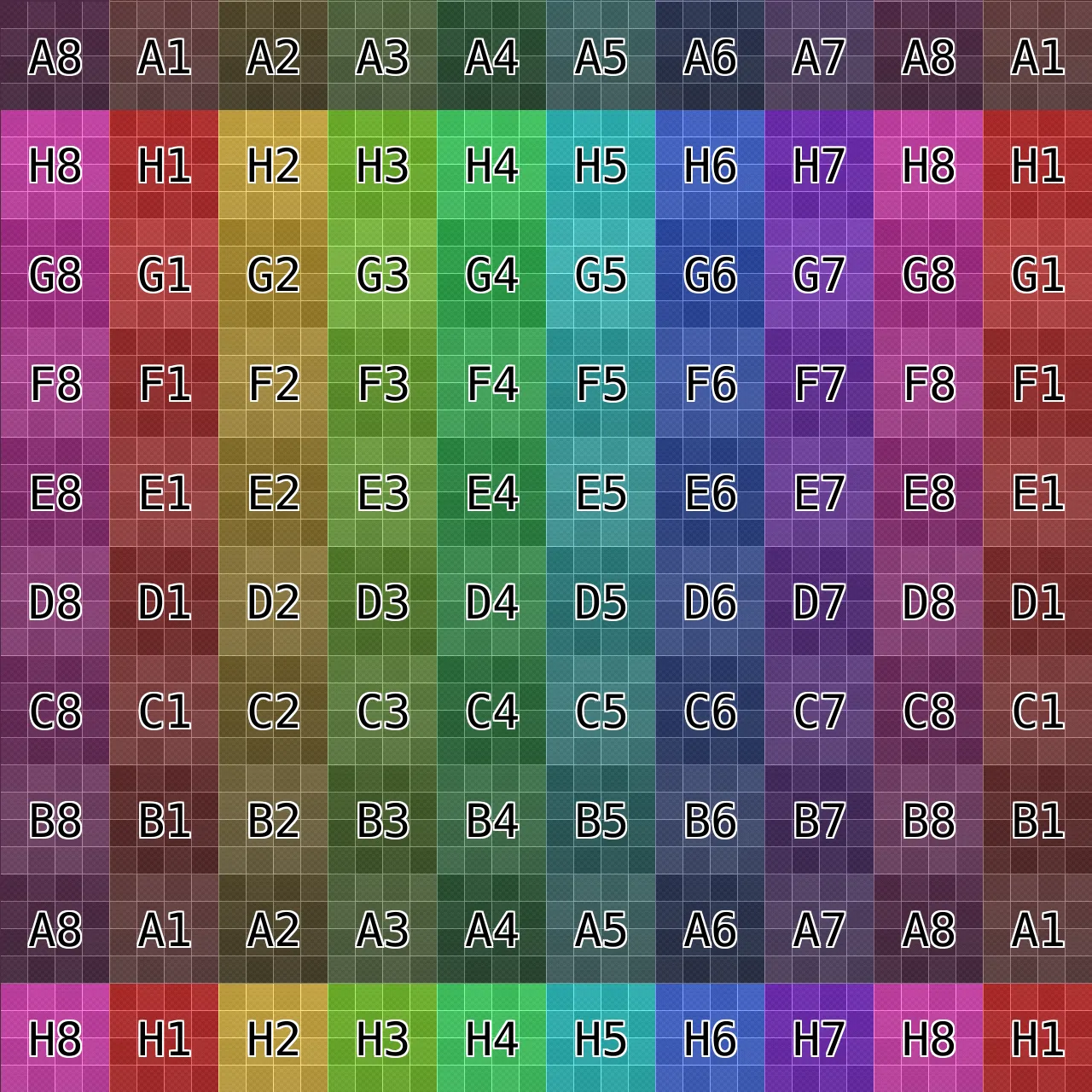

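Ultimately, the UVs should shift down (e.g. from H3 to A3) or up (e.g. from A3 to H3) as the vertical viewing angle changes, and left to right, or the reverse, as the view moves from the side around to the front (e.g. from D1 to D8 and vice versa).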
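The mapping I have in mind from viewing angles to a sprite cell can be sketched as follows. This is only an illustration under assumptions: the helper name `spriteCellOffset` is hypothetical, columns (1 to 8) are assumed to step through the azimuth, rows (A to H) through the elevation, and the angles are in radians. Depending on the texture's vertical orientation (for example `flipY`), the row order may need to be reversed.

```javascript
// Hypothetical helper: pick the sprite-sheet cell for a pair of viewing angles.
// azimuth: any angle in radians; elevation: radians in [-PI/2, PI/2].
// cols, rows: sprite-sheet dimensions (8 x 8 for the texture above).
function spriteCellOffset(azimuth, elevation, cols, rows) {
  const TWO_PI = 2 * Math.PI;

  // Wrap the azimuth into [0, 2*PI) so negative angles index correctly.
  const wrapped = ((azimuth % TWO_PI) + TWO_PI) % TWO_PI;
  const col = Math.min(cols - 1, Math.floor(wrapped / (TWO_PI / cols)));

  // Map the elevation from [-PI/2, PI/2] onto [0, 1], then onto a row.
  const t = (elevation + Math.PI / 2) / Math.PI;
  const row = Math.min(rows - 1, Math.max(0, Math.floor(t * rows)));

  // UV of the cell's corner; the quad's own uv scaled by 1/cols and 1/rows
  // is added on top of this, as the vertex shader does for vUv.
  return { col, row, u: col / cols, v: row / rows };
}

// Usage: a view slightly past the back of the model, level with it.
const cell = spriteCellOffset(Math.PI + 0.1, 0, 8, 8);
console.log(cell.col, cell.row, cell.u, cell.v);
```

Clamping the indices keeps the extreme angles (straight up, straight down, or a full turn) inside the sheet instead of sampling one cell past its edge.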


const vertex_shader = `

precision highp float;

uniform mat4 modelViewMatrix;

uniform mat4 projectionMatrix;

attribute vec3 position;

attribute vec2 uv;

attribute mat4 instanceMatrix;

attribute float index;

attribute float texture_index;

uniform vec2 rows_cols;

uniform vec3 camera_location;

varying float vTexIndex;

varying vec2 vUv;

varying vec4 transformed_normal;

float normal_to_orbit(vec3 rotation_vector, vec3 view_vector){

rotation_vector = normalize(rotation_vector);

view_vector = normalize(view_vector);

vec3 x_direction = vec3(1.0,0,0);

vec3 y_direction = vec3(0,1.0,0);

vec3 z_direction = vec3(0,0,1.0);

float rotation_x_length = dot(rotation_vector, x_direction);

float rotation_y_length = dot(rotation_vector, y_direction);

float rotation_z_length = dot(rotation_vector, z_direction);

float view_x_length = dot(view_vector, x_direction);

float view_y_length = dot(view_vector, y_direction);

float view_z_length = dot(view_vector, z_direction);

//TOP

float top_rotation = degrees(atan(rotation_x_length, rotation_z_length));

float top_view = degrees(atan(view_x_length, view_z_length));

float top_final = top_view-top_rotation;

float top_idx = floor(top_final/(360.0/rows_cols.x));

//FRONT

float front_rotation = degrees(atan(rotation_x_length, rotation_z_length));

float front_view = degrees(atan(view_x_length, view_z_length));

float front_final = front_view-front_rotation;

float front_idx = floor(front_final/(360.0/rows_cols.y));

return abs((front_idx*rows_cols.x)+top_idx);

}

vec3 extractEulerAngleXYZ(mat4 mat) {

vec3 rotangles = vec3(0,0,0);

rotangles.x = atan(mat[2].z, -mat[1].z);

float cosYangle = sqrt(pow(mat[0].x, 2.0) + pow(mat[0].y, 2.0));

rotangles.y = atan(cosYangle, mat[0].z);

float sinXangle = sin(rotangles.x);

float cosXangle = cos(rotangles.x);

rotangles.z = atan(cosXangle * mat[1].y + sinXangle * mat[2].y, cosXangle * mat[1].x + sinXangle * mat[2].x);

return rotangles;

}

float view_index(vec3 position, mat4 mv_matrix, mat4 rot_matrix){

vec4 posInView = mv_matrix * vec4(0.0, 0.0, 0.0, 1.0);

// posInView /= posInView[3];

vec3 VinView = normalize(-posInView.xyz); // (0, 0, 0) - posInView

// vec4 NinView = normalize(rot_matrix * vec4(0.0, 0.0, 1.0, 1.0));

// float NdotV = dot(NinView, VinView);

// w = 0.0 so that only the rotation part of rot_matrix is applied to this direction vector

vec4 view_normal = rot_matrix * vec4(VinView.xyz, 0.0);

float view_x_length = dot(view_normal.xyz, vec3(1.0,0,0));

float view_y_length = dot(view_normal.xyz, vec3(0,1.0,0));

float view_z_length = dot(view_normal.xyz, vec3(0,0,1.0));

// float radians = atan(-view_x_length, -view_z_length);

float radians = atan(view_x_length, view_z_length);

// float angle = radians/PI*180.0 + 180.0;

float angle = degrees(radians);

if (radians < 0.0) { angle += 360.0; }

if (0.0<=angle && angle<=360.0){

return floor(angle/(360.0/rows_cols.x));

}

return 0.0;

}

void main(){

vec4 original_normal = vec4(0.0, 0.0, 1.0, 1.0);

// transformed_normal = modelViewMatrix * instanceMatrix * original_normal;

vec3 rotangles = extractEulerAngleXYZ(modelViewMatrix * instanceMatrix);

// transformed_normal = vec4(rotangles.xyz, 1.0);

transformed_normal = vec4(camera_location.xyz, 1.0);

vec4 v = (modelViewMatrix* instanceMatrix* vec4(0.0, 0.0, 0.0, 1.0)) + vec4(position.x, position.y, 0.0, 0.0) * vec4(1.0, 1.0, 1.0, 1.0);

vec4 model_center = (modelViewMatrix* instanceMatrix* vec4(0.0, 0.0, 0.0, 1.0));

vec4 model_normal = (modelViewMatrix* instanceMatrix* vec4(0.0, 0.0, 1.0, 1.0));

vec4 cam_loc = vec4(camera_location.xyz, 1.0);

vec4 view_vector = normalize((cam_loc-model_center));

//float findex = normal_to_orbit(model_normal.xyz, view_vector.xyz);

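// Assumed definitions: base_matrix is the per-instance model-view matrix

mat4 base_matrix = modelViewMatrix * instanceMatrix;

// combined_rot is assumed to be its inverse rotation (the transpose, valid only without scale),

// which maps a view-space direction back into the instance's local frame

mat4 combined_rot = mat4(

vec4(base_matrix[0].x, base_matrix[1].x, base_matrix[2].x, 0.0),

vec4(base_matrix[0].y, base_matrix[1].y, base_matrix[2].y, 0.0),

vec4(base_matrix[0].z, base_matrix[1].z, base_matrix[2].z, 0.0),

vec4(0.0, 0.0, 0.0, 1.0));

float findex = view_index(position, base_matrix, combined_rot);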

vTexIndex = texture_index;

vUv = vec2(mod(findex,rows_cols.x)/rows_cols.x, floor(findex/rows_cols.x)/rows_cols.y) + (uv / rows_cols);

//vUv = vec2(mod(index,rows_cols.x)/rows_cols.x, floor(index/rows_cols.x)/rows_cols.y) + (uv / rows_cols);

gl_Position = projectionMatrix * v;

// gl_Position = projectionMatrix * modelViewMatrix * instanceMatrix * vec4(position, 1.0);

}

`

const fragment_shader = (texture_count) => {

var fragShader = `

precision highp float;

uniform sampler2D textures[${texture_count}];

varying float vTexIndex;

varying vec2 vUv;

varying vec4 transformed_normal;

void main() {

vec4 finalColor;

`;

for (var i = 0; i < texture_count; i++) {

if (i == 0) {

fragShader += `if (vTexIndex < ${i}.5) {

finalColor = texture2D(textures[${i}], vUv);

}

`

} else {

fragShader += `else if (vTexIndex < ${i}.5) {

finalColor = texture2D(textures[${i}], vUv);

}

`

}

}

//fragShader += `gl_FragColor = finalColor * transformed_normal; }`;

fragShader += `gl_FragColor = finalColor; }`;

// fragShader += `gl_FragColor = startColor * finalColor; }`;

// int index = int(v_TexIndex+0.5); //https://stackoverflow.com/questions/60896915/texture-slot-not-getting-picked-properly-in-shader-issue

//console.log('frag shader: ', fragShader)

return fragShader;

}

function reset_instance_positions() {

const dummy = new THREE.Object3D();

const offset = 500*4

for (var i = 0; i < max_instances; i++) {

dummy.position.set(offset-(Math.floor(i % 8)*500), offset-(Math.floor(i / 8)*500), 0);

dummy.updateMatrix();

mesh.setMatrixAt(i, dummy.matrix);

}

mesh.instanceMatrix.needsUpdate = true;

}

function setup_geometry() {

const geometry = new THREE.InstancedBufferGeometry().copy(new THREE.PlaneBufferGeometry(400, 400));

const index = new Float32Array(max_instances * 1); // index

for (let i = 0; i < max_instances; i++) {

index[i] = (i % max_instances) * 1.0 /* index[i] = 0.0 */

}

geometry.setAttribute("index", new THREE.InstancedBufferAttribute(index, 1));

const texture_index = new Float32Array(max_instances * 1); // texture_index

const max_maps = 1

for (let i = 0; i < max_instances; i++) {

texture_index[i] = (Math.floor(i / max_instances) % max_maps) * 1.0 /* index[i] = 0.0 */

}

geometry.setAttribute("texture_index", new THREE.InstancedBufferAttribute(texture_index, 1));

const textures = [texture]

const grid_xy = new THREE.Vector2(8, 8)

mesh = new THREE.InstancedMesh(geometry,

new THREE.RawShaderMaterial({

uniforms: {

textures: {

type: 'tv',

value: textures

},

rows_cols: {

value: new THREE.Vector2(grid_xy.x * 1.0, grid_xy.y * 1.0)

},

camera_location: {

value: camera.position

}

},

vertexShader: vertex_shader,

fragmentShader: fragment_shader(textures.length),

side: THREE.DoubleSide,

// transparent: true,

}), max_instances);

scene.add(mesh);

reset_instance_positions()

}

var camera, scene, mesh, renderer;

const max_instances = 64

function init() {

camera = new THREE.PerspectiveCamera(60, window.innerWidth / window.innerHeight,1, 10000 );

camera.position.z = 1024;

scene = new THREE.Scene();

scene.background = new THREE.Color(0xffffff);

setup_geometry()

var canvas = document.createElement('canvas');

var context = canvas.getContext('webgl2');

renderer = new THREE.WebGLRenderer({

canvas: canvas,

context: context

});

renderer.setPixelRatio(window.devicePixelRatio);

renderer.setSize(window.innerWidth, window.innerHeight);

document.body.appendChild(renderer.domElement);

window.addEventListener('resize', onWindowResize, false);

var controls = new THREE.OrbitControls(camera, renderer.domElement);

}

function onWindowResize() {

camera.aspect = window.innerWidth / window.innerHeight;

camera.updateProjectionMatrix();

renderer.setSize(window.innerWidth, window.innerHeight);

}

function animate() {

requestAnimationFrame(animate);

renderer.render(scene, camera);

}

var dataurl = "https://istack.dev59.com/accaU.webp"

var texture;

var imageElement = document.createElement('img');

imageElement.onload = function(e) {

texture = new THREE.Texture(this);

texture.needsUpdate = true;

init();

animate();

};

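// Request the image with CORS so WebGL may use it as a texture (assumes the host sends CORS headers)

imageElement.crossOrigin = "anonymous";

imageElement.src = dataurl;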