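I have been using cv::StereoBM for a while and am now trying to switch to cuda::StereoBM (to use the GPU), but I have run into a problem: even with the same settings and the same input images, the two outputs look completely different. I read in this post that the input to the CUDA version needs to be rectified differently than for cv::StereoBM; specifically, the disparity must be in the range [0, 256]. I have spent a long time looking for other examples of how to rectify images for CUDA, without success. The output from cv::StereoBM looks good, so my images are correctly rectified for it. Is there a way to convert one type of rectification to the other?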

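In case anyone is interested, here is the code I use for stereo rectification (note: I undistort each image to remove the "lens effect" before running them through this program):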

```cpp
#include "opencv2/core/core.hpp"
#include "opencv2/calib3d/calib3d.hpp"
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
//#include "opencv2/contrib/contrib.hpp"
#include <stdio.h>

using namespace cv;
using namespace std;

int main(int argc, char* argv[])
{
    int numBoards = 20;
    int board_w = 9;
    int board_h = 14;

    Size board_sz = Size(board_w, board_h);
    int board_n = board_w*board_h;

    vector<vector<Point3f> > object_points;
    vector<vector<Point2f> > imagePoints1, imagePoints2;
    vector<Point2f> corners1, corners2;

    vector<Point3f> obj;
    for (int j=0; j<board_n; j++)
    {
        obj.push_back(Point3f(j/board_w, j%board_w, 0.0f));
    }

    Mat img1, img2, gray1, gray2, image1, image2;

    const char* right_cam_gst = "nvcamerasrc sensor-id=0 ! video/x-raw(memory:NVMM), format=UYVY, width=1280, height=720, framerate=30/1 ! nvvidconv flip-method=2 ! video/x-raw, format=GRAY8, width=1280, height=720 ! appsink";
    const char* Left_cam_gst = "nvcamerasrc sensor-id=1 ! video/x-raw(memory:NVMM), format=UYVY, width=1280, height=720, framerate=30/1 ! nvvidconv flip-method=2 ! video/x-raw, format=GRAY8, width=1280, height=720 ! appsink";

    VideoCapture cap1 = VideoCapture(right_cam_gst);
    VideoCapture cap2 = VideoCapture(Left_cam_gst);

    int success = 0, k = 0;
    bool found1 = false, found2 = false;

    Mat distCoeffs0;
    Mat intrinsic0;
    cv::FileStorage storage0("CamData0.yml", cv::FileStorage::READ);
    storage0["distCoeffs"] >> distCoeffs0;
    storage0["intrinsic"] >> intrinsic0;
    storage0.release();

    Mat distCoeffs1;
    Mat intrinsic1;
    cv::FileStorage storage1("CamData1.yml", cv::FileStorage::READ);
    storage1["distCoeffs"] >> distCoeffs1;
    storage1["intrinsic"] >> intrinsic1;
    storage1.release();

    while (success < numBoards)
    {
        cap1 >> image1;
        cap2 >> image2;
        //resize(img1, img1, Size(320, 280));
        //resize(img2, img2, Size(320, 280));
        undistort(image1, img1, intrinsic0, distCoeffs0);
        undistort(image2, img2, intrinsic1, distCoeffs1);
        // cvtColor(img1, gray1, CV_BGR2GRAY);
        // cvtColor(img2, gray2, CV_BGR2GRAY);

        found1 = findChessboardCorners(img1, board_sz, corners1, CV_CALIB_CB_ADAPTIVE_THRESH | CV_CALIB_CB_FILTER_QUADS);
        found2 = findChessboardCorners(img2, board_sz, corners2, CV_CALIB_CB_ADAPTIVE_THRESH | CV_CALIB_CB_FILTER_QUADS);

        if (found1)
        {
            cornerSubPix(img1, corners1, Size(11, 11), Size(-1, -1), TermCriteria(CV_TERMCRIT_EPS | CV_TERMCRIT_ITER, 30, 0.1));
            drawChessboardCorners(img1, board_sz, corners1, found1);
        }

        if (found2)
        {
            cornerSubPix(img2, corners2, Size(11, 11), Size(-1, -1), TermCriteria(CV_TERMCRIT_EPS | CV_TERMCRIT_ITER, 30, 0.1));
            drawChessboardCorners(img2, board_sz, corners2, found2);
        }

        imshow("image1", img1);
        imshow("image2", img2);

        k = waitKey(10);
        // if (found1 && found2)
        // {
        //     k = waitKey(0);
        // }
        if (k == 27)
        {
            break;
        }
        if (k == ' ' && found1 !=0 && found2 != 0)
        {
            imagePoints1.push_back(corners1);
            imagePoints2.push_back(corners2);
            object_points.push_back(obj);
            printf ("Corners stored\n");
            success++;

            if (success >= numBoards)
            {
                break;
            }
        }
    }

    destroyAllWindows();
    printf("Starting Calibration\n");
    Mat CM1 = Mat(3, 3, CV_64FC1);
    Mat CM2 = Mat(3, 3, CV_64FC1);
    Mat D1, D2;
    Mat R, T, E, F;

    stereoCalibrate(object_points, imagePoints1, imagePoints2,
                    CM1, D1, CM2, D2, img1.size(), R, T, E, F,
                    CV_CALIB_SAME_FOCAL_LENGTH | CV_CALIB_ZERO_TANGENT_DIST,
                    cvTermCriteria(CV_TERMCRIT_ITER+CV_TERMCRIT_EPS, 100, 1e-5));

    FileStorage fs1("mystereocalib.yml", FileStorage::WRITE);
    fs1 << "CM1" << CM1;
    fs1 << "CM2" << CM2;
    fs1 << "D1" << D1;
    fs1 << "D2" << D2;
    fs1 << "R" << R;
    fs1 << "T" << T;
    fs1 << "E" << E;
    fs1 << "F" << F;

    printf("Done Calibration\n");

    printf("Starting Rectification\n");

    Mat R1, R2, P1, P2, Q;
    stereoRectify(CM1, D1, CM2, D2, img1.size(), R, T, R1, R2, P1, P2, Q);
    fs1 << "R1" << R1;
    fs1 << "R2" << R2;
    fs1 << "P1" << P1;
    fs1 << "P2" << P2;
    fs1 << "Q" << Q;
    fs1.release();

    printf("Done Rectification\n");

    printf("Applying Undistort\n");

    Mat map1x, map1y, map2x, map2y;
    Mat imgU1, imgU2, disp, disp8 , o1, o2;

    initUndistortRectifyMap(CM1, Mat(), R1, P1, img1.size(), CV_32FC1, map1x, map1y);
    initUndistortRectifyMap(CM2, Mat(), R2, P2, img2.size(), CV_32FC1, map2x, map2y);

    printf("Undistort complete\n");

    while(1)
    {
        cap1 >> image1;
        cap2 >> image2;

        undistort(image1, img1, intrinsic0, distCoeffs0);
        undistort(image2, img2, intrinsic1, distCoeffs1);

        remap(img1, imgU1, map1x, map1y, INTER_LINEAR, BORDER_CONSTANT, Scalar());
        remap(img2, imgU2, map2x, map2y, INTER_LINEAR, BORDER_CONSTANT, Scalar());

        imshow("image1", imgU1);
        imshow("image2", imgU2);

        k = waitKey(5);

        if(k==27)
        {
            break;
        }
    }

    cap1.release();
    cap2.release();

    return(0);
}
```
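One thing that may matter when putting the two results side by side is the scale of the raw values. cv::StereoBM is documented to return a 16-bit fixed-point disparity map with 4 fractional bits, whereas, as far as I can tell, the CUDA matcher writes an 8-bit map of whole-pixel disparities. The sketch below is plain C++ with no OpenCV calls, and its helper names are hypothetical; under that assumption it shows how the 16-bit values could be brought onto the same 0..255 pixel scale before comparing.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// cv::StereoBM stores disparity as fixed point with 4 fractional bits,
// so the disparity in pixels is raw / 16.
constexpr int kFractionalScale = 16;

// Convert one raw 16-bit fixed-point disparity to whole pixels, clamped to
// 0..255. Assumption: the 8-bit map being compared against holds whole-pixel
// disparities on this same scale. Negative (invalid) values map to 0.
std::uint8_t fixedPointToPixels8U(std::int16_t raw) {
    const int pixels = raw / kFractionalScale;
    return static_cast<std::uint8_t>(std::min(255, std::max(0, pixels)));
}

// Apply the conversion to a whole buffer of raw disparities.
std::vector<std::uint8_t> toPixels8U(const std::vector<std::int16_t>& raw) {
    std::vector<std::uint8_t> out;
    out.reserve(raw.size());
    for (std::int16_t value : raw) {
        out.push_back(fixedPointToPixels8U(value));
    }
    return out;
}
```

With this conversion a raw value of 800 becomes 50 px, and anything above 255 px saturates at 255.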

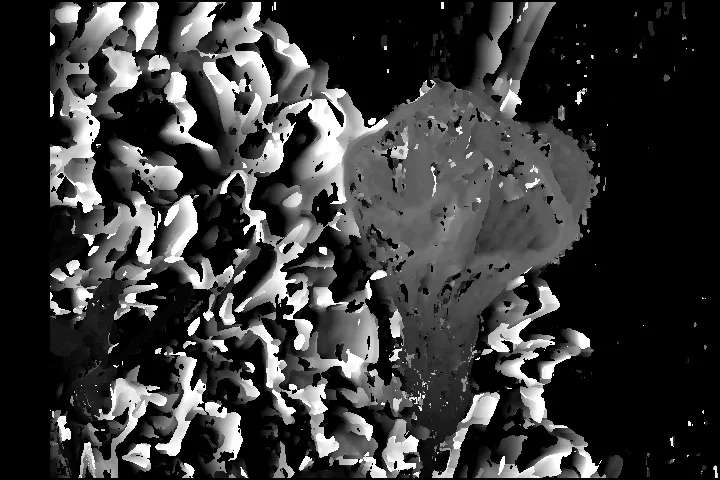

Here are the output images from the different methods: