I am trying to project an `ARAnchor` into 2D space, but I am running into an orientation issue.

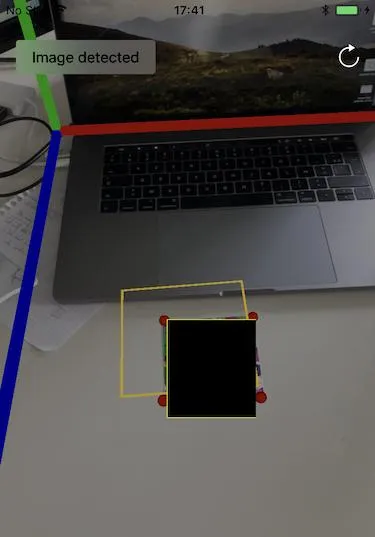

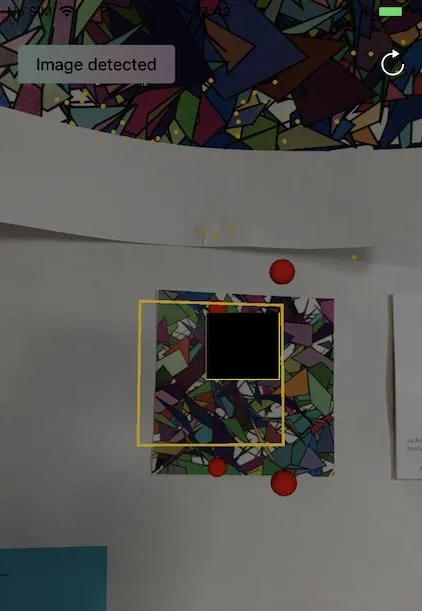

Here is my function for projecting the top-left, top-right, bottom-left, and bottom-right corner positions into 2D space:

```swift
/// Returns the projection of an `ARImageAnchor` from the 3D world space
/// detected by ARKit into the 2D space of a view rendering the scene.
///
/// - Parameter anchor: The anchor to project.
/// - Returns: An optional `CGRect` corresponding to the `ARImageAnchor` projection.
internal func projection(from anchor: ARImageAnchor,
                         alignment: ARPlaneAnchor.Alignment,
                         debug: Bool = false) -> CGRect? {
    guard let camera = session.currentFrame?.camera else {
        return nil
    }

    let refImg = anchor.referenceImage
    // Only the translation column of the anchor transform is used here.
    let anchor3DPoint = anchor.transform.columns.3
    let size = view.bounds.size
    // Half of the reference image's physical size, in meters.
    let width = Float(refImg.physicalSize.width / 2)
    let height = Float(refImg.physicalSize.height / 2)

    // Corner points in 3D: top left, top right, bottom left, bottom right.
    let projection = ProjectionHelper.projection(from: anchor3DPoint,
                                                 width: width,
                                                 height: height,
                                                 focusAlignment: alignment)

    let topLeft = projection.0
    let topLeftProjected = camera.projectPoint(topLeft,
                                               orientation: .portrait,
                                               viewportSize: size)

    let topRight: simd_float3 = projection.1
    let topRightProjected = camera.projectPoint(topRight,
                                                orientation: .portrait,
                                                viewportSize: size)

    let bottomLeft = projection.2
    let bottomLeftProjected = camera.projectPoint(bottomLeft,
                                                  orientation: .portrait,
                                                  viewportSize: size)

    let bottomRight = projection.3
    let bottomRightProjected = camera.projectPoint(bottomRight,
                                                   orientation: .portrait,
                                                   viewportSize: size)

    // Axis-aligned rectangle built from the top-left point and two edge lengths.
    let result = CGRect(origin: topLeftProjected,
                        size: CGSize(width: topRightProjected.distance(point: topLeftProjected),
                                     height: bottomRightProjected.distance(point: bottomLeftProjected)))
    return result
}
```

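This function works very well when I am facing the world origin. However, if I move to the left or right, the corner points are computed incorrectly.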
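
One possible explanation, stated as an assumption because the body of `ProjectionHelper.projection` is not shown: if the corners are derived from the anchor's position (`columns.3`) alone, the anchor's rotation is ignored, which would only look right from a viewpoint where that rotation happens not to matter. The sketch below shows the alternative of running each local corner through the full 4x4 transform. `Columns`, `worldPoint`, and `worldCorners` are hypothetical names, not ARKit API, and the layout (image in the anchor's local XZ plane, with -Z as "top") is an assumption that should be checked against the `ARImageAnchor` documentation.

```swift
// Hypothetical helpers, not part of ARKit or of the ProjectionHelper above.
// A 4x4 transform is represented by its four columns, in the same order as
// `simd_float4x4.columns`, so that the sketch needs no imports.
typealias Columns = (SIMD4<Float>, SIMD4<Float>, SIMD4<Float>, SIMD4<Float>)

/// Transforms a point from the anchor's local space into world space.
func worldPoint(_ local: SIMD3<Float>, transform c: Columns) -> SIMD3<Float> {
    // Column-major matrix times the homogeneous point (x, y, z, 1).
    let p = c.0 * local.x + c.1 * local.y + c.2 * local.z + c.3
    return SIMD3<Float>(p.x, p.y, p.z)
}

/// Returns the world-space corners (top left, top right, bottom left,
/// bottom right) of an image with the given half extents.
/// Assumption: the image lies in the local XZ plane and -Z is "top".
func worldCorners(transform: Columns,
                  halfWidth: Float,
                  halfHeight: Float) -> [SIMD3<Float>] {
    let local: [SIMD3<Float>] = [
        SIMD3<Float>(-halfWidth, 0, -halfHeight), // top left
        SIMD3<Float>( halfWidth, 0, -halfHeight), // top right
        SIMD3<Float>(-halfWidth, 0,  halfHeight), // bottom left
        SIMD3<Float>( halfWidth, 0,  halfHeight)  // bottom right
    ]
    return local.map { worldPoint($0, transform: transform) }
}
```

With a real anchor, the columns would come from `anchor.transform.columns`, and each returned point could be passed to `camera.projectPoint(_:orientation:viewportSize:)` as in the function above. Note also that, seen from the side, the four projected points generally form a non-rectangular quadrilateral, so a `CGRect` built from the top-left point and two edge lengths cannot represent them exactly; keeping the four `CGPoint` values may be the more faithful output.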