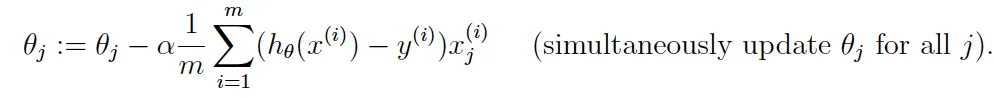

我正在尝试在Andrew Ng的机器学习课程中实现梯度下降算法。读入数据后,我尝试实现以下操作,将我的theta值列表更新1000次,期望能够收敛。

所涉及的算法是gradientDescent。我知道通常这个问题的原因是alpha可能过大,但是当我改变alpha的倍数时,例如改变n倍,我的结果也会改变n倍。当我改变iterations的倍数时也同样发生。我想说这可能与Haskell的惰性有关,但我完全不确定。任何帮助都将不胜感激。

module LR1V where

import qualified Data.Matrix as M

import System.IO

import Data.List.Split

import qualified Data.Vector as V

main :: IO ()

main = do

contents <- getContents

let lns = lines contents :: [String]

entries = map (splitOn ",") lns :: [[String]]

mbPoints = mapM readPoints entries :: Maybe [[Double]]

case mbPoints of

Just points -> runData points

_ -> putStrLn "Error: it is possible the file is incorrectly formatted"

readPoints :: [String] -> Maybe [Double]

readPoints dat@(x:y:_) = return $ map read dat

readPoints _ = Nothing

runData :: [[Double]] -> IO ()

runData pts = do

let (mxs,ys) = runPoints pts

c = M.ncols mxs

m = M.nrows mxs

thetas = M.zero 1 (M.ncols mxs)

alpha = 0.01

iterations = 1000

results = gradientDescent mxs ys thetas alpha m c iterations

print results

runPoints :: [[Double]] -> (M.Matrix Double, [Double])

runPoints pts = (xs, ys) where

xs = M.fromLists $ addX0 $ map init pts

ys = map last pts

-- X0 will always be 1

addX0 :: [[Double]] -> [[Double]]

addX0 = map (1.0 :)

-- theta is 1xn and x is nx1, where n is the amount of features

-- so it is safe to assume a scalar results from the multiplication

hypothesis :: M.Matrix Double -> M.Matrix Double -> Double

hypothesis thetas x =

M.getElem 1 1 (M.multStd thetas x)

gradientDescent :: M.Matrix Double

-> [Double]

-> M.Matrix Double

-> Double

-> Int

-> Int

-> Int

-> [Double]

gradientDescent mxs ys thetas alpha m n it =

let x i = M.colVector $ M.getRow i mxs

y i = ys !! (i-1)

h i = hypothesis thetas (x i)

thL = zip [1..] $ M.toList thetas :: [(Int, Double)]

z i j = ((h i) - (y i))*(M.getElem i j $ mxs)

sumSquares j = sum [z i j | i <- [1..m]]

thetaJ t j = t - ((alpha * (1/ (fromIntegral m))) * (sumSquares j))

result = map snd $ foldl (\ts _ -> [(j,thetaJ t j) | (j,t) <- ts]) thL [1..it] in

result

和数据相关的内容...

6.1101,17.592

5.5277,9.1302

8.5186,13.662

7.0032,11.854

5.8598,6.8233

8.3829,11.886

7.4764,4.3483

8.5781,12

6.4862,6.5987

5.0546,3.8166

5.7107,3.2522

14.164,15.505

5.734,3.1551

8.4084,7.2258

5.6407,0.71618

5.3794,3.5129

6.3654,5.3048

5.1301,0.56077

6.4296,3.6518

7.0708,5.3893

6.1891,3.1386

20.27,21.767

5.4901,4.263

6.3261,5.1875

5.5649,3.0825

18.945,22.638

12.828,13.501

10.957,7.0467

13.176,14.692

22.203,24.147

5.2524,-1.22

6.5894,5.9966

9.2482,12.134

5.8918,1.8495

8.2111,6.5426

7.9334,4.5623

8.0959,4.1164

5.6063,3.3928

12.836,10.117

6.3534,5.4974

5.4069,0.55657

6.8825,3.9115

11.708,5.3854

5.7737,2.4406

7.8247,6.7318

7.0931,1.0463

5.0702,5.1337

5.8014,1.844

11.7,8.0043

5.5416,1.0179

7.5402,6.7504

5.3077,1.8396

7.4239,4.2885

7.6031,4.9981

6.3328,1.4233

6.3589,-1.4211

6.2742,2.4756

5.6397,4.6042

9.3102,3.9624

9.4536,5.4141

8.8254,5.1694

5.1793,-0.74279

21.279,17.929

14.908,12.054

18.959,17.054

7.2182,4.8852

8.2951,5.7442

10.236,7.7754

5.4994,1.0173

20.341,20.992

10.136,6.6799

7.3345,4.0259

6.0062,1.2784

7.2259,3.3411

5.0269,-2.6807

6.5479,0.29678

7.5386,3.8845

5.0365,5.7014

10.274,6.7526

5.1077,2.0576

5.7292,0.47953

5.1884,0.20421

6.3557,0.67861

9.7687,7.5435

6.5159,5.3436

8.5172,4.2415

9.1802,6.7981

6.002,0.92695

5.5204,0.152

5.0594,2.8214

5.7077,1.8451

7.6366,4.2959

5.8707,7.2029

5.3054,1.9869

8.2934,0.14454

13.394,9.0551

5.4369,0.61705

当

alpha为0.01时,我的theta值计算结果为[58.39135051546406,653.2884974555699]。当alpha为0.001时,我的值变为[5.839135051546473,65.32884974555617]。当iterations更改为10,000时,我的值返回到之前的状态。