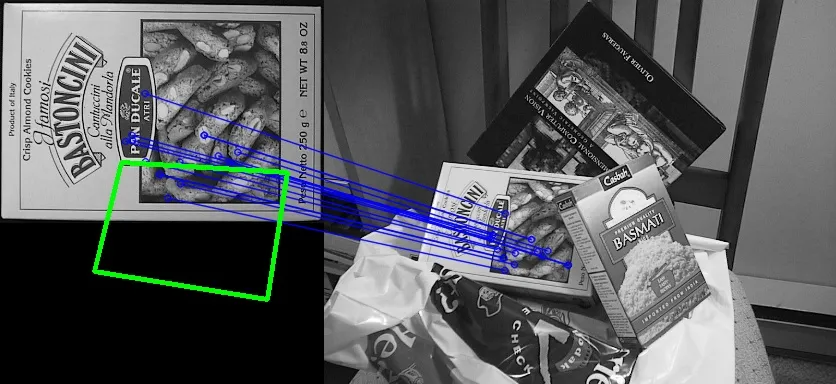

我一直在遇到一个问题,就是检测到的物体轮廓不在正确的位置,好像坐标错了。我的Hessian设置为2000,并且我过滤了距离最小值的3倍以下的匹配项。感谢任何帮助。

运行匹配和单应性的结果:

下面是代码示例:

public static void findMatches()

{

System.loadLibrary(Core.NATIVE_LIBRARY_NAME);

//Load Image 1

Mat img_object = Highgui.imread("./resources/Database/box.png");

//Load Image 2

Mat img_scene = Highgui.imread("./resources/Database/box_in_scene.png");

//Check if either image is null if so exit application

if (img_object == null || img_scene == null)

{

System.exit(0);

}

//Convert Image 1 to greyscale

Mat grayImageobject = new Mat(img_object.rows(), img_object.cols(), img_object.type());

Imgproc.cvtColor(img_object, grayImageobject, Imgproc.COLOR_BGRA2GRAY);

Core.normalize(grayImageobject, grayImageobject, 0, 255, Core.NORM_MINMAX);

//Convert image 2 to greyscale

Mat grayImageScene = new Mat(img_scene.rows(), img_scene.cols(), img_scene.type());

Imgproc.cvtColor(img_scene, grayImageScene, Imgproc.COLOR_BGRA2GRAY);

Core.normalize(grayImageScene, grayImageScene, 0, 255, Core.NORM_MINMAX);

//Create a SURF feature detector

FeatureDetector detector = FeatureDetector.create(4); //4 = SURF

//Cannot input hessian value as normal so we have to write the desired value into a

//file and then read value from file into detector.read

try (Writer writer = new BufferedWriter(new OutputStreamWriter(new FileOutputStream("hessian.txt"), "utf-8"))) {

writer.write("%YAML:1.0\nhessianThreshold: 2000.\noctaves:3\noctaveLayers: 4\nupright: 0\n");

} catch (IOException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

detector.read("hessian.txt");

//Mat of keypoints for object and scene

MatOfKeyPoint keypoints_object = new MatOfKeyPoint();

MatOfKeyPoint keypoints_scene = new MatOfKeyPoint();

//Detect keypoints in scene and object storing them in mat of keypoints

detector.detect(img_object, keypoints_object);

detector.detect(img_scene, keypoints_scene);

DescriptorExtractor extractor = DescriptorExtractor.create(2); //2 = SURF;

Mat descriptor_object = new Mat();

Mat descriptor_scene = new Mat() ;

extractor.compute(img_object, keypoints_object, descriptor_object);

extractor.compute(img_scene, keypoints_scene, descriptor_scene);

DescriptorMatcher matcher = DescriptorMatcher.create(1); // 1 = FLANNBASED

MatOfDMatch matches = new MatOfDMatch();

matcher.match(descriptor_object, descriptor_scene, matches);

List<DMatch> matchesList = matches.toList();

Double max_dist = 0.0;

Double min_dist = 100.0;

for(int i = 0; i < descriptor_object.rows(); i++){

Double dist = (double) matchesList.get(i).distance;

if(dist < min_dist) min_dist = dist;

if(dist > max_dist) max_dist = dist;

}

System.out.println("-- Max dist : " + max_dist);

System.out.println("-- Min dist : " + min_dist);

LinkedList<DMatch> good_matches = new LinkedList<DMatch>();

MatOfDMatch gm = new MatOfDMatch();

for(int i = 0; i < descriptor_object.rows(); i++){

if(matchesList.get(i).distance < 3*min_dist){

good_matches.addLast(matchesList.get(i));

}

}

gm.fromList(good_matches);

Mat img_matches = new Mat();

Features2d.drawMatches(img_object,keypoints_object,img_scene,keypoints_scene, gm, img_matches, new Scalar(255,0,0), new Scalar(0,0,255), new MatOfByte(), 2);

if(good_matches.size() >= 10){

LinkedList<Point> objList = new LinkedList<Point>();

LinkedList<Point> sceneList = new LinkedList<Point>();

List<KeyPoint> keypoints_objectList = keypoints_object.toList();

List<KeyPoint> keypoints_sceneList = keypoints_scene.toList();

for(int i = 0; i<good_matches.size(); i++){

objList.addLast(keypoints_objectList.get(good_matches.get(i).queryIdx).pt);

sceneList.addLast(keypoints_sceneList.get(good_matches.get(i).trainIdx).pt);

}

MatOfPoint2f obj = new MatOfPoint2f();

obj.fromList(objList);

MatOfPoint2f scene = new MatOfPoint2f();

scene.fromList(sceneList);

Mat homography = Calib3d.findHomography(obj, scene);

Mat obj_corners = new Mat(4,1,CvType.CV_32FC2);

Mat scene_corners = new Mat(4,1,CvType.CV_32FC2);

obj_corners.put(0, 0, new double[] {0,0});

obj_corners.put(1, 0, new double[] {img_object.cols(),0});

obj_corners.put(2, 0, new double[] {img_object.cols(),img_object.rows()});

obj_corners.put(3, 0, new double[] {0,img_object.rows()});

//Compute the most probable perspective transformation

//out of several pairs of corresponding points.

//Imgproc.getPerspectiveTransform(obj_corners, scene_corners);

Core.perspectiveTransform(obj_corners,scene_corners, homography);

Core.line(img_matches, new Point(scene_corners.get(0,0)), new Point(scene_corners.get(1,0)), new Scalar(0, 255, 0),4);

Core.line(img_matches, new Point(scene_corners.get(1,0)), new Point(scene_corners.get(2,0)), new Scalar(0, 255, 0),4);

Core.line(img_matches, new Point(scene_corners.get(2,0)), new Point(scene_corners.get(3,0)), new Scalar(0, 255, 0),4);

Core.line(img_matches, new Point(scene_corners.get(3,0)), new Point(scene_corners.get(0,0)), new Scalar(0, 255, 0),4);

Highgui.imwrite("./resources/ImageMatching" + ".jpg", img_matches);

createWindow("Image Matching", "resources/ImageMatching.jpg");

}

else

{

System.out.println("Not enough Matches");

System.exit(0);

}

}

Core.line(img_matches, new Point(scene_corners.get(0,0)[0] + img_object.cols(), scene_corners.get(0,0)[0]), new Point(scene_corners.get(1,0)[1] + img_object.cols(), scene_corners.get(1,0)[1]), new Scalar(0, 255, 0),4);- JuppalCore.line(img_matches, new Point(scene_corners.get(3,0)[0] + img_object.cols(), scene_corners.get(3,0)[1]), new Point(scene_corners.get(4,0)[0] + img_object.cols(), scene_corners.get(4,0)[1]), new Scalar(0, 255, 0),4);- Juppal