你可以使用以下函数,它还会将元素排序:

def correlation(dataset, threshold = 0.3):

c = dataset.corr().abs()

s = c.unstack()

so = s.sort_values(kind="quicksort")

results = []

for index, row in so.items():

if index[0] != index[1] and row > threshold:

results.append({index: row})

return results

您可以按照以下方式调用函数,发送您想要查找相关性和阈值的Pandas数据集:

highly_correlated_features = correlation(dataset=data_train_val_without_label, threshold=0.35)

highly_correlated_features

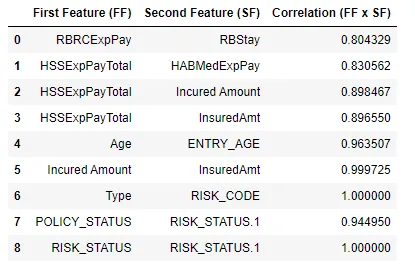

对于具有以下列和默认阈值的数据集,它将产生类似于这样的结果:

输入列:

0 HighBP 202944 non-null float64

1 HighChol 202944 non-null float64

2 CholCheck 202944 non-null float64

3 BMI 202944 non-null float64

4 Smoker 202944 non-null float64

5 Stroke 202944 non-null float64

6 HeartDiseaseorAttack 202944 non-null float64

7 PhysActivity 202944 non-null float64

8 Fruits 202944 non-null float64

9 Veggies 202944 non-null float64

10 HvyAlcoholConsump 202944 non-null float64

11 AnyHealthcare 202944 non-null float64

12 NoDocbcCost 202944 non-null float64

13 GenHlth 202944 non-null float64

14 MentHlth 202944 non-null float64

15 PhysHlth 202944 non-null float64

16 DiffWalk 202944 non-null float64

17 Sex 202944 non-null float64

18 Age 202944 non-null float64

19 Education 202944 non-null float64

20 Income 202944 non-null float64

输出:

[{('Income', 'Education'): 0.38083797089605675},

{('Education', 'Income'): 0.38083797089605675},

{('DiffWalk', 'PhysHlth'): 0.38145172573435343},

{('PhysHlth', 'DiffWalk'): 0.38145172573435343},

{('DiffWalk', 'GenHlth'): 0.385707943062701},

{('GenHlth', 'DiffWalk'): 0.385707943062701},

{('PhysHlth', 'GenHlth'): 0.3907082729122655},

{('GenHlth', 'PhysHlth'): 0.3907082729122655}]

DropCorrelatedFeatures()转换器,可以为您完成繁重的工作,并且与sklearn兼容。features_to_drop_属性显示它将删除哪些内容。 - kevin_theinfinityfundfindCorrelation函数。它识别相关的列并返回除一个之外的所有标签。这里的现有答案会删除所有相关的列,这意味着会删除太多的列。 - undefined