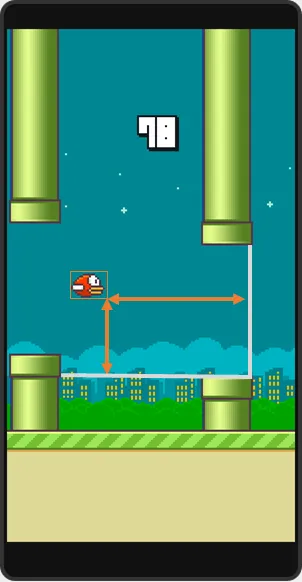

I'm trying to apply Q-learning to a simple game I wrote. The game is based on the player having to "jump" to avoid oncoming boxes.

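I designed the system with two actions, "jump" and "do nothing", and the state is the distance to the next block (divided and floored so that there isn't a huge number of states).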
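To make the state definition concrete, here is a minimal sketch of the bucketing described above. It assumes the `resolution` value of 0.1 listed in the properties further down; `toState` is a hypothetical helper name used only for illustration and mirrors the last line of `getBlockDistance`.

```javascript
// Hypothetical helper: maps a raw distance to a discrete state index.
// With resolution = 0.1, every 10 units of distance share one state.
function toState(distance, resolution) {
    return Math.max(0, Math.floor(distance * resolution));
}

var resolution = 0.1; // assumed, taken from the properties in the question

console.log(toState(0, resolution));   // 0
console.log(toState(9, resolution));   // 0
console.log(toState(10, resolution));  // 1
console.log(toState(257, resolution)); // 25
console.log(toState(-1, resolution));  // 0 (negative input is clamped)
```

One thing the sketch makes visible: `getBlockDistance` starts from `closest = -1`, so when no block is ahead the state is clamped to 0, the same bucket as a block that is right at the player.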

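My problem seems to be that my implementation of the algorithm doesn't take "future reward" into account, so it ends up jumping at the wrong time.

Here is my implementation of the Q-learning algorithm: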

    JumpGameAIClass.prototype.getQ = function getQ(state) {
        if (!this.Q.hasOwnProperty(state)) {
            this.Q[state] = {};

            for (var actionIndex = 0; actionIndex < this.actions.length; actionIndex++) {
                var action = this.actions[actionIndex];

                this.Q[state][action] = 0;
            }
        }

        return this.Q[state];
    };

    JumpGameAIClass.prototype.getBlockDistance = function getBlockDistance() {
        var closest = -1;

        for (var blockIndex = 0; blockIndex < this.blocks.length; blockIndex++) {
            var block = this.blocks[blockIndex];

            var distance = block.x - this.playerX;

            if (distance >= 0 && (closest === -1 || distance < closest)) {
                closest = distance;
            }
        }

        return Math.max(0, Math.floor(closest * this.resolution));
    };

    JumpGameAIClass.prototype.getActionWithHighestQ = function getActionWithHighestQ(distance) {
        var jumpReward = this.getQ(distance)[this.actions[0]];
        var doNothingReward = this.getQ(distance)[this.actions[1]];

        if (jumpReward > doNothingReward) {
            return this.actions[0];
        } else if (doNothingReward > jumpReward) {
            return this.actions[1];
        } else {
            if (!this.canJump()) {
                return this.actions[1];
            }

            return this.actions[Math.floor(Math.random() * this.actions.length)];
        }
    };

    JumpGameAIClass.prototype.getActionEpsilonGreedy = function getActionEpsilonGreedy() {
        // We can't jump while in mid-air
        if (!this.canJump()) {
            return this.actions[1];
        }

        if (Math.random() < this.epsilon) {
            return this.actions[Math.floor(Math.random() * this.actions.length)];
        } else {
            return this.getActionWithHighestQ(this.getBlockDistance());
        }
    };

    JumpGameAIClass.prototype.think = function think() {
        var reward = this.liveReward;

        if (this.score !== this.lastScore) {
            this.lastScore = this.score;
            reward = this.scoreReward;
        } else if (!this.playerAlive) {
            reward = this.deathReward;
        }

        this.drawDistance();

        var distance = this.getBlockDistance(),
            maxQ = this.getQ(distance)[this.getActionWithHighestQ(distance)],
            previousQ = this.getQ(this.lastDistance)[this.lastAction];

        this.getQ(this.lastDistance)[this.lastAction] = previousQ + this.alpha * (reward + (this.gamma * maxQ) - previousQ);

        this.lastAction = this.getActionEpsilonGreedy();
        this.lastDistance = distance;

        switch (this.lastAction) {
            case this.actions[0]:
                this.jump();
                break;
        }
    };

Here are some of the properties it uses:

    epsilon: 0.05,
    alpha: 1,
    gamma: 1,
    resolution: 0.1,
    actions: [ 'jump', 'do_nothing' ],
    Q: {},
    liveReward: 0,
    scoreReward: 100,
    deathReward: -1000,
    lastAction: 'do_nothing',
    lastDistance: 0,
    lastScore: 0
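For reference, the assignment in `think` has the shape of the standard tabular Q-learning update. Writing it out with the property values above shows what `alpha: 1` and `gamma: 1` do to it. The notation is introduced here and is not part of the code: $s$ and $a$ stand for `lastDistance` and `lastAction`, $s'$ for the current distance, and $r$ for the reward.

```latex
Q(s,a) \leftarrow Q(s,a) + \alpha \bigl( r + \gamma \max_{a'} Q(s',a') - Q(s,a) \bigr)

% substituting \alpha = 1 and \gamma = 1:
Q(s,a) \leftarrow r + \max_{a'} Q(s',a')
```

With these settings each update overwrites the previous estimate completely, and the value of the next state is passed back without any discount.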

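I have to use lastAction/lastDistance to calculate the Q value, because I can't use the current data (the update would be acting on the action performed in the frame before).

The think method is called once every frame, after all rendering and game logic (physics, controls, death, etc.) is done.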

    var JumpGameAIClass = function JumpGame(canvas) {
        Game.JumpGame.call(this, canvas);

        Object.defineProperties(this, {
            epsilon: {
                value: 0.05
            },
            alpha: {
                value: 1
            },
            gamma: {
                value: 1
            },
            resolution: {
                value: 0.1
            },
            actions: {
                value: [ 'jump', 'do_nothing' ]
            },
            Q: {
                value: { },
                writable: true
            },
            liveReward: {
                value: 0
            },
            scoreReward: {
                value: 100
            },
            deathReward: {
                value: -1000
            },
            lastAction: {
                value: 'do_nothing',
                writable: true
            },
            lastDistance: {
                value: 0,
                writable: true
            },
            lastScore: {
                value: 0,
                writable: true
            }
        });
    };

    JumpGameAIClass.prototype = Object.create(Game.JumpGame.prototype);

    JumpGameAIClass.prototype.getQ = function getQ(state) {
        if (!this.Q.hasOwnProperty(state)) {
            this.Q[state] = {};

            for (var actionIndex = 0; actionIndex < this.actions.length; actionIndex++) {
                var action = this.actions[actionIndex];

                this.Q[state][action] = 0;
            }
        }

        return this.Q[state];
    };

    JumpGameAIClass.prototype.getBlockDistance = function getBlockDistance() {
        var closest = -1;

        for (var blockIndex = 0; blockIndex < this.blocks.length; blockIndex++) {
            var block = this.blocks[blockIndex];

            var distance = block.x - this.playerX;

            if (distance >= 0 && (closest === -1 || distance < closest)) {
                closest = distance;
            }
        }

        return Math.max(0, Math.floor(closest * this.resolution));
    };

    JumpGameAIClass.prototype.getActionWithHighestQ = function getActionWithHighestQ(distance) {
        var jumpReward = this.getQ(distance)[this.actions[0]];
        var doNothingReward = this.getQ(distance)[this.actions[1]];

        if (jumpReward > doNothingReward) {
            return this.actions[0];
        } else if (doNothingReward > jumpReward) {
            return this.actions[1];
        } else {
            if (!this.canJump()) {
                return this.actions[1];
            }

            return this.actions[Math.floor(Math.random() * this.actions.length)];
        }
    };

    JumpGameAIClass.prototype.getActionEpsilonGreedy = function getActionEpsilonGreedy() {
        if (!this.canJump()) {
            return this.actions[1];
        }

        if (Math.random() < this.epsilon) {
            return this.actions[Math.floor(Math.random() * this.actions.length)];
        } else {
            return this.getActionWithHighestQ(this.getBlockDistance());
        }
    };

    JumpGameAIClass.prototype.onDeath = function onDeath() {
        this.restart();
    };

    JumpGameAIClass.prototype.think = function think() {
        var reward = this.liveReward;

        if (this.score !== this.lastScore) {
            this.lastScore = this.score;
            reward = this.scoreReward;
        } else if (!this.playerAlive) {
            reward = this.deathReward;
        }

        this.drawDistance();

        var distance = this.getBlockDistance(),
            maxQ = this.getQ(distance)[this.getActionWithHighestQ(distance)],
            previousQ = this.getQ(this.lastDistance)[this.lastAction];

        this.getQ(this.lastDistance)[this.lastAction] = previousQ + this.alpha * (reward + (this.gamma * maxQ) - previousQ);

        this.lastAction = this.getActionEpsilonGreedy();
        this.lastDistance = distance;

        switch (this.lastAction) {
            case this.actions[0]:
                this.jump();
                break;
        }
    };

    JumpGameAIClass.prototype.drawDistance = function drawDistance() {
        this.context.save();

        this.context.textAlign = 'center';
        this.context.textBaseline = 'bottom';

        this.context.fillText('Distance: ' + this.getBlockDistance(), this.canvasWidth / 2, this.canvasHeight / 4);

        this.context.textBaseline = 'top';

        this.context.fillText('Last Distance: ' + this.lastDistance, this.canvasWidth / 2, this.canvasHeight / 4);

        this.context.restore();
    };

    JumpGameAIClass.prototype.onFrame = function onFrame() {
        Game.JumpGame.prototype.onFrame.apply(this, arguments);

        this.think();
    }

    Game.JumpGameAI = JumpGameAIClass;

    body {
        background-color: #EEEEEE;
        text-align: center;
    }

    canvas#game {
        background-color: #FFFFFF;
        border: 1px solid #DDDDDD;
    }

    <!DOCTYPE HTML>
    <html lang="en">
    <head>
        <title>jump</title>
    </head>
    <body>
        <canvas id="game" width="512" height="512">
            <h1>Your browser doesn't support canvas!</h1>
        </canvas>
        <script src="https://raw.githubusercontent.com/cagosta/requestAnimationFrame/master/app/requestAnimationFrame.js"></script>
        <!-- https://gist.github.com/jackwilsdon/d06bffa6b32c53321478 -->
        <script src="https://cdn.rawgit.com/jackwilsdon/d06bffa6b32c53321478/raw/4e467f82590e76543bf55ff788504e26afc3d694/game.js"></script>
        <script src="https://cdn.rawgit.com/jackwilsdon/d06bffa6b32c53321478/raw/2b7ce2c3dd268c4aef9ad27316edb0b235ad0d06/canvasgame.js"></script>
        <script src="https://cdn.rawgit.com/jackwilsdon/d06bffa6b32c53321478/raw/2696c72e001e48359a6ce880f1c475613fe359f5/jump.js"></script>
        <script src="https://cdn.rawgit.com/jackwilsdon/d06bffa6b32c53321478/raw/249c92f3385757b6edf2ceb49e26f14b89ffdcfe/bootstrap.js"></script>
    </body>
    </html>

gamma = 1? Have you tried the more classic 0.9? - Demplo
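To illustrate what this suggestion changes, here is a small sketch that uses the same update formula as `think` on a hypothetical three-step chain ending in a scoring frame. The names `qUpdate` and `backUp` and the chain itself are assumptions made for illustration. The sketch only shows how the discount factor affects backed-up values and does not claim to be the fix for the question.

```javascript
// One tabular Q-learning update, same formula as the assignment in think().
function qUpdate(previousQ, reward, maxNextQ, alpha, gamma) {
    return previousQ + alpha * (reward + gamma * maxNextQ - previousQ);
}

// Hypothetical chain: the reward of 100 arrives only at the final step and
// is backed up one step at a time, starting from the step closest to it.
function backUp(gamma) {
    var alpha = 1;              // as in the question
    var rewards = [100, 0, 0];  // final step first, then the steps before it
    var values = [];
    var next = 0;               // value of whatever follows the final step

    for (var i = 0; i < rewards.length; i++) {
        next = qUpdate(0, rewards[i], next, alpha, gamma);
        values.push(next);
    }

    return values;
}

console.log(backUp(1));   // [ 100, 100, 100 ]
console.log(backUp(0.9)); // [ 100, 90, 81 ]
```

With gamma = 1 every step leading up to the reward ends up with the same value, so the table carries no information about how far away the reward is. With gamma = 0.9 the value shrinks with each step of distance.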