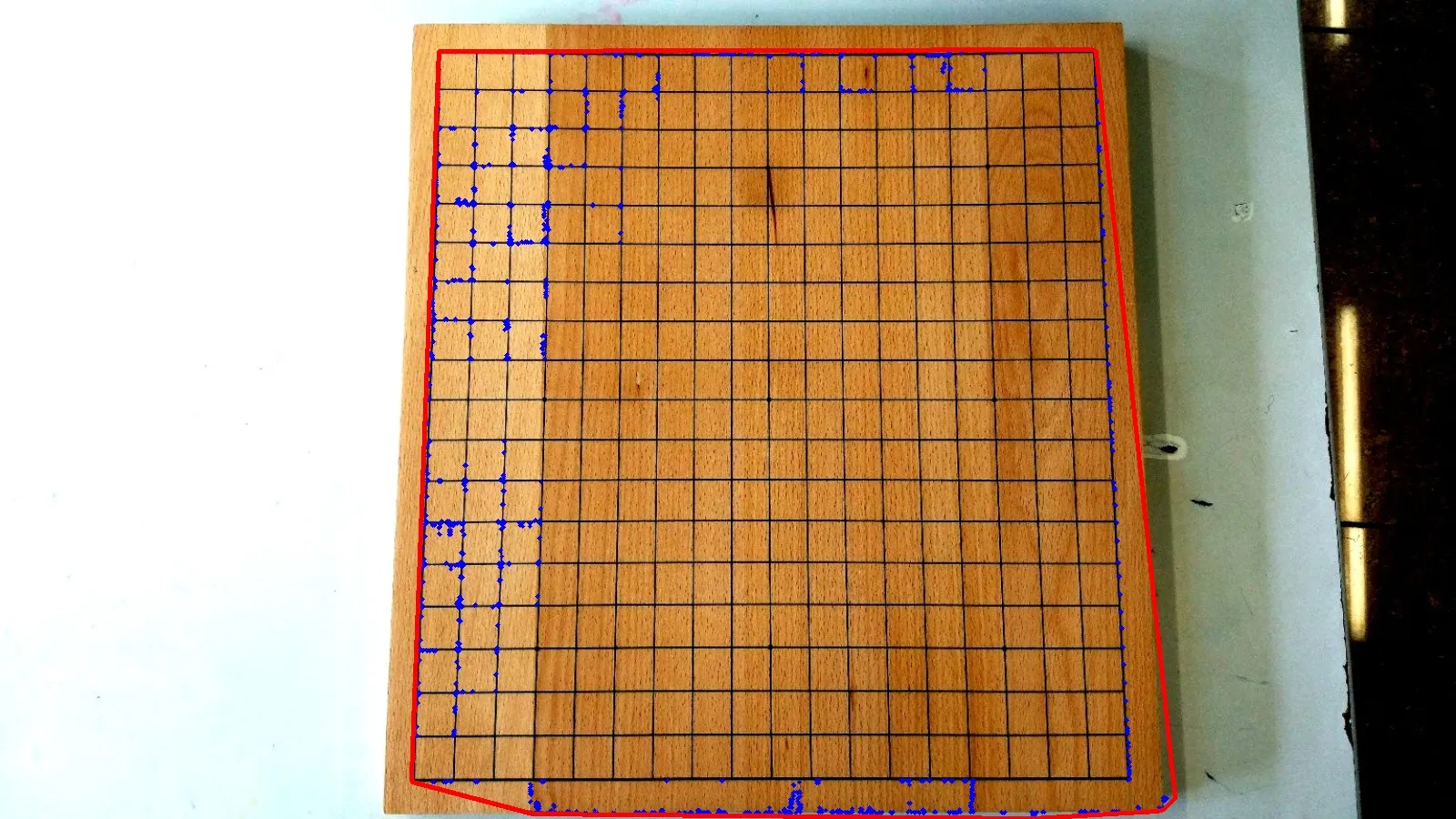

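I need to find a Go board and detect the stones in a photo using OpenCV (cv2) in Python, but I am currently stuck on detecting the board itself: there are strange points within the same contour, and I don't understand how to remove them. This is what I have so far: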

    from skimage import exposure
    import numpy as np
    import argparse
    import imutils
    import cv2

    # -r is used below as the target image height (in pixels) for resizing
    ap = argparse.ArgumentParser()
    ap.add_argument("-r", required = True,
                    help = "ratio", type=int, default = 800)
    args = vars(ap.parse_args())

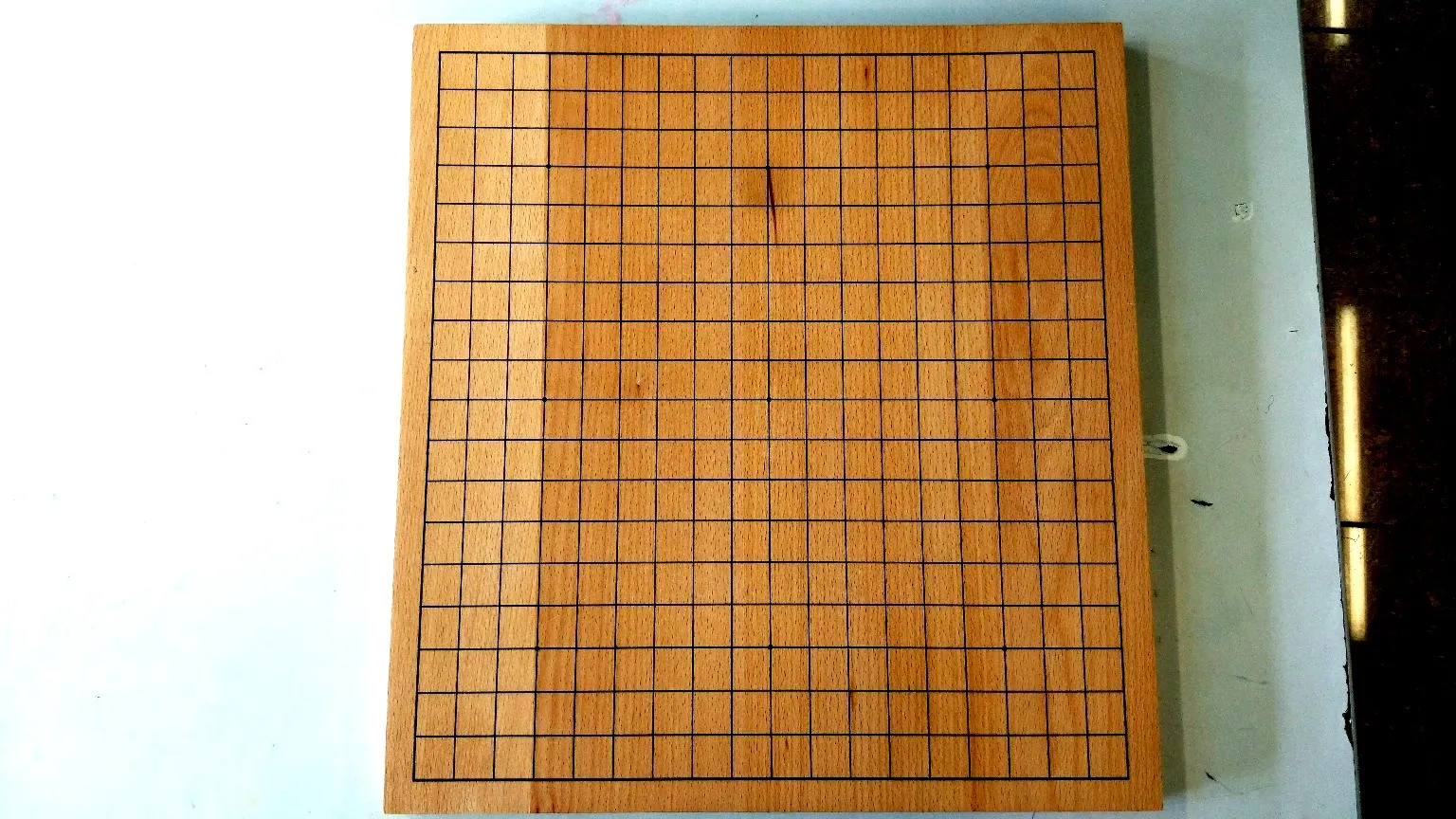

    img = cv2.imread('3.jpg')  # load the image, then change its resolution
    ratio = img.shape[0] / args["r"]  # original height / resized height
    orig = img.copy()
    img = imutils.resize(img, height = args["r"])

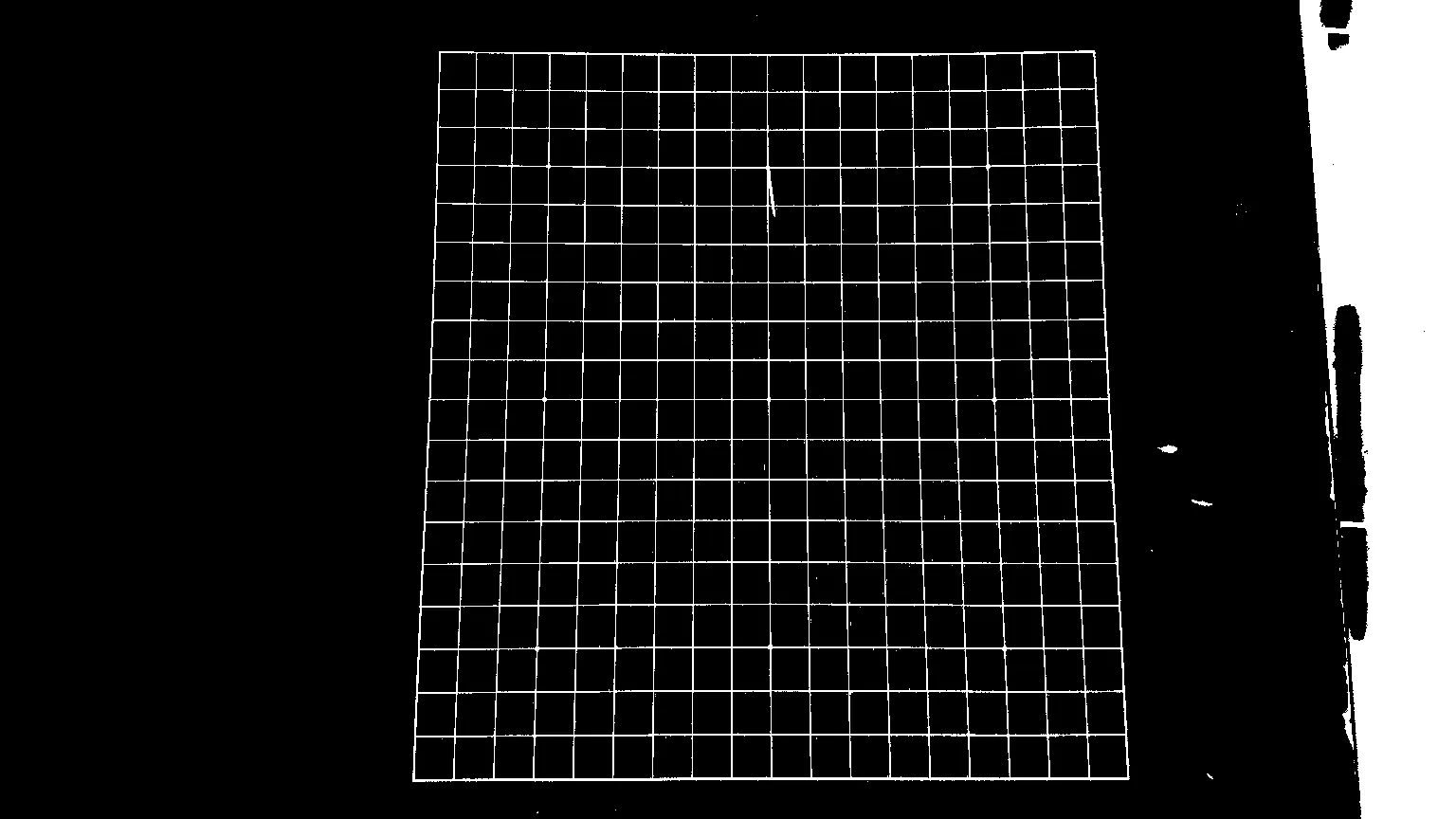

    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    gray = cv2.bilateralFilter(gray, 11, 17, 17)
    edged = cv2.Canny(gray, 30, 200)

    # find the contours and keep the 10 largest by area
    cnts = cv2.findContours(edged.copy(), cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)
    cnts = imutils.grab_contours(cnts)
    cnts = sorted(cnts, key = cv2.contourArea, reverse = True)[:10]

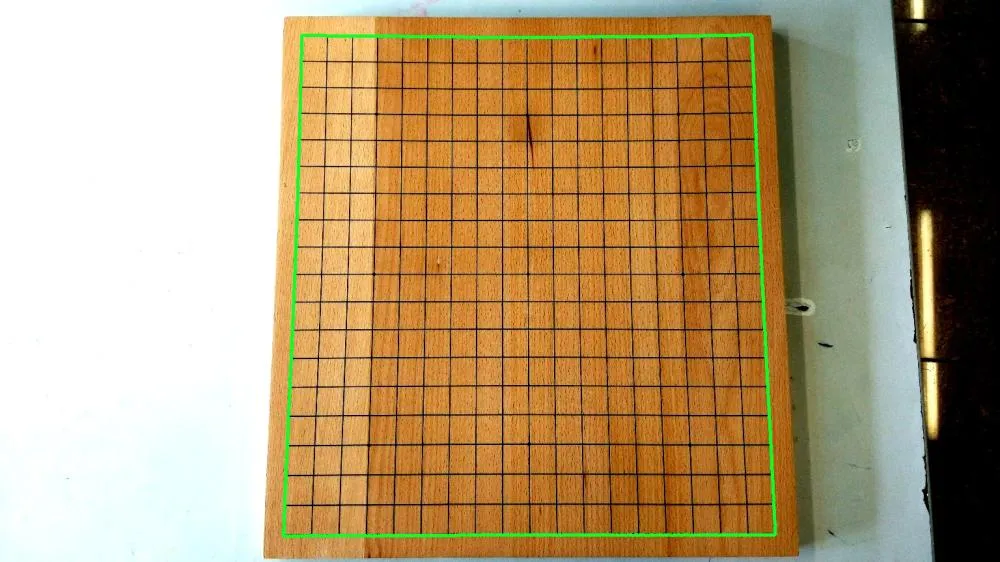

    screenCnt = None

    for cnt in cnts:
        rect = cv2.minAreaRect(cnt)  # fit a minimum-area rotated rectangle to each contour
        box = cv2.boxPoints(rect)
        box = box.astype(np.intp)  # np.int0 is not available in recent NumPy releases
        area = int(rect[1][0]*rect[1][1])  # area of the fitted rectangle, not of the contour itself
        if (area > 300000):
            print(area)
            cv2.drawContours(img, cnt, -1, (255, 0, 0), 4)  # draws the contour's points as dots
            hull = cv2.convexHull(cnt)  # compute the convex hull
            cv2.drawContours(img, [hull], -1, (0, 0, 255), 3)

    cv2.imshow("death", img)
    cv2.waitKey(0)
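To make clear what I expect the convex hull step to do with the stray points, here is a minimal pure-Python sketch of a convex hull (Andrew's monotone chain). It is only an illustration, not OpenCV's implementation of `cv2.convexHull`, and the sample points and the helper names `cross` and `convex_hull` are made up for this example.

```python
def cross(o, a, b):
    """Z-component of the cross product of vectors o->a and o->b."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Return the hull vertices, starting from the smallest (x, y) point."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    # Build the lower chain, dropping points that do not make a left turn.
    lower = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)

    # Build the upper chain the same way, walking the points in reverse.
    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)

    # The last point of each chain is the first point of the other one.
    return lower[:-1] + upper[:-1]


# Hypothetical "contour": four board corners plus stray points inside
# the board and on one of its edges.
contour = [(0, 0), (10, 0), (10, 10), (0, 10), (4, 5), (6, 3), (5, 0)]
hull = convex_hull(contour)
print(hull)
```

In this toy case only the four corners survive, which is the behaviour I am hoping to get for the board outline.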

Source

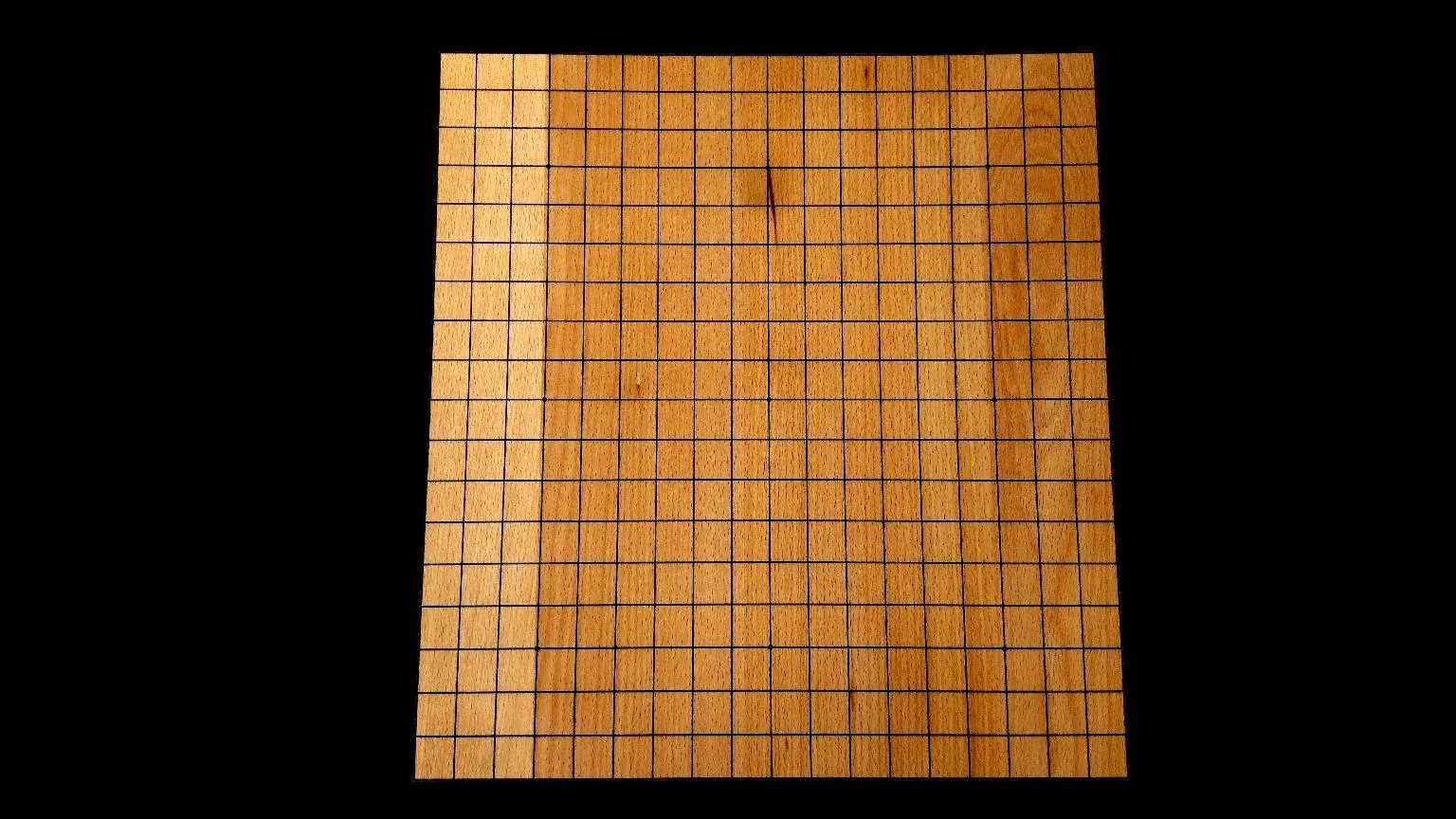

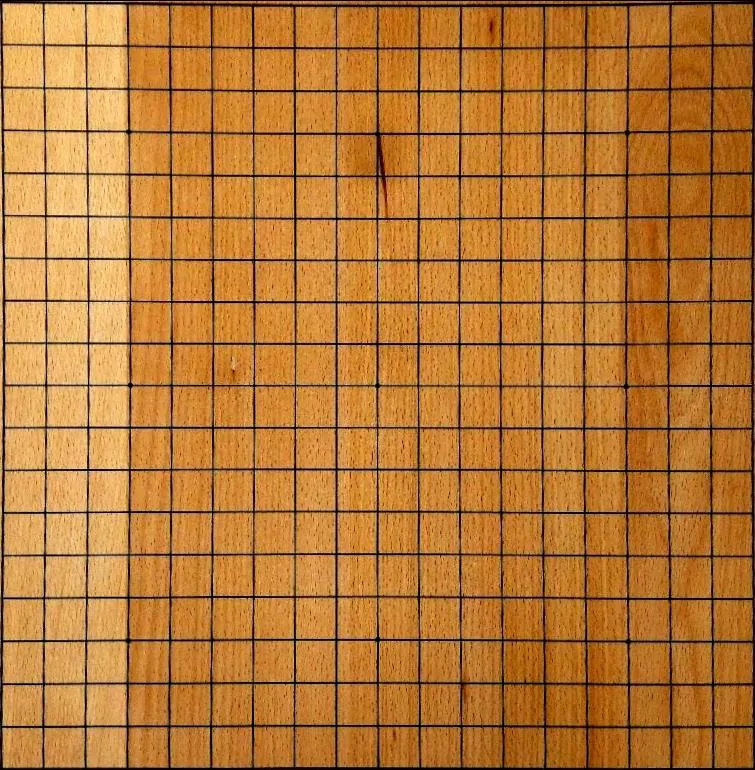

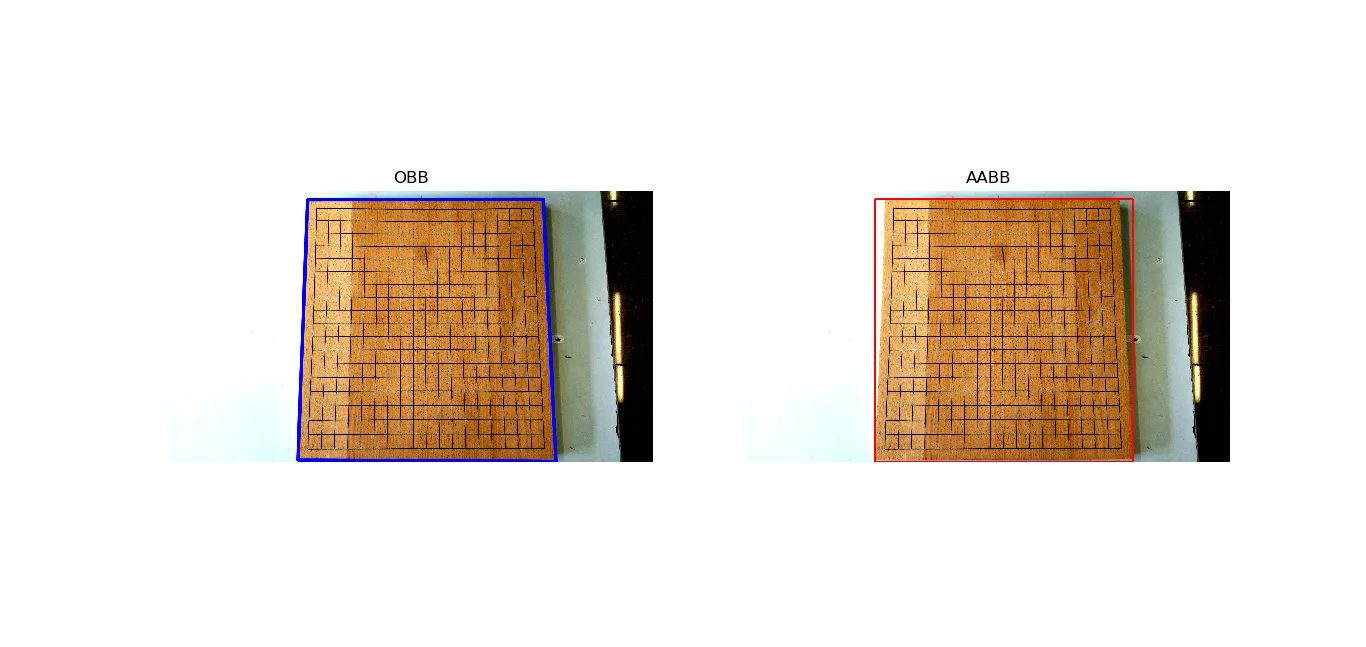

Result

This is done in `cnts = sorted(cnts, key = cv2.contourArea, reverse = True)[:10]`. - zteffi