I am trying to implement the CNN model from this paper (https://arxiv.org/abs/1605.07333).

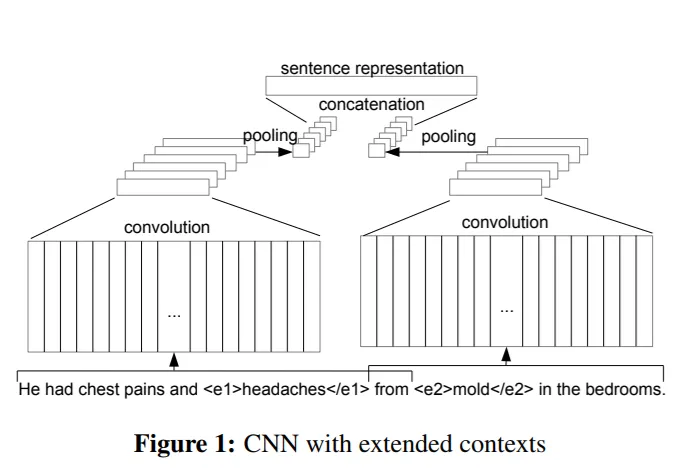

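Here, they have two different contexts as input, each processed by its own independent convolution and max-pooling layers. After pooling, they concatenate the results. Assuming each CNN is modeled like this, how can I implement the model above?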

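```python
from keras.models import Sequential
from keras.layers import (Embedding, Dropout, Conv1D,
                          GlobalMaxPooling1D, Dense, Activation)


def baseline_cnn(activation='relu'):
    # Single-input baseline: embedding -> dropout -> conv -> global max pool -> sigmoid
    model = Sequential()
    model.add(Embedding(SAMPLE_SIZE, EMBEDDING_DIMS, input_length=MAX_SMI_LEN))
    model.add(Dropout(0.2))
    model.add(Conv1D(NUM_FILTERS, FILTER_LENGTH, padding='valid', activation=activation, strides=1))
    model.add(GlobalMaxPooling1D())
    model.add(Dense(1))
    model.add(Activation('sigmoid'))
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
```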

Thanks in advance!

Final code: I simply used @FernandoOrtega's solution:

```python
import keras
from keras.layers import Input, Conv1D, GlobalMaxPooling1D, Dense, Dropout
from keras.models import Model


def build_combined(FLAGS, NUM_FILTERS, FILTER_LENGTH1, FILTER_LENGTH2):
    # Two separate inputs, one per context
    Dinput = Input(shape=(FLAGS.max_dlen, FLAGS.dset_size))
    Tinput = Input(shape=(FLAGS.max_tlen, FLAGS.tset_size))

    # First branch: two convolutions followed by global max pooling
    encode_d = Conv1D(filters=NUM_FILTERS, kernel_size=FILTER_LENGTH1, activation='relu', padding='valid', strides=1)(Dinput)
    encode_d = Conv1D(filters=NUM_FILTERS*2, kernel_size=FILTER_LENGTH1, activation='relu', padding='valid', strides=1)(encode_d)
    encode_d = GlobalMaxPooling1D()(encode_d)

    # Second branch: same structure, applied to the other input
    encode_tt = Conv1D(filters=NUM_FILTERS, kernel_size=FILTER_LENGTH2, activation='relu', padding='valid', strides=1)(Tinput)
    # NOTE: FILTER_LENGTH1 is kept as posted; FILTER_LENGTH2 may have been intended here
    encode_tt = Conv1D(filters=NUM_FILTERS*2, kernel_size=FILTER_LENGTH1, activation='relu', padding='valid', strides=1)(encode_tt)
    encode_tt = GlobalMaxPooling1D()(encode_tt)

    # Merge the pooled outputs of both branches
    encode_combined = keras.layers.concatenate([encode_d, encode_tt])

    # Fully connected
    FC1 = Dense(1024, activation='relu')(encode_combined)
    FC2 = Dropout(0.1)(FC1)
    FC2 = Dense(512, activation='relu')(FC2)
    predictions = Dense(1, kernel_initializer='normal')(FC2)

    combinedModel = Model(inputs=[Dinput, Tinput], outputs=[predictions])
    # The metric name must be a string; note that accuracy says little under a regression loss such as MSE
    combinedModel.compile(optimizer='adam', loss='mean_squared_error', metrics=['accuracy'])
    combinedModel.summary()  # summary() prints the table itself
    return combinedModel
```
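
For anyone who wants to sanity-check the tensor shapes in `build_combined` without building the model, here is a rough sketch in plain Python. It traces each branch through its two `padding='valid'` convolutions and the global max pooling, then adds up the width of the concatenated vector. The helper names and the numeric values (`NUM_FILTERS = 32`, sequence lengths of 100 and 1000, kernel sizes 4 and 8) are my own assumptions for illustration, not values from the paper or the original code.

```python
def conv1d_valid_len(length, kernel_size, stride=1):
    """Output length of a 1D convolution with padding='valid'."""
    if length < kernel_size:
        raise ValueError("input is shorter than the kernel")
    return (length - kernel_size) // stride + 1


def branch_shape(seq_len, num_filters, kernel1, kernel2):
    """Trace one branch: Conv1D -> Conv1D -> GlobalMaxPooling1D.

    Returns (time steps before pooling, feature width after pooling).
    """
    steps = conv1d_valid_len(seq_len, kernel1)  # after the first Conv1D
    steps = conv1d_valid_len(steps, kernel2)    # after the second Conv1D
    # Global max pooling removes the time axis and keeps one value per
    # filter of the last convolution, which has num_filters * 2 filters.
    return steps, num_filters * 2


# Hypothetical values, chosen only for illustration.
NUM_FILTERS, FILTER_LENGTH1, FILTER_LENGTH2 = 32, 4, 8
MAX_DLEN, MAX_TLEN = 100, 1000

# Kernel sizes follow the posted code: (F1, F1) for D and (F2, F1) for T.
d_steps, d_dim = branch_shape(MAX_DLEN, NUM_FILTERS, FILTER_LENGTH1, FILTER_LENGTH1)
t_steps, t_dim = branch_shape(MAX_TLEN, NUM_FILTERS, FILTER_LENGTH2, FILTER_LENGTH1)

# Concatenation joins the two pooled vectors along the feature axis.
combined_dim = d_dim + t_dim

print("D branch:", d_steps, "steps before pooling,", d_dim, "features after")
print("T branch:", t_steps, "steps before pooling,", t_dim, "features after")
print("Concatenated width fed to Dense(1024):", combined_dim)
```

The point to take away is that the two inputs can have different lengths: global max pooling collapses the time axis in each branch, so the concatenated width depends only on the number of filters.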