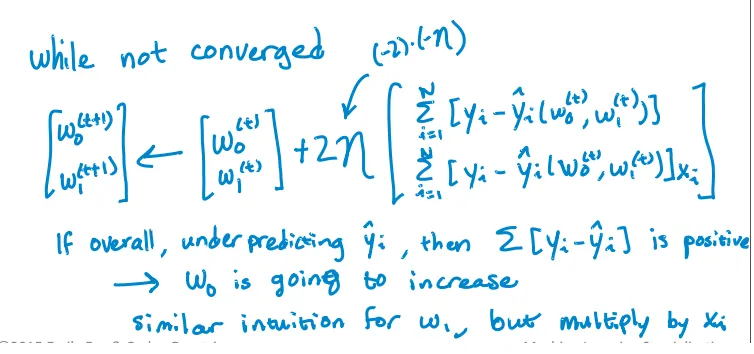

我正在尝试实现这个单变量算法来查找截距和斜率:

以下是我的 Python 代码,用于更新拦截和斜率。但它并没有收敛。RSS 随着迭代而增加,而不是减少,经过一些迭代后变为无穷大。我在实现算法时没有发现任何错误。如何解决这个问题? 我已经附上了 csv 文件。

以下是代码:

import pandas as pd

import numpy as np

#Defining gradient_decend

#This Function takes X value, Y value and vector of w0(intercept),w1(slope)

#INPUT FEATURES=X(sq.feet of house size)

#TARGET VALUE=Y (Price of House)

#W=np.array([w0,w1]).reshape(2,1)

#W=[w0,

# w1]

def gradient_decend(X,Y,W):

intercept=W[0][0]

slope=W[1][0]

#Here i will get a list

#list is like this

#gd=[sum(predicted_value-(intercept+slope*x)),

# sum(predicted_value-(intercept+slope*x)*x)]

gd=[sum(y-(intercept+slope*x) for x,y in zip(X,Y)),

sum(((y-(intercept+slope*x))*x) for x,y in zip(X,Y))]

return np.array(gd).reshape(2,1)

#Defining Resudual sum of squares

def RSS(X,Y,W):

return sum((y-(W[0][0]+W[1][0]*x))**2 for x,y in zip(X,Y))

#Reading Training Data

training_data=pd.read_csv("kc_house_train_data.csv")

#Defining fixed parameters

#Learning Rate

n=0.0001

iteration=1500

#Intercept

w0=0

#Slope

w1=0

#Creating 2,1 vector of w0,w1 parameters

W=np.array([w0,w1]).reshape(2,1)

#Running gradient Decend

for i in range(iteration):

W=W+((2*n)* (gradient_decend(training_data["sqft_living"],training_data["price"],W)))

print RSS(training_data["sqft_living"],training_data["price"],W)

这里 是CSV文件。