val d = sc.parallelize(0 until 1000000).map(i => (i%100000, i)).persist

d.join(d.reduceByKey(_ + _)).collect

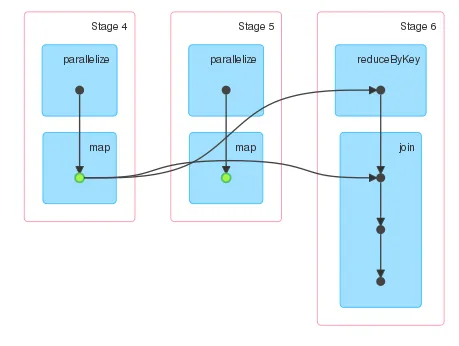

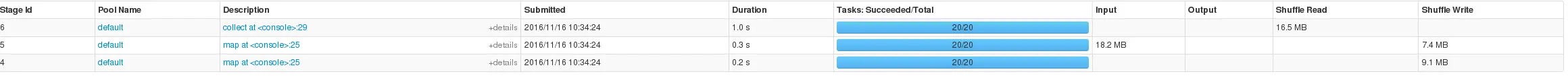

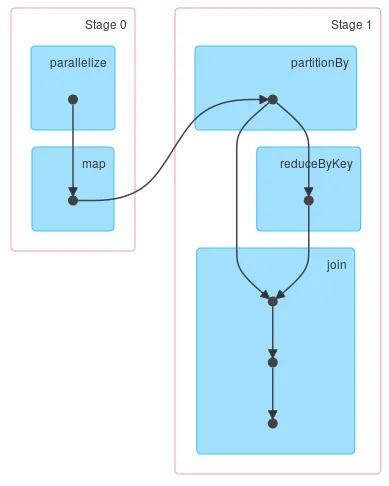

Spark UI 显示三个阶段。第四和第五阶段对应于计算

d,第六阶段对应于计算 collect 操作。由于 d 已经被持续化,我期望只有两个阶段。然而,第五阶段存在但与其他阶段无关。

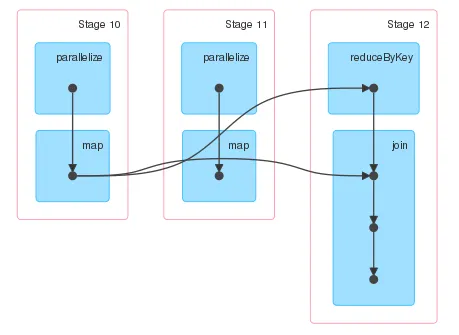

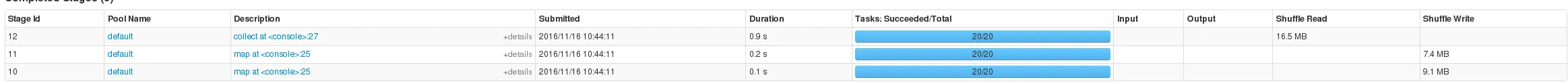

尝试运行相同的计算,但不使用persist,DAG看起来完全一样,只是没有绿色点表示RDD已被持久化。

我希望第11阶段的输出连接到第12阶段的输入,但实际上并没有连接。通过查看阶段描述,这些阶段似乎表明正在持久化d,因为第5阶段有输入,但我仍然不明白为什么第5阶段存在。