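Background and examples of the symptoms

I am using a neural network to do super-resolution (increasing the resolution of images). However, since an image can be large, I need to split it into multiple smaller images, make a prediction on each of them separately, and then merge the results back together.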

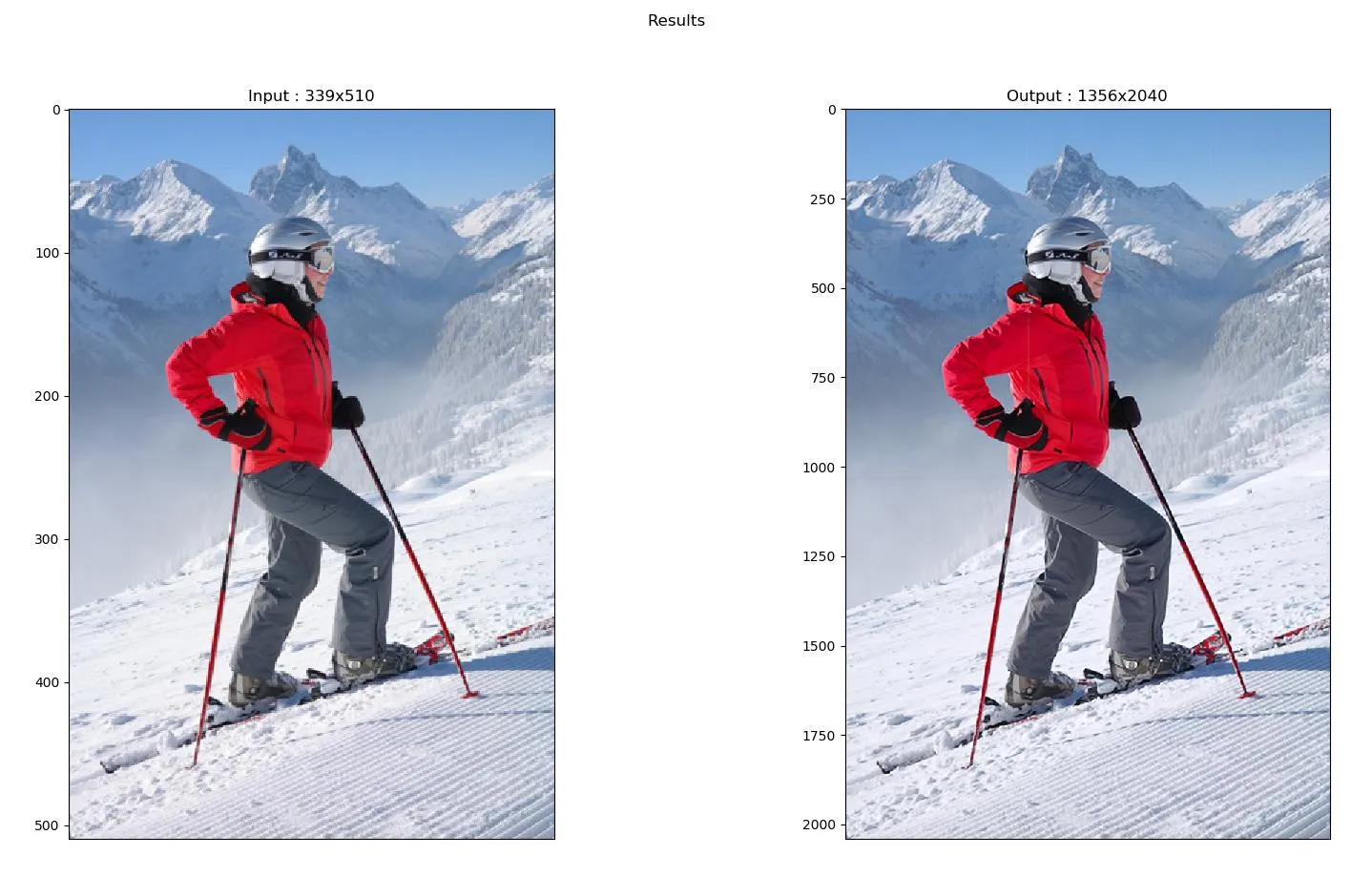

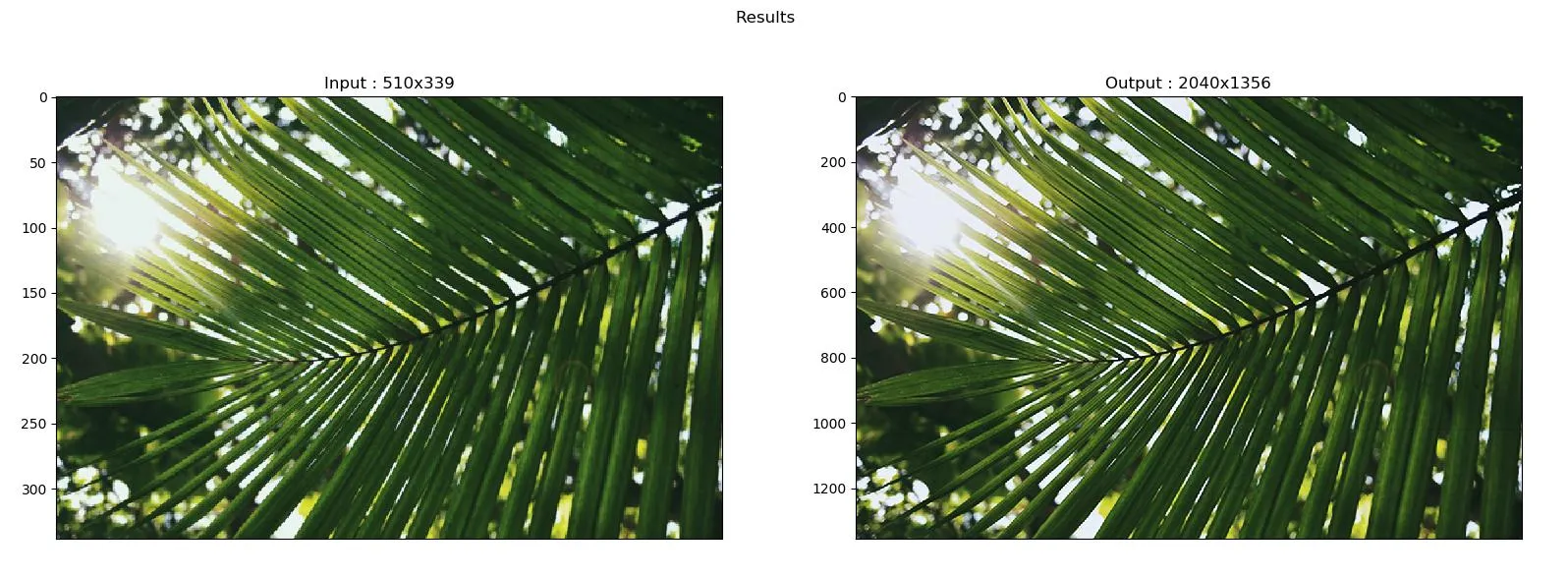

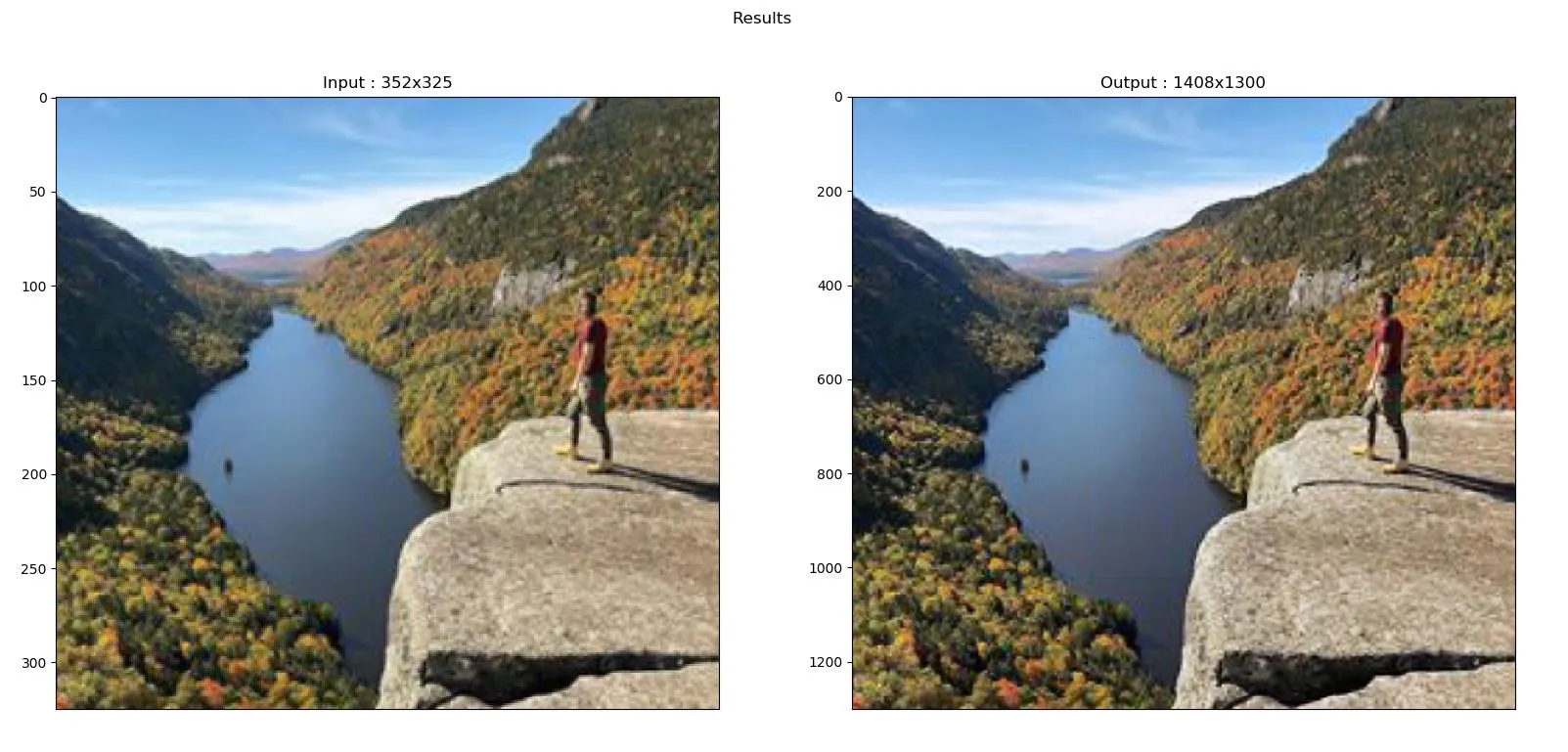

以下是这个过程的示例:

示例1: 在输出图片中,您可以看到一个微妙的垂直线穿过滑雪者的肩膀。

示例2: 一旦您开始看到它们,就会注意到微妙的线条正在整个图像中形成正方形(我将图像分割为单独预测的残留物)。

示例3: 您可以清楚地看到穿过湖泊的垂直线。

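Source of the problem

Basically, my network makes poor predictions near the edges of each sub-image, which I believe is normal because there is less surrounding information available there.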
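To illustrate the edge effect, here is a minimal sketch in which a zero-padded mean filter stands in for the network (this is an assumption for illustration only; `box_blur` and the sizes used are hypothetical and not part of my code). Filtering a tile on its own differs from filtering the whole image only in the columns that lie within the filter radius of the cut, which is where the seams appear.

```python
import numpy as np


def box_blur(img, radius):
    """Mean filter with zero padding; a stand-in for a conv layer with 'same' padding."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="constant")
    out = np.zeros_like(img, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


rng = np.random.default_rng(0)
image = rng.random((40, 40))
radius = 2

full = box_blur(image, radius)          # every pixel sees its real neighbours
tile = box_blur(image[:, :20], radius)  # left half processed on its own

# Columns of the tile whose result differs from the full-context result
error = np.abs(full[:, :20] - tile)
cols_with_error = np.nonzero(error.max(axis=0) > 1e-12)[0]
print(cols_with_error)  # only the columns within `radius` of the cut: [18 19]
```

A real network has a much larger receptive field than this toy filter, so the affected band along each cut would be wider.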

Source code

    import numpy as np
    import matplotlib.pyplot as plt
    import skimage.io
    from keras.models import load_model

    from constants import (verbosity, save_dir, overlap,
                           model_name, tests_path, input_width, input_height)
    from utils import float_im


    def predict(args):
        model = load_model(save_dir + '/' + args.model)

        image = skimage.io.imread(tests_path + args.image)[:, :, :3]  # removing possible extra channels (Alpha)
        print("Image shape:", image.shape)

        predictions = []
        images = []

        crops = seq_crop(image)  # crops into multiple sub-parts the image based on 'input_' constants

        for i in range(len(crops)):  # amount of vertical crops
            for j in range(len(crops[0])):  # amount of horizontal crops
                current_image = crops[i][j]
                images.append(current_image)

        print("Moving on to predictions. Amount:", len(images))

        for p in range(len(images)):
            if p % 3 == 0 and verbosity == 2:
                print("--prediction #", p)

            # Hack because GPU can only handle one image at a time
            input_img = (np.expand_dims(images[p], 0))  # Add the image to a batch where it's the only member
            predictions.append(model.predict(input_img)[0])  # returns a list of lists, one for each image in the batch

        return predictions, image, crops


    def show_pred_output(input, pred):
        plt.figure(figsize=(20, 20))
        plt.suptitle("Results")

        plt.subplot(1, 2, 1)
        plt.title("Input : " + str(input.shape[1]) + "x" + str(input.shape[0]))
        plt.imshow(input, cmap=plt.cm.binary).axes.get_xaxis().set_visible(False)

        plt.subplot(1, 2, 2)
        plt.title("Output : " + str(pred.shape[1]) + "x" + str(pred.shape[0]))
        plt.imshow(pred, cmap=plt.cm.binary).axes.get_xaxis().set_visible(False)

        plt.show()


    # adapted from https://dev59.com/MK7la4cB1Zd3GeqPfpcA#52463034
    def seq_crop(img):
        """
        To crop the whole image in a list of sub-images of the same size.
        Size comes from "input_" variables in the 'constants' (Evaluation).
        Padding with 0 the Bottom and Right image.
        :param img: input image
        :return: list of sub-images with defined size
        """
        width_shape = ceildiv(img.shape[1], input_width)
        height_shape = ceildiv(img.shape[0], input_height)
        sub_images = []  # will contain all the cropped sub-parts of the image

        for j in range(height_shape):
            horizontal = []
            for i in range(width_shape):
                horizontal.append(crop_precise(img, i*input_width, j*input_height, input_width, input_height))
            sub_images.append(horizontal)

        return sub_images


    def crop_precise(img, coord_x, coord_y, width_length, height_length):
        """
        To crop a precise portion of an image.
        When trying to crop outside of the boundaries, the input is padded with zeros.
        :param img: image to crop
        :param coord_x: width coordinate (top left point)
        :param coord_y: height coordinate (top left point)
        :param width_length: width of the cropped portion starting from coord_x
        :param height_length: height of the cropped portion starting from coord_y
        :return: the cropped part of the image
        """
        tmp_img = img[coord_y:coord_y + height_length, coord_x:coord_x + width_length]
        return float_im(tmp_img)  # From [0,255] to [0.,1.]


    # from https://dev59.com/Y2Uq5IYBdhLWcg3wEcRZ#17511341
    def ceildiv(a, b):
        return -(-a // b)


    # adapted from https://stackoverflow.com/a/52733370/9768291
    def reconstruct(predictions, crops):
        # unflatten predictions
        def nest(data, template):
            data = iter(data)
            return [[next(data) for _ in row] for row in template]

        if len(crops) != 0:
            predictions = nest(predictions, crops)

        H = np.cumsum([x[0].shape[0] for x in predictions])
        W = np.cumsum([x.shape[1] for x in predictions[0]])
        D = predictions[0][0]
        recon = np.empty((H[-1], W[-1], D.shape[2]), D.dtype)
        for rd, rs in zip(np.split(recon, H[:-1], 0), predictions):
            for d, s in zip(np.split(rd, W[:-1], 1), rs):
                d[...] = s
        return recon


    if __name__ == '__main__':
        # NOTE: `args` is not defined in this excerpt; it is assumed to come from a
        # command-line parser with `model`, `image` and `save` attributes.
        print(" - ", args)

        preds, original, crops = predict(args)  # returns the predictions along with the original
        enhanced = reconstruct(preds, crops)  # reconstructs the enhanced image from predictions

        plt.imsave('output/' + args.save, enhanced, cmap=plt.cm.gray)

        show_pred_output(original, enhanced)

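Question (what I want)

There are plenty of obvious, naive approaches to this problem, but I'm convinced there must be a very concise way of doing it: how can I add an `overlap_amount` variable that lets me make overlapping predictions, so that the "edge parts" of each sub-image ("segment") are discarded and replaced with the predictions from the surrounding segments (since those do not contain "edge predictions" at that location)?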
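To make the idea concrete, here is a rough sketch of one way it could work, not a definitive implementation. Everything in it is an assumption for illustration: the function name `predict_overlapped`, its parameters, the choice of reflect padding at the image border, and the stand-in `upscale` function that replaces the real model. Each tile is predicted with `overlap_amount` extra pixels of context on every side, and only the centre of each prediction is written into the output.

```python
import numpy as np


def predict_overlapped(image, predict_fn, tile=20, overlap_amount=4, scale=4):
    """Predict overlapping tiles and keep only the centre of each prediction.

    `predict_fn` (hypothetical) maps a (tile, tile, C) array to a
    (tile*scale, tile*scale, C) array.
    """
    core = tile - 2 * overlap_amount  # pixels of each tile that are kept
    if core <= 0:
        raise ValueError("overlap_amount must be smaller than half the tile size")
    h, w = image.shape[:2]
    rows, cols = -(-h // core), -(-w // core)  # ceiling division

    # Pad so that every kept pixel has `overlap_amount` pixels of context and
    # the grid of tiles covers the whole image (reflect padding is an assumption).
    pad_bottom = rows * core - h + overlap_amount
    pad_right = cols * core - w + overlap_amount
    padded = np.pad(image,
                    ((overlap_amount, pad_bottom), (overlap_amount, pad_right), (0, 0)),
                    mode="reflect")

    o, c = overlap_amount * scale, core * scale  # same quantities in output pixels
    out = np.empty((rows * c, cols * c, image.shape[2]), dtype=np.float32)
    for r in range(rows):
        for q in range(cols):
            y, x = r * core, q * core
            pred = predict_fn(padded[y:y + tile, x:x + tile])
            out[r * c:(r + 1) * c, q * c:(q + 1) * c] = pred[o:o + c, o:o + c]
    return out[:h * scale, :w * scale]  # drop what came from bottom/right padding


def upscale(t, scale=4):
    """Stand-in for the network: nearest-neighbour upscaling."""
    return np.repeat(np.repeat(t, scale, axis=0), scale, axis=1)


rng = np.random.default_rng(0)
image = rng.random((50, 70, 3)).astype(np.float32)
result = predict_overlapped(image, upscale, tile=20, overlap_amount=4, scale=4)
print(result.shape)  # (200, 280, 3)
```

With the real model, `predict_fn` would presumably wrap something like `model.predict(np.expand_dims(t, 0))[0]`, after the same `float_im` conversion that `crop_precise` applies.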

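Of course, I want to minimize the amount of "useless" predictions (pixels that get discarded). It is also worth noting that an input segment produces an output segment that is 4 times larger in each dimension (i.e. a 20x20 pixel input gives you an 80x80 pixel output).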
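The trade-off can be estimated with a little arithmetic. The sketch below assumes square tiles and an overlap of `overlap_amount` pixels discarded on every side of each tile; the helper `overlap_cost` and the 200x300 example image are hypothetical.

```python
def ceildiv(a, b):
    return -(-a // b)


def overlap_cost(height, width, tile, overlap_amount, scale=4):
    """Estimate how many predictions are needed and how much of each is discarded."""
    core = tile - 2 * overlap_amount  # input pixels kept per tile side
    if core <= 0:
        raise ValueError("overlap_amount must be smaller than half the tile size")
    return {
        "tiles_without_overlap": ceildiv(height, tile) * ceildiv(width, tile),
        "tiles_with_overlap": ceildiv(height, core) * ceildiv(width, core),
        "discarded_fraction_per_tile": 1 - (core * core) / (tile * tile),
        "output_tile_side": tile * scale,  # e.g. 20 -> 80
        "kept_output_side": core * scale,  # centre region that is written out
    }


cost = overlap_cost(200, 300, tile=20, overlap_amount=2)
print(cost)
```

For a 200x300 image with 20x20 tiles, an overlap of 2 pixels raises the number of predictions from 150 to 247 and discards about 36% of every predicted tile, so the overlap should be kept as small as the network's edge artifacts allow.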