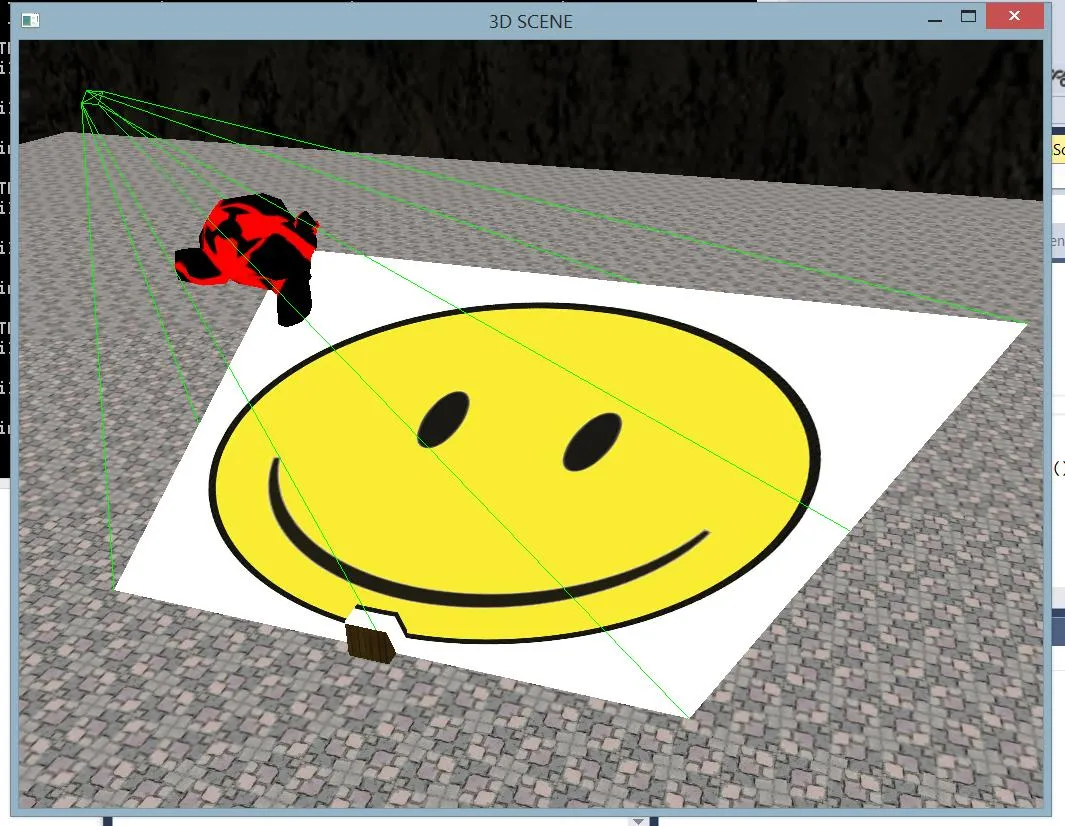

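I am trying to implement a simple projective texture mapping approach using shaders in OpenGL 3+. There are some examples on the web, but I am having trouble putting together a working example with shaders.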

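I am planning to use two shader programs, one for the normal scene draw and one for projective texture mapping. I have a function that draws the scene, void ProjTextureMappingScene::renderScene(GLFWwindow *window), and I use glUseProgram() to switch between the programs. The normal drawing works fine. However, it is unclear to me how I am supposed to render the projected texture on top of a cube that is already textured. Do I need a stencil buffer or a framebuffer object for this (the rest of the scene should be unaffected)?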

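I also suspect that my projective texture mapping shaders are incorrect, because the cube shows up black the second time I render it. I tried to debug with colors, and only the t component of the texture coordinates seems to be non-zero (so the cube appears green). In the fragment shader below I overwrite vTexColor for debugging purposes only.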

Vertex shader

    #version 330

    uniform mat4 TexGenMat;
    uniform mat4 InvViewMat;
    uniform mat4 P;
    uniform mat4 MV;
    uniform mat4 N;

    layout (location = 0) in vec3 inPosition;
    //layout (location = 1) in vec2 inCoord;
    layout (location = 2) in vec3 inNormal;

    out vec3 vNormal, eyeVec;
    out vec2 texCoord;
    out vec4 projCoords;

    void main()
    {
        vNormal = (N * vec4(inNormal, 0.0)).xyz;

        vec4 posEye = MV * vec4(inPosition, 1.0);
        vec4 posWorld = InvViewMat * posEye;
        projCoords = TexGenMat * posWorld;

        // only needed for specular component
        // currently not used
        eyeVec = -posEye.xyz;

        gl_Position = P * MV * vec4(inPosition, 1.0);
    }
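The vertex shader relies on InvViewMat undoing exactly the view part of MV, so that posWorld really is a world-space position. The following is a minimal CPU-side sketch of that identity in plain C++, assuming MV is composed as view * model (the code shown does not confirm this). Vec4, Mat4, translate, mulVec, mulMat and recoverWorldPosition are hypothetical stand-ins for the GLM types and operators so that the snippet compiles without GLM, and only translations are used so that the inverse is trivial.

```cpp
#include <array>
#include <cassert>

// Hypothetical stand-ins for glm::vec4 / glm::mat4 (column-major: m[col][row]).
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<Vec4, 4>;

// Translation matrix: identity with the offset stored in the last column.
Mat4 translate(float tx, float ty, float tz) {
    Mat4 m{};  // zero-initialised
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    m[3] = {tx, ty, tz, 1.0f};
    return m;
}

// Matrix * column vector.
Vec4 mulVec(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int c = 0; c < 4; ++c)
        for (int row = 0; row < 4; ++row)
            r[row] += m[c][row] * v[c];
    return r;
}

// Matrix * matrix, computed column by column.
Mat4 mulMat(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int c = 0; c < 4; ++c) r[c] = mulVec(a, b[c]);
    return r;
}

// Mirrors the shader: posWorld = InvViewMat * (MV * position).
Vec4 recoverWorldPosition(const Mat4& invView, const Mat4& modelView,
                          const Vec4& position) {
    return mulVec(invView, mulVec(modelView, position));
}
```

With a model translation of (1, 2, 3) and a view translation of (0, 0, -5), passing the inverse view translate(0, 0, 5) returns the object-space origin as the world-space point (1, 2, 3, 1). If the matrix being inverted also contains the model transform, the same product collapses back to the object-space position instead.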

Fragment shader

    #version 330

    uniform sampler2D projMap;
    uniform sampler2D gSampler;
    uniform vec4 vColor;

    in vec3 vNormal, lightDir, eyeVec;
    //in vec2 texCoord;
    in vec4 projCoords;

    out vec4 outputColor;

    struct DirectionalLight
    {
        vec3 vColor;
        vec3 vDirection;
        float fAmbientIntensity;
    };

    uniform DirectionalLight sunLight;

    void main (void)
    {
        // suppress the reverse projection
        if (projCoords.q > 0.0)
        {
            vec2 finalCoords = projCoords.st / projCoords.q;
            vec4 vTexColor = texture(gSampler, finalCoords);

            // only t has non-zero values... why?
            vTexColor = vec4(finalCoords.s, finalCoords.t, finalCoords.r, 1.0);
            //vTexColor = vec4(projCoords.s, projCoords.t, projCoords.r, 1.0);

            float fDiffuseIntensity = max(0.0, dot(normalize(vNormal), -sunLight.vDirection));
            outputColor = vTexColor*vColor*vec4(sunLight.vColor * (sunLight.fAmbientIntensity + fDiffuseIntensity), 1.0);
        }
    }
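To check what the debug colors should look like, the divide performed in the fragment shader can be replicated on the CPU for a few known points. The following is a minimal sketch in plain C++; projectiveDivide and insideProjection are hypothetical helper names, and Vec2 and Vec4 are stand-ins for the GLSL vector types. Note that in GLSL the swizzle sets xyzw, rgba and stpq name the same components, so finalCoords.r on a vec2 is the same value as finalCoords.s. The debug color therefore carries s in both red and blue, and t in green.

```cpp
#include <array>
#include <cassert>

using Vec2 = std::array<float, 2>;
using Vec4 = std::array<float, 4>;  // (s, t, p, q), same slots as (x, y, z, w)

// Mirrors the shader's branch: reject q <= 0 (points behind the projector),
// otherwise write (s / q, t / q) to `out` and return true.
bool projectiveDivide(const Vec4& projCoords, Vec2& out) {
    const float q = projCoords[3];
    if (q <= 0.0f) return false;
    out = {projCoords[0] / q, projCoords[1] / q};
    return true;
}

// True when the divided coordinates land inside the projected image,
// i.e. inside the unit square [0, 1] x [0, 1].
bool insideProjection(const Vec2& st) {
    return st[0] >= 0.0f && st[0] <= 1.0f &&
           st[1] >= 0.0f && st[1] <= 1.0f;
}
```

For example, projCoords = (1, 2, 0, 4) divides to (0.25, 0.5), which lies inside the projected image, while any input with a negative q is rejected, matching the reverse-projection guard in the shader.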

Creating the TexGen matrix

    biasMatrix = glm::mat4(0.5f, 0,    0,    0.5f,
                           0,    0.5f, 0,    0.5f,
                           0,    0,    0.5f, 0.5f,
                           0,    0,    0,    1);

    // 4:3 perspective with 45 fov
    projectorP = glm::perspective(45.0f * zoomFactor, 4.0f / 3.0f, 0.1f, 1000.0f);
    projectorOrigin = glm::vec3(-3.0f, 3.0f, 0.0f);
    projectorTarget = glm::vec3(0.0f, 0.0f, 0.0f);
    projectorV = glm::lookAt(projectorOrigin,             // projector origin
                             projectorTarget,             // project on object at origin
                             glm::vec3(0.0f, 1.0f, 0.0f)  // Y axis is up
                             );
    mModel = glm::mat4(1.0f);

    ...

    texGenMatrix = biasMatrix * projectorP * projectorV * mModel;
    invViewMatrix = glm::inverse(mModel*mModelView);
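One detail worth double-checking in the matrix setup is argument order: glm::mat4's 16-scalar constructor fills the matrix column by column, so a literal laid out row by row in the source ends up transposed. The following is a minimal sketch in plain C++ that compares the two orderings of the bias matrix. fromScalars, mulVec, biasAsTyped and biasColumnMajor are hypothetical names, and Mat4 is a hand-rolled stand-in that follows GLM's column-major convention so that the snippet compiles without GLM.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<Vec4, 4>;  // column-major: m[col][row]

// Consumes 16 scalars column by column, the order glm::mat4's scalar
// constructor uses.
Mat4 fromScalars(const std::array<float, 16>& s) {
    Mat4 m{};
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r)
            m[c][r] = s[c * 4 + r];
    return m;
}

// Matrix * column vector.
Vec4 mulVec(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int c = 0; c < 4; ++c)
        for (int row = 0; row < 4; ++row)
            r[row] += m[c][row] * v[c];
    return r;
}

// The scalars in the order they appear in the listing above: the 0.5
// offsets end up in the bottom row (the w component of each column).
Mat4 biasAsTyped() {
    return fromScalars({{0.5f, 0.0f, 0.0f, 0.5f,
                         0.0f, 0.5f, 0.0f, 0.5f,
                         0.0f, 0.0f, 0.5f, 0.5f,
                         0.0f, 0.0f, 0.0f, 1.0f}});
}

// Scale by 0.5 with the 0.5 offsets in the last column, which maps
// clip-space [-1, 1] to texture-space [0, 1].
Mat4 biasColumnMajor() {
    return fromScalars({{0.5f, 0.0f, 0.0f, 0.0f,
                         0.0f, 0.5f, 0.0f, 0.0f,
                         0.0f, 0.0f, 0.5f, 0.0f,
                         0.5f, 0.5f, 0.5f, 1.0f}});
}
```

Applied to the corner (-1, -1, -1, 1), biasColumnMajor() yields (0, 0, 0, 1) as expected for a scale-and-bias, whereas biasAsTyped() yields (-0.5, -0.5, -0.5, -0.5), which changes q and therefore the result of the divide in the fragment shader.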

Rendering the cube again

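It is unclear to me what the model-view matrix of the cube should be. Should it use the view matrix of the slide projector (as it does now) or that of the normal scene camera? Currently the cube renders black (green when debugging) in the scene view, exactly as it would appear from the slide projector (I added a toggle hotkey so that I can see what the slide projector "sees"). The cube also moves with the view. How do I get the projection onto the cube itself?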

    mModel = glm::translate(projectorV, projectorOrigin);

    // bind projective texture
    tTextures[2].bindTexture();

    // set all uniforms
    ...

    // bind VBO data and draw
    glBindVertexArray(uiVAOSceneObjects);
    glDrawArrays(GL_TRIANGLES, 6, 36);

Switching between the main scene camera and the slide projector camera

    if (useMainCam)
    {
        mCurrent = glm::mat4(1.0f);
        mModelView = mModelView*mCurrent;
        mProjection = *pipeline->getProjectionMatrix();
    }
    else
    {
        mModelView = projectorV;
        mProjection = projectorP;
    }