I have written the following simple MLP network for the MNIST dataset.

    from __future__ import print_function

    import keras
    from keras.datasets import mnist
    from keras.models import Sequential
    from keras.layers import Dense, Dropout
    from keras import callbacks

    batch_size = 100
    num_classes = 10
    epochs = 20

    tb = callbacks.TensorBoard(log_dir='/Users/shlomi.shwartz/tensorflow/notebooks/logs/minist',
                               histogram_freq=10, batch_size=32,
                               write_graph=True, write_grads=True, write_images=True,
                               embeddings_freq=10, embeddings_layer_names=None,
                               embeddings_metadata=None)

    early_stop = callbacks.EarlyStopping(monitor='val_loss', min_delta=0,
                                         patience=3, verbose=1, mode='auto')

    # the data, shuffled and split between train and test sets
    (x_train, y_train), (x_test, y_test) = mnist.load_data()

    x_train = x_train.reshape(60000, 784)
    x_test = x_test.reshape(10000, 784)
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')
    x_train /= 255
    x_test /= 255
    print(x_train.shape[0], 'train samples')
    print(x_test.shape[0], 'test samples')

    # convert class vectors to binary class matrices
    y_train = keras.utils.to_categorical(y_train, num_classes)
    y_test = keras.utils.to_categorical(y_test, num_classes)

    model = Sequential()
    model.add(Dense(200, activation='relu', input_shape=(784,)))
    model.add(Dropout(0.2))
    model.add(Dense(100, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(60, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(30, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(10, activation='softmax'))

    model.summary()

    model.compile(loss='categorical_crossentropy',
                  optimizer='adam',
                  metrics=['accuracy'])

    history = model.fit(x_train, y_train,
                        callbacks=[tb, early_stop],
                        batch_size=batch_size,
                        epochs=epochs,
                        verbose=1,
                        validation_data=(x_test, y_test))

    score = model.evaluate(x_test, y_test, verbose=0)
    print('Test loss:', score[0])
    print('Test accuracy:', score[1])

    Traceback (most recent call last):
      File "/Users/shlomi.shwartz/IdeaProjects/TF/src/minist.py", line 65, in <module>
        validation_data=(x_test, y_test))
      File "/Users/shlomi.shwartz/tensorflow/lib/python3.6/site-packages/keras/models.py", line 870, in fit
        initial_epoch=initial_epoch)
      File "/Users/shlomi.shwartz/tensorflow/lib/python3.6/site-packages/keras/engine/training.py", line 1507, in fit
        initial_epoch=initial_epoch)
      File "/Users/shlomi.shwartz/tensorflow/lib/python3.6/site-packages/keras/engine/training.py", line 1117, in _fit_loop
        callbacks.set_model(callback_model)
      File "/Users/shlomi.shwartz/tensorflow/lib/python3.6/site-packages/keras/callbacks.py", line 52, in set_model
        callback.set_model(model)
      File "/Users/shlomi.shwartz/tensorflow/lib/python3.6/site-packages/keras/callbacks.py", line 719, in set_model
        self.saver = tf.train.Saver(list(embeddings.values()))
      File "/usr/local/Cellar/python3/3.6.1/Frameworks/Python.framework/Versions/3.6/lib/python3.6/site-packages/tensorflow/python/training/saver.py", line 1139, in __init__
        self.build()
      File "/usr/local/Cellar/python3/3.6.1/Frameworks/Python.framework/Versions/3.6/lib/python3.6/site-packages/tensorflow/python/training/saver.py", line 1161, in build
        raise ValueError("No variables to save")
    ValueError: No variables to save

Q: What am I missing? Is this the right way to do things in Keras?

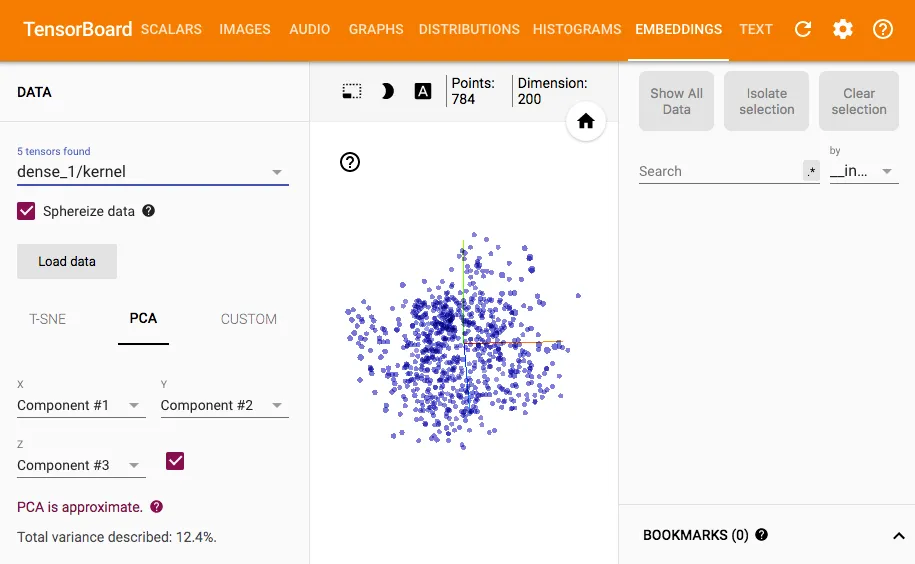

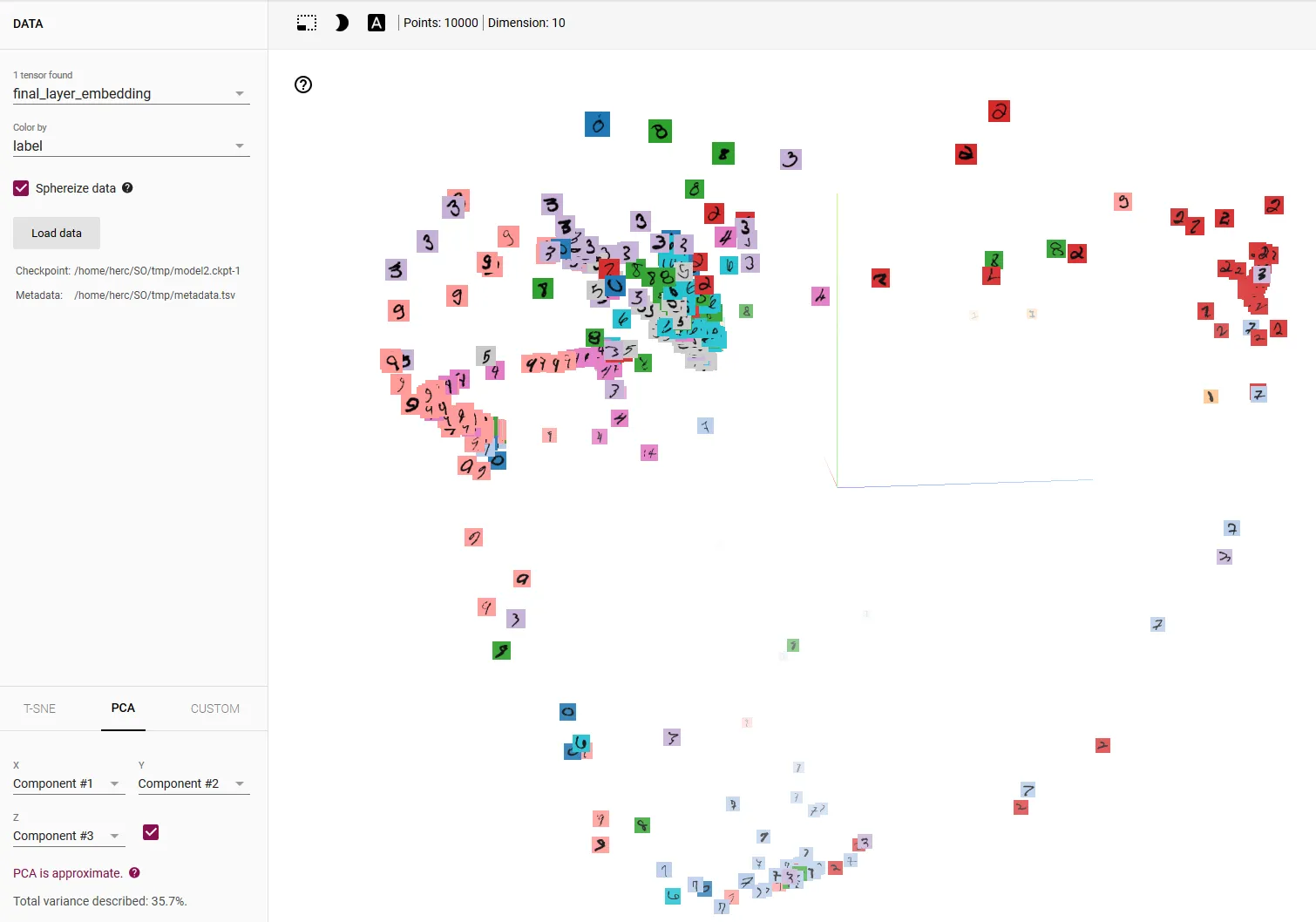

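Update: I know there are some prerequisites for using the embedding projector, but I have not found a good Keras tutorial that covers them. Any help would be much appreciated.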

What was the value of embeddings_freq before you changed it to 10 and the error appeared? - DarkCygnus
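
One possible reading of the traceback: the failing line is `tf.train.Saver(list(embeddings.values()))`, so the list handed to the saver was probably empty. That would fit a callback that, when `embeddings_freq` is non-zero and `embeddings_layer_names` is `None`, only collects weights from layers of type `Embedding`, of which this MLP has none. The sketch below is a simplified stand-in for that selection logic and not the Keras source; the stub classes and the helper `collect_embedding_variables` are hypothetical names made up for illustration.

```python
# Hypothetical stubs that mimic only the attributes the selection logic reads.
# They are NOT Keras classes.
class Dense:
    def __init__(self, name):
        self.name = name
        self.weights = [name + "/kernel", name + "/bias"]


class Dropout:
    def __init__(self, name):
        self.name = name
        self.weights = []


class Embedding:
    def __init__(self, name):
        self.name = name
        self.weights = [name + "/embeddings"]


def collect_embedding_variables(layers, embeddings_layer_names=None):
    """Return the variables an embedding saver would be asked to save.

    Assumption: with no explicit names, only layers whose class is called
    'Embedding' are selected, and the first weight of each is kept.
    """
    if not embeddings_layer_names:
        embeddings_layer_names = [layer.name for layer in layers
                                  if type(layer).__name__ == "Embedding"]
    return [layer.weights[0] for layer in layers
            if layer.name in embeddings_layer_names]


# Same layer types as the MLP in the question: no Embedding layer anywhere.
mlp_layers = [Dense("dense_1"), Dropout("dropout_1"), Dense("dense_2")]
print(collect_embedding_variables(mlp_layers))

# A model that does contain an Embedding layer yields one variable.
with_embedding = [Embedding("embedding_1"), Dense("dense_1")]
print(collect_embedding_variables(with_embedding))
```

If that reading is right, leaving `embeddings_freq` at 0 should skip the embedding saver for a model like this one, and naming an existing layer in `embeddings_layer_names` would be the other direction to explore. Both are suggestions to try, not confirmed fixes.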