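I'm using Flask and Celery and trying to lock a specific task so that only one instance can run at a time. The Celery docs give an example of how to do this (Celery docs, Ensuring a task is only executed one at a time). That example is written for Django, but I've done my best to convert it to work with Flask. However, I'm still finding that myTask1, which has the lock, can run multiple times.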

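One thing that isn't clear to me is whether I'm using the cache correctly. I've never used one before, so all of this is new to me. One thing the docs mention but don't explain is: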

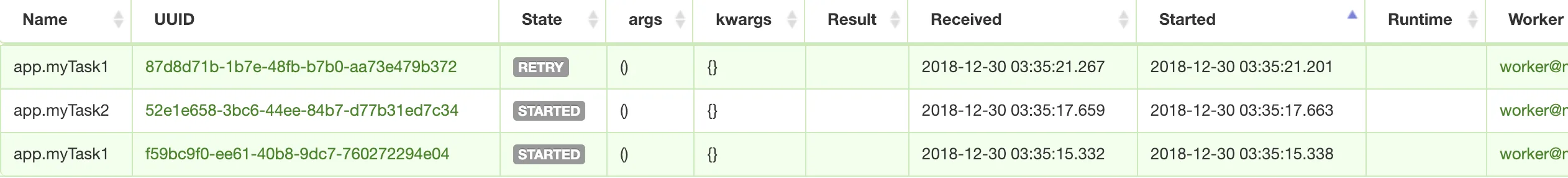

The docs state:

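> In order for this to work correctly you need to be using a cache backend where the .add operation is atomic. memcached is known to work well for this purpose.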

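I'm not sure what this means. Should I be using the cache together with a database, and if so, how? I'm using mongodb. In my code I simply set up the cache with `cache = Cache(app, config={'CACHE_TYPE': 'simple'})`, because that is what the Flask-Cache docs show (Flask-Cache Docs).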
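A toy model may help with what "an atomic .add" means and why `CACHE_TYPE: 'simple'` can't provide it here. `InMemoryCache` below is a hypothetical class, not the Flask-Caching API; it only mimics `add`'s "write the key only if it is absent" behavior, doing the check and the write as one step. As far as I know, the 'simple' backend keeps its entries in a dictionary inside the current Python process, so each Celery worker process gets its own separate store and a lock key written by one worker is invisible to the others. A shared server such as memcached or Redis avoids this because every process talks to the same store.

```python
import threading


class InMemoryCache:
    """Hypothetical stand-in for a per-process cache like CACHE_TYPE 'simple'."""

    def __init__(self):
        self._data = {}
        self._guard = threading.Lock()

    def add(self, key, value):
        # Check and write under one lock so no other thread in this process
        # can slip in between them; that is what "add is atomic" means.
        with self._guard:
            if key in self._data:
                return False  # key already exists: the lock is taken
            self._data[key] = value
            return True


# One store shared by everyone: the second add is refused, so the lock works.
shared = InMemoryCache()
print(shared.add("app.myTask1", "worker-1"), shared.add("app.myTask1", "worker-2"))
# True False

# One store per worker process: both adds succeed, so both workers "hold" the lock.
worker_a, worker_b = InMemoryCache(), InMemoryCache()
print(worker_a.add("app.myTask1", "worker-1"), worker_b.add("app.myTask1", "worker-2"))
# True True
```

The two separate instances stand in for two worker processes; the guard only makes `add` atomic within one process, which is exactly why a store shared across processes is needed.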

Another thing I'm unclear about is whether I need to do anything differently because I call myTask1 from my Flask route task1.

Here is the code I'm using.

```python
from flask import (Flask, render_template, flash, redirect,
                   url_for, session, logging, request, g, render_template_string, jsonify)
from flask_caching import Cache
from contextlib import contextmanager
from celery import Celery
from Flask_celery import make_celery
from celery.result import AsyncResult
from celery.utils.log import get_task_logger
from celery.five import monotonic
from flask_pymongo import PyMongo
from hashlib import md5
import pymongo
import time

app = Flask(__name__)
cache = Cache(app, config={'CACHE_TYPE': 'simple'})
app.config['SECRET_KEY'] = 'super secret key for me123456789987654321'

######################
# MONGODB SETUP
#####################
app.config['MONGO_HOST'] = 'localhost'
app.config['MONGO_DBNAME'] = 'celery-test-db'
app.config["MONGO_URI"] = 'mongodb://localhost:27017/celery-test-db'
mongo = PyMongo(app)

##############################
# CELERY ARGUMENTS
##############################
app.config['CELERY_BROKER_URL'] = 'amqp://localhost//'
app.config['CELERY_RESULT_BACKEND'] = 'mongodb://localhost:27017/celery-test-db'
app.config['CELERY_RESULT_BACKEND'] = 'mongodb'
app.config['CELERY_MONGODB_BACKEND_SETTINGS'] = {
    "host": "localhost",
    "port": 27017,
    "database": "celery-test-db",
    "taskmeta_collection": "celery_jobs",
}
app.config['CELERY_TASK_SERIALIZER'] = 'json'

celery = Celery('task', broker='mongodb://localhost:27017/jobs')
celery = make_celery(app)

LOCK_EXPIRE = 60 * 2  # Lock expires in 2 minutes


@contextmanager
def memcache_lock(lock_id, oid):
    timeout_at = monotonic() + LOCK_EXPIRE - 3
    # cache.add fails if the key already exists
    status = cache.add(lock_id, oid, LOCK_EXPIRE)
    try:
        yield status
    finally:
        # memcache delete is very slow, but we have to use it to take
        # advantage of using add() for atomic locking
        if monotonic() < timeout_at and status:
            # don't release the lock if we exceeded the timeout
            # to lessen the chance of releasing an expired lock
            # owned by someone else
            # also don't release the lock if we didn't acquire it
            cache.delete(lock_id)


@celery.task(bind=True, name='app.myTask1')
def myTask1(self):
    self.update_state(state='IN TASK')
    lock_id = self.name
    with memcache_lock(lock_id, self.app.oid) as acquired:
        if acquired:
            # do work if we got the lock
            print('acquired is {}'.format(acquired))
            self.update_state(state='DOING WORK')
            time.sleep(90)
            return 'result'
    # otherwise, the lock was already in use
    raise self.retry(countdown=60)  # redeliver message to the queue, so the work can be done later


@celery.task(bind=True, name='app.myTask2')
def myTask2(self):
    print('you are in task2')
    self.update_state(state='STARTING')
    time.sleep(120)
    print('task2 done')


@app.route('/', methods=['GET', 'POST'])
def index():
    return render_template('index.html')


@app.route('/task1', methods=['GET', 'POST'])
def task1():
    print('running task1')
    result = myTask1.delay()
    # get async task id
    taskResult = AsyncResult(result.task_id)
    # push async taskid into db collection job_task_id
    mongo.db.job_task_id.insert({'taskid': str(taskResult), 'TaskName': 'task1'})
    return render_template('task1.html')


@app.route('/task2', methods=['GET', 'POST'])
def task2():
    print('running task2')
    result = myTask2.delay()
    # get async task id
    taskResult = AsyncResult(result.task_id)
    # push async taskid into db collection job_task_id
    mongo.db.job_task_id.insert({'taskid': str(taskResult), 'TaskName': 'task2'})
    return render_template('task2.html')


@app.route('/status', methods=['GET', 'POST'])
def status():
    taskid_list = []
    task_state_list = []
    TaskName_list = []
    allAsyncData = mongo.db.job_task_id.find()
    for doc in allAsyncData:
        try:
            taskid_list.append(doc['taskid'])
        except:
            print('error with db connection in asyncJobStatus')
        TaskName_list.append(doc['TaskName'])
    # PASS TASK ID TO ASYNC RESULT TO GET TASK RESULT FOR THAT SPECIFIC TASK
    for item in taskid_list:
        try:
            task_state_list.append(myTask1.AsyncResult(item).state)
        except:
            task_state_list.append('UNKNOWN')
    return render_template('status.html', data_list=zip(task_state_list, TaskName_list))
```

Final working code:

```python
from flask import (Flask, render_template, flash, redirect,
                   url_for, session, logging, request, g, render_template_string, jsonify)
from flask_caching import Cache
from contextlib import contextmanager
from celery import Celery
from Flask_celery import make_celery
from celery.result import AsyncResult
from celery.utils.log import get_task_logger
from celery.five import monotonic
from flask_pymongo import PyMongo
from hashlib import md5
import pymongo
import time
import redis
from flask_redis import FlaskRedis

app = Flask(__name__)

# ADDING REDIS
redis_store = FlaskRedis(app)

# POINTING CACHE_TYPE TO REDIS
cache = Cache(app, config={'CACHE_TYPE': 'redis'})

app.config['SECRET_KEY'] = 'super secret key for me123456789987654321'

######################
# MONGODB SETUP
#####################
app.config['MONGO_HOST'] = 'localhost'
app.config['MONGO_DBNAME'] = 'celery-test-db'
app.config["MONGO_URI"] = 'mongodb://localhost:27017/celery-test-db'
mongo = PyMongo(app)

##############################
# CELERY ARGUMENTS
##############################
# CELERY USING REDIS
app.config['CELERY_BROKER_URL'] = 'redis://localhost:6379/0'
app.config['CELERY_RESULT_BACKEND'] = 'mongodb://localhost:27017/celery-test-db'
app.config['CELERY_RESULT_BACKEND'] = 'mongodb'
app.config['CELERY_MONGODB_BACKEND_SETTINGS'] = {
    "host": "localhost",
    "port": 27017,
    "database": "celery-test-db",
    "taskmeta_collection": "celery_jobs",
}
app.config['CELERY_TASK_SERIALIZER'] = 'json'

celery = Celery('task', broker='mongodb://localhost:27017/jobs')
celery = make_celery(app)

LOCK_EXPIRE = 60 * 2  # Lock expires in 2 minutes


@contextmanager
def memcache_lock(lock_id, oid):
    timeout_at = monotonic() + LOCK_EXPIRE - 3
    print('in memcache_lock and timeout_at is {}'.format(timeout_at))
    # cache.add fails if the key already exists
    status = cache.add(lock_id, oid, LOCK_EXPIRE)
    try:
        yield status
        print('memcache_lock and status is {}'.format(status))
    finally:
        # memcache delete is very slow, but we have to use it to take
        # advantage of using add() for atomic locking
        if monotonic() < timeout_at and status:
            # don't release the lock if we exceeded the timeout
            # to lessen the chance of releasing an expired lock
            # owned by someone else
            # also don't release the lock if we didn't acquire it
            cache.delete(lock_id)


@celery.task(bind=True, name='app.myTask1')
def myTask1(self):
    self.update_state(state='IN TASK')
    print('dir is {} '.format(dir(self)))
    lock_id = self.name
    print('lock_id is {}'.format(lock_id))
    with memcache_lock(lock_id, self.app.oid) as acquired:
        print('in memcache_lock and lock_id is {} self.app.oid is {} and acquired is {}'.format(lock_id, self.app.oid, acquired))
        if acquired:
            # do work if we got the lock
            print('acquired is {}'.format(acquired))
            self.update_state(state='DOING WORK')
            time.sleep(90)
            return 'result'
    # otherwise, the lock was already in use
    raise self.retry(countdown=60)  # redeliver message to the queue, so the work can be done later


@celery.task(bind=True, name='app.myTask2')
def myTask2(self):
    print('you are in task2')
    self.update_state(state='STARTING')
    time.sleep(120)
    print('task2 done')


@app.route('/', methods=['GET', 'POST'])
def index():
    return render_template('index.html')


@app.route('/task1', methods=['GET', 'POST'])
def task1():
    print('running task1')
    result = myTask1.delay()
    # get async task id
    taskResult = AsyncResult(result.task_id)
    # push async taskid into db collection job_task_id
    mongo.db.job_task_id.insert({'taskid': str(taskResult), 'TaskName': 'myTask1'})
    return render_template('task1.html')


@app.route('/task2', methods=['GET', 'POST'])
def task2():
    print('running task2')
    result = myTask2.delay()
    # get async task id
    taskResult = AsyncResult(result.task_id)
    # push async taskid into db collection job_task_id
    mongo.db.job_task_id.insert({'taskid': str(taskResult), 'TaskName': 'task2'})
    return render_template('task2.html')


@app.route('/status', methods=['GET', 'POST'])
def status():
    taskid_list = []
    task_state_list = []
    TaskName_list = []
    allAsyncData = mongo.db.job_task_id.find()
    for doc in allAsyncData:
        try:
            taskid_list.append(doc['taskid'])
        except:
            print('error with db connection in asyncJobStatus')
        TaskName_list.append(doc['TaskName'])
    # PASS TASK ID TO ASYNC RESULT TO GET TASK RESULT FOR THAT SPECIFIC TASK
    for item in taskid_list:
        try:
            task_state_list.append(myTask1.AsyncResult(item).state)
        except:
            task_state_list.append('UNKNOWN')
    return render_template('status.html', data_list=zip(task_state_list, TaskName_list))


if __name__ == '__main__':
    app.secret_key = 'super secret key for me123456789987654321'
    app.run(port=1234, host='localhost')
```
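The final version works because the lock key now lives in Redis, which every worker process shares. Below is a minimal sketch of the same lock written directly against redis-py instead of Flask-Caching, assuming a `redis.Redis` client: `set(name, value, nx=True, ex=seconds)` writes the key only if it does not exist and sets its expiry in the same command, which is the atomic add the Celery docs ask for. `redis_lock` and `FakeRedis` are hypothetical names; `FakeRedis` is only there so the snippet runs without a Redis server, and it ignores expiry.

```python
import time
from contextlib import contextmanager

LOCK_EXPIRE = 60 * 2  # seconds, same value as in the question


@contextmanager
def redis_lock(client, lock_id, oid, expire=LOCK_EXPIRE):
    """Hypothetical helper: hold lock_id in Redis for at most `expire` seconds.

    `client` is assumed to behave like redis.Redis: SET with nx=True writes the
    key only if it is absent and sets the expiry in the same atomic command.
    """
    timeout_at = time.monotonic() + expire - 3
    acquired = bool(client.set(lock_id, oid, nx=True, ex=expire))
    try:
        yield acquired
    finally:
        # Same rule as memcache_lock: only delete the key if we set it and it
        # should not have expired (and possibly been re-acquired) yet.
        if acquired and time.monotonic() < timeout_at:
            client.delete(lock_id)


class FakeRedis:
    """Hypothetical in-memory stand-in for redis.Redis; ignores expiry."""

    def __init__(self):
        self._data = {}

    def set(self, name, value, ex=None, nx=False):
        if nx and name in self._data:
            return None  # redis-py returns None when NX blocks the write
        self._data[name] = value
        return True

    def delete(self, name):
        return 1 if self._data.pop(name, None) is not None else 0


# With a real server: client = redis.Redis(host="localhost", port=6379, db=0)
client = FakeRedis()
with redis_lock(client, "app.myTask1", "worker-1") as first:
    with redis_lock(client, "app.myTask1", "worker-2") as second:
        print(first, second)  # True False
with redis_lock(client, "app.myTask1", "worker-2") as third:
    print(third)  # True
```

redis-py also ships its own lock helper (`client.lock(name, timeout=...)`), which may be worth a look before hand-rolling one.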

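Here is also a screenshot where you can see I ran myTask1 twice and myTask2 once. I now have the desired behavior for myTask1: it is run by a single worker, and if another worker tries to pick it up, that worker retries it according to the retries I defined.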
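A rough timeline for the retry behavior described above, under stated assumptions: the second myTask1 is picked up at the same moment the first one takes the lock, each blocked attempt fails instantly, queueing delays are zero, and `max_retries` is left at Celery's default of 3 (the code does not set it, so this is an assumption about the setup). The first task releases the lock after its 90 s of work, because 90 s falls inside the `LOCK_EXPIRE - 3` = 117 s window in which `memcache_lock` still deletes the key.

```python
COUNTDOWN = 60     # self.retry(countdown=60)
MAX_RETRIES = 3    # assumption: Celery's default Task.max_retries, not overridden
WORK_SECONDS = 90  # time.sleep(90) while the first task holds the lock

# Retry n runs roughly n * COUNTDOWN seconds after the first (blocked) attempt.
retry_times = [n * COUNTDOWN for n in range(1, MAX_RETRIES + 1)]

# The first retry that starts after the lock was released gets the lock.
first_success = next((t for t in retry_times if t >= WORK_SECONDS), None)

print(retry_times)    # [60, 120, 180]
print(first_success)  # 120
```

Under these assumptions the blocked task gets the lock on its second retry, at about t = 120 s. If the locked work ever outlasts the last retry (about 180 s here), the retries run out and Celery gives up with `MaxRetriesExceededError`, so setting `max_retries` explicitly (or `max_retries=None` for no limit) may be worth considering.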

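self is a string, and self.cache doesn't exist. This is only a guess, but maybe Cache.add should be called on an instance, something like Cache().add? When add is called, its first parameter is probably self, e.g. def add(self, lock_id, oid, lock_expire):, so with your current code, self would be lock_id? - jmunsch

… myTask1, it will just be queued and won't run until the lock is released. - Max Powers

… myTask1 is not in use, then I want it to run. If another worker is using myTask1, ideally I'd like the task to be queued but not run until the lock is removed. Hope that's clearer. - Max Powers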