I want to complete the assignments of Stanford's CS231n 2017 Convolutional Neural Networks course on my own.

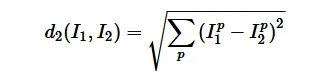

I'm trying to compute the L2 distance with NumPy using only matrix multiplication and two broadcast sums. The L2 distance is:

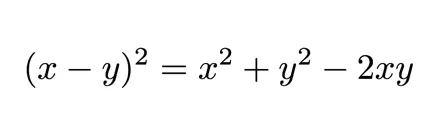

I think I can do it if I use this formula:
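
For readers who cannot see the images, here is a hedged reconstruction of the identity that the assignment HINT appears to point at. This is an assumption, since the formula image is not reproduced in the text. The symbols are chosen for illustration: $x_i$ is the i-th test row, $y_j$ is the j-th training row, and $D$ is the number of features.

```latex
\begin{aligned}
\lVert x_i - y_j \rVert_2^2
  &= \sum_{k=1}^{D} \left( x_{ik} - y_{jk} \right)^2 \\
  &= \sum_{k=1}^{D} x_{ik}^2 \;-\; 2 \sum_{k=1}^{D} x_{ik}\, y_{jk} \;+\; \sum_{k=1}^{D} y_{jk}^2 \\
  &= \lVert x_i \rVert_2^2 \;-\; 2\, x_i^{\top} y_j \;+\; \lVert y_j \rVert_2^2
\end{aligned}
```

Under this reading, the middle term for all pairs at once is the matrix product of the test matrix with the transposed training matrix, and the two norm terms are the two broadcast sums. The L2 distance is the square root of the result.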

The following code shows three ways to compute the L2 distance. If I compare the output of compute_distances_two_loops with the output of compute_distances_one_loop, they are equal. But if I compare the output of compute_distances_two_loops with the output of compute_distances_no_loops, which implements the L2 distance using only matrix multiplication and broadcast sums, they are different.

    def compute_distances_two_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a nested loop over both the training data and the
        test data.

        Inputs:
        - X: A numpy array of shape (num_test, D) containing test data.

        Returns:
        - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
          is the Euclidean distance between the ith test point and the jth training
          point.
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in xrange(num_test):
            for j in xrange(num_train):
                #####################################################################
                # TODO:                                                             #
                # Compute the l2 distance between the ith test point and the jth    #
                # training point, and store the result in dists[i, j]. You should   #
                # not use a loop over dimension.                                    #
                #####################################################################
                #dists[i, j] = np.sqrt(np.sum((X[i, :] - self.X_train[j, :]) ** 2))
                dists[i, j] = np.sqrt(np.sum(np.square(X[i, :] - self.X_train[j, :])))
                #####################################################################
                #                       END OF YOUR CODE                            #
                #####################################################################
        return dists

    def compute_distances_one_loop(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a single loop over the test data.

        Input / Output: Same as compute_distances_two_loops
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in xrange(num_test):
            #######################################################################
            # TODO:                                                               #
            # Compute the l2 distance between the ith test point and all training #
            # points, and store the result in dists[i, :].                        #
            #######################################################################
            dists[i, :] = np.sqrt(np.sum(np.square(self.X_train - X[i, :]), axis = 1))
            #######################################################################
            #                         END OF YOUR CODE                            #
            #######################################################################
        print(dists.shape)
        return dists

    def compute_distances_no_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using no explicit loops.

        Input / Output: Same as compute_distances_two_loops
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        #########################################################################
        # TODO:                                                                 #
        # Compute the l2 distance between all test points and all training      #
        # points without using any explicit loops, and store the result in      #
        # dists.                                                                #
        #                                                                       #
        # You should implement this function using only basic array operations; #
        # in particular you should not use functions from scipy.                #
        #                                                                       #
        # HINT: Try to formulate the l2 distance using matrix multiplication    #
        #       and two broadcast sums.                                         #
        #########################################################################
        dists = np.sqrt(-2 * np.dot(X, self.X_train.T) +
                        np.sum(np.square(self.X_train), axis=1) +
                        np.sum(np.square(X), axis=1)[:, np.newaxis])
        print(dists.shape)
        #########################################################################
        #                         END OF YOUR CODE                              #
        #########################################################################
        return dists
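
As a standalone sanity check, the sketch below compares a looped and a vectorized version outside the classifier class. The function names `l2_two_loops` and `l2_no_loops`, the random float64 test data, the cast to float64, and the clipping before the square root are all assumptions added for illustration. They are not part of the assignment code, and the sketch does not claim to identify the cause of the mismatch reported here.

```python
import numpy as np


def l2_two_loops(X, X_train):
    """Reference implementation: explicit loop over every (test, train) pair."""
    dists = np.zeros((X.shape[0], X_train.shape[0]))
    for i in range(X.shape[0]):
        for j in range(X_train.shape[0]):
            dists[i, j] = np.sqrt(np.sum(np.square(X[i] - X_train[j])))
    return dists


def l2_no_loops(X, X_train):
    """Vectorized version: one matrix product plus two broadcast sums."""
    # Assumption: cast to float64 so integer inputs cannot overflow when squared.
    X = np.asarray(X, dtype=np.float64)
    X_train = np.asarray(X_train, dtype=np.float64)
    test_sq = np.sum(np.square(X), axis=1)[:, np.newaxis]  # shape (num_test, 1)
    train_sq = np.sum(np.square(X_train), axis=1)          # shape (num_train,)
    cross = X.dot(X_train.T)                               # shape (num_test, num_train)
    sq_dists = test_sq - 2.0 * cross + train_sq
    # Rounding can leave tiny negative values; clip them so sqrt does not return NaN.
    return np.sqrt(np.maximum(sq_dists, 0.0))


# Hypothetical small data set: 5 test points and 7 training points with 8 features.
rng = np.random.default_rng(0)
X_test = rng.standard_normal((5, 8))
X_train = rng.standard_normal((7, 8))

same = np.allclose(l2_two_loops(X_test, X_train), l2_no_loops(X_test, X_train))
print(same)
```

If the two versions agree on small random data like this but disagree in the notebook, the difference is more likely to come from the inputs or from which `dists` array is being compared than from the formula itself.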

You can find the full testable code here.

Do you know what I'm doing wrong in compute_distances_no_loops, or anywhere else?

UPDATE:

The code that throws the error message is:

    dists_two = classifier.compute_distances_no_loops(X_test)

    # check that the distance matrix agrees with the one we computed before:
    difference = np.linalg.norm(dists - dists_two, ord='fro')
    print('Difference was: %f' % (difference, ))
    if difference < 0.001:
        print('Good! The distance matrices are the same')
    else:
        print('Uh-oh! The distance matrices are different')

And the error message:

    Difference was: 372100.327569
    Uh-oh! The distance matrices are different
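
A difference this large is unlikely to be rounding error alone. One hedged way to narrow it down is to look at more than the single Frobenius norm: check the shapes, count NaN entries, and count how many entries actually disagree. The helper `describe_mismatch` below is hypothetical and written only for illustration, as are its tolerance and the toy matrices.

```python
import numpy as np


def describe_mismatch(a, b, tol=1e-3):
    """Summarize how two distance matrices differ (hypothetical helper)."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    report = {
        "same_shape": a.shape == b.shape,
        "nan_in_a": int(np.isnan(a).sum()),
        "nan_in_b": int(np.isnan(b).sum()),
    }
    if report["same_shape"]:
        diff = np.abs(a - b)
        # Same Frobenius norm as in the notebook check above.
        report["frobenius"] = float(np.linalg.norm(a - b, ord="fro"))
        # Largest disagreement, ignoring NaN entries.
        report["max_abs_diff"] = float(np.nanmax(diff))
        # Entries that differ by more than tol; NaN entries count as bad too.
        report["num_bad_entries"] = int(np.sum(~(diff <= tol)))
    return report


# Toy matrices that differ in exactly one entry.
a = np.array([[3.0, 4.0], [0.0, 5.0]])
b = np.array([[3.0, 4.0], [0.0, 6.0]])
report = describe_mismatch(a, b)
print(report)
```

In the notebook, the equivalent call would take `dists` and `dists_two` as arguments. It is also worth confirming that `dists` still holds the output of the two-loop version at the point where the check runs.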

[...] something like X.dot(self.X_train.T), could that be the reason? - sharathnatraj

[...] np.allclose(compute_distances_no_loops(Y, Z), compute_distances_one_loop(Y, Z)), it returns True. - Dani Mesejo

[...] after the compute_distances_no_loops method, I get the error. - VansFannel