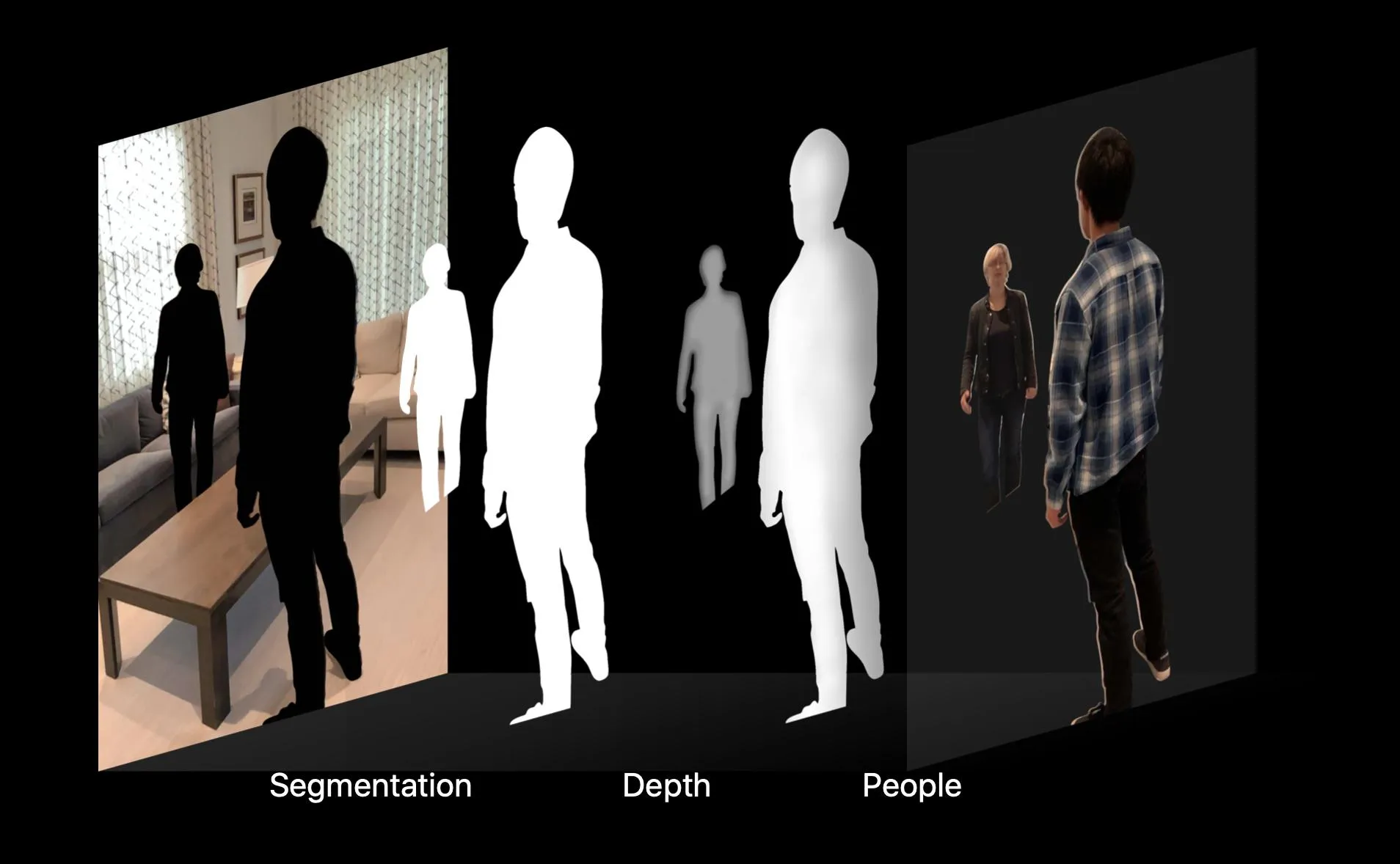

I am working with Apple's sample project related to using `ARMatteGenerator` to generate an `MTLTexture` that can be used as an occlusion matte in the people occlusion technique.

I would like to determine how I could run the generated matte through a `CIFilter`. In my code, I am "filtering" the matte like this:

```swift
func updateMatteTextures(commandBuffer: MTLCommandBuffer) {
    guard let currentFrame = session.currentFrame else {
        return
    }

    var targetImage: CIImage?

    // Generate the person matte and the dilated depth for the current frame.
    alphaTexture = matteGenerator.generateMatte(from: currentFrame, commandBuffer: commandBuffer)
    dilatedDepthTexture = matteGenerator.generateDilatedDepth(from: currentFrame, commandBuffer: commandBuffer)

    // Wrap the matte in a CIImage and run it through the filter, with red as the input color.
    targetImage = CIImage(mtlTexture: alphaTexture!, options: nil)
    monoAlphaCIFilter?.setValue(targetImage!, forKey: kCIInputImageKey)
    monoAlphaCIFilter?.setValue(CIColor.red, forKey: kCIInputColorKey)
    targetImage = (monoAlphaCIFilter?.outputImage)!

    // Render the filtered image back into the same matte texture.
    let drawingBounds = CGRect(origin: .zero, size: CGSize(width: alphaTexture!.width, height: alphaTexture!.height))
    context.render(targetImage!, to: alphaTexture!, commandBuffer: commandBuffer, bounds: drawingBounds, colorSpace: CGColorSpaceCreateDeviceRGB())
}
```

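When I composite the matte texture with the background, the filter effect is not applied to the matte. This is how the textures are being composited: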

```swift
func compositeImagesWithEncoder(renderEncoder: MTLRenderCommandEncoder) {
    guard let textureY = capturedImageTextureY, let textureCbCr = capturedImageTextureCbCr else {
        return
    }

    // Push a debug group allowing us to identify render commands in the GPU Frame Capture tool
    renderEncoder.pushDebugGroup("CompositePass")

    // Set render command encoder state
    renderEncoder.setCullMode(.none)
    renderEncoder.setRenderPipelineState(compositePipelineState)
    renderEncoder.setDepthStencilState(compositeDepthState)

    // Setup plane vertex buffers
    renderEncoder.setVertexBuffer(imagePlaneVertexBuffer, offset: 0, index: 0)
    renderEncoder.setVertexBuffer(scenePlaneVertexBuffer, offset: 0, index: 1)

    // Setup textures for the composite fragment shader
    renderEncoder.setFragmentBuffer(sharedUniformBuffer, offset: sharedUniformBufferOffset, index: Int(kBufferIndexSharedUniforms.rawValue))
    renderEncoder.setFragmentTexture(CVMetalTextureGetTexture(textureY), index: 0)
    renderEncoder.setFragmentTexture(CVMetalTextureGetTexture(textureCbCr), index: 1)
    renderEncoder.setFragmentTexture(sceneColorTexture, index: 2)
    renderEncoder.setFragmentTexture(sceneDepthTexture, index: 3)
    renderEncoder.setFragmentTexture(alphaTexture, index: 4)
    renderEncoder.setFragmentTexture(dilatedDepthTexture, index: 5)

    // Draw final quad to display
    renderEncoder.drawPrimitives(type: .triangleStrip, vertexStart: 0, vertexCount: 4)
    renderEncoder.popDebugGroup()
}
```
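The composite pass only binds `alphaTexture` at fragment texture index 4; what the fragment shader does with it decides whether a color filter on the matte can ever become visible. Below is a CPU-side Swift model of that per-pixel step, written under an assumption: that, as in Apple's sample `Shaders.metal`, the composite fragment shader samples only the red channel of the matte and uses it as a scalar weight, roughly `mix(sceneColor, cameraColor, alpha)`. `RGBA`, `mix` and `composite` here are hypothetical helpers for illustration, not ARKit, Metal or Core Image API.

```swift
// Hypothetical RGBA pixel type, for illustration only (not ARKit/Metal API).
struct RGBA: Equatable {
    var r: Float, g: Float, b: Float, a: Float
}

// Per-channel linear interpolation, mirroring Metal's mix(x, y, t) = x + (y - x) * t.
func mix(_ x: RGBA, _ y: RGBA, _ t: Float) -> RGBA {
    RGBA(r: x.r + (y.r - x.r) * t,
         g: x.g + (y.g - x.g) * t,
         b: x.b + (y.b - x.b) * t,
         a: x.a + (y.a - x.a) * t)
}

// Assumed model of the composite step: only the matte's red channel is read,
// and it is used as the weight between virtual content and the camera image.
func composite(scene: RGBA, camera: RGBA, matte: RGBA) -> RGBA {
    let alpha = matte.r  // green, blue and alpha of the matte are ignored
    return mix(scene, camera, alpha)
}

let scene = RGBA(r: 0.0, g: 0.0, b: 1.0, a: 1.0)   // blue virtual content
let camera = RGBA(r: 0.5, g: 0.4, b: 0.3, a: 1.0)  // camera pixel on the person

let grayMatte = RGBA(r: 1.0, g: 1.0, b: 1.0, a: 1.0)  // unfiltered matte: person fully present
let redMatte = RGBA(r: 1.0, g: 0.0, b: 0.0, a: 1.0)   // the same matte "tinted" red

// Both mattes carry the same red value, so the composited pixel is identical.
print(composite(scene: scene, camera: camera, matte: grayMatte) ==
      composite(scene: scene, camera: camera, matte: redMatte))  // true
```

If that assumption about the shader holds, filtering the matte can at most change where and how strongly the camera image replaces the virtual content, not what color the person ends up with.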

How can I apply a `CIFilter` only to the `alphaTexture` generated by `ARMatteGenerator`?
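One alternative, in line with the closing comment's point that filtering the matte is not the ideal approach, is to leave the matte untouched and apply the color effect in the composite step itself, using the matte only as the blend weight. Note that this swaps in a different technique and does not use `CIFilter` at all. The Swift function below is a hypothetical CPU-side model of that per-pixel math (`tintedComposite`, `tint` and `tintAmount` are illustrative names); in the sample it would correspond to a few lines in the composite fragment shader, which is not shown here.

```swift
// Hypothetical RGBA pixel type, for illustration only (not ARKit/Metal API).
struct RGBA: Equatable {
    var r: Float, g: Float, b: Float, a: Float
}

// Per-channel linear interpolation, mirroring Metal's mix(x, y, t) = x + (y - x) * t.
func mix(_ x: RGBA, _ y: RGBA, _ t: Float) -> RGBA {
    RGBA(r: x.r + (y.r - x.r) * t,
         g: x.g + (y.g - x.g) * t,
         b: x.b + (y.b - x.b) * t,
         a: x.a + (y.a - x.a) * t)
}

// Hypothetical tinted composite: the tint is applied to the camera color of the
// occluding person, while the matte value (alpha) still only decides where the
// person is shown over the virtual content.
func tintedComposite(scene: RGBA, camera: RGBA, alpha: Float,
                     tint: RGBA, tintAmount: Float) -> RGBA {
    let tintedCamera = mix(camera, tint, tintAmount)
    return mix(scene, tintedCamera, alpha)
}

let scene = RGBA(r: 0, g: 0, b: 1, a: 1)
let camera = RGBA(r: 0.5, g: 0.5, b: 0.5, a: 1)
let red = RGBA(r: 1, g: 0, b: 0, a: 1)

// Inside the person (alpha = 1) the camera color is pushed halfway toward red.
print(tintedComposite(scene: scene, camera: camera, alpha: 1, tint: red, tintAmount: 0.5))
// Outside the person (alpha = 0) the virtual content is left untouched.
print(tintedComposite(scene: scene, camera: camera, alpha: 0, tint: red, tintAmount: 0.5))
```

Keeping the matte as a pure weight means any color treatment is decided in one place, and the occlusion edges produced by ARMatteGenerator are not altered by the effect.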

… Shaders.metal file, but seeing this, I now realize that filtering the matte is not the ideal approach. Thanks! - ZbadhabitZ