2个回答

4

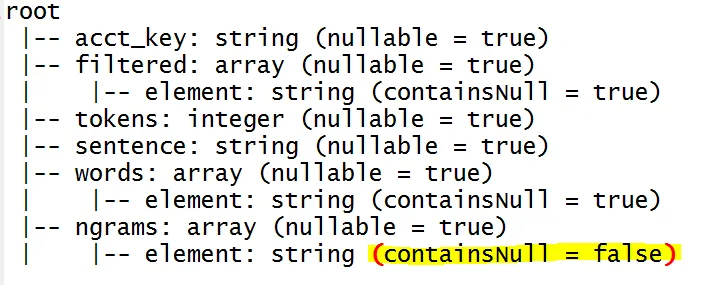

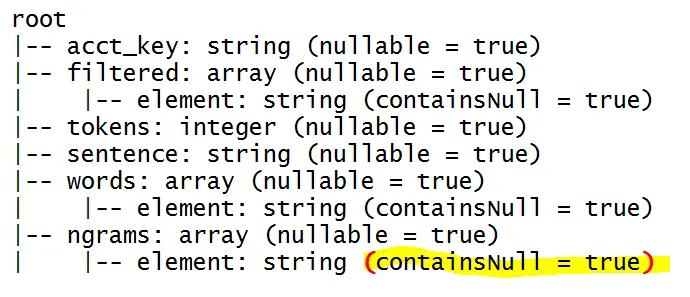

您需要用新的架构替换列。ArrayType采用两个参数:elementType和containsNull。

from pyspark.sql.types import *

from pyspark.sql.functions import udf

x = [("a",["b","c","d","e"]),("g",["h","h","d","e"])]

schema = StructType([StructField("key",StringType(), nullable=True),

StructField("values", ArrayType(StringType(), containsNull=False))])

df = spark.createDataFrame(x,schema = schema)

df.printSchema()

new_schema = ArrayType(StringType(), containsNull=True)

udf_foo = udf(lambda x:x, new_schema)

df.withColumn("values",udf_foo("values")).printSchema()

root

|-- key: string (nullable = true)

|-- values: array (nullable = true)

| |-- element: string (containsNull = false)

root

|-- key: string (nullable = true)

|-- values: array (nullable = true)

| |-- element: string (containsNull = true)

- pauli

0

这里有一个有用的例子,您可以更改每个列的模式,假设您希望具有相同的类型

from pyspark.sql.types import Row

from pyspark.sql.functions import *

df = sc.parallelize([

Row(isbn=1, count=1, average=10.6666666),

Row(isbn=2, count=1, average=11.1111111)

]).toDF()

df.printSchema()

df=df.select(*[col(x).cast('float') for x in df.columns]).printSchema()

输出:

root

|-- average: double (nullable = true)

|-- count: long (nullable = true)

|-- isbn: long (nullable = true)

root

|-- average: float (nullable = true)

|-- count: float (nullable = true)

|-- isbn: float (nullable = true)

- Flufylobster

网页内容由stack overflow 提供, 点击上面的可以查看英文原文,

原文链接

原文链接