I am using PyTorch Lightning to train my models (on GPU devices, using DDP), and TensorBoard is the default logger used by Lightning.

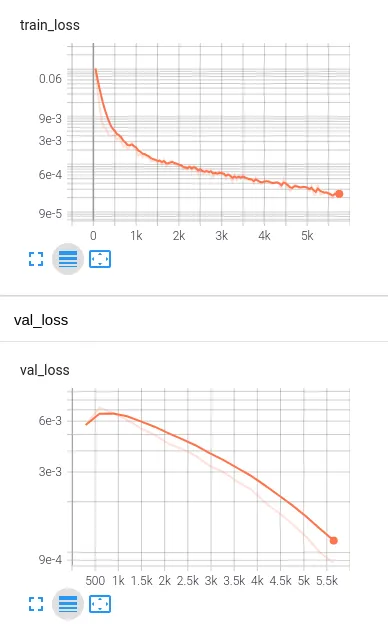



Using PyTorch 1.8.1, PyTorch Lightning 1.2.6, TensorBoard 2.4.1.

My code is set up to log the training loss on each training step and the validation loss on each validation step.

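```python
import pytorch_lightning as pl
import torch.nn.functional as F


class MyLightningModel(pl.LightningModule):

    def training_step(self, batch, batch_idx):
        x, labels = batch
        out = self(x)
        loss = F.mse_loss(out, labels)
        # Log the training loss on every training step.
        self.log("train_loss", loss)
        return loss

    def validation_step(self, batch, batch_idx):
        x, labels = batch
        out = self(x)
        loss = F.mse_loss(out, labels)
        # Log the validation loss on every validation step.
        self.log("val_loss", loss)
        return loss
```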

TensorBoard能够在“SCALERS”标签页中正确绘制

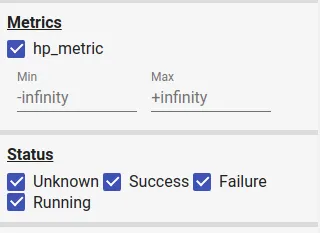

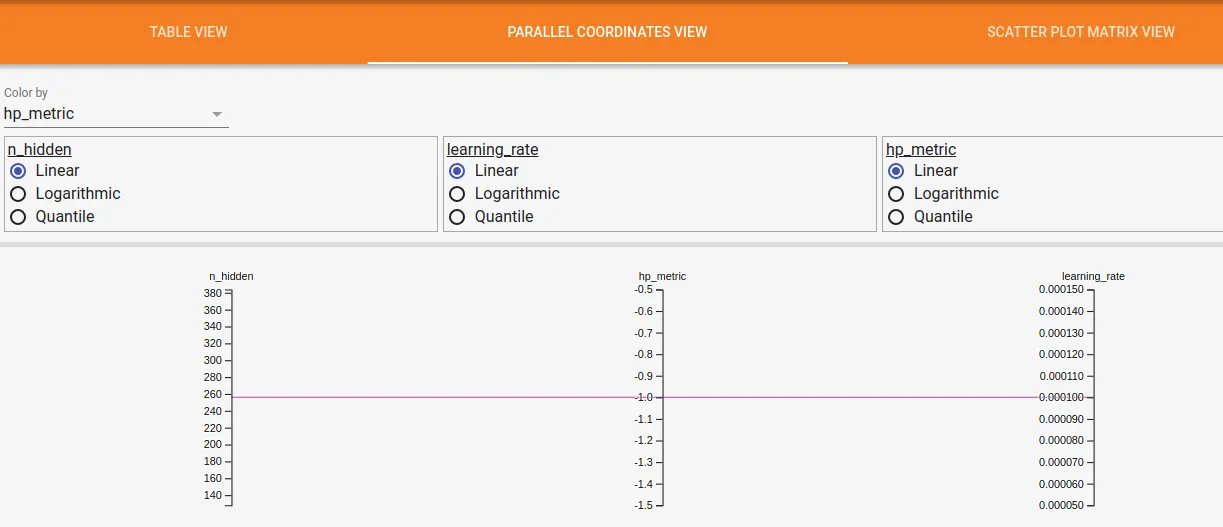

train_loss和val_loss图表。然而,在左侧边栏的“HPARAMS”标签页中,只有hp_metric在“Metrics”下可见。


How can we add `train_loss` and `val_loss` to the Metrics section? That way, we could use `val_loss` in the PARALLEL COORDINATES VIEW instead of `hp_metric`.

Image showing `hp_metric` and no `val_loss`:
