I have several problems with the tqdm progress bar in PyTorch Lightning:

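- When I run training in a terminal, the progress bars overwrite each other. At the end of a training epoch, a validation progress bar is printed below the training bar, but once it finishes, the progress bar of the next training epoch is printed over the one from the previous epoch, so the losses from earlier epochs can no longer be seen.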

INFO:root: Name Type Params

0 l1 Linear 7 K

Epoch 2: 56%|████████████▊ | 2093/3750 [00:05<00:03, 525.47batch/s, batch_nb=1874, loss=0.714, training_loss=0.4, v_nb=51]

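- The progress bar wobbles from left to right, because the number of digits after the decimal point in some of the losses keeps changing.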

- When I run in PyCharm, the validation progress bar is not printed; instead, I get this:

INFO:root: Name Type Params

0 l1 Linear 7 K

Epoch 1: 50%|█████ | 1875/3750 [00:05<00:05, 322.34batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 50%|█████ | 1879/3750 [00:05<00:05, 319.41batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 52%|█████▏ | 1942/3750 [00:05<00:04, 374.05batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 53%|█████▎ | 2005/3750 [00:05<00:04, 425.01batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 55%|█████▌ | 2068/3750 [00:05<00:03, 470.56batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 57%|█████▋ | 2131/3750 [00:05<00:03, 507.69batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 59%|█████▊ | 2194/3750 [00:06<00:02, 538.19batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 60%|██████ | 2257/3750 [00:06<00:02, 561.20batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 62%|██████▏ | 2320/3750 [00:06<00:02, 579.22batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 64%|██████▎ | 2383/3750 [00:06<00:02, 591.58batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 65%|██████▌ | 2445/3750 [00:06<00:02, 599.77batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 67%|██████▋ | 2507/3750 [00:06<00:02, 605.00batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 69%|██████▊ | 2569/3750 [00:06<00:01, 607.04batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

Epoch 1: 70%|███████ | 2633/3750 [00:06<00:01, 613.98batch/s, batch_nb=1874, loss=1.534, training_loss=1.72, v_nb=49]

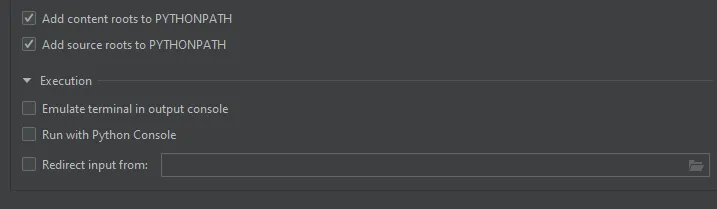

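I would like to know whether these issues can be solved, or otherwise how I can disable the progress bar and just print some log details to the screen instead.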
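To illustrate what I mean by "log details", here is a minimal sketch of the kind of output I would be happy with. It uses only the standard library and is not tied to any Lightning API; the helper `format_epoch_log`, the logger name, and the numbers are all hypothetical. The fixed field widths are there so the line does not shift when the digits of a loss change.

```python
import logging

logger = logging.getLogger("train_log")  # hypothetical logger name


def format_epoch_log(epoch, train_loss, val_loss):
    """Return one fixed-width log line for a finished epoch."""
    # Fixed widths keep the columns aligned even when the digits change.
    return (
        f"epoch {epoch:3d} | "
        f"train_loss {train_loss:8.4f} | "
        f"val_loss {val_loss:8.4f}"
    )


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    # Made-up loss values, for illustration only.
    for epoch, (train_loss, val_loss) in enumerate([(1.72, 1.534), (0.4, 0.714)], start=1):
        logger.info(format_epoch_log(epoch, train_loss, val_loss))
```

One line per epoch like this would stay in the scrollback, so earlier losses remain visible.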

trainer.fit, and does not apply to trainer.test. - Thomas Ahle