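All of the above solutions rely on CIImage, but a UIImage usually has a CGImage, not a CIImage, as its underlying image. That means you have to convert the underlying image to a CIImage at the start and back to a CGImage at the end (if you don't do it yourself, constructing a UIImage from the CIImage effectively does it for you).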

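That may be acceptable for many use cases, but converting between CGImage and CIImage isn't free: it can be slow, and it can cause a large memory spike during the conversion.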
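To make that round trip explicit, here is a minimal sketch that wraps a CGImage in a CIImage, applies the same `CIPhotoEffectNoir` filter as the playground example, and renders the result back into a CGImage with a `CIContext`. The helper name `roundTripGrayscale` is hypothetical; the Core Image calls are standard API, but this is an illustration, not a tuned implementation.

```swift
import CoreGraphics
import CoreImage

/// Hypothetical helper: CGImage -> CIImage -> (filter) -> CGImage.
/// Returns nil if the filter can't be created or the render fails.
func roundTripGrayscale(_ cgImage: CGImage, context: CIContext = CIContext()) -> CGImage? {
    // Wrap the CGImage so Core Image can work with it.
    let input = CIImage(cgImage: cgImage)

    // Build the filter; Core Image only evaluates it when the image is rendered.
    guard let output = CIFilter(name: "CIPhotoEffectNoir",
                                parameters: [kCIInputImageKey: input])?.outputImage else {
        return nil
    }

    // Render back to a CGImage: this is where the pixels are actually
    // processed and a new output bitmap is allocated.
    return context.createCGImage(output, from: output.extent)
}
```

Creating a `CIContext` is relatively expensive, so if you convert many images, pass one shared context instead of relying on the default argument.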

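So I'd like to mention a completely different solution that avoids converting the image back and forth altogether. It uses Accelerate, which Apple describes very well here.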

Here is a playground example that demonstrates both approaches:

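```swift
import UIKit
import CoreImage
import Accelerate

extension CIImage {
    /// Core Image approach: applies the CIPhotoEffectNoir filter.
    /// Returns nil if the filter can't be created.
    func toGrayscale() -> CIImage? {
        CIFilter(name: "CIPhotoEffectNoir", parameters: [kCIInputImageKey: self])?.outputImage
    }
}

extension CGImage {
    /// Accelerate (vImage) approach: converts the image to 8-bit grayscale
    /// without going through CIImage. Returns `self` if any step fails.
    func toGrayscale() -> CGImage {
        // Decode into an explicit 8-bit RGBX layout so that the coefficients
        // below line up with the red, green and blue channels, whatever the
        // byte order of the source image.
        guard let rgbFormat = vImage_CGImageFormat(
                  bitsPerComponent: 8,
                  bitsPerPixel: 32,
                  colorSpace: CGColorSpaceCreateDeviceRGB(),
                  bitmapInfo: CGBitmapInfo(rawValue: CGImageAlphaInfo.noneSkipLast.rawValue),
                  renderingIntent: .defaultIntent),
              var sourceBuffer = try? vImage_Buffer(cgImage: self, format: rgbFormat) else {
            return self
        }
        // vImage buffers own their memory, which must be freed explicitly.
        defer { sourceBuffer.free() }

        guard var destinationBuffer = try? vImage_Buffer(
                  width: Int(sourceBuffer.width),
                  height: Int(sourceBuffer.height),
                  bitsPerPixel: 8) else {
            return self
        }
        defer { destinationBuffer.free() }

        // Rec. 709 luma coefficients, scaled to fixed point by `divisor`.
        let redCoefficient: Float = 0.2126
        let greenCoefficient: Float = 0.7152
        let blueCoefficient: Float = 0.0722

        let divisor: Int32 = 0x1000
        let fDivisor = Float(divisor)

        // One coefficient per source channel (R, G, B, X); the padding
        // channel gets 0 so it doesn't contribute.
        var coefficientsMatrix: [Int16] = [
            Int16(redCoefficient * fDivisor),
            Int16(greenCoefficient * fDivisor),
            Int16(blueCoefficient * fDivisor),
            0
        ]

        let preBias: [Int16] = [0, 0, 0, 0]
        let postBias: Int32 = 0

        // Each destination pixel is the dot product of the source pixel
        // with the coefficients, divided by `divisor`.
        let error = vImageMatrixMultiply_ARGB8888ToPlanar8(
            &sourceBuffer,
            &destinationBuffer,
            &coefficientsMatrix,
            divisor,
            preBias,
            postBias,
            vImage_Flags(kvImageNoFlags))
        guard error == kvImageNoError else {
            return self
        }

        // Wrap the single-channel, 8-bit result in a gray CGImage.
        // createCGImage(format:) copies the pixels, so the deferred
        // free() calls above are safe.
        guard let monoFormat = vImage_CGImageFormat(
                  bitsPerComponent: 8,
                  bitsPerPixel: 8,
                  colorSpace: CGColorSpaceCreateDeviceGray(),
                  bitmapInfo: CGBitmapInfo(rawValue: CGImageAlphaInfo.none.rawValue),
                  renderingIntent: .defaultIntent),
              let result = try? destinationBuffer.createCGImage(format: monoFormat) else {
            return self
        }

        return result
    }
}
```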
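To see what the coefficients matrix does, here is a pure-Swift sketch of the per-pixel arithmetic, based on my reading of the documented `vImageMatrixMultiply_ARGB8888ToPlanar8` formula (dot product of the source channels with the coefficients, divided by `divisor`; the biases are zero here, as in the playground). `fixedPointLuma` is a hypothetical helper, and it uses truncating integer division plus clamping, which may not match vImage's exact rounding.

```swift
/// Hypothetical helper mirroring the per-pixel arithmetic of
/// vImageMatrixMultiply_ARGB8888ToPlanar8 with the playground's settings
/// (zero pre- and post-bias). Rounding may differ from vImage itself.
func fixedPointLuma(r: UInt8, g: UInt8, b: UInt8) -> UInt8 {
    let divisor: Int32 = 0x1000
    let fDivisor = Float(divisor)

    // Same construction as in toGrayscale(); Int16(_:) truncates toward
    // zero, giving 870, 2929 and 295.
    let coefficients: [Int32] = [
        Int32(Int16(Float(0.2126) * fDivisor)),
        Int32(Int16(Float(0.7152) * fDivisor)),
        Int32(Int16(Float(0.0722) * fDivisor)),
    ]
    let channels: [Int32] = [Int32(r), Int32(g), Int32(b)]

    // Dot product of the pixel with the coefficients.
    var sum: Int32 = 0
    for (channel, weight) in zip(channels, coefficients) {
        sum += channel * weight
    }

    // Scale back down by the divisor and clamp into the 8-bit range.
    return UInt8(clamping: sum / divisor)
}

print(fixedPointLuma(r: 255, g: 255, b: 255)) // 254
print(fixedPointLuma(r: 255, g: 0, b: 0))     // 54
```

Because the truncated coefficients add up to 4094 rather than 4096, pure white comes out as 254 in this sketch; whether the real vImage call behaves the same depends on how it rounds, which I haven't checked.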

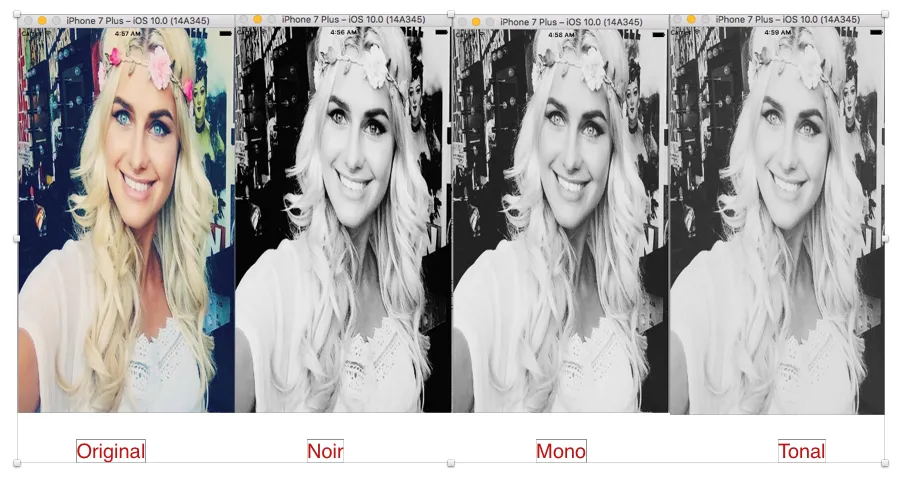

I used the full-size version of this image for testing.

```swift
// Simple wall-clock timing: each call prints the time (in seconds)
// elapsed since the previous call.
let start = Date()
var prev = -start.timeIntervalSinceNow

func info(_ id: String) {
    let now = -start.timeIntervalSinceNow
    print("\(id)\t: \(now - prev)")
    prev = now
}

info("started")

let original = UIImage(named: "Golden_Gate_Bridge_2021.jpg")!
info("loaded UIImage(named)")

// CGImage + Accelerate approach
let cgImage = original.cgImage!
info("original.cgImage")

let cgImageToGreyscale = cgImage.toGrayscale()
info("cgImage.toGrayscale()")

let uiImageFromCGImage = UIImage(cgImage: cgImageToGreyscale, scale: original.scale, orientation: original.imageOrientation)
info("UIImage(cgImage)")

// CIImage + Core Image approach
let ciImage = CIImage(image: original)!
info("CIImage(image: original)!")

let ciImageToGreyscale = ciImage.toGrayscale()!
info("ciImage.toGrayscale()")

let uiImageFromCIImage = UIImage(ciImage: ciImageToGreyscale, scale: original.scale, orientation: original.imageOrientation)
info("UIImage(ciImage)")
```
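`Date` measures wall-clock time, which can jump if the system clock is adjusted; for small benchmarks like this, a monotonic clock is a safer choice. A minimal sketch using `DispatchTime`, where `measure` is a hypothetical helper name, not part of the original playground:

```swift
import Dispatch

/// Hypothetical helper: runs `work`, prints how long it took in seconds
/// (measured with the monotonic DispatchTime clock), and returns its result.
@discardableResult
func measure<T>(_ label: String, _ work: () throws -> T) rethrows -> (result: T, seconds: Double) {
    let begin = DispatchTime.now().uptimeNanoseconds
    let result = try work()
    let end = DispatchTime.now().uptimeNanoseconds
    let seconds = Double(end - begin) / 1_000_000_000
    print("\(label)\t: \(seconds)")
    return (result, seconds)
}
```

In the playground it would be used as, for example, `let gray = measure("cgImage.toGrayscale()") { cgImage.toGrayscale() }.result`.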

Results (in seconds):

The CGImage approach took about 1 second in total:

```
original.cgImage : 0.5257829427719116
cgImage.toGrayscale() : 0.46222901344299316
UIImage(cgImage) : 0.1819549798965454
```

The CIImage approach took about 8 seconds in total:

```
CIImage(image: original)! : 0.6055610179901123
ciImage.toGrayscale() : 4.969912052154541
UIImage(ciImage) : 2.395193934440613
```

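When saved as JPEG, the image created via CGImage was about 3 times smaller than the one created via CIImage (5 MB vs. 17 MB). Both look good. Here are small versions that fit within SO's size limits: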