我正在尝试将XML数据加载到Hive中,但是我遇到了一个错误:

java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive在处理行{"xmldata":""}时出现运行时错误

我使用的xml文件是:

<?xml version="1.0" encoding="UTF-8"?>

<catalog>

<book>

<id>11</id>

<genre>Computer</genre>

<price>44</price>

</book>

<book>

<id>44</id>

<genre>Fantasy</genre>

<price>5</price>

</book>

</catalog>

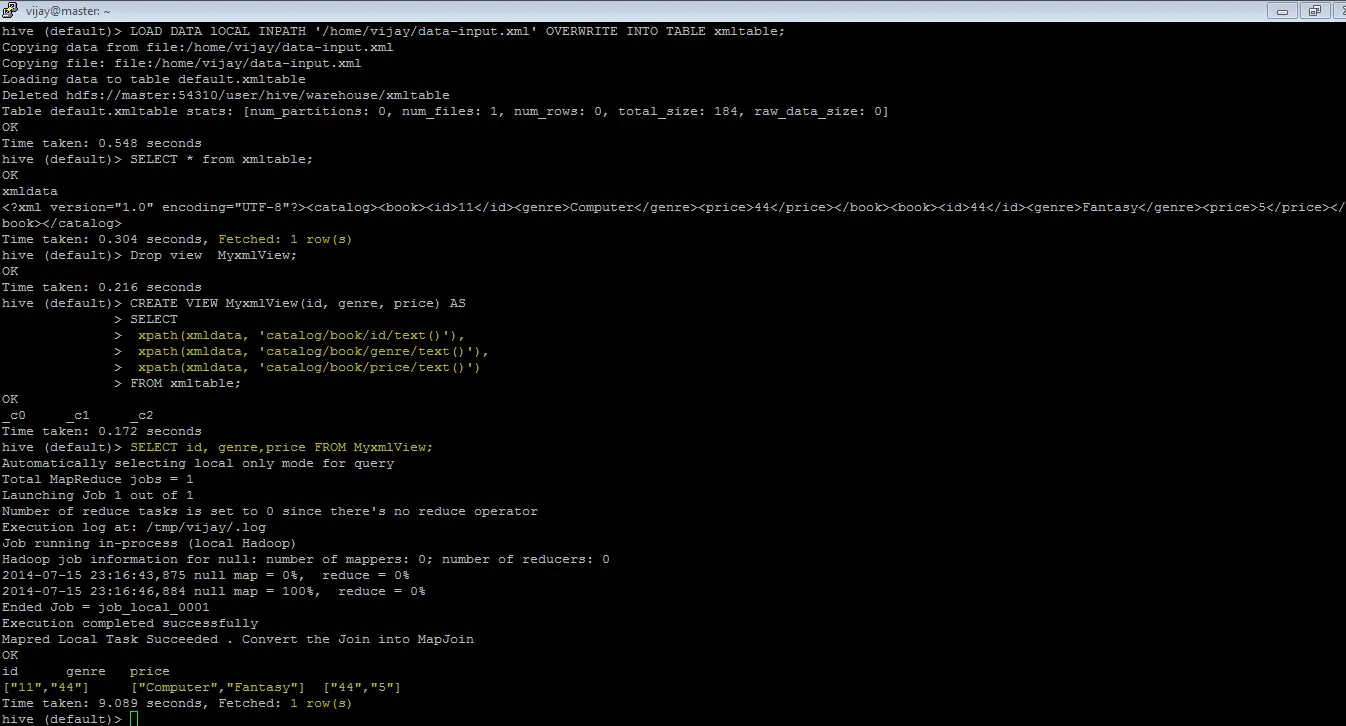

The hive query i have used is :

1) Create TABLE xmltable(xmldata string) STORED AS TEXTFILE;

LOAD DATA lOCAL INPATH '/home/user/xmlfile.xml' OVERWRITE INTO TABLE xmltable;

2) CREATE VIEW xmlview (id,genre,price)

AS SELECT

xpath(xmldata, '/catalog[1]/book[1]/id'),

xpath(xmldata, '/catalog[1]/book[1]/genre'),

xpath(xmldata, '/catalog[1]/book[1]/price')

FROM xmltable;

3) CREATE TABLE xmlfinal AS SELECT * FROM xmlview;

4) SELECT * FROM xmlfinal WHERE id ='11

直到第二个查询都很好,但当我执行第三个查询时,它会给我一个错误:

以下是错误信息:

java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row {"xmldata":"<?xml version=\"1.0\" encoding=\"UTF-8\"?>"}

at org.apache.hadoop.hive.ql.exec.ExecMapper.map(ExecMapper.java:159)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:50)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:417)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:332)

at org.apache.hadoop.mapred.Child$4.run(Child.java:268)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1438)

at org.apache.hadoop.mapred.Child.main(Child.java:262)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row {"xmldata":"<?xml version=\"1.0\" encoding=\"UTF-8\"?>"}

at org.apache.hadoop.hive.ql.exec.MapOperator.process(MapOperator.java:675)

at org.apache.hadoop.hive.ql.exec

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.MapRedTask

所以出了什么问题?我也在使用正确的XML文件。谢谢,Shree。