我正在尝试正确使用苹果在2017年WWDC上演示的示例Core ML模型。我正在使用GoogLeNet尝试对图像进行分类(请参见Apple机器学习页面)。该模型以CVPixelBuffer作为输入。我有一张名为imageSample.jpg的图片用于此演示。我的代码如下:

var sample = UIImage(named: "imageSample")?.cgImage

let bufferThree = getCVPixelBuffer(sample!)

let model = GoogLeNetPlaces()

guard let output = try? model.prediction(input: GoogLeNetPlacesInput.init(sceneImage: bufferThree!)) else {

fatalError("Unexpected runtime error.")

}

print(output.sceneLabel)

我总是在输出中遇到意外的运行时错误,而不是图像分类。 我用于转换图像的代码如下:

func getCVPixelBuffer(_ image: CGImage) -> CVPixelBuffer? {

let imageWidth = Int(image.width)

let imageHeight = Int(image.height)

let attributes : [NSObject:AnyObject] = [

kCVPixelBufferCGImageCompatibilityKey : true as AnyObject,

kCVPixelBufferCGBitmapContextCompatibilityKey : true as AnyObject

]

var pxbuffer: CVPixelBuffer? = nil

CVPixelBufferCreate(kCFAllocatorDefault,

imageWidth,

imageHeight,

kCVPixelFormatType_32ARGB,

attributes as CFDictionary?,

&pxbuffer)

if let _pxbuffer = pxbuffer {

let flags = CVPixelBufferLockFlags(rawValue: 0)

CVPixelBufferLockBaseAddress(_pxbuffer, flags)

let pxdata = CVPixelBufferGetBaseAddress(_pxbuffer)

let rgbColorSpace = CGColorSpaceCreateDeviceRGB();

let context = CGContext(data: pxdata,

width: imageWidth,

height: imageHeight,

bitsPerComponent: 8,

bytesPerRow: CVPixelBufferGetBytesPerRow(_pxbuffer),

space: rgbColorSpace,

bitmapInfo: CGImageAlphaInfo.premultipliedFirst.rawValue)

if let _context = context {

_context.draw(image, in: CGRect.init(x: 0, y: 0, width: imageWidth, height: imageHeight))

}

else {

CVPixelBufferUnlockBaseAddress(_pxbuffer, flags);

return nil

}

CVPixelBufferUnlockBaseAddress(_pxbuffer, flags);

return _pxbuffer;

}

return nil

}

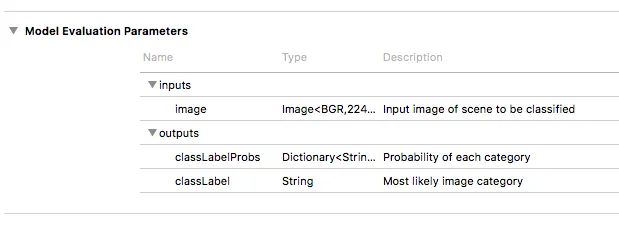

我从之前的StackOverflow帖子中获取了这段代码(最后一个答案在这里)。我知道这段代码可能不正确,但是我不知道该如何自己编写。我相信这是包含错误的部分。模型需要以下类型的输入:Image<RGB,224,224>