Since there are roughly 30 to 100 columns, let's add a few more columns to the DataFrame so the example generalizes better.

from pyspark.sql.functions import col, when

df = sc.parallelize([(1,"foo","val","baz","gun","can","baz","buz","oof"),

(2,"bar","baz","baz","baz","got","pet","stu","got"),

(3,"baz","buz","pun","iam","you","omg","sic","baz")]).toDF(["x","y","z","a","b","c","d","e","f"])

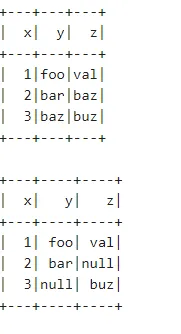

df.show()

+---+---+---+---+---+---+---+---+---+
|  x|  y|  z|  a|  b|  c|  d|  e|  f|
+---+---+---+---+---+---+---+---+---+
|  1|foo|val|baz|gun|can|baz|buz|oof|
|  2|bar|baz|baz|baz|got|pet|stu|got|
|  3|baz|buz|pun|iam|you|omg|sic|baz|
+---+---+---+---+---+---+---+---+---+

Suppose we want to replace baz with null in every column except x and a. A list comprehension picks out the columns that need the replacement.

all_column_names = df.columns

print(all_column_names)

['x', 'y', 'z', 'a', 'b', 'c', 'd', 'e', 'f']

columns_to_remove = ['x','a']

columns_for_replacement = [i for i in all_column_names if i not in columns_to_remove]

print(columns_for_replacement)

['y', 'z', 'b', 'c', 'd', 'e', 'f']
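With 30 to 100 columns, a misspelled name in the exclusion list would pass silently and the column would be modified anyway. The following is a minimal sketch of the same selection wrapped in a helper that checks the excluded names first. The helper name `columns_except` is hypothetical and is not part of PySpark; it works on a plain list of names such as `df.columns`.

```python
def columns_except(all_columns, excluded):
    """Return all_columns without the excluded names, keeping the original order."""
    excluded_set = set(excluded)
    # Fail early if an excluded name does not exist (for example, a typo).
    unknown = excluded_set - set(all_columns)
    if unknown:
        raise ValueError(f"unknown column(s): {sorted(unknown)}")
    return [name for name in all_columns if name not in excluded_set]


# Same column names as df.columns in the example above.
all_column_names = ['x', 'y', 'z', 'a', 'b', 'c', 'd', 'e', 'f']
columns_for_replacement = columns_except(all_column_names, ['x', 'a'])
print(columns_for_replacement)
# ['y', 'z', 'b', 'c', 'd', 'e', 'f']
```

Using a set for the membership test also keeps the lookup cheap when the exclusion list grows.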

Finally, do the replacement with when(), which in effect works like an if statement (similar to CASE WHEN in SQL).

# Doing the replacement on all the requisite columns

for i in columns_for_replacement:
    df = df.withColumn(i, when(col(i) == 'baz', None).otherwise(col(i)))

df.show()

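+---+----+----+---+----+---+----+---+----+
|  x|   y|   z|  a|   b|  c|   d|  e|   f|
+---+----+----+---+----+---+----+---+----+
|  1| foo| val|baz| gun|can|null|buz| oof|
|  2| bar|null|baz|null|got| pet|stu| got|
|  3|null| buz|pun| iam|you| omg|sic|null|
+---+----+----+---+----+---+----+---+----+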

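There is no need to create a UDF and define a function to replace text when the replacement can be done with an ordinary if-else clause. UDFs are generally expensive and should be avoided whenever possible.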