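I am trying to use libsvm, following the example that trains an SVM on the heart_scale data shipped with the software. I want to use a chi2 kernel that I precompute myself. The classification rate on the training data drops to 24%. I am fairly sure I compute the kernel correctly, but I must be doing something wrong. The code is below. Can you see any mistakes? Any help would be greatly appreciated.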

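% Read in the data:
[heart_scale_label, heart_scale_inst] = libsvmread('heart_scale');
train_data = heart_scale_inst(1:150,:);
train_label = heart_scale_label(1:150,:);
% Assumption: the remaining rows form the test set (this definition was missing from the snippet).
test_data = heart_scale_inst(151:end,:);

% I read somewhere that the kernel should not be sparse, so convert to full matrices.
ttrain = full(train_data)';
ttest = full(test_data)';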

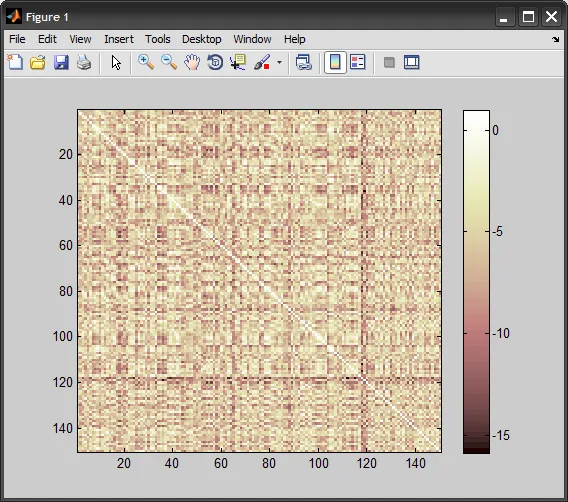

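% Precompute the kernel on the training data (rows are samples).
precKernel = chi2_custom(ttrain', ttrain');
% With -t 4 (precomputed kernel), the first column holds the sample serial numbers.
model_precomputed = svmtrain2(train_label, [(1:150)', precKernel], '-t 4');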

This is how the kernel is precomputed:

function res = chi2_custom(x, y)
% Pairwise chi2 values between the rows of x and the rows of y.
a = size(x);
b = size(y);
res = zeros(a(1,1), b(1,1));
for i = 1:a(1,1)
    for j = 1:b(1,1)
        resHelper = chi2_ireneHelper(x(i,:), y(j,:));
        res(i,j) = resHelper;
    end
end

function resHelper = chi2_ireneHelper(x, y)
% Sum over features of (x - y).^2 ./ (x + y); eps avoids division by exactly zero.
a = (x - y).^2;
b = (x + y);
resHelper = sum(a ./ (b + eps));
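
For reference, here is a minimal, self-contained sketch of a sanity check on a matrix built with the same formula as chi2_ireneHelper. The toy matrix X and all variable names are made up for illustration and are not taken from heart_scale. The check only inspects basic properties (symmetry, diagonal, smallest eigenvalue), and it may or may not explain the accuracy drop.

```matlab
% Hypothetical toy data (NOT heart_scale): 4 samples, 3 non-negative features.
% Row 4 duplicates row 1 on purpose.
X = [0.2 0.5 0.3;
     0.1 0.1 0.8;
     0.6 0.3 0.1;
     0.2 0.5 0.3];

n = size(X, 1);
D = zeros(n, n);
for i = 1:n
    % Compare row i against every row at once; bsxfun also works on older MATLAB releases.
    diffSq  = bsxfun(@minus, X, X(i,:)).^2;
    sums    = bsxfun(@plus,  X, X(i,:));
    D(:, i) = sum(diffSq ./ (sums + eps), 2);   % same formula as chi2_ireneHelper
end

maxAsym = max(max(abs(D - D')));    % 0 would mean perfectly symmetric
maxDiag = max(abs(diag(D)));        % each diagonal term is (x - x).^2 ./ (...) = 0
minEig  = min(eig((D + D') / 2));   % a negative value means D is not positive semidefinite

fprintf('max asymmetry: %g\n', maxAsym);
fprintf('max |diagonal|: %g\n', maxDiag);
fprintf('smallest eigenvalue: %g\n', minEig);
```

Because every diagonal term is (x - x).^2 = 0, a matrix built this way has a zero diagonal, so its eigenvalues sum to zero and at least one of them is negative unless the whole matrix is zero. The same three checks could be run on precKernel itself.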

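Using a different SVM implementation (vlfeat), I get a classification rate of about 90% on the training data (yes, I am testing on the training data, just to see what is going on). So I am fairly sure the libsvm result is wrong.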