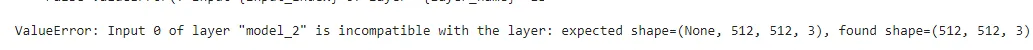

我正在训练一个二元分类的Unet分割模型。数据集已在tensorflow数据管道中加载。图像形状为(512, 512, 3),掩码形状为(512, 512, 1)。模型期望输入形状为(512, 512, 3),但我遇到了以下错误。

“model”层的输入0与该层不兼容:期望形状=(None, 512, 512, 3),找到形状=(512, 512, 3)。

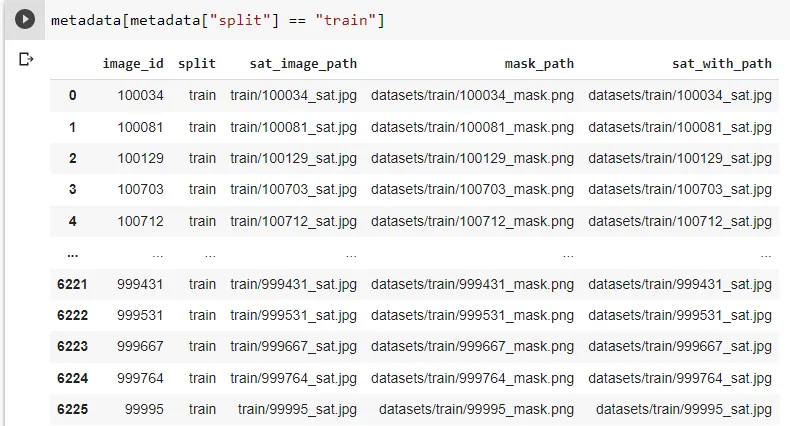

这是metadata数据帧中的图像。

训练图像的解析函数

解析测试图像的函数

这是metadata数据帧中的图像。

随机抽样索引以选择训练和验证集

num_samples = train_metadata.shape[0]

train_indices = np.random.choice(range(num_samples), int(num_samples * 0.8), replace=False)

valid_indices = list(set(range(num_samples)) - set(train_indices))

train_samples = train_metadata.iloc[train_indices, ]

valid_samples = train_metadata.iloc[valid_indices, ]

尺寸

IMG_WIDTH = 512

IMG_HEIGHT = 512

IMG_CHANNELS = 3

训练图像的解析函数

def parse_function_train_images(image_path):

image_path = image_path

mask_path = tf.strings.regex_replace(image_path, "sat", "mask")

mask_path = tf.strings.regex_replace(mask_path, "jpg", "png")

image = tf.io.read_file(image_path)

image = tf.image.decode_jpeg(image, channels=3)

image = tf.image.convert_image_dtype(image, tf.uint8)

image = tf.image.resize(image, (IMG_WIDTH, IMG_HEIGHT))

#image = tf.expand_dims(image, axis=0)

mask = tf.io.read_file(mask_path)

mask = tf.image.decode_png(mask, channels=1)

mask = tf.image.convert_image_dtype(mask, tf.uint8)

mask = tf.image.resize(mask, (IMG_WIDTH, IMG_HEIGHT))

#mask = tf.where(mask == 255, np.dtype("uint8").type(0), mask)

return image, mask

解析测试图像的函数

def parse_function_test_images(image_path):

image = tf.io.read_file(image_path)

image = tf.image.decode_jpeg(image, channels=3)

image = tf.image.convert_image_dtype(image, tf.uint8)

image = tf.image.resize(image, (IMG_WIDTH, IMG_HEIGHT))

#image = tf.expand_dims(image, axis=0)

return image

加载数据集

ds = tf.data.Dataset.from_tensor_slices(train_samples["sat_with_path"].values)

train_dataset = ds.map(parse_function_train_images)

validation_ds = tf.data.Dataset.from_tensor_slices(valid_samples["sat_with_path"].values)

validation_dataset = validation_ds.map(parse_function_train_images)

test_ds = tf.data.Dataset.from_tensor_slices(test_metadata["sat_with_path"].values)

test_dataset = test_ds.map(parse_function_test_images)

标准化图像

def normalize(image, mask):

image = tf.cast(image, tf.float32) / 255.0

mask = tf.cast(mask, tf.float32) / 255.0

return image, mask

def test_normalize(image):

image = tf.cast(image, tf.float32) / 255.0

return image

TRAIN_LENGTH = len(train_dataset)

BATCH_SIZE = 64

BUFFER_SIZE = 1000

STEPS_PER_EPOCH = TRAIN_LENGTH // BATCH_SIZE

映射数据集

train_images = train_dataset.map(normalize, num_parallel_calls=tf.data.AUTOTUNE)

validation_images = validation_dataset.map(normalize, num_parallel_calls=tf.data.AUTOTUNE)

test_images = test_dataset.map(test_normalize, num_parallel_calls=tf.data.AUTOTUNE)

增强层

class Augment(tf.keras.layers.Layer):

def __init__(self, seed=42):

super().__init__()

self.augment_inputs = tf.keras.layers.RandomFlip(mode="horizontal", seed=seed)

self.augment_labels = tf.keras.layers.RandomFlip(mode="horizontal", seed=seed)

def call(self, inputs, labels):

inputs = self.augment_inputs(inputs)

inputs = tf.expand_dims(inputs, axis=0)

labels = self.augment_labels(labels)

return inputs, labels

train_batches = (

train_images

.cache()

.shuffle(BUFFER_SIZE)

.batch(BATCH_SIZE)

.repeat()

.map(Augment())

.prefetch(buffer_size=tf.data.AUTOTUNE)

)

validation_batches = (

validation_images

.cache()

.shuffle(BUFFER_SIZE)

.batch(BATCH_SIZE)

.repeat()

.map(Augment())

.prefetch(buffer_size=tf.data.AUTOTUNE)

)

test_batches = test_images.batch(BATCH_SIZE)

Unet模型

inputs = tf.keras.layers.Input((IMG_WIDTH, IMG_HEIGHT, IMG_CHANNELS))

c1 = tf.keras.layers.Conv2D(16, (3, 3), activation='relu', kernel_initializer="he_normal", padding="same")(inputs)

c1 = tf.keras.layers.Dropout(0.1)(c1)

c1 = tf.keras.layers.Conv2D(16, (3, 3), activation='relu', kernel_initializer="he_normal", padding="same")(c1)

p1 = tf.keras.layers.MaxPooling2D((2, 2))(c1)

c2 = tf.keras.layers.Conv2D(32, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(p1)

c2 = tf.keras.layers.Dropout(0.1)(c2)

c2 = tf.keras.layers.Conv2D(32, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(c2)

p2 = tf.keras.layers.MaxPooling2D((2, 2))(c2)

c3 = tf.keras.layers.Conv2D(64, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(p2)

c3 = tf.keras.layers.Dropout(0.2)(c3)

c3 = tf.keras.layers.Conv2D(64, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(c3)

p3 = tf.keras.layers.MaxPooling2D((2, 2))(c3)

c4 = tf.keras.layers.Conv2D(128, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(p3)

c4 = tf.keras.layers.Dropout(0.2)(c4)

c4 = tf.keras.layers.Conv2D(128, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(c4)

p4 = tf.keras.layers.MaxPooling2D((2, 2))(c4)

c5 = tf.keras.layers.Conv2D(256, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(p4)

c5 = tf.keras.layers.Dropout(0.3)(c5)

c5 = tf.keras.layers.Conv2D(256, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(c5)

u6 = tf.keras.layers.Conv2DTranspose(128, (2, 2), strides=(2, 2), padding="same")(c5)

u6 = tf.keras.layers.concatenate([u6, c4])

c6 = tf.keras.layers.Conv2D(128, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(u6)

c6 = tf.keras.layers.Dropout(0.2)(c6)

c6 = tf.keras.layers.Conv2D(128, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(c6)

u7 = tf.keras.layers.Conv2DTranspose(64, (2, 2), strides=(2, 2), padding="same")(c6)

u7 = tf.keras.layers.concatenate([u7, c3])

c7 = tf.keras.layers.Conv2D(64, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(u7)

c7 = tf.keras.layers.Dropout(0.2)(c7)

c7 = tf.keras.layers.Conv2D(64, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(c7)

u8 = tf.keras.layers.Conv2DTranspose(32, (2, 2), strides=(2, 2), padding="same")(c7)

u8 = tf.keras.layers.concatenate([u8, c2])

c8 = tf.keras.layers.Conv2D(32, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(u8)

c8 = tf.keras.layers.Dropout(0.1)(c8)

c8 = tf.keras.layers.Conv2D(32, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(c8)

u9 = tf.keras.layers.Conv2DTranspose(16, (2, 2), strides=(2, 2), padding="same")(c8)

u9 = tf.keras.layers.concatenate([u9, c1], axis=3)

c9 = tf.keras.layers.Conv2D(16, (3, 3), strides=(2, 2), padding="same")(u9)

c9 = tf.keras.layers.Dropout(0.1)(c9)

c9 = tf.keras.layers.Conv2D(16, (3, 3), activation="relu", kernel_initializer="he_normal", padding="same")(c9)

outputs = tf.keras.layers.Conv2D(1, (1, 1), activation="sigmoid")(c9)

model = tf.keras.Model(inputs=[inputs], outputs=[outputs])

model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

model.summary()

checkpointer = tf.keras.callbacks.ModelCheckpoint('model_for_nuclie.h5', verbose=1, save_best_only=True)

callbacks = [

tf.keras.callbacks.EarlyStopping(patience=2, monitor="val_loss"),

tf.keras.callbacks.TensorBoard(log_dir="logs"),

checkpointer

]

将模型拟合到数据中

results = model.fit(train_images, validation_data=validation_images, \

batch_size=16, epochs=25, callbacks=callbacks

)

错误:

train_batches中的一个 batch 的形状吗? - AloneTogetherrepeat()?移除它。 - AloneTogether