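Just for fun, I tried the following:

- Pick two points of the point dataset and compute the transformation that maps the first two pattern points onto those two points.

- Test whether all transformed pattern points can be found in the dataset.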
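The first step can be sketched compactly with complex numbers. This is only an illustration under my own assumptions, not the formulation the code in this answer uses (that one works with an explicit scale and angle): `Point`, `Similarity` and `fromTwoPairs` are hypothetical names, a 2D point is stored as `x + i*y`, and the two pattern points must be distinct.

```cpp
#include <complex>

// Hypothetical helper types for this sketch: a point is x + i*y.
using Point = std::complex<double>;

// Similarity transform z -> a*z + b, where |a| is the scale,
// arg(a) the rotation angle and b the translation.
struct Similarity {
    Point a;
    Point b;
    Point apply(Point z) const { return a * z + b; }
    double scale() const { return std::abs(a); }
    double angleRad() const { return std::arg(a); }
};

// Transform that maps p1 -> q1 and p2 -> q2. Requires p1 != p2.
Similarity fromTwoPairs(Point p1, Point p2, Point q1, Point q2) {
    const Point a = (q2 - q1) / (p2 - p1);  // rotation and scale in one number
    return {a, q1 - a * p1};                // translation that pins p1 onto q1
}
```

Calling `fromTwoPairs` with the first two pattern points and a pair of data points gives one candidate transformation; applying it to the remaining pattern points yields the positions to look up in the dataset.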

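This approach is very naive and has a complexity of O(m*n²) for n data points and a single pattern of m points: there are n² ordered pairs of data points, and each candidate transformation needs m lookups, assuming a lookup takes constant time. Depending on the nearest-neighbour search method you use, the complexity can be higher. So you have to consider whether this is efficient enough for your application.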
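To illustrate how the lookup method affects the total cost, here is a minimal sketch of a brute-force verification step. It is an assumption for illustration only (`Pt` and `allPointsPresent` are hypothetical names); the code in this answer uses a rasterized lookup image instead. With this linear scan every candidate costs O(m*n) rather than O(m), so the whole search would grow to O(m*n³).

```cpp
#include <vector>

struct Pt { double x, y; };  // hypothetical point type for this sketch

// True if every transformed pattern point has some data point within maxDist.
// Brute force: m pattern points times n data points per call.
bool allPointsPresent(const std::vector<Pt>& transformedPattern,
                      const std::vector<Pt>& data, double maxDist) {
    const double maxDistSq = maxDist * maxDist;
    for (const Pt& p : transformedPattern) {
        bool hit = false;
        for (const Pt& d : data) {
            const double dx = p.x - d.x;
            const double dy = p.y - d.y;
            if (dx * dx + dy * dy <= maxDistSq) { hit = true; break; }
        }
        if (!hit) return false;  // one missing point rejects the candidate
    }
    return true;
}
```

A spatial index such as a grid or k-d tree would sit between the two extremes, at the price of more code.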

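Possible improvements include heuristics that avoid testing all n² combinations of points, but for that you need background information such as the maximum scaling of the pattern.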
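One such heuristic could look like the sketch below, assuming you know in advance that the pattern scale lies in some range `[minScale, maxScale]`. The names `Pt` and `candidatePairs` are hypothetical. The function still visits all n² pairs, but only pairs whose distance is compatible with the allowed scale are kept, so the more expensive m-point verification runs on far fewer candidates.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct Pt { double x, y; };  // hypothetical point type for this sketch

// Ordered index pairs (j, i) whose distance implies a pattern scale within
// [minScale, maxScale]. referenceLength is the distance between the first
// two pattern points.
std::vector<std::pair<std::size_t, std::size_t>> candidatePairs(
        const std::vector<Pt>& data, double referenceLength,
        double minScale, double maxScale) {
    std::vector<std::pair<std::size_t, std::size_t>> pairs;
    const double minLen = minScale * referenceLength;
    const double maxLen = maxScale * referenceLength;
    for (std::size_t j = 0; j < data.size(); ++j) {
        for (std::size_t i = 0; i < data.size(); ++i) {
            if (i == j) continue;
            const double len = std::hypot(data[i].x - data[j].x,
                                          data[i].y - data[j].y);
            if (len >= minLen && len <= maxLen) pairs.emplace_back(j, i);
        }
    }
    return pairs;
}
```

For the test data generated in the code of this answer, where the scale factor is drawn from 10 to 39, a range of `[10, 40]` would be a reasonable choice.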

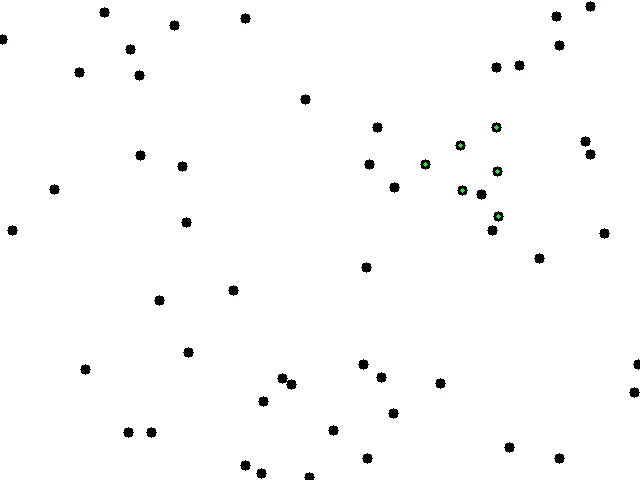

For evaluation, I first created a pattern:

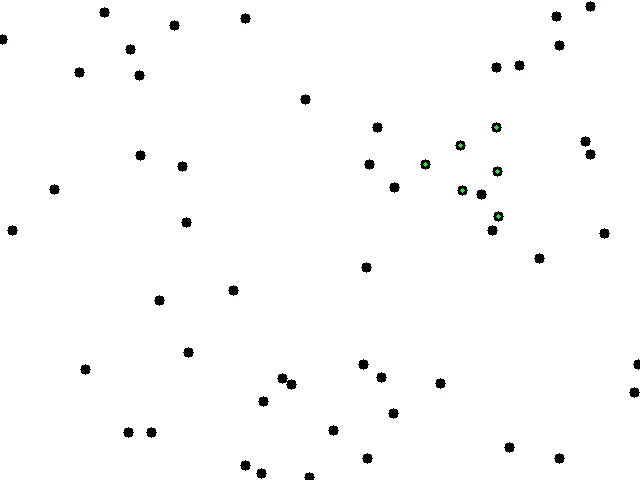

Then I created random points and added the pattern somewhere among them (scaled, rotated and translated):

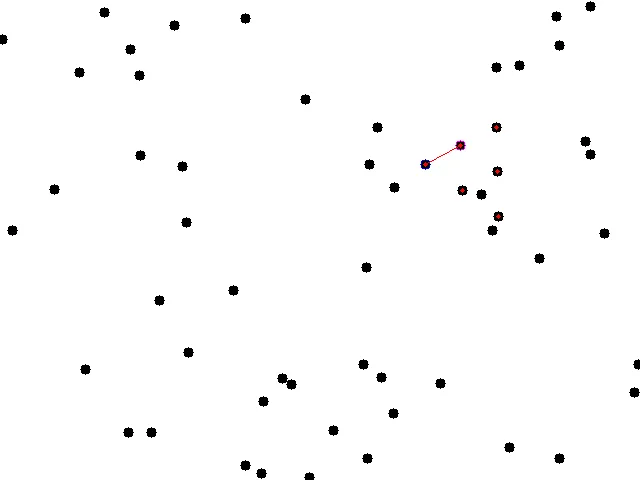

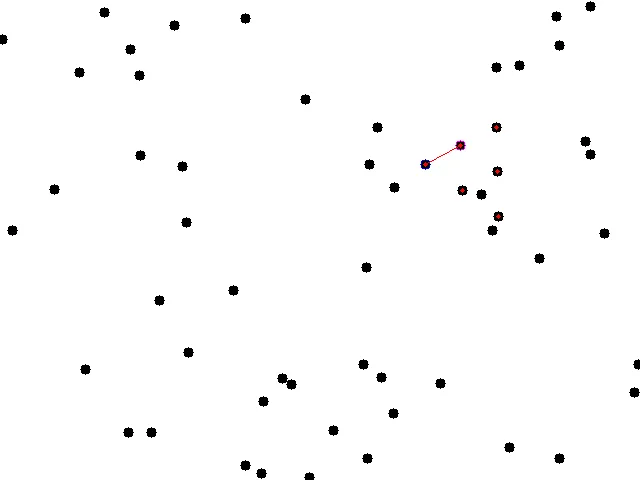

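After some computation, the method recognizes the pattern. The red line shows the two points that were chosen for computing the transformation.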

Here is the code:

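    #include <cmath>
    #include <cstdlib>
    #include <ctime>
    #include <iostream>
    #include <vector>
    #include <opencv2/opencv.hpp>

    // Draw each point as a dot: a circle of radius 0 whose line thickness is `size`.
    void drawPoints(cv::Mat & image, const std::vector<cv::Point2f> & points, cv::Scalar color = cv::Scalar(255,255,255), float size=10)
    {
        for(unsigned int i=0; i<points.size(); ++i)
        {
            cv::circle(image, points[i], 0, color, static_cast<int>(size));
        }
    }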

    // Apply a 2x3 affine matrix (CV_32FC1) to a copy of the points and return the result.
    std::vector<cv::Point2f> applyTransformation(std::vector<cv::Point2f> points, cv::Mat transformation)
    {
        for(unsigned int i=0; i<points.size(); ++i)
        {
            const cv::Point2f tmp = points[i];
            points[i].x = tmp.x * transformation.at<float>(0,0) + tmp.y * transformation.at<float>(0,1) + transformation.at<float>(0,2);
            points[i].y = tmp.x * transformation.at<float>(1,0) + tmp.y * transformation.at<float>(1,1) + transformation.at<float>(1,2);
        }
        return points;
    }

    const float PI = 3.14159265359f;

    // Build the 2x3 matrix [ s*cos(r)  s*sin(r)  tx ; -s*sin(r)  s*cos(r)  ty ].
    // s: scale, r: rotation in degrees, tx/ty: translation.
    cv::Mat composeSimilarityTransformation(float s, float r, float tx, float ty)
    {
        cv::Mat transformation = cv::Mat::zeros(2,3,CV_32FC1);

        float rRad = PI*r/180.0f;
        transformation.at<float>(0,0) = s*cosf(rRad);
        transformation.at<float>(0,1) = s*sinf(rRad);
        transformation.at<float>(1,0) = -s*sinf(rRad);
        transformation.at<float>(1,1) = s*cosf(rRad);
        transformation.at<float>(0,2) = tx;
        transformation.at<float>(1,2) = ty;

        return transformation;
    }

    // Create nRandomDots random points and add one scaled, rotated and translated copy of the pattern.
    std::vector<cv::Point2f> createPointSet(cv::Size2i imageSize, std::vector<cv::Point2f> pointPattern, unsigned int nRandomDots = 50)
    {
        // subtract the center of gravity so that the pattern rotates around its own center
        cv::Point2f centerOfGravity(0,0);
        for(unsigned int i=0; i<pointPattern.size(); ++i)
        {
            centerOfGravity.x += pointPattern[i].x;
            centerOfGravity.y += pointPattern[i].y;
        }
        centerOfGravity.x /= (float)pointPattern.size();
        centerOfGravity.y /= (float)pointPattern.size();
        pointPattern = applyTransformation(pointPattern, composeSimilarityTransformation(1,0,-centerOfGravity.x, -centerOfGravity.y));

        // create the random points
        std::vector<cv::Point2f> pointset;
        srand(static_cast<unsigned int>(time(NULL)));
        for(unsigned int i=0; i<nRandomDots; ++i)
        {
            pointset.push_back( cv::Point2f(rand()%imageSize.width, rand()%imageSize.height) );
        }

        cv::Mat image = cv::Mat::ones(imageSize,CV_8UC3);
        image = cv::Scalar(255,255,255);
        drawPoints(image, pointset, cv::Scalar(0,0,0));
        cv::namedWindow("pointset"); cv::imshow("pointset", image);

        // choose a random similarity transformation for the pattern
        float scaleFactor = rand()%30 + 10.0f;
        float translationX = rand()%(imageSize.width/2) + imageSize.width/4;
        float translationY = rand()%(imageSize.height/2) + imageSize.height/4;
        float rotationAngle = rand()%360;

        std::cout << "s: " << scaleFactor << " r: " << rotationAngle << " t: " << translationX << "/" << translationY << std::endl;

        std::vector<cv::Point2f> transformedPattern = applyTransformation(pointPattern,composeSimilarityTransformation(scaleFactor,rotationAngle,translationX,translationY));

        // draw the pattern in black with a green core so it stands out from the random dots
        drawPoints(image, transformedPattern, cv::Scalar(0,0,0));
        drawPoints(image, transformedPattern, cv::Scalar(0,255,0),3);
        cv::imwrite("dataPoints.png", image);
        cv::namedWindow("pointset + pattern"); cv::imshow("pointset + pattern", image);

        // add the transformed pattern points to the data set
        for(unsigned int i=0; i<transformedPattern.size(); ++i)
            pointset.push_back(transformedPattern[i]);

        return pointset;
    }

    void programDetectPointPattern()
    {
        cv::Size2i imageSize(640,480);

        // the pattern to detect
        std::vector<cv::Point2f> pointPattern;
        pointPattern.push_back(cv::Point2f(0,0));
        pointPattern.push_back(cv::Point2f(2,0));
        pointPattern.push_back(cv::Point2f(4,0));
        pointPattern.push_back(cv::Point2f(1,2));
        pointPattern.push_back(cv::Point2f(3,2));
        pointPattern.push_back(cv::Point2f(2,4));

        // scale and shift the pattern for display only; start from zeros so that
        // the off-diagonal entries, which are never set, do not add a shear
        cv::Mat trans = cv::Mat::zeros(2,3,CV_32FC1);
        trans.at<float>(0,0) = 20.0f;
        trans.at<float>(1,1) = 20.0f;
        trans.at<float>(0,2) = 20.0f;
        trans.at<float>(1,2) = 20.0f;

        cv::Mat drawnPattern = cv::Mat::ones(cv::Size2i(128,128),CV_8U);
        drawnPattern *= 255;
        drawPoints(drawnPattern,applyTransformation(pointPattern, trans), cv::Scalar(0),5);
        cv::imwrite("patternToDetect.png", drawnPattern);
        cv::namedWindow("pattern"); cv::imshow("pattern", drawnPattern);

        // random points plus one transformed copy of the pattern
        std::vector<cv::Point2f> pointset = createPointSet(imageSize, pointPattern);

        cv::Mat image = cv::Mat(imageSize, CV_8UC3);
        image = cv::Scalar(255,255,255);
        drawPoints(image,pointset, cv::Scalar(0,0,0));

        // lookup image: every data point is drawn as a white dot of size maxDist,
        // so "is there a data point near here?" becomes a single pixel read
        cv::Mat pointImage = cv::Mat::zeros(imageSize,CV_8U);
        float maxDist = 3.0f;
        drawPoints(pointImage, pointset, cv::Scalar(255),maxDist);
        cv::namedWindow("pointImage"); cv::imshow("pointImage", pointImage);

        // reference segment: the first two pattern points
        cv::Point2f referencePoint1 = pointPattern[0];
        cv::Point2f referencePoint2 = pointPattern[1];
        cv::Point2f diff1;
        diff1.x = referencePoint2.x - referencePoint1.x;
        diff1.y = referencePoint2.y - referencePoint1.y;
        float referenceLength = std::sqrt(diff1.x*diff1.x + diff1.y*diff1.y);
        diff1.x = diff1.x/referenceLength; diff1.y = diff1.y/referenceLength;

        std::cout << "reference: " << std::endl;
        std::cout << referencePoint1 << std::endl;

        // try every ordered pair of data points as the image of the reference segment
        for(unsigned int j=0; j<pointset.size(); ++j)
        {
            cv::Point2f targetPoint1 = pointset[j];

            for(unsigned int i=0; i<pointset.size(); ++i)
            {
                cv::Point2f targetPoint2 = pointset[i];

                cv::Point2f diff2;
                diff2.x = targetPoint2.x - targetPoint1.x;
                diff2.y = targetPoint2.y - targetPoint1.y;
                float targetLength = std::sqrt(diff2.x*diff2.x + diff2.y*diff2.y);

                // skip i == j and near-coincident points before dividing by the length
                if(targetLength < maxDist) continue;
                diff2.x = diff2.x/targetLength; diff2.y = diff2.y/targetLength;

                float s = targetLength/referenceLength;
                // composeSimilarityTransformation turns a direction at angle a into a - r,
                // so r is the reference angle minus the target angle. The original used "+",
                // which only worked because the reference direction here has angle 0.
                float r = -180.0f/PI*(std::atan2(diff2.y,diff2.x) - std::atan2(diff1.y,diff1.x));

                // translation that moves the scaled and rotated reference point onto targetPoint1
                std::vector<cv::Point2f> origin;
                origin.push_back(referencePoint1);
                origin = applyTransformation(origin, composeSimilarityTransformation(s,r,0,0));

                float tx = targetPoint1.x - origin[0].x;
                float ty = targetPoint1.y - origin[0].y;

                std::vector<cv::Point2f> transformedPattern = applyTransformation(pointPattern,composeSimilarityTransformation(s,r,tx,ty));

                // the candidate is accepted only if every transformed pattern point hits a data point
                bool found = true;
                for(unsigned int k=0; k<transformedPattern.size(); ++k)
                {
                    cv::Point2f curr = transformedPattern[k];
                    if((curr.x >= 0)&&(curr.x <= pointImage.cols-1)&&(curr.y>=0)&&(curr.y <= pointImage.rows-1))
                    {
                        if(pointImage.at<unsigned char>(cvRound(curr.y), cvRound(curr.x)) == 0) found = false;
                    }
                    else found = false;
                }

                if(found)
                {
                    std::cout << composeSimilarityTransformation(s,r,tx,ty) << std::endl;

                    cv::Mat currentIteration;
                    image.copyTo(currentIteration);
                    cv::circle(currentIteration,targetPoint1,5, cv::Scalar(255,0,0),1);
                    cv::circle(currentIteration,targetPoint2,5, cv::Scalar(255,0,255),1);
                    cv::line(currentIteration,targetPoint1,targetPoint2,cv::Scalar(0,0,255));
                    drawPoints(currentIteration, transformedPattern, cv::Scalar(0,0,255),4);

                    cv::imwrite("detectedPattern.png", currentIteration);
                    cv::namedWindow("iteration"); cv::imshow("iteration", currentIteration); cv::waitKey(-1);
                }
            }
        }
    }

    int main()
    {
        programDetectPointPattern();
        return 0;
    }