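I'm trying to get SHAP values for a Gaussian Process Regression (GPR) model using the SHAP library, but all of the SHAP values come out as zero. I'm using the example from the official documentation; the only change I made is swapping the model for a GPR.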

```python
import sklearn
from sklearn.model_selection import train_test_split
import numpy as np
import shap
import time
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern, WhiteKernel, ConstantKernel

shap.initjs()

X, y = shap.datasets.diabetes()
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# rather than use the whole training set to estimate expected values, we summarize with
# a set of weighted kmeans, each weighted by the number of points they represent.
X_train_summary = shap.kmeans(X_train, 10)

kernel = Matern(length_scale=2, nu=3/2) + WhiteKernel(noise_level=1)
gp = GaussianProcessRegressor(kernel)
gp.fit(X_train, y_train)

# explain all the predictions in the test set
explainer = shap.KernelExplainer(gp.predict, X_train_summary)
shap_values = explainer.shap_values(X_test)
shap.summary_plot(shap_values, X_test)
```

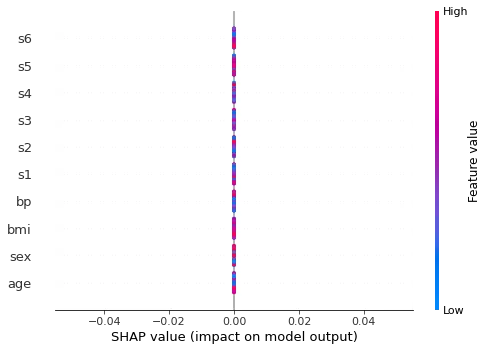

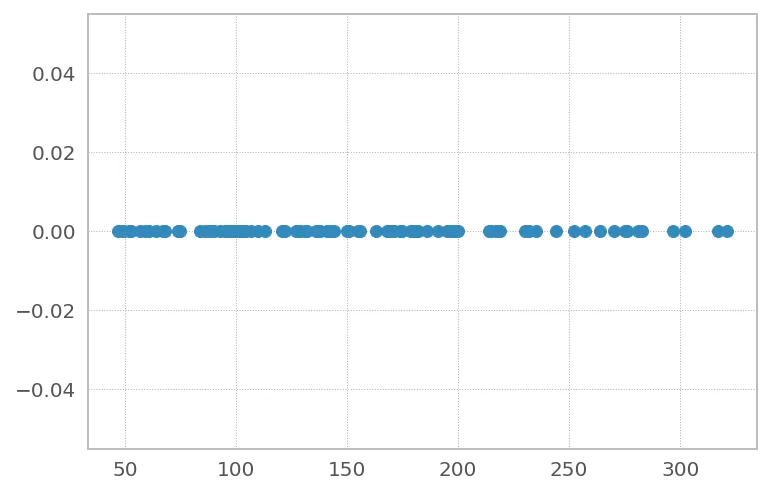

运行上述代码会得到以下图表: 当我使用神经网络或线性回归时,上述代码可以正常运行而没有问题。
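All-zero SHAP values are what you would expect from a model whose output does not change. SHAP values obey local accuracy: for each row they add up to f(x) minus the average model output over the background data, and KernelExplainer estimates them by filling in "missing" features with background values. The brute-force sketch below computes exact Shapley values that way for a 3-feature toy problem. The helper `exact_shap` and the toy models `linear` and `constant` are hypothetical, written only for illustration; they are not part of the `shap` API. The linear model gets non-zero attributions, while a model that always returns the same number gets exactly zero for every feature.

```python
from itertools import combinations
from math import factorial

import numpy as np


def exact_shap(f, x, background):
    """Brute-force Shapley values for one row `x` (hypothetical helper).

    The value of a coalition S is the mean model output over the background
    rows after overwriting the features in S with their values from `x`.
    """
    n = len(x)

    def value(S):
        Z = background.copy()
        idx = list(S)
        if idx:
            Z[:, idx] = x[idx]
        return f(Z).mean()

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Standard Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for S in combinations(others, size):
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi


rng = np.random.default_rng(0)
background = rng.normal(size=(20, 3))
x = np.array([1.0, -2.0, 0.5])
coef = np.array([3.0, -1.0, 2.0])


def linear(Z):
    # Output changes with every feature.
    return Z @ coef


def constant(Z):
    # Output ignores the input entirely (an extreme "flat" model).
    return np.full(len(Z), 152.0)


phi_linear = exact_shap(linear, x, background)
phi_constant = exact_shap(constant, x, background)
print(phi_linear)
print(phi_constant)  # [0. 0. 0.]
```

Exact enumeration is exponential in the number of features, which is why KernelExplainer samples coalitions instead; the toy sizes here keep it cheap.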

If you have any ideas on how to fix this, please let me know.

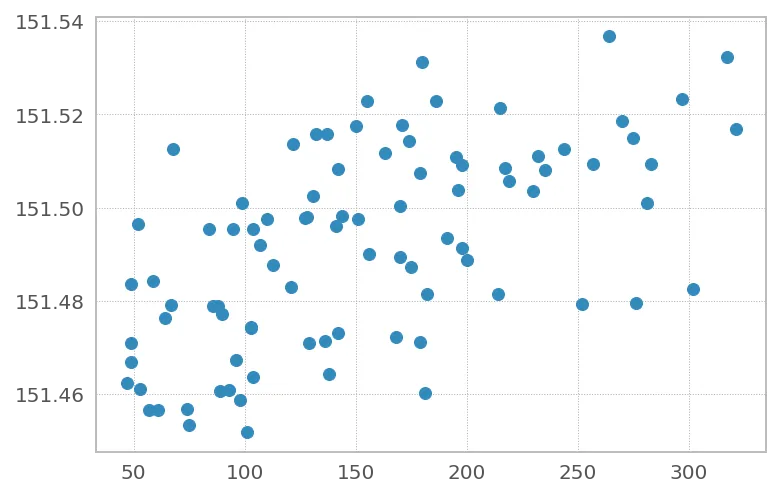

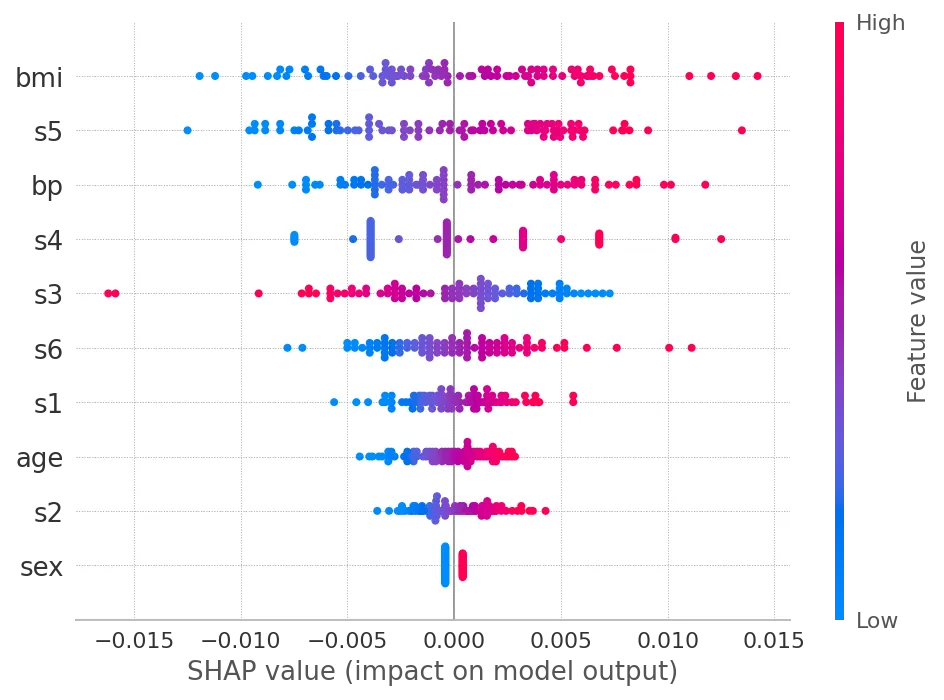

GaussianProcessRegressor. Judging from the summary plot, that was my first thought.
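A quick way to test whether the model itself is the problem is to check whether the fitted GPR's predictions vary across the test set at all. If they are (nearly) constant, KernelExplainer has nothing to attribute. The sketch below refits the question's model with scikit-learn only and prints the spread of its test predictions and the fitted kernel (`gp.kernel_`). It assumes `shap.datasets.diabetes()` returns the same data as scikit-learn's `load_diabetes()`. It also fits a hypothetical variant that multiplies the Matern term by `ConstantKernel` (imported but unused in the question) and sets `normalize_y=True`; that variant is an assumption worth trying, not a confirmed fix.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import ConstantKernel, Matern, WhiteKernel
from sklearn.model_selection import train_test_split

# Assumption: shap.datasets.diabetes() returns the same data as load_diabetes().
X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

models = {
    # The configuration from the question.
    "original": GaussianProcessRegressor(
        Matern(length_scale=2, nu=3 / 2) + WhiteKernel(noise_level=1)
    ),
    # Hypothetical variant to try: learnable amplitude and normalized targets.
    "variant": GaussianProcessRegressor(
        ConstantKernel(1.0) * Matern(length_scale=2, nu=3 / 2) + WhiteKernel(noise_level=1),
        normalize_y=True,
    ),
}

results = {}
for name, gp in models.items():
    gp.fit(X_train, y_train)
    preds = gp.predict(X_test)
    results[name] = preds
    # A near-zero spread means the model is (almost) constant on the test set,
    # which would leave KernelExplainer with nothing to attribute.
    print(f"{name}: std of test predictions = {preds.std():.4f}")
    # Shows the hyperparameters the optimizer settled on (e.g. how large the
    # WhiteKernel noise_level became relative to the Matern term).
    print(f"  fitted kernel: {gp.kernel_}")
```

If the original model's spread is close to zero while the variant's is not, that would point at the kernel and target scaling rather than at SHAP; the sketch makes no assumption about which way it comes out.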