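I am fairly new to Python and Rapids.AI, and I am trying to recreate scikit-learn's KMeans in a multi-GPU setup (I have two GPUs) using Dask and RAPIDS. I am running the RAPIDS Docker image, which also provides a Jupyter Notebook. The code shown below (together with an example of the Iris dataset) hangs, and the Jupyter Notebook cell never finishes. I tried the `%debug` magic command and the Dask dashboard, but I could not draw any clear conclusion (my only guess is that it may be related to `device_m_csv.iloc`, but I am not sure). Another possibility is that I am missing a `wait()`, `compute()` or `persist()` call somewhere (honestly, I am not sure when each of them should be used). To make the code easier to read, here is what it does: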

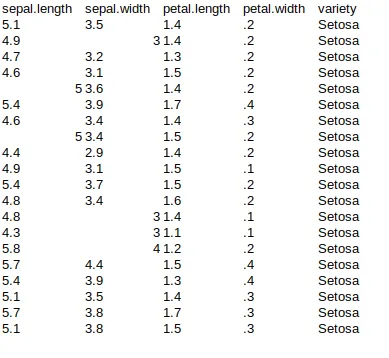

Example of the Iris dataset:

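- First, do the required imports

- Next, start with the KMeans algorithm (delimiter: #######################...)

- Create a CUDA cluster with 2 workers, one per GPU (I have 2 GPUs), and 1 thread per worker (I have read that this is the recommended value), then start a client

- Read the dataset from CSV, splitting it into 2 partitions (`chunksize = '2kb'`)

- Split the dataset into data (more commonly called `X`) and labels (more commonly called `y`)

- Instantiate cu_KMeans using Dask

- Fit the model

- Predict values

- Check the obtained scores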

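I am sorry that I cannot offer more data, but I was not able to get any. I will gladly provide whatever information is needed to resolve this.

Where, or what, do you think the problem is?

Thank you very much in advance.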

%%time

# Import libraries and show its versions

import numpy as np; print('NumPy Version:', np.__version__)

import pandas as pd; print('Pandas Version:', pd.__version__)

import sklearn; print('Scikit-Learn Version:', sklearn.__version__)

import nvstrings, nvcategory

import cupy; print('cuPY Version:', cupy.__version__)

import cudf; print('cuDF Version:', cudf.__version__)

import cuml; print('cuML Version:', cuml.__version__)

import dask; print('Dask Version:', dask.__version__)

import dask_cuda; print('DaskCuda Version:', dask_cuda.__version__)

import dask_cudf; print('DaskCuDF Version:', dask_cudf.__version__)

import matplotlib; print('MatPlotLib Version:', matplotlib.__version__)

import seaborn as sns; print('SeaBorn Version:', sns.__version__)

#import time

import warnings

from dask import delayed

import dask.dataframe as dd

from dask.distributed import Client, LocalCluster, wait

from dask_ml.cluster import KMeans as skmKMeans

from dask_cuda import LocalCUDACluster

from sklearn import metrics

from sklearn.cluster import KMeans as skKMeans

from sklearn.metrics import adjusted_rand_score as sk_adjusted_rand_score, silhouette_score as sk_silhouette_score

from cuml.cluster import KMeans as cuKMeans

from cuml.dask.cluster.kmeans import KMeans as cumKMeans

from cuml.metrics import adjusted_rand_score as cu_adjusted_rand_score

# Configure matplotlib library

import matplotlib.pyplot as plt

%matplotlib inline

# Configure seaborn library

sns.set()

#sns.set(style="white", color_codes=True)

%config InlineBackend.figure_format = 'svg'

# Configure warnings

#warnings.filterwarnings("ignore")

####################################### KMEANS #############################################################

# Create local cluster

cluster = LocalCUDACluster(n_workers=2, threads_per_worker=1)

client = Client(cluster)

# Identify number of workers

n_workers = len(client.has_what().keys())

# Read data in host memory

device_m_csv = dask_cudf.read_csv('./DataSet/iris.csv', header = 0, delimiter = ',', chunksize='2kB') # Get complete CSV. Chunksize is 2kb for getting 2 partitions

#x = host_data.iloc[:, [0,1,2,3]].values

device_m_data = device_m_csv.iloc[:, [0, 1, 2, 3]] # Get data columns

device_m_labels = device_m_csv.iloc[:, 4] # Get labels column

# Plot data

#sns.pairplot(device_csv.to_pandas(), hue='variety');

# Define variables

label_type = { 'Setosa': 1, 'Versicolor': 2, 'Virginica': 3 } # Dictionary of variables type

# Create KMeans

cu_m_kmeans = cumKMeans(init = 'k-means||',

n_clusters = len(device_m_labels.unique()),

oversampling_factor = 40,

random_state = 0)

# Fit data in KMeans

cu_m_kmeans.fit(device_m_data)

# Predict data

cu_m_kmeans_labels_predicted = cu_m_kmeans.predict(device_m_data).compute()

# Check score

#print('Cluster centers:\n',cu_m_kmeans.cluster_centers_)

#print('adjusted_rand_score: ', sk_adjusted_rand_score(device_m_labels, cu_m_kmeans.labels_))

#print('silhouette_score: ', sk_silhouette_score(device_m_data.to_pandas(), cu_m_kmeans_labels_predicted))

# Close local cluster

client.close()

cluster.close()


Edit 1

@Corey, this is the output I get using your code:

NumPy Version: 1.17.5

Pandas Version: 0.25.3

Scikit-Learn Version: 0.22.1

cuPY Version: 6.7.0

cuDF Version: 0.12.0

cuML Version: 0.12.0

Dask Version: 2.10.1

DaskCuda Version: 0+unknown

DaskCuDF Version: 0.12.0

MatPlotLib Version: 3.1.3

SeaBorn Version: 0.10.0

Cluster centers:

0 1 2 3

0 5.006000 3.428000 1.462000 0.246000

1 5.901613 2.748387 4.393548 1.433871

2 6.850000 3.073684 5.742105 2.071053

adjusted_rand_score: 0.7302382722834697

silhouette_score: 0.5528190123564102

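So the issue was using iloc on the dask_cuda dataframe, right? Also, if it is not too much trouble, could you explain when to use `.compute()`, `wait()` and `.persist()`? I have read about them, but I am not sure when I should or should not use each one. For example, `compute()` is used under "# Predict data" (following the documentation), but I do not understand why it is needed there. These three concepts are not clear to me. Thanks again. - JuMoGar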
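To make the `compute()` / `persist()` question concrete, here is a minimal sketch. It assumes plain CPU Dask with `dask.delayed` and the default local scheduler, not the cuML or dask_cudf objects from the script above; the `square` function and the `calls` list are hypothetical names used only for illustration. It shows that building a Dask graph runs nothing, that `compute()` executes the graph and returns a concrete value, and that `persist()` executes it but hands back another lazy object whose result is already held in memory.

```python
from dask import delayed

calls = []  # records every time the task body really runs


@delayed
def square(x):
    calls.append(x)
    return x * x


# Building the graph is lazy: no task has run yet.
lazy_total = delayed(sum)([square(i) for i in range(4)])
calls_before_compute = len(calls)

# compute() runs the graph and returns a concrete Python value.
total = lazy_total.compute()
calls_after_compute = len(calls)

# persist() also runs the graph, but returns another lazy object
# whose result is already held in memory.
persisted = lazy_total.persist()
calls_after_persist = len(calls)

# Computing the persisted object reuses the stored result
# instead of running the square tasks again.
total_again = persisted.compute()
calls_after_reuse = len(calls)

print(total, total_again)
print(calls_before_compute, calls_after_compute,
      calls_after_persist, calls_after_reuse)
```

With a `dask.distributed` client, as in the script above, `persist()` typically returns right away while the workers keep computing in the background, and `wait()` from `dask.distributed` only blocks until that work has finished without bringing the data back to the client. Read that way, the `.compute()` after `predict` is what pulls the predicted labels from the workers into the notebook process.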