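If you are looking for a more general way to unfold multiple levels of hierarchy from JSON, you can use recursion and a list comprehension to reshape the data. Here is one alternative: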

    def flatten_json(nested_json, exclude=('',)):
        """Flatten a JSON object with nested keys into a single level.

        Args:
            nested_json: A nested JSON object (dicts, lists, scalars).
            exclude: Keys to exclude from the output (matched at any depth).

        Returns:
            A flat dict mapping underscore-joined key paths to leaf values.
        """
        out = {}

        def flatten(x, name=''):
            if isinstance(x, dict):
                # Descend into each key, extending the path with the key name.
                for a in x:
                    if a not in exclude:
                        flatten(x[a], name + a + '_')
            elif isinstance(x, list):
                # Descend into each element, extending the path with its index.
                for i, a in enumerate(x):
                    flatten(a, name + str(i) + '_')
            else:
                # Leaf value: drop the trailing '_' from the accumulated path.
                out[name[:-1]] = x

        flatten(nested_json)
        return out

You can then apply it to your data, regardless of how deeply it is nested:

New sample data

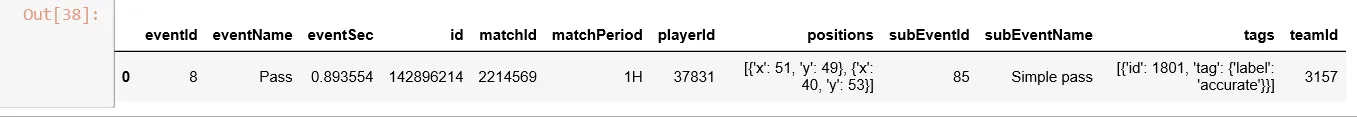

    this_dict = {'events': [
        {'id': 142896214,
         'playerId': 37831,
         'teamId': 3157,
         'matchId': 2214569,
         'matchPeriod': '1H',
         'eventSec': 0.8935539999999946,
         'eventId': 8,
         'eventName': 'Pass',
         'subEventId': 85,
         'subEventName': 'Simple pass',
         'positions': [{'x': 51, 'y': 49}, {'x': 40, 'y': 53}],
         'tags': [{'id': 1801, 'tag': {'label': 'accurate'}}]},
        {'id': 142896214,
         'playerId': 37831,
         'teamId': 3157,
         'matchId': 2214569,
         'matchPeriod': '1H',
         'eventSec': 0.8935539999999946,
         'eventId': 8,
         'eventName': 'Pass',
         'subEventId': 85,
         'subEventName': 'Simple pass',
         'positions': [{'x': 51, 'y': 49}, {'x': 40, 'y': 53}, {'x': 51, 'y': 49}],
         'tags': [{'id': 1801, 'tag': {'label': 'accurate'}}]}
    ]}

Usage

    import pandas as pd

    pd.DataFrame([flatten_json(x) for x in this_dict['events']])

    Out[1]:
              id  playerId  teamId  matchId matchPeriod  eventSec  eventId  \
    0  142896214     37831    3157  2214569          1H  0.893554        8
    1  142896214     37831    3157  2214569          1H  0.893554        8

      eventName  subEventId subEventName  positions_0_x  positions_0_y  \
    0      Pass          85  Simple pass             51             49
    1      Pass          85  Simple pass             51             49

       positions_1_x  positions_1_y  tags_0_id tags_0_tag_label  positions_2_x  \
    0             40             53       1801         accurate            NaN
    1             40             53       1801         accurate           51.0

       positions_2_y
    0            NaN
    1           49.0
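The `exclude` argument is not exercised in the example above, so here is a minimal sketch of how it appears to behave. The `event` record is a hypothetical, trimmed-down version of the sample data, and the function is repeated in compact form only so the snippet runs on its own. Note that, as written, `exclude` matches key names at every nesting level, not just the top one.

```python
def flatten_json(nested_json, exclude=("",)):
    """Compact copy of the function above, repeated so this snippet is self-contained."""
    out = {}

    def flatten(x, name=""):
        if isinstance(x, dict):
            for key, value in x.items():
                if key not in exclude:
                    flatten(value, name + key + "_")
        elif isinstance(x, list):
            for i, item in enumerate(x):
                flatten(item, name + str(i) + "_")
        else:
            out[name[:-1]] = x

    flatten(nested_json)
    return out


# Hypothetical record, trimmed down from the sample data for illustration.
event = {
    "id": 142896214,
    "positions": [{"x": 51, "y": 49}, {"x": 40, "y": 53}],
    "tags": [{"id": 1801, "tag": {"label": "accurate"}}],
}

# Excluding "tags" drops that whole subtree from the flattened result.
flat_no_tags = flatten_json(event, exclude=["tags"])

# Excluding "id" drops the top-level "id" AND the nested "tags_0_id",
# because the check is applied to every dict at every depth.
flat_no_id = flatten_json(event, exclude=["id"])

print(sorted(flat_no_tags))
print(sorted(flat_no_id))
```

If you only want to drop a key at the top level, it may be simpler to flatten everything and then drop the unwanted columns from the resulting DataFrame.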

Note that I did not write this flatten_json code. I came across it here and here, but I am not sure of the original source.