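Intro: I'm using MATLAB's Neural Network Toolbox to forecast a time series one step into the future. For now I'm only trying to forecast a simple sinusoidal function, but I hope to move on to something more complex once I get satisfactory results.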

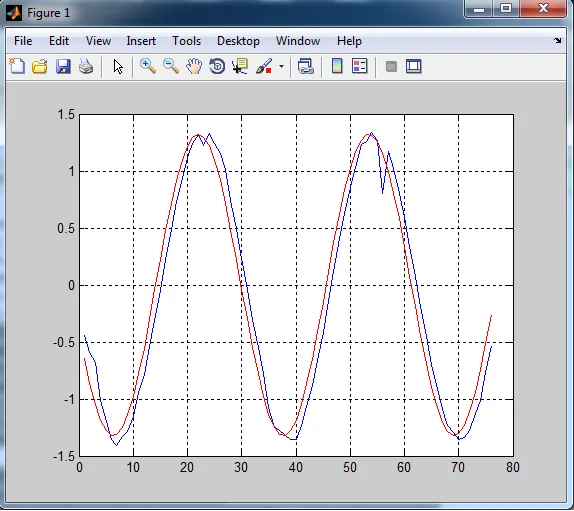

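Problem: Everything seems to work fine, but the forecasts tend to lag behind the actual values by one period (one time step). If the network simply outputs the series delayed by one time unit, its forecasts aren't much use, are they?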
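The concern can be made concrete. A persistence forecast, which just repeats the last observed value, produces exactly the actual series delayed by one step, so a forecast that lags by one step is only worth something if its error is clearly below this baseline. A minimal sketch using the question's signal and split (the names `actual`, `naive` and `rmseNaive` are illustrative, not part of the original code):

```matlab
% Persistence ("naive") forecast: predict y(k) with the last observed value y(k-1).
t = -50:0.2:100;
y = sin(t) + 1/2*sin(t + pi/3);     % test signal from the question
split = floor(0.9*length(t));       % same split as in the question
actual = y(split+1:end);            % values the question forecasts
naive  = y(split:end-1);            % y(k-1) used as the forecast of y(k)
rmseNaive = sqrt(mean((naive - actual).^2));
fprintf('persistence RMSE: %.4f\n', rmseNaive);
```

Any model whose error on the same hold-out period is not clearly lower than `rmseNaive` is, in practice, no better than copying the previous value.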

Code:

```matlab
t = -50:0.2:100;
noise = rand(1,length(t));              % not used below
y = sin(t)+1/2*sin(t+pi/3);

split = floor(0.9*length(t));           % first 90% is the initial estimation window
forperiod = length(t)-split;            % number of one-step forecasts
numinputs = 5;
forecasted = [];
msg = '';

for j = 1:forperiod
    % overwrite the previous progress message in the command window
    fprintf(repmat('\b',1,numel(msg)));
    msg = sprintf('forecasting iteration %g/%g...\n',j,forperiod);
    fprintf('%s',msg);

    % expanding window: all values observed before step split+j
    estdata = y(1:split+j-1);
    estdatalen = size(estdata,2);       % not used below
    signal = estdata;
    last = signal(end);                 % last observed level, used to rebuild the forecast

    [signal,low,high] = preprocess(signal'); % pre-process
    signal = signal';

    % Leftover from an earlier approach: rowshiftmat is not defined in this
    % post, and inputs/targets are overwritten by preparets below.
    % inputs = signal(rowshiftmat(length(signal),numinputs));
    % targets = signal(numinputs+1:end);

    %% NARNET METHOD
    feedbackDelays = 1:4;
    hiddenLayerSize = 10;
    net = narnet(feedbackDelays,[hiddenLayerSize hiddenLayerSize]);
    net.inputs{1}.processFcns = {'removeconstantrows','mapminmax'};

    signalcells = mat2cell(signal,[1],ones(1,length(signal)));
    [inputs,inputStates,layerStates,targets] = preparets(net,{},{},signalcells);

    % set trainFcn first: assigning it resets net.trainParam to that function's defaults
    net.trainFcn = 'trainlm';           % Levenberg-Marquardt
    net.performFcn = 'mse';             % Mean squared error
    net.trainParam.showWindow = false;
    net.trainParam.showCommandLine = false;

    [net,tr] = train(net,inputs,targets,inputStates,layerStates);

    % network output for one step (a normalized log change), then back to a level
    next = net(inputs(end),inputStates,layerStates);
    next = postprocess(next{1}, low, high); % post-process
    next = (next+1)*last;
    forecasted = [forecasted next];
end

figure(1);
plot(1:forperiod, forecasted, 'b', 1:forperiod, y(end-forperiod+1:end), 'r');
grid on;
```
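A note on the delay states, as we understand `preparets` for an open-loop `narnet` with `feedbackDelays = 1:4`: the first four values become the initial states `inputStates`, and the inputs and targets start at the fifth value. Because the smallest feedback delay is 1, the output at any step is computed from delayed values only. If that reading is right, the call `net(inputs(end),inputStates,layerStates)` pairs the last input with the states from the *start* of the series, whereas a forecast of the next value needs the last four observed values as its states. The index bookkeeping can be sketched in base MATLAB (toy data; `series`, `d` and `XiNext` are illustrative names):

```matlab
% Index bookkeeping for an open-loop NAR model with feedbackDelays = 1:4,
% assuming preparets splits a series as described above (toy data).
series = 10:10:100;            % stand-in for the preprocessed signal, N = 10
d = 4;                         % largest feedback delay
Xi = series(1:d);              % initial delay states: the START of the series
X  = series(d+1:end);          % open-loop inputs (the series fed back)
T  = series(d+1:end);          % targets, aligned with X
% A forecast of series(N+1) needs the d most recent values as its states.
XiNext = series(end-d+1:end);
fprintf('initial states: %s\nstates for next step: %s\n', mat2str(Xi), mat2str(XiNext));
```

Within the toolbox, the final states are returned by `[Y,Xf,Af] = net(inputs,inputStates,layerStates)` and can be carried forward, for example through `closeloop` or `removedelay`; treat this as a pointer to check against the documentation rather than a tested fix.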

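Note: The function `preprocess` converts the data into signed, log-transformed percentage changes scaled to [-1, 1], and `postprocess` converts them back so the forecasts can be plotted. (See the EDIT below for the pre- and post-processing code.)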
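The transform pair can be checked in isolation, without the network. The sketch below (toy values, with the built-in `sign` in place of the `./abs(...)` form) confirms that the inverse transform followed by `(next+1)*last` recovers the levels. It also shows a property worth keeping in mind for a sine wave: percentage changes divide by the previous value, so they become very large wherever the series passes close to zero.

```matlab
% Signed log-change transform from preprocess.m (before normalization),
% its inverse from postprocess.m, and the level reconstruction (next+1)*last.
x = [2.0 2.5 2.5 1.0 1.5];                          % toy levels, one repeat
chngs   = diff(x) ./ x(1:end-1);                    % percentage changes
logdata = sign(chngs) .* log(abs(chngs) + 1);       % forward transform
back    = sign(logdata) .* (exp(abs(logdata)) - 1); % inverse transform
levels  = (back + 1) .* x(1:end-1);                 % rebuild x(2:end)

% Near a zero crossing the denominator is tiny, so percentage changes explode.
s = [-0.01 0.19 0.37];                              % toy values crossing zero
spike = max(abs(diff(s) ./ s(1:end-1)));            % about 20
```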

Results:

Blue: forecasted values

Red: actual values
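To quantify the lag in the plot rather than judge it by eye, one can search for the shift that minimizes the mean squared difference between forecast and actual values. A sketch with synthetic stand-in data; in the question's script, `forecasted` and `y(end-forperiod+1:end)` would take the places of `forecast` and `actual` (both names, and `maxLag`, are illustrative):

```matlab
% Estimate which shift best aligns a forecast with the actual values.
% Stand-in data: a forecast that is the actual series delayed by one step.
t = 0:0.2:30;
actual   = sin(t);
forecast = [actual(1) actual(1:end-1)];
maxLag = 5;
err = zeros(1, maxLag + 1);
for L = 0:maxLag
    % compare forecast(k + L) with actual(k) over the overlapping samples
    err(L + 1) = mean((forecast(1+L:end) - actual(1:end-L)).^2);
end
[~, idx] = min(err);
bestLag = idx - 1;                  % 0 means no lag, 1 means one step behind
fprintf('best-aligning lag: %d samples\n', bestLag);
```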

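What am I doing wrong here? Alternatively, can you recommend another approach that achieves what I'm after (lag-free forecasts of a sine function and, eventually, of more chaotic time series)? Any help is much appreciated.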
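As a point of comparison, here is a swapped-in technique rather than the network: a linear autoregressive model fitted by least squares. Since `sin(t) + 1/2*sin(t+pi/3)` is itself a single sinusoid with angular frequency 1, samples taken every 0.2 satisfy `y(k) = 2*cos(0.2)*y(k-1) - y(k-2)`, so an AR(2) fit should forecast this test signal one step ahead essentially without error or lag. That makes it a useful sanity check before moving on to chaotic data: if the linear model shows no lag but the network does, the cause is more likely the forecasting setup than model capacity. A sketch under those assumptions (`p`, `est`, `b` and `forecastAR` are illustrative names):

```matlab
% Linear AR(2) baseline fitted by ordinary least squares (not a neural net).
t = -50:0.2:100;
y = sin(t) + 1/2*sin(t + pi/3);      % test signal from the question
split = floor(0.9*length(t));
p = 2;                               % number of lagged values used
est = y(1:split);                    % estimation window
% Column j holds lag j: row k pairs target est(k+p) with est(k+p-j).
X = zeros(numel(est) - p, p);
for j = 1:p
    X(:, j) = est(p-j+1 : end-j).';
end
b = X \ est(p+1:end).';              % least-squares coefficients
% One-step-ahead forecasts, each using only the p most recent actual values.
forecastAR = zeros(1, numel(y) - split);
for k = split+1:numel(y)
    forecastAR(k - split) = y(k-1:-1:k-p) * b;
end
rmseAR = sqrt(mean((forecastAR - y(split+1:end)).^2));
fprintf('AR(%d) one-step RMSE: %.2e\n', p, rmseAR);
```

The fitted coefficients should come out close to `2*cos(0.2)` and `-1`. For noisy or chaotic series the same construction works with a larger `p`, but the fit will no longer be exact.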

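EDIT: A few days have passed now, and I hope everyone had a nice weekend. Since no solution has turned up, I've decided to post the code for the helper functions `postprocess.m` and `preprocess.m`, along with their helper `normalize.m`. Maybe this will help get the ball rolling.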

postprocess.m:

```matlab
function data = postprocess(x, low, high)
% denormalize from [-1, 1] back to the log-change range [low, high]
logdata = (x+1)/2*(high-low)+low;
% inverse log data (built-in sign() returns 0 for 0 instead of NaN)
data = sign(logdata).*(exp(abs(logdata))-1);
end
```

preprocess.m:

```matlab
function [y, low, high] = preprocess(x)
% differencing
diffs = diff(x);
% calc % changes
chngs = diffs./x(1:end-1,:);
% log data (built-in sign() returns 0 for a zero change instead of NaN)
logdata = sign(chngs).*log(abs(chngs)+1);
% normalize logrets
high = max(max(logdata));
low = min(min(logdata));
y = [];
for i = 1:size(logdata,2)
    y = [y normalize(logdata(:,i), -1, 1)];
end
end
```
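One detail in the sign step: computing the sign as `chngs./abs(chngs)` gives `0/0 = NaN` whenever two consecutive values are equal, and a variable called `sign` also shadows MATLAB's built-in `sign` function for the rest of that function. A small check with toy values chosen for illustration:

```matlab
chngs = [0.25 0 -0.6];               % toy percentage changes, one of them zero
viaDivision = chngs ./ abs(chngs);   % 0/0 gives NaN for the zero change
viaBuiltin  = sign(chngs);           % built-in sign returns 0 for a zero change
disp(viaDivision);
disp(viaBuiltin);
```

A single NaN in `logdata` also makes the `max`/`min` normalization and the network training harder to reason about, so the built-in is the safer choice.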

normalize.m:

```matlab
function Y = normalize(X,low,high)
%NORMALIZE Linear normalization of X between low and high values.
if length(X) <= 1
    error('Length of X input vector must be greater than 1.');
end
mi = min(X);
ma = max(X);
Y = (X-mi)/(ma-mi)*(high-low)+low;
end
```
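The scaling in `normalize.m` and the first line of `postprocess.m` are inverses only when `postprocess` receives the same minimum and maximum that `normalize` used. In `preprocess.m`, `low` and `high` are taken over all of `logdata`, while `normalize` uses each column's own extremes; the two agree here because the signal is passed in as a single column. Two further points: `normalize.m` divides by `ma - mi`, so a constant input yields NaN values, and recent MATLAB releases ship a built-in function also named `normalize`, which this file shadows on the path. A round-trip check with toy values (`v`, `lowOut`, `highOut` are illustrative names):

```matlab
% Min-max scaling into [-1, 1] as in normalize.m, and the inverse
% used in the first line of postprocess.m.
v = [-0.3 0.1 0.4 0.9];                        % toy log changes
lowOut = -1;  highOut = 1;                     % target range used by preprocess
mi = min(v);  ma = max(v);
scaled   = (v - mi) / (ma - mi) * (highOut - lowOut) + lowOut;
restored = (scaled + 1) / 2 * (ma - mi) + mi;  % postprocess with low = mi, high = ma
disp(scaled);
disp(restored);
```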