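I'm currently trying to draw the bounding boxes of the text recognized by Firebase ML Kit on top of the image. So far I haven't managed it: all of the boxes end up off screen, so I can't see any of them. I followed this article: https://medium.com/swlh/how-to-draw-bounding-boxes-with-swiftui-d93d1414eb00 and this project: https://github.com/firebase/quickstart-ios/blob/master/mlvision/MLVisionExample/ViewController.swift. This is the view where the boxes should be shown: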

```swift
import SwiftUI

struct ImageScanned: View {
    var image: UIImage
    @Binding var rectangles: [CGRect]
    @State var viewSize: CGSize = .zero

    var body: some View {
        // TODO: fix scaling
        ZStack {
            Image(uiImage: image)
                .resizable()
                .scaledToFit()
                .overlay(
                    GeometryReader { geometry in
                        ZStack {
                            // One red outline per recognized element
                            ForEach(self.transformRectangles(geometry: geometry)) { rect in
                                Rectangle()
                                    .path(in: CGRect(
                                        x: rect.x,
                                        y: rect.y,
                                        width: rect.width,
                                        height: rect.height))
                                    .stroke(Color.red, lineWidth: 2.0)
                            }
                        }
                    }
                )
        }
    }

    private func transformRectangles(geometry: GeometryProxy) -> [DetectedRectangle] {
        var rectangles: [DetectedRectangle] = []

        // Size of the area the overlay (and therefore the image view) occupies
        let imageViewWidth = geometry.frame(in: .global).size.width
        let imageViewHeight = geometry.frame(in: .global).size.height

        // Size of the image itself
        let imageWidth = image.size.width
        let imageHeight = image.size.height

        let imageViewAspectRatio = imageViewWidth / imageViewHeight
        let imageAspectRatio = imageWidth / imageHeight
        let scale = (imageViewAspectRatio > imageAspectRatio)
            ? imageViewHeight / imageHeight : imageViewWidth / imageWidth

        // Offsets that center the scaled image inside the view
        let scaledImageWidth = imageWidth * scale
        let scaledImageHeight = imageHeight * scale
        let xValue = (imageViewWidth - scaledImageWidth) / CGFloat(2.0)
        let yValue = (imageViewHeight - scaledImageHeight) / CGFloat(2.0)

        var transform = CGAffineTransform.identity.translatedBy(x: xValue, y: yValue)
        transform = transform.scaledBy(x: scale, y: scale)

        for rect in self.rectangles {
            let rectangle = rect.applying(transform)
            rectangles.append(DetectedRectangle(width: rectangle.width, height: rectangle.height, x: rectangle.minX, y: rectangle.minY))
        }
        return rectangles
    }
}

struct DetectedRectangle: Identifiable {
    var id = UUID()
    var width: CGFloat = 0
    var height: CGFloat = 0
    var x: CGFloat = 0
    var y: CGFloat = 0
}
```
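The same aspect-fit mapping can be pulled out of the view into a plain function, which makes it possible to check the numbers without running the UI. This is a minimal sketch that assumes the frames are already in the coordinate space of `image.size`; the name `aspectFitRect` is hypothetical, and it does the arithmetic by hand instead of through `CGAffineTransform` so that it only needs Foundation.

```swift
import Foundation

/// Hypothetical helper: maps `rect`, given in the coordinate space of an image
/// of size `imageSize`, into a view of size `viewSize` that shows the image with
/// aspect-fit scaling (like `.scaledToFit()`), centered in the view.
/// Assumes `rect` uses the same units as `imageSize`.
func aspectFitRect(_ rect: CGRect, imageSize: CGSize, viewSize: CGSize) -> CGRect {
    // Aspect-fit uses the smaller ratio so the whole image fits inside the view.
    let scale = min(viewSize.width / imageSize.width,
                    viewSize.height / imageSize.height)
    // Empty space left on each side after scaling, split evenly.
    let offsetX = (viewSize.width - imageSize.width * scale) / 2
    let offsetY = (viewSize.height - imageSize.height * scale) / 2
    return CGRect(x: offsetX + rect.minX * scale,
                  y: offsetY + rect.minY * scale,
                  width: rect.width * scale,
                  height: rect.height * scale)
}

// Example: a 1000 x 2000 image shown in a 250 x 250 view (scale 0.125).
let mapped = aspectFitRect(CGRect(x: 100, y: 200, width: 400, height: 80),
                           imageSize: CGSize(width: 1000, height: 2000),
                           viewSize: CGSize(width: 250, height: 250))
// mapped == CGRect(x: 75, y: 25, width: 50, height: 10)
```

In the view, `geometry.size` and `image.size` would be passed as `viewSize` and `imageSize`. If boxes computed this way are still uniformly too large or too small, that points at the frames being measured in a different coordinate space than `image.size`, not at the scaling math.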

And this is the parent view that contains it:

```swift
struct StartScanView: View {
    @State var showCaptureImageView: Bool = false
    @State var image: UIImage? = nil
    @State var rectangles: [CGRect] = []

    var body: some View {
        ZStack {
            if showCaptureImageView {
                CaptureImageView(isShown: $showCaptureImageView, image: $image)
            } else {
                VStack {
                    Button(action: {
                        self.showCaptureImageView.toggle()
                    }) {
                        Text("Start Scanning")
                    }

                    // show here View with rectangles on top of image
                    if self.image != nil {
                        ImageScanned(image: self.image ?? UIImage(), rectangles: $rectangles)
                    }

                    Button(action: {
                        self.processImage()
                    }) {
                        Text("Process Image")
                    }
                }
            }
        }
    }

    func processImage() {
        let scaledImageProcessor = ScaledElementProcessor()
        if image != nil {
            scaledImageProcessor.process(in: image!) { text in
                // Collect the frame of every recognized element (block -> line -> element)
                for block in text.blocks {
                    for line in block.lines {
                        for element in line.elements {
                            self.rectangles.append(element.frame)
                        }
                    }
                }
            }
        }
    }
}
```

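The tutorial's calculation makes the rectangles too large, while the sample project's makes them too small. (The same goes for the height.)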
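For reference, the calculation in `transformRectangles` is the usual aspect-fit mapping. With the image size W_i × H_i (`image.size`) and the view size W_v × H_v (from the `GeometryReader`), the ternary picks s = H_v / H_i exactly when W_v / H_v > W_i / H_i, which is the same as W_v / W_i > H_v / H_i, so it is equivalent to taking the minimum. Since `translatedBy` followed by `scaledBy` scales a point first and then translates it, a frame (x, y, w, h) maps as:

```latex
s = \min\!\left(\frac{W_v}{W_i}, \frac{H_v}{H_i}\right), \qquad
o_x = \frac{W_v - s\,W_i}{2}, \qquad
o_y = \frac{H_v - s\,H_i}{2}

(x', y', w', h') = (o_x + s\,x,\; o_y + s\,y,\; s\,w,\; s\,h)
```

This only gives correct boxes if (x, y, w, h) is measured in the same units as `image.size`. If the frames were measured against an image k times larger, every box comes out k times too large and its position is pushed k times further from the origin, which can move it off screen.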

Unfortunately, I can't find out which size Firebase uses as the reference when it determines the sizes of the elements.
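One way to narrow down which size the frames are relative to is to look at how far they extend: every recognized frame has to lie inside whatever image the recognizer actually analyzed. A minimal sketch using a hypothetical `frameExtent` helper and only Foundation; comparing its result with `image.size` (and with the pixel size, `image.size` multiplied by `image.scale`) is a heuristic, not a proof, because the text may not reach the edges of the image.

```swift
import Foundation

/// Hypothetical diagnostic: the smallest size, anchored at the origin, that
/// contains every frame. Because the frames must lie inside the image they were
/// measured in, this is a lower bound on that image's size.
func frameExtent(_ frames: [CGRect]) -> CGSize {
    var maxX: CGFloat = 0
    var maxY: CGFloat = 0
    for frame in frames {
        maxX = max(maxX, frame.maxX)
        maxY = max(maxY, frame.maxY)
    }
    return CGSize(width: maxX, height: maxY)
}

let sampleFrames = [CGRect(x: 0, y: 0, width: 10, height: 10),
                    CGRect(x: 50, y: 20, width: 30, height: 40)]
let extent = frameExtent(sampleFrames)
// extent == CGSize(width: 80, height: 60)
```

If `extent` is larger than `image.size` in either dimension, the frames cannot be in `image.size` units.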

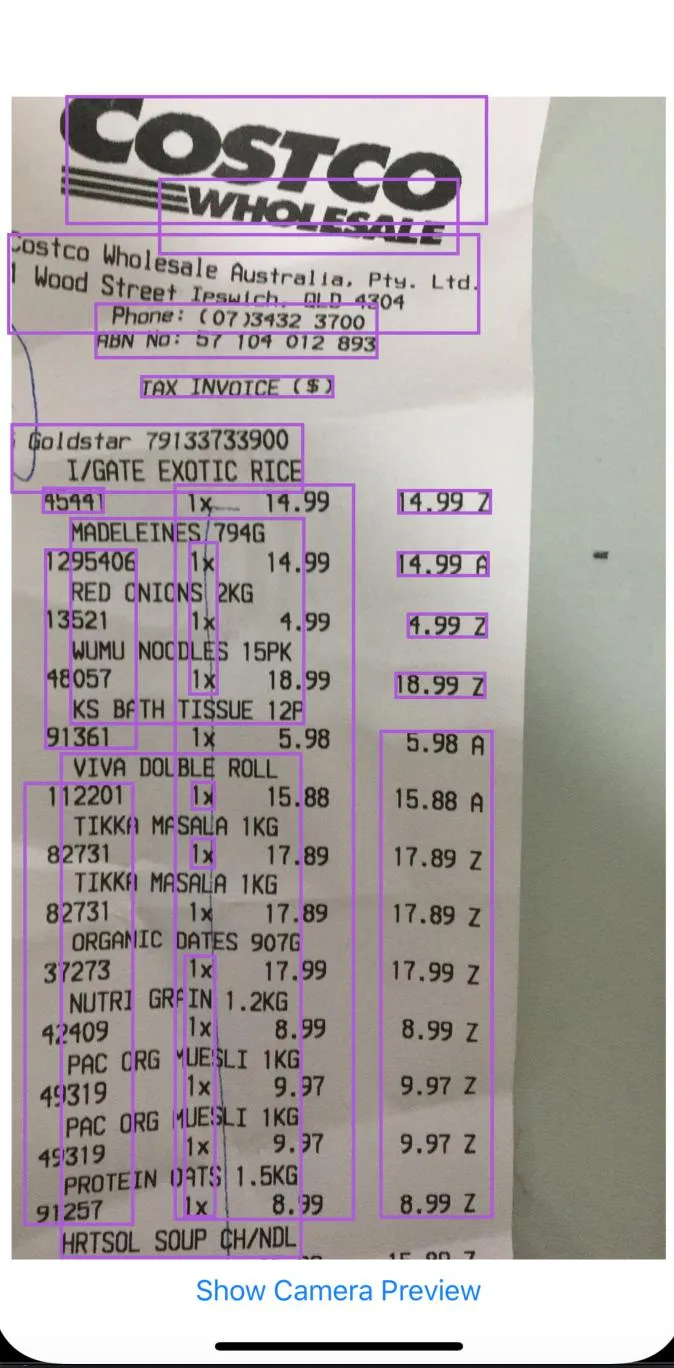

看起来是这样的:

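If I don't scale the width and height at all, the rectangles come out roughly the size they should be (though not exactly), so I suspect that ML Kit does not compute the frame sizes proportionally to the image's width and height.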
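If it turns out the frames were measured against an image of a different size than the one being displayed (for example, a resized copy, or pixel dimensions rather than points), they would first need to be converted into `image.size` units before the aspect-fit step. A minimal sketch, assuming the size of the image the recognizer analyzed (`sourceSize`) is known; `rescaleRect` is a hypothetical helper and handles only a difference in scale, not a rotated orientation.

```swift
import Foundation

/// Hypothetical helper: rescales `rect` from the coordinate space of an image
/// of size `sourceSize` to one of size `targetSize`. Width and height factors
/// are computed independently; rotation (orientation changes) is not handled.
func rescaleRect(_ rect: CGRect, from sourceSize: CGSize, to targetSize: CGSize) -> CGRect {
    let sx = targetSize.width / sourceSize.width
    let sy = targetSize.height / sourceSize.height
    return CGRect(x: rect.minX * sx,
                  y: rect.minY * sy,
                  width: rect.width * sx,
                  height: rect.height * sy)
}

// Example: frames measured on a 2000 x 4000 image, displayed image is 1000 x 2000.
let converted = rescaleRect(CGRect(x: 100, y: 200, width: 50, height: 20),
                            from: CGSize(width: 2000, height: 4000),
                            to: CGSize(width: 1000, height: 2000))
// converted == CGRect(x: 50, y: 100, width: 25, height: 10)
```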
