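I am deploying an Apache Spark application using the standalone cluster manager. My architecture uses 2 Windows machines: one set up as the master and the other as a slave (worker).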

主节点:我在其上运行命令: 从节点:我在其上运行命令:

从节点:我在其上运行命令:

主节点:我在其上运行命令:

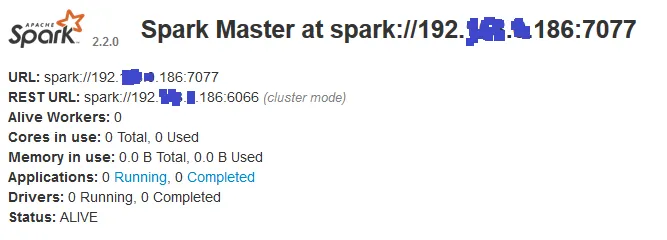

\bin>spark-class org.apache.spark.deploy.master.Master,并且这是Web UI显示的内容: 从节点:我在其上运行命令:

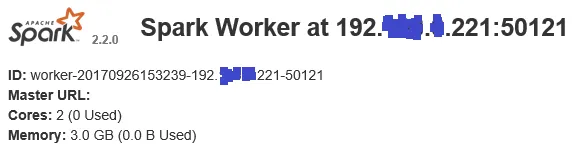

从节点:我在其上运行命令:\bin>spark-class org.apache.spark.deploy.worker.Worker spark://192.*.*.186:7077,并且这是Web UI显示的内容:

The problem is that the worker cannot connect to the master and shows the following error:

    17/09/26 16:05:17 INFO Worker: Connecting to master 192.*.*.186:7077...
    17/09/26 16:05:22 WARN Worker: Failed to connect to master 192.*.*.186:7077
    org.apache.spark.SparkException: Exception thrown in awaitResult:
        at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:205)
        at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)
        at org.apache.spark.rpc.RpcEnv.setupEndpointRefByURI(RpcEnv.scala:100)
        at org.apache.spark.rpc.RpcEnv.setupEndpointRef(RpcEnv.scala:108)
        at org.apache.spark.deploy.worker.Worker$$anonfun$org$apache$spark$deploy$worker$Worker$$tryRegisterAllMasters$1$$anon$1.run(Worker.scala:241)
        at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
        at java.util.concurrent.FutureTask.run(FutureTask.java:266)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
        at java.lang.Thread.run(Thread.java:745)
    Caused by: java.io.IOException: Failed to connect to /192.*.*.186:7077
        at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:232)
        at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:182)
        at org.apache.spark.rpc.netty.NettyRpcEnv.createClient(NettyRpcEnv.scala:197)
        at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:194)
        at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:190)
        ... 4 more
    Caused by: io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection timed out: no further information: /192.*.*.186:7077
        at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
        at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)
        at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:257)
        at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:291)
        at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:631)
        at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:566)
        at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:480)
        at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:442)
        at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:131)
        at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:144)
        ... 1 more

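The firewall is disabled on both machines, and I tested the connection between them with nmap and everything looked fine. What could be causing this error? However, when I use telnet, I get this error: `Connecting To 192.*.*.186...Could not open connection to the host, on port 23: Connect failed`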
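The innermost cause in the trace is `Connection timed out`, and the telnet attempt above went to telnet's default port 23 rather than to the master's port 7077. A minimal Python sketch that reproduces the worker's TCP connection attempt outside Spark may help narrow this down. It assumes Python 3 on the worker; `parse_master_url` and `probe` are hypothetical helpers, not part of Spark. Under general TCP behavior, "refused" means the master host answered but nothing was listening on that port, while "timeout" means no answer came back at all, which usually points to something on the path dropping packets.

```python
import socket
from urllib.parse import urlparse


def parse_master_url(url):
    """Split a standalone master URL such as 'spark://host:7077' into (host, port)."""
    parsed = urlparse(url)
    if parsed.scheme != "spark" or parsed.hostname is None:
        raise ValueError(f"not a spark:// master URL: {url!r}")
    # 7077 is the default standalone master port when the URL omits one.
    return parsed.hostname, parsed.port or 7077


def probe(host, port, timeout=5.0):
    """Attempt a plain TCP connection (like `telnet host port`) and classify the outcome."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"  # handshake completed: something is listening
    except ConnectionRefusedError:
        return "refused"  # host replied with a reset: nothing listening on that port
    except socket.timeout:
        return "timeout"  # no reply at all: packets dropped or unroutable
    except OSError as exc:
        return f"error: {exc}"  # e.g. name resolution failure or network unreachable
```

On the worker, call `probe(*parse_master_url(url))` with the full master URL (using the real IP, not the masked one shown here). Checking the port explicitly is the same idea as `telnet <host> 7077`.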

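`Connecting To 192.*.*.186...Could not open connection to the host, on port 23: Connect failed` - Mehdi Ben Hamida

`telnet masterhost port` - Rahul Sharma

`>telnet 192.*.*.186 7077` gives `Connecting To 192.*.*.186...Could not open connection to the host, on port 7077: Connect failed` - Mehdi Ben Hamida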
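Since telnet to port 7077 also fails, one way to tell whether Spark or the network path is at fault is to open a plain, non-Spark TCP listener on the master and connect to it from the worker. Below is a minimal Python sketch, assuming Python 3 on the master. `start_listener`, `accept_one`, and the test port 7078 are hypothetical choices made for illustration.

```python
import socket


def start_listener(port, bind_host="0.0.0.0"):
    """Bind a plain TCP listener (not Spark) on all IPv4 interfaces by default."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.bind((bind_host, port))
    srv.listen(1)
    return srv


def accept_one(srv, timeout=30.0):
    """Wait for a single incoming connection and return the peer address.

    Raises socket.timeout if nobody connects within `timeout` seconds.
    """
    srv.settimeout(timeout)
    conn, addr = srv.accept()
    conn.close()
    return addr
```

On the master, run `srv = start_listener(7078)` followed by `accept_one(srv)`, then try `telnet <master-ip> 7078` from the worker. If this connection also fails, the problem lies below Spark (routing, network configuration, or a firewall that is still active). If it succeeds, the issue is more likely specific to port 7077 or to the address the master process is listening on.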