你可以使用2类支持向量机获取分数,如果你将

RAW_OUTPUT 传递到预测中:

bool returnDFVal = (flags & RAW_OUTPUT) != 0;

float result = returnDFVal && class_count == 2 ?

(float)sum : (float)(svm->class_labels.at<int>(k));

那么你需要训练4个不同的2类支持向量机,其中一个与其他类别进行比较。

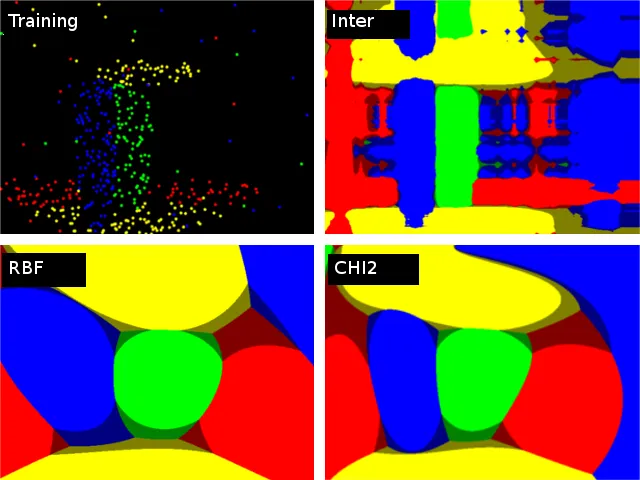

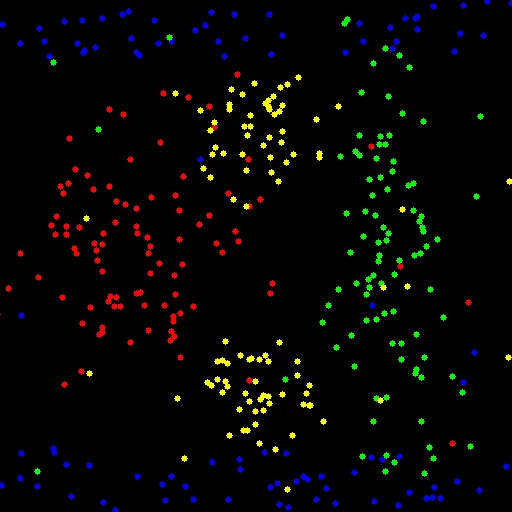

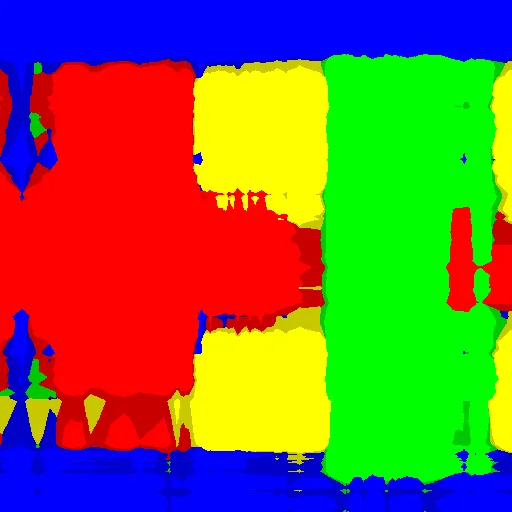

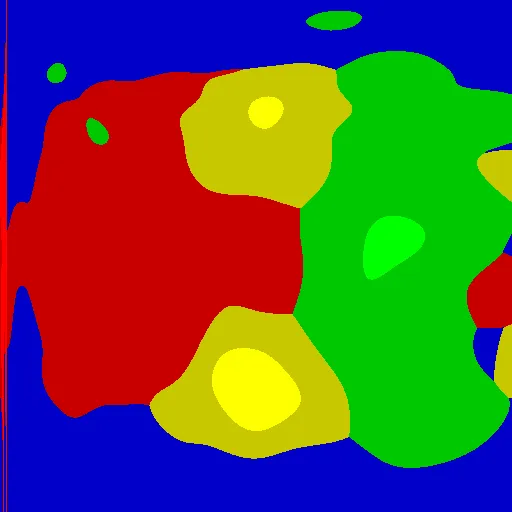

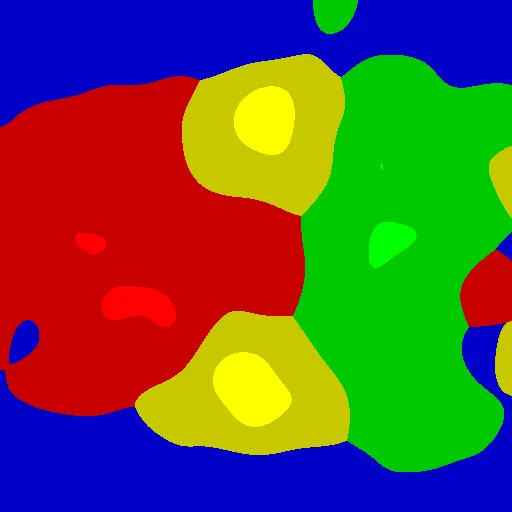

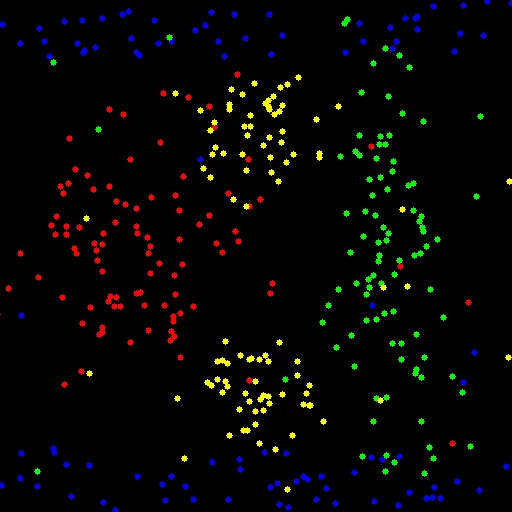

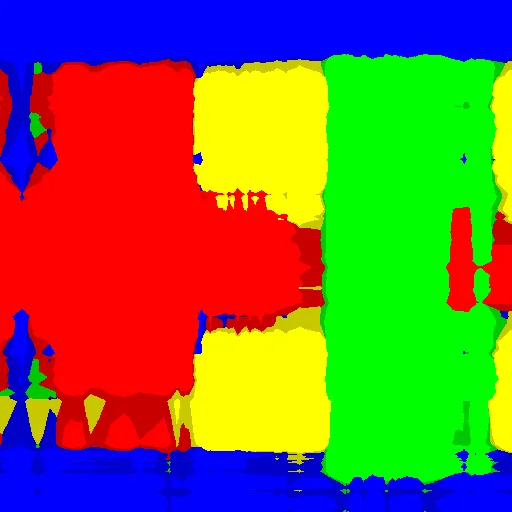

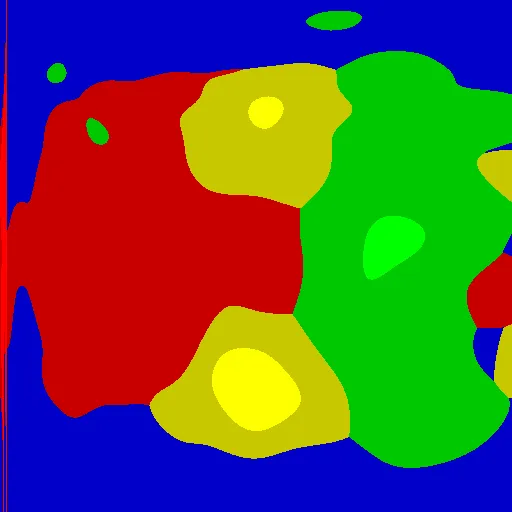

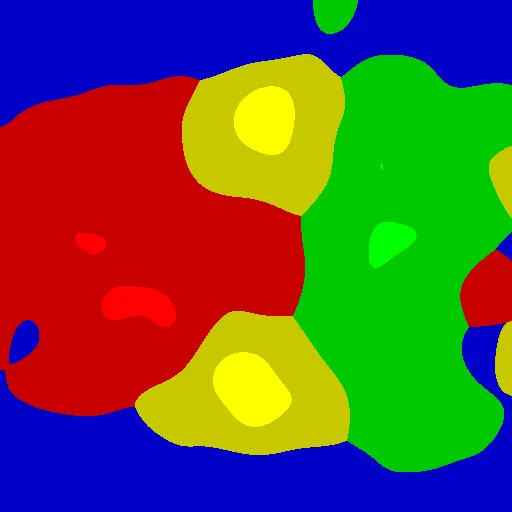

这些是我在这些样本上得到的结果:

INTER与trainAuto

使用trainAuto的CHI2

RBF使用train进行训练(C = 0.1,gamma = 0.001)(在这种情况下,trainAuto会过拟合)

这里是代码。您可以使用布尔变量

AUTO_TRAIN_ENABLED启用

trainAuto,还可以设置

KERNEL以及图像尺寸等。

#include <opencv2/opencv.hpp>

#include <vector>

#include <algorithm>

using namespace std;

using namespace cv;

using namespace cv::ml;

int main()

{

const int WIDTH = 512;

const int HEIGHT = 512;

const int N_SAMPLES_PER_CLASS = 10;

const float NON_LINEAR_SAMPLES_RATIO = 0.1;

const int KERNEL = SVM::CHI2;

const bool AUTO_TRAIN_ENABLED = false;

int N_NON_LINEAR_SAMPLES = N_SAMPLES_PER_CLASS * NON_LINEAR_SAMPLES_RATIO;

int N_LINEAR_SAMPLES = N_SAMPLES_PER_CLASS - N_NON_LINEAR_SAMPLES;

vector<Scalar> colors{Scalar(255,0,0), Scalar(0,255,0), Scalar(0,0,255), Scalar(0,255,255)};

vector<Vec3b> colorsv{ Vec3b(255, 0, 0), Vec3b(0, 255, 0), Vec3b(0, 0, 255), Vec3b(0, 255, 255) };

vector<Vec3b> colorsv_shaded{ Vec3b(200, 0, 0), Vec3b(0, 200, 0), Vec3b(0, 0, 200), Vec3b(0, 200, 200) };

Mat1f data(4 * N_SAMPLES_PER_CLASS, 2);

Mat1i labels(4 * N_SAMPLES_PER_CLASS, 1);

RNG rng(0);

Mat1f class1 = data.rowRange(0, 0.5 * N_LINEAR_SAMPLES);

Mat1f x1 = class1.colRange(0, 1);

Mat1f y1 = class1.colRange(1, 2);

rng.fill(x1, RNG::UNIFORM, Scalar(1), Scalar(WIDTH));

rng.fill(y1, RNG::UNIFORM, Scalar(1), Scalar(HEIGHT / 8));

class1 = data.rowRange(0.5 * N_LINEAR_SAMPLES, 1 * N_LINEAR_SAMPLES);

x1 = class1.colRange(0, 1);

y1 = class1.colRange(1, 2);

rng.fill(x1, RNG::UNIFORM, Scalar(1), Scalar(WIDTH));

rng.fill(y1, RNG::UNIFORM, Scalar(7*HEIGHT / 8), Scalar(HEIGHT));

class1 = data.rowRange(N_LINEAR_SAMPLES, 1 * N_SAMPLES_PER_CLASS);

x1 = class1.colRange(0, 1);

y1 = class1.colRange(1, 2);

rng.fill(x1, RNG::UNIFORM, Scalar(1), Scalar(WIDTH));

rng.fill(y1, RNG::UNIFORM, Scalar(1), Scalar(HEIGHT));

Mat1f class2 = data.rowRange(N_SAMPLES_PER_CLASS, N_SAMPLES_PER_CLASS + N_LINEAR_SAMPLES);

Mat1f x2 = class2.colRange(0, 1);

Mat1f y2 = class2.colRange(1, 2);

rng.fill(x2, RNG::NORMAL, Scalar(3 * WIDTH / 4), Scalar(WIDTH/16));

rng.fill(y2, RNG::NORMAL, Scalar(HEIGHT / 2), Scalar(HEIGHT/4));

class2 = data.rowRange(N_SAMPLES_PER_CLASS + N_LINEAR_SAMPLES, 2 * N_SAMPLES_PER_CLASS);

x2 = class2.colRange(0, 1);

y2 = class2.colRange(1, 2);

rng.fill(x2, RNG::UNIFORM, Scalar(1), Scalar(WIDTH));

rng.fill(y2, RNG::UNIFORM, Scalar(1), Scalar(HEIGHT));

Mat1f class3 = data.rowRange(2 * N_SAMPLES_PER_CLASS, 2 * N_SAMPLES_PER_CLASS + N_LINEAR_SAMPLES);

Mat1f x3 = class3.colRange(0, 1);

Mat1f y3 = class3.colRange(1, 2);

rng.fill(x3, RNG::NORMAL, Scalar(WIDTH / 4), Scalar(WIDTH/8));

rng.fill(y3, RNG::NORMAL, Scalar(HEIGHT / 2), Scalar(HEIGHT/8));

class3 = data.rowRange(2*N_SAMPLES_PER_CLASS + N_LINEAR_SAMPLES, 3 * N_SAMPLES_PER_CLASS);

x3 = class3.colRange(0, 1);

y3 = class3.colRange(1, 2);

rng.fill(x3, RNG::UNIFORM, Scalar(1), Scalar(WIDTH));

rng.fill(y3, RNG::UNIFORM, Scalar(1), Scalar(HEIGHT));

Mat1f class4 = data.rowRange(3 * N_SAMPLES_PER_CLASS, 3 * N_SAMPLES_PER_CLASS + 0.5 * N_LINEAR_SAMPLES);

Mat1f x4 = class4.colRange(0, 1);

Mat1f y4 = class4.colRange(1, 2);

rng.fill(x4, RNG::NORMAL, Scalar(WIDTH / 2), Scalar(WIDTH / 16));

rng.fill(y4, RNG::NORMAL, Scalar(HEIGHT / 4), Scalar(HEIGHT / 16));

class4 = data.rowRange(3 * N_SAMPLES_PER_CLASS + 0.5 * N_LINEAR_SAMPLES, 3 * N_SAMPLES_PER_CLASS + N_LINEAR_SAMPLES);

x4 = class4.colRange(0, 1);

y4 = class4.colRange(1, 2);

rng.fill(x4, RNG::NORMAL, Scalar(WIDTH / 2), Scalar(WIDTH / 16));

rng.fill(y4, RNG::NORMAL, Scalar(3 * HEIGHT / 4), Scalar(HEIGHT / 16));

class4 = data.rowRange(3 * N_SAMPLES_PER_CLASS + N_LINEAR_SAMPLES, 4 * N_SAMPLES_PER_CLASS);

x4 = class4.colRange(0, 1);

y4 = class4.colRange(1, 2);

rng.fill(x4, RNG::UNIFORM, Scalar(1), Scalar(WIDTH));

rng.fill(y4, RNG::UNIFORM, Scalar(1), Scalar(HEIGHT));

labels.rowRange(0*N_SAMPLES_PER_CLASS, 1*N_SAMPLES_PER_CLASS).setTo(1);

labels.rowRange(1*N_SAMPLES_PER_CLASS, 2*N_SAMPLES_PER_CLASS).setTo(2);

labels.rowRange(2*N_SAMPLES_PER_CLASS, 3*N_SAMPLES_PER_CLASS).setTo(3);

labels.rowRange(3*N_SAMPLES_PER_CLASS, 4*N_SAMPLES_PER_CLASS).setTo(4);

Mat3b samples(HEIGHT, WIDTH, Vec3b(0,0,0));

for (int i = 0; i < labels.rows; ++i)

{

circle(samples, Point(data(i, 0), data(i, 1)), 3, colors[labels(i,0) - 1], CV_FILLED);

}

Ptr<SVM> svm1 = SVM::create();

svm1->setType(SVM::C_SVC);

svm1->setKernel(KERNEL);

Mat1i labels1 = (labels != 1) / 255;

if (AUTO_TRAIN_ENABLED)

{

Ptr<TrainData> td1 = TrainData::create(data, ROW_SAMPLE, labels1);

svm1->trainAuto(td1);

}

else

{

svm1->setC(0.1);

svm1->setGamma(0.001);

svm1->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER, (int)1e7, 1e-6));

svm1->train(data, ROW_SAMPLE, labels1);

}

Ptr<SVM> svm2 = SVM::create();

svm2->setType(SVM::C_SVC);

svm2->setKernel(KERNEL);

Mat1i labels2 = (labels != 2) / 255;

if (AUTO_TRAIN_ENABLED)

{

Ptr<TrainData> td2 = TrainData::create(data, ROW_SAMPLE, labels2);

svm2->trainAuto(td2);

}

else

{

svm2->setC(0.1);

svm2->setGamma(0.001);

svm2->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER, (int)1e7, 1e-6));

svm2->train(data, ROW_SAMPLE, labels2);

}

Ptr<SVM> svm3 = SVM::create();

svm3->setType(SVM::C_SVC);

svm3->setKernel(KERNEL);

Mat1i labels3 = (labels != 3) / 255;

if (AUTO_TRAIN_ENABLED)

{

Ptr<TrainData> td3 = TrainData::create(data, ROW_SAMPLE, labels3);

svm3->trainAuto(td3);

}

else

{

svm3->setC(0.1);

svm3->setGamma(0.001);

svm3->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER, (int)1e7, 1e-6));

svm3->train(data, ROW_SAMPLE, labels3);

}

Ptr<SVM> svm4 = SVM::create();

svm4->setType(SVM::C_SVC);

svm4->setKernel(KERNEL);

Mat1i labels4 = (labels != 4) / 255;

if (AUTO_TRAIN_ENABLED)

{

Ptr<TrainData> td4 = TrainData::create(data, ROW_SAMPLE, labels4);

svm4->trainAuto(td4);

}

else

{

svm4->setC(0.1);

svm4->setGamma(0.001);

svm4->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER, (int)1e7, 1e-6));

svm4->train(data, ROW_SAMPLE, labels4);

}

Mat3b regions(HEIGHT, WIDTH);

Mat1f R(HEIGHT, WIDTH);

Mat1f R1(HEIGHT, WIDTH);

Mat1f R2(HEIGHT, WIDTH);

Mat1f R3(HEIGHT, WIDTH);

Mat1f R4(HEIGHT, WIDTH);

for (int r = 0; r < HEIGHT; ++r)

{

for (int c = 0; c < WIDTH; ++c)

{

Mat1f sample = (Mat1f(1,2) << c, r);

vector<float> responses(4);

responses[0] = svm1->predict(sample, noArray(), StatModel::RAW_OUTPUT);

responses[1] = svm2->predict(sample, noArray(), StatModel::RAW_OUTPUT);

responses[2] = svm3->predict(sample, noArray(), StatModel::RAW_OUTPUT);

responses[3] = svm4->predict(sample, noArray(), StatModel::RAW_OUTPUT);

int best_class = distance(responses.begin(), max_element(responses.begin(), responses.end()));

float best_response = responses[best_class];

R(r,c) = best_response;

R1(r, c) = responses[0];

R2(r, c) = responses[1];

R3(r, c) = responses[2];

R4(r, c) = responses[3];

if (best_response >= 0) {

regions(r, c) = colorsv[best_class];

}

else {

regions(r, c) = colorsv_shaded[best_class];

}

}

}

imwrite("svm_samples.png", samples);

imwrite("svm_x.png", regions);

imshow("Samples", samples);

imshow("Regions", regions);

waitKey();

return 0;

}