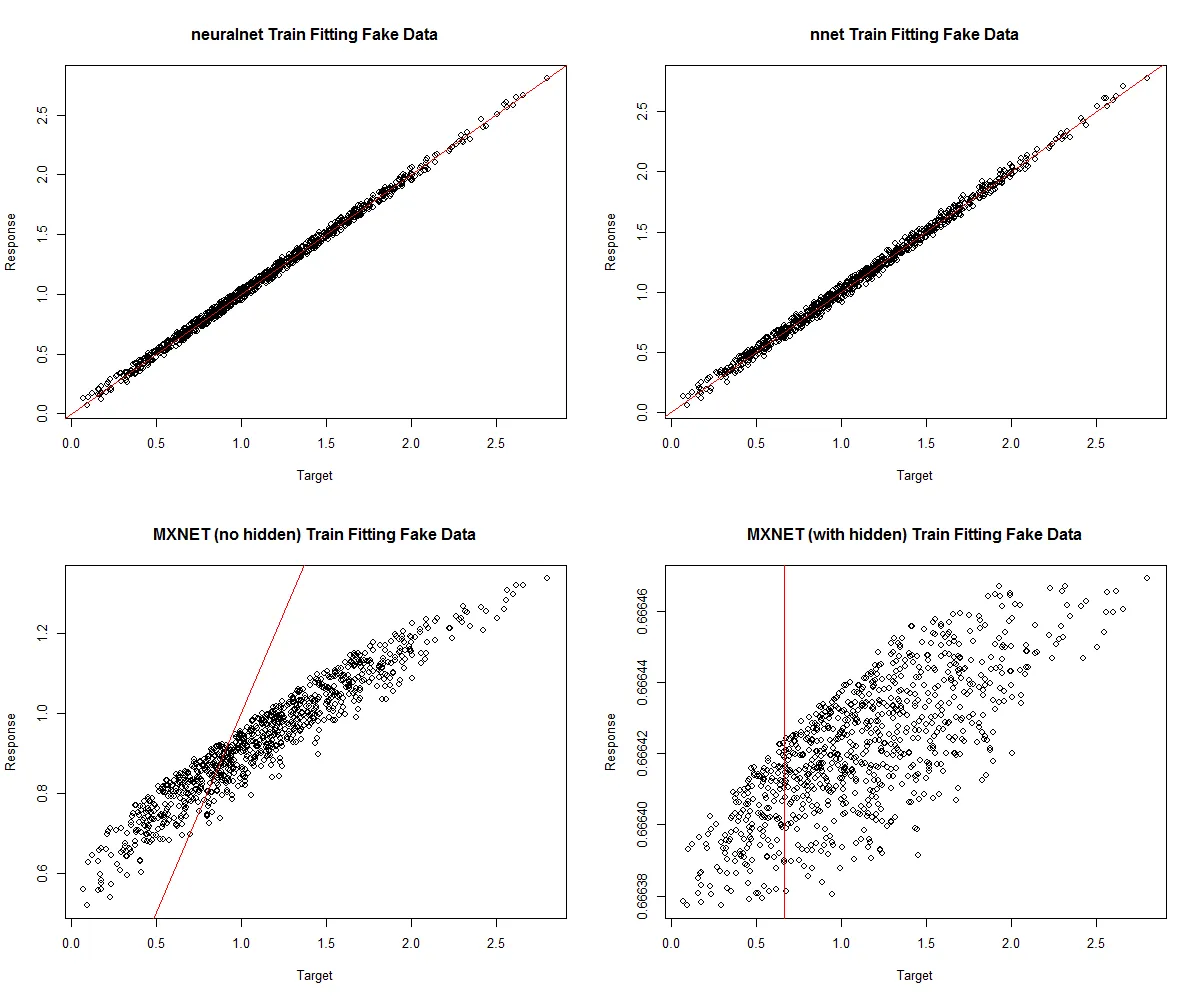

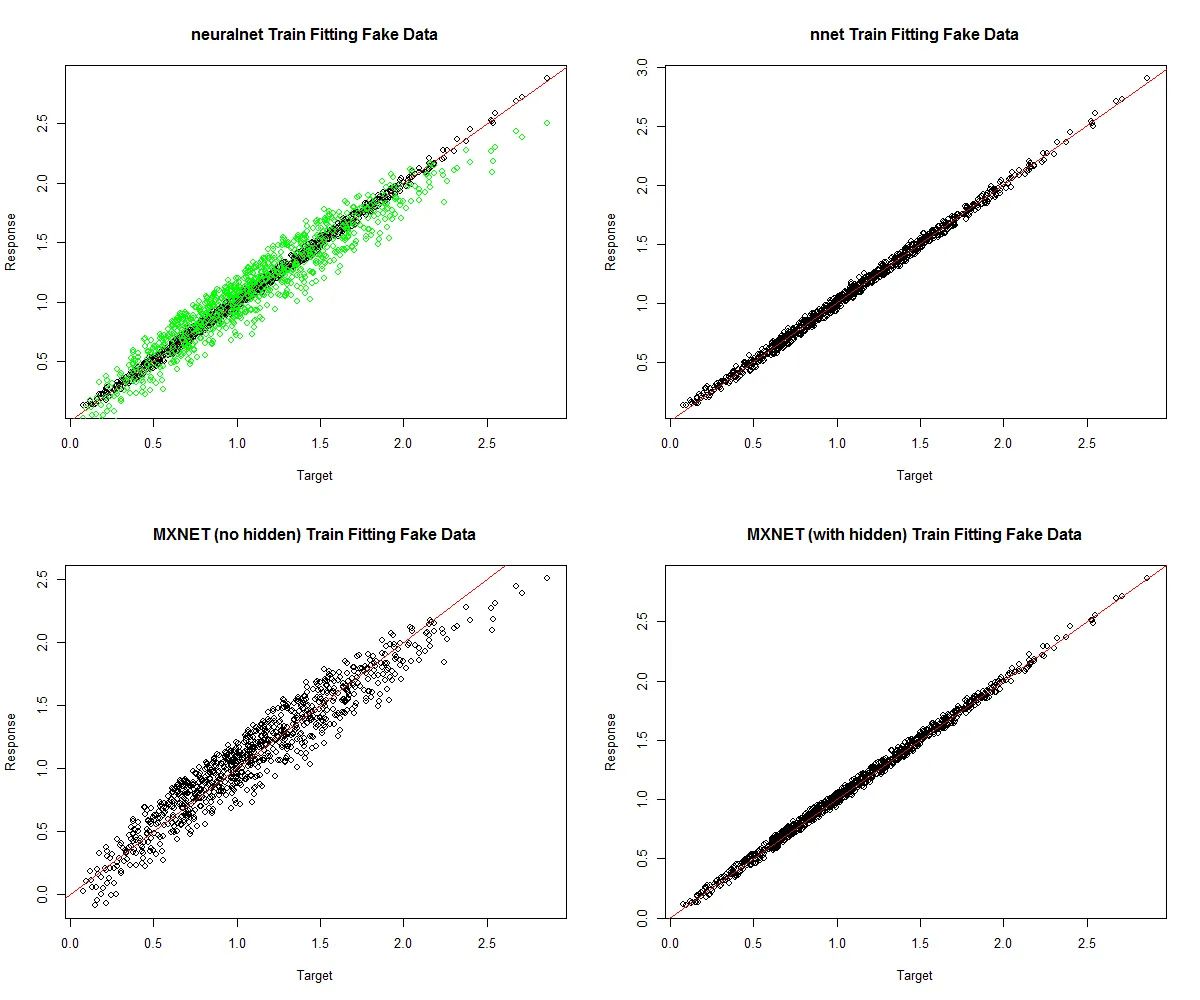

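I have been unable to get reasonable performance from mxnet's LinearRegressionOutput layer.

The self-contained example below attempts to regress a simple polynomial function (y = x1 + x2^2 + x3^3) with a small amount of random noise added.

It uses the mxnet regression example from the five-minute neural network tutorial (the URL is in the code comments below), as well as a slightly more complex network with a hidden layer.

The example also trains regression networks with the neuralnet and nnet packages, which, as the plots show, perform better.

I know the usual answer to a poorly performing network is hyperparameter tuning, but I have tried a range of values without any improvement in performance. So I have the following questions: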

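- Is there an error in my mxnet regression implementation?

- Does anyone have experience that could help me get reasonable performance from mxnet on a simple regression problem like the one considered here?

- Does anyone else have an mxnet regression example that performs well?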
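For reference, the kind of learning-rate sweep mentioned above can be sketched without mxnet. This is only a minimal base-R sketch under stated assumptions, not the mxnet optimizer: it runs plain mini-batch SGD (no momentum) on a linear model, using data generated the same way as in the example below. The helper `sgd_rmse`, the epoch count, and the candidate rates are hypothetical choices for illustration, and whether the result carries over to `mx.model.FeedForward.create` is an assumption.

```r
# Base-R sketch (NOT mxnet): mini-batch SGD on a linear model,
# used only to compare the effect of different learning rates.
set.seed(0)
n <- 1000
x <- cbind(1, matrix(runif(n * 3), ncol = 3))        # intercept column + x1..x3
y <- x[, 2] + x[, 3]^2 + x[, 4]^3 + 0.1 * runif(n)   # same target as in the example

# Hypothetical helper: train with a given learning rate, return the training RMSE
sgd_rmse <- function(lr, epochs = 50, batch = 20) {
  w <- rep(0, ncol(x))
  for (e in seq_len(epochs)) {
    idx <- sample(n)                                  # reshuffle every epoch
    for (s in seq(1, n, by = batch)) {
      b  <- idx[s:min(s + batch - 1, n)]
      xb <- x[b, , drop = FALSE]
      # gradient of the mean squared error over the batch (up to a factor of 2)
      grad <- crossprod(xb, xb %*% w - y[b]) / length(b)
      w <- w - lr * as.vector(grad)
    }
  }
  sqrt(mean((x %*% w - y)^2))
}

rates <- c(2e-6, 1e-3, 1e-1)                          # illustrative values only
rmse  <- sapply(rates, sgd_rmse)
print(data.frame(learning.rate = rates, rmse = rmse))
```

Comparing the rows of the printed table gives a rough idea of whether the step size, rather than the network definition, is what limits the fit in this plain-SGD setting.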

My setup is as follows:

MXNet version: 0.7

R `sessionInfo()`: R version 3.3.2 (2016-10-31)

Platform: x86_64-w64-mingw32/x64 (64-bit)

Running under: Windows 7 x64 (build 7601) Service Pack 1

Poor regression results from mxnet:

As produced by this reproducible example:

## SIMPLE REGRESSION PROBLEM
# Check mxnet out-of-the-box performance VS neuralnet, and caret/nnet
library(mxnet)
library(neuralnet)
library(nnet)
library(caret)
library(tictoc)
library(reshape)

# Data definitions
nObservations <- 1000
noiseLvl <- 0.1

# Network config
nHidden <- 3
learnRate <- 2e-6
momentum <- 0.9
batchSize <- 20
nRound <- 1000
verbose <- FALSE
array.layout = "rowmajor"

# GENERATE DATA:
df <- data.frame(x1=runif(nObservations),
                 x2=runif(nObservations),
                 x3=runif(nObservations))
df$y <- df$x1 + df$x2^2 + df$x3^3 + noiseLvl*runif(nObservations)

# normalize data columns
# df <- scale(df)

# Separate data into train/test
test.ind = seq(1, nObservations, 10)    # 1 in 10 samples for testing
train.x = data.matrix(df[-test.ind, -which(colnames(df) %in% c("y"))])
train.y = df[-test.ind, "y"]
test.x = data.matrix(df[test.ind, -which(colnames(df) %in% c("y"))])
test.y = df[test.ind, "y"]

# Define mxnet network, following 5-minute regression example from here:
# http://mxnet-tqchen.readthedocs.io/en/latest//packages/r/fiveMinutesNeuralNetwork.html#regression
data <- mx.symbol.Variable("data")
label <- mx.symbol.Variable("label")
fc1 <- mx.symbol.FullyConnected(data, num_hidden=1, name="fc1")
lro1 <- mx.symbol.LinearRegressionOutput(data=fc1, label=label, name="lro")

# Train MXNET model
mx.set.seed(0)
tic("mxnet training 1")
mxModel1 <- mx.model.FeedForward.create(lro1, X=train.x, y=train.y,
                                        eval.data=list(data=test.x, label=test.y),
                                        ctx=mx.cpu(), num.round=nRound,
                                        array.batch.size=batchSize,
                                        learning.rate=learnRate, momentum=momentum,
                                        eval.metric=mx.metric.rmse,
                                        verbose=FALSE, array.layout=array.layout)
toc()

# Train network with a hidden layer
fc1 <- mx.symbol.FullyConnected(data, num_hidden=nHidden, name="fc1")
tanh1 <- mx.symbol.Activation(fc1, act_type="tanh", name="tanh1")
fc2 <- mx.symbol.FullyConnected(tanh1, num_hidden=1, name="fc2")
lro2 <- mx.symbol.LinearRegressionOutput(data=fc2, label=label, name="lro")
tic("mxnet training 2")
mxModel2 <- mx.model.FeedForward.create(lro2, X=train.x, y=train.y,
                                        eval.data=list(data=test.x, label=test.y),
                                        ctx=mx.cpu(), num.round=nRound,
                                        array.batch.size=batchSize,
                                        learning.rate=learnRate, momentum=momentum,
                                        eval.metric=mx.metric.rmse,
                                        verbose=FALSE, array.layout=array.layout)
toc()

# Train neuralnet model
set.seed(0)    # neuralnet uses R's RNG; mx.set.seed() only seeds mxnet
tic("neuralnet training")
nnModel <- neuralnet(y~x1+x2+x3, data=df[-test.ind, ], hidden=c(nHidden),
                     linear.output=TRUE, stepmax=1e6)
toc()

# Train nnet model
set.seed(0)    # nnet also uses R's RNG for its initial weights
tic("nnet training")
nnetModel <- nnet(y~x1+x2+x3, data=df[-test.ind, ], size=nHidden, trace=FALSE,
                  linout=TRUE)
toc()

# Check response VS targets on training data:
par(mfrow=c(2,2))
plot(train.y, compute(nnModel, train.x)$net.result,
     main="neuralnet Train Fitting Fake Data", xlab="Target", ylab="Response")
abline(0,1, col="red")

plot(train.y, predict(nnetModel, train.x),
     main="nnet Train Fitting Fake Data", xlab="Target", ylab="Response")
abline(0,1, col="red")

plot(train.y, predict(mxModel1, train.x, array.layout=array.layout),
     main="MXNET (no hidden) Train Fitting Fake Data", xlab="Target",
     ylab="Response")
abline(0,1, col="red")

plot(train.y, predict(mxModel2, train.x, array.layout=array.layout),
     main="MXNET (with hidden) Train Fitting Fake Data", xlab="Target",
     ylab="Response")
abline(0,1, col="red")
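
The plots above only compare the fits visually. A numeric comparison on the held-out samples could be sketched as follows. The `rmse` helper is hypothetical (it is not part of mxnet, neuralnet, or nnet), and the commented usage lines assume the model objects and `test.x` / `test.y` created earlier in this script.

```r
# Hypothetical helper: root-mean-square error between predictions and targets
rmse <- function(pred, target) {
  pred <- as.vector(pred)   # predictions may come back as a 1 x n or n x 1 matrix
  stopifnot(length(pred) == length(target))
  sqrt(mean((pred - target)^2))
}

# Toy check with made-up numbers
print(rmse(c(1, 2, 3), c(1, 2, 5)))

# Possible use with the models trained above (needs the objects from this script):
# rmse(predict(mxModel1, test.x, array.layout=array.layout), test.y)
# rmse(predict(mxModel2, test.x, array.layout=array.layout), test.y)
# rmse(compute(nnModel, test.x)$net.result, test.y)
# rmse(predict(nnetModel, test.x), test.y)
```

Reporting one number per model on the test split makes it easier to tell whether a change in hyperparameters actually helped.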