Do you know how to use speech recognition (SpeechRecognizer) in Jetpack Compose?

Something like this, but in Compose.

I followed the steps in this video:

- I added the following permissions to the manifest:

    <uses-permission android:name="android.permission.INTERNET"/>
    <uses-permission android:name="android.permission.RECORD_AUDIO"/>

- I wrote the following code in MainActivity:

    class MainActivity : ComponentActivity() {
        override fun onCreate(savedInstanceState: Bundle?) {
            super.onCreate(savedInstanceState)
            setContent {
                PageUi()
            }
        }
    }

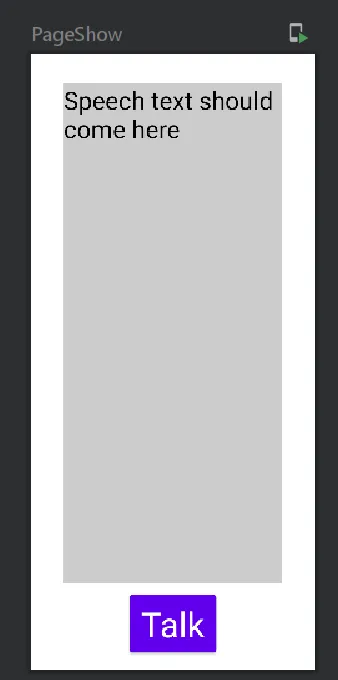

    @Composable
    fun PageUi() {
        val context = LocalContext.current
        val talk by remember { mutableStateOf("Speech text should come here") }

        Column(
            modifier = Modifier.fillMaxSize(),
            horizontalAlignment = Alignment.CenterHorizontally,
            verticalArrangement = Arrangement.Center
        ) {
            Text(
                text = talk,
                style = MaterialTheme.typography.h4,
                modifier = Modifier
                    .fillMaxSize(0.85f)
                    .padding(16.dp)
                    .background(Color.LightGray)
            )
            Button(onClick = { askSpeechInput(context) }) {
                Text(
                    text = "Talk", style = MaterialTheme.typography.h3
                )
            }
        }
    }

    fun askSpeechInput(context: Context) {
        if (!SpeechRecognizer.isRecognitionAvailable(context)) {
            Toast.makeText(context, "Speech not available", Toast.LENGTH_SHORT).show()
        } else {
            val i = Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH)
            i.putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL, RecognizerIntent.LANGUAGE_MODEL_FREE_FORM)
            i.putExtra(RecognizerIntent.EXTRA_LANGUAGE, Locale.getDefault())
            i.putExtra(RecognizerIntent.EXTRA_PROMPT, "Talk")

            //startActivityForResult(MainActivity(),i,102)
        }
    }

    @Preview(showBackground = true)
    @Composable
    fun PageShow() {
        PageUi()
    }

But I don't know how to use startActivityForResult in Compose, or how to complete the rest.
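
For reference, one common way to replace startActivityForResult in Compose is `rememberLauncherForActivityResult` from the `androidx.activity:activity-compose` artifact. The following is only a minimal sketch under some assumptions: the composable name `SpeechInputSketch` is made up for illustration, the layout is stripped down compared to `PageUi`, and the language is passed as a language tag string (`Locale.getDefault().toLanguageTag()`) rather than a `Locale` object, since `EXTRA_LANGUAGE` is documented as a string tag. Note that `talk` has to be a `var` here so the result callback can update it.

```kotlin
import android.app.Activity
import android.content.Intent
import android.speech.RecognizerIntent
import androidx.activity.compose.rememberLauncherForActivityResult
import androidx.activity.result.contract.ActivityResultContracts
import androidx.compose.foundation.layout.Column
import androidx.compose.material.Button
import androidx.compose.material.Text
import androidx.compose.runtime.Composable
import androidx.compose.runtime.getValue
import androidx.compose.runtime.mutableStateOf
import androidx.compose.runtime.remember
import androidx.compose.runtime.setValue
import java.util.Locale

// Hypothetical composable name, used only for this sketch.
@Composable
fun SpeechInputSketch() {
    // `var` instead of `val`, so the callback below can assign a new value.
    var talk by remember { mutableStateOf("Speech text should come here") }

    // Takes the place of startActivityForResult / onActivityResult.
    val launcher = rememberLauncherForActivityResult(
        ActivityResultContracts.StartActivityForResult()
    ) { result ->
        if (result.resultCode == Activity.RESULT_OK) {
            // The recognizer returns its candidate transcriptions as a string list.
            val matches = result.data
                ?.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS)
            // Keep the previous text if nothing was recognized.
            talk = matches?.firstOrNull() ?: talk
        }
    }

    Column {
        Text(text = talk)
        Button(onClick = {
            val intent = Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH).apply {
                putExtra(
                    RecognizerIntent.EXTRA_LANGUAGE_MODEL,
                    RecognizerIntent.LANGUAGE_MODEL_FREE_FORM
                )
                putExtra(
                    RecognizerIntent.EXTRA_LANGUAGE,
                    Locale.getDefault().toLanguageTag()
                )
                putExtra(RecognizerIntent.EXTRA_PROMPT, "Talk")
            }
            // Opens the system speech dialog; the result arrives in the callback above.
            launcher.launch(intent)
        }) {
            Text("Talk")
        }
    }
}
```

Calling `SpeechInputSketch()` from `setContent { ... }` should be enough to try it. On a device with no activity that handles `ACTION_RECOGNIZE_SPEECH`, `launcher.launch(intent)` can throw `ActivityNotFoundException`, so a real app would probably want to guard that call.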

Also, so far, whenever I test it on my phone (or the emulator), it always ends with the toast message!
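
A possible explanation for the toast, offered as a guess rather than a diagnosis: on Android 11 (API 30) and later, package visibility filtering can hide the recognition service from the app, so `SpeechRecognizer.isRecognitionAvailable(context)` returns false even though a recognizer is installed. The usual remedy is to declare a `<queries>` element in the manifest containing an `<intent>` whose `<action>` has `android:name="android.speech.RecognitionService"`. On an emulator image without a speech service installed, the check will fail regardless. The helper below is a small sketch (the function name `hasVisibleRecognitionService` is made up) that performs roughly the same lookup, which may help confirm whether the service is visible to the app.

```kotlin
import android.content.Context
import android.content.Intent
import android.speech.RecognitionService

// Hypothetical helper: returns true if at least one speech recognition
// service is visible to this app through the PackageManager.
fun hasVisibleRecognitionService(context: Context): Boolean {
    // RecognitionService.SERVICE_INTERFACE is "android.speech.RecognitionService".
    // This overload of queryIntentServices is deprecated since API 33 but still available.
    val services = context.packageManager.queryIntentServices(
        Intent(RecognitionService.SERVICE_INTERFACE),
        0
    )
    return services.isNotEmpty()
}
```

If this returns false on a phone that clearly has a voice recognizer, the missing `<queries>` declaration is the first thing I would check.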