我正在开发一个CUDA应用程序,并尝试使用cudaMemcpyAsync和CUDA核心的异步行为来在GPU和CPU之间共享工作。然而,我遇到了并发性方面的问题。它能够重叠Host到Device数据传输,但是核心执行不能并发。基本上等待CPU完成并调用同步函数,然后内核开始在设备上执行。我无法理解这种行为,难道内核不一直异步于CPU线程吗?我的GPU是Nvidia Geforce GT 550m(Fermi架构,有1个Copy Engine和1个Compute Engine)。我使用CUDA 6.0和Nsight 4.0。下面是代码:

#include "cuda_runtime.h"

#include "device_launch_parameters.h"

#include <stdlib.h>

#include <stdio.h>

#include <iostream>

#include <thread>

#include <chrono>

using namespace std;

struct point4D

{

float x;

float y;

float z;

float w;

};

void heterogenous_1way_plus(point4D * h_ptrData, unsigned int h_dataSize, point4D * h_out, point4D pB, point4D pC);

bool correct_output(point4D * data, unsigned int size);

void flush_buffer(point4D * data, unsigned int size);

void initialize_input(point4D *& data, unsigned int size);

void cudaCheckError(cudaError_t cudaStatus, char* err);

// Implements cross product for 4D point on the GPU-side.

__global__ void gpu_kernel(point4D * d_ptrData, point4D * d_out, point4D pB, point4D pC)

{

int index = blockIdx.x * blockDim.x + threadIdx.x;

point4D pA = d_ptrData[index];

point4D out; out.x = 0; out.y = 0; out.z = 0; out.w = 0;

out.x += pA.y*(pB.z*pC.w - pC.z*pB.w) - pA.z*(pB.y*pC.w - pC.y*pB.w) + pA.w*(pB.y*pC.z - pC.y*pB.z);

out.y += -pA.x*(pB.z*pC.w - pC.z*pB.w) + pA.z*(pB.x*pC.w - pC.x*pB.w) - pA.w*(pB.x*pC.z - pC.x*pB.z);

out.z += pA.x*(pB.y*pC.w - pC.y*pB.w) - pA.y*(pB.x*pC.w - pC.x*pB.w) + pA.w*(pB.x*pC.y - pC.x*pB.y);

out.w += -pA.x*(pB.y*pC.z - pC.y*pB.z) + pA.y*(pB.x*pC.z - pC.x*pB.z) - pA.z*(pB.x*pC.y - pC.x*pB.y);

d_out[index] = out;

}

// Implements cross product for 4D point on the CPU-size.

void cpu_function(point4D * h_ptrData, unsigned int h_dataSize, point4D * h_out, point4D pB, point4D pC)

{

for(unsigned int index = 0; index < h_dataSize; index++)

{

h_out[index].x = 0; h_out[index].y = 0; h_out[index].z = 0; h_out[index].w = 0;

point4D pA = h_ptrData[index];

h_out[index].x += pA.y*(pB.z*pC.w - pC.z*pB.w) - pA.z*(pB.y*pC.w - pC.y*pB.w) + pA.w*(pB.y*pC.z - pC.y*pB.z);

h_out[index].y += -pA.x*(pB.z*pC.w - pC.z*pB.w) + pA.z*(pB.x*pC.w - pC.x*pB.w) - pA.w*(pB.x*pC.z - pC.x*pB.z);

h_out[index].z += pA.x*(pB.y*pC.w - pC.y*pB.w) - pA.y*(pB.x*pC.w - pC.x*pB.w) + pA.w*(pB.x*pC.y - pC.x*pB.y);

h_out[index].w += -pA.x*(pB.y*pC.z - pC.y*pB.z) + pA.y*(pB.x*pC.z - pC.x*pB.z) - pA.z*(pB.x*pC.y - pC.x*pB.y);

}

}

int main(int argc, char *argv[])

{

int devID;

cudaDeviceProp deviceProps;

printf("[%s] - Starting...\n", argv[0]);

int device_count;

cudaCheckError(cudaGetDeviceCount(&device_count), "Couldn't get device count!");

if (device_count == 0)

{

fprintf(stderr, "gpuDeviceInit() CUDA error: no devices supporting CUDA.\n");

exit(EXIT_FAILURE);

}

devID = 0;

cudaCheckError(cudaSetDevice(devID), "Couldn't set device!");

cudaCheckError(cudaGetDeviceProperties(&deviceProps, devID), "Couldn't get Device Properties");

printf("GPU Device %d: \"%s\" with compute capability %d.%d\n\n", devID, deviceProps.name, deviceProps.major, deviceProps.minor);

cudaDeviceReset();

const unsigned int DATA_SIZE = 30000000;

bool bFinalResults = true;

// Input Data Initialization

point4D pointB;

pointB.x = 1; pointB.y = 1; pointB.z = 0; pointB.w = 0;

point4D pointC;

pointC.x = 1; pointC.y = 1; pointC.z = 1; pointC.w = 0;

point4D * data = (point4D*) malloc(DATA_SIZE * sizeof(point4D));

point4D * out_points = (point4D*) malloc(DATA_SIZE * sizeof(point4D));

initialize_input(data, DATA_SIZE);

//

flush_buffer(out_points, DATA_SIZE);

cout << endl << endl;

// 1+way

heterogenous_1way_plus(data, DATA_SIZE, out_points, pointB, pointC);

bFinalResults &= correct_output(out_points, DATA_SIZE); // checking correctness

free(out_points);

free(data);

exit(bFinalResults ? EXIT_SUCCESS : EXIT_FAILURE);

return 0;

}

void heterogenous_1way_plus(point4D * h_ptrData, unsigned int h_dataSize, point4D * h_out, point4D pB, point4D pC)

{

cout << "1-way_plus: STARTS!!!" << endl;

// Run the %25 of the data from CPU, rest will be executed on GPU

unsigned int ratioPercentCPUtoGPU = 25;

unsigned int d_dataSize = (h_dataSize * (100 - ratioPercentCPUtoGPU))/100;

h_dataSize = (h_dataSize * ratioPercentCPUtoGPU)/100;

size_t memorySize = d_dataSize * sizeof(point4D);

cout << "Data Ratio Between CPU and GPU:" << (float)ratioPercentCPUtoGPU/100 << endl;

cout << "CPU will process " << h_dataSize << " data." << endl;

cout << "GPU will process " << d_dataSize << " data." << endl;

// registers host memory as page-locked (required for asynch cudaMemcpyAsync)

cudaCheckError(cudaHostRegister(h_ptrData, memorySize, cudaHostRegisterPortable), "cudaHostRegister failed!");

cudaCheckError(cudaHostRegister(h_out, memorySize, cudaHostRegisterPortable), "cudaHostRegister failed!");

// allocate device memory

point4D * d_in = 0; point4D * d_out = 0;

cudaCheckError(cudaMalloc( (void **)&d_in, memorySize), "cudaMalloc failed!");

cudaCheckError(cudaMalloc( (void **)&d_out, memorySize), "cudaMalloc failed!");

// set kernel launch configuration

dim3 nThreads = dim3(1000,1);

dim3 nBlocks = dim3(d_dataSize / nThreads.x,1);

cout << "GPU Kernel Configuration : " << endl;

cout << "Number of Threads :\t" << nThreads.x << "\t" << nThreads.y << "\t" << nThreads.z << endl;

cout << "Number of Blocks :\t" << nBlocks.x << "\t" << nBlocks.y << "\t" << nBlocks.z << endl;

// create cuda stream

cudaStream_t stream;

cudaCheckError(cudaStreamCreate(&stream), "cudaStreamCreate failed!");

// create cuda event handles

cudaEvent_t start, stop;

cudaCheckError(cudaEventCreate(&start), "cudaEventCreate failed!");

cudaCheckError(cudaEventCreate(&stop), "cudaEventCreate failed!");

// main thread waits for device

cudaCheckError(cudaDeviceSynchronize(), "cudaDeviceSynchronize failed!");

float gpu_time = 0.0f;

cudaEventRecord(start, stream);

cudaMemcpyAsync(d_in, h_ptrData, memorySize, cudaMemcpyHostToDevice, stream);

gpu_kernel<<<nBlocks, nThreads, 0, stream>>>(d_in, d_out, pB, pC);

cudaMemcpyAsync(h_out, d_out, memorySize, cudaMemcpyDeviceToHost, stream);

cudaEventRecord(stop, stream);

// The memory layout of CPU processing starts after GPU's.

cpu_function(h_ptrData + d_dataSize, h_dataSize, h_out + d_dataSize, pB, pC);

cudaCheckError(cudaStreamSynchronize(stream), "cudaStreamSynchronize failed!");

cudaCheckError(cudaEventElapsedTime(&gpu_time, start, stop), "cudaEventElapsedTime failed!");

cudaCheckError(cudaDeviceSynchronize(), "cudaDeviceSynchronize failed!");

// release resources

cudaCheckError(cudaEventDestroy(start), "cudaEventDestroy failed!");

cudaCheckError(cudaEventDestroy(stop), "cudaEventDestroy failed!");

cudaCheckError(cudaHostUnregister(h_ptrData), "cudaHostUnregister failed!");

cudaCheckError(cudaHostUnregister(h_out), "cudaHostUnregister failed!");

cudaCheckError(cudaFree(d_in), "cudaFree failed!");

cudaCheckError(cudaFree(d_out), "cudaFree failed!");

cudaCheckError(cudaStreamDestroy(stream), "cudaStreamDestroy failed!");

cudaDeviceReset();

cout << "Execution of GPU: " << gpu_time << "ms" << endl;

cout << "1-way_plus: ENDS!!!" << endl;

}

// Checks correctness of outputs

bool correct_output(point4D * data, unsigned int size)

{

const static float x = 0, y = 0, z = 0, w = -1;

for (unsigned int i = 0; i < size; i++)

{

if (data[i].x != x || data[i].y != y ||

data[i].z != y || data[i].w != w)

{

printf("Error! data[%d] = [%f, %f, %f, %f], ref = [%f, %f, %f, %f]\n",

i, data[i].x, data[i].y, data[i].z, data[i].w, x, y, z, w);

return 0;

}

}

return 1;

}

// Refresh the output buffer

void flush_buffer(point4D * data, unsigned int size)

{

for(unsigned int i = 0; i < size; i++)

{

data[i].x = 0; data[i].y = 0; data[i].z = 0; data[i].w = 0;

}

}

// Initialize the input data to feed the system for simulation

void initialize_input(point4D *& data, unsigned int size)

{

for(unsigned int idx = 0; idx < size; idx++)

{

point4D* d = &data[idx];

d->x = 1;

d->y = 0;

d->z = 0;

d->w = 0;

}

}

void cudaCheckError(cudaError_t cudaStatus, char* err)

{

if(cudaStatus != cudaSuccess)

{

fprintf(stderr, err);

cudaDeviceReset();

exit(EXIT_FAILURE);

}

}

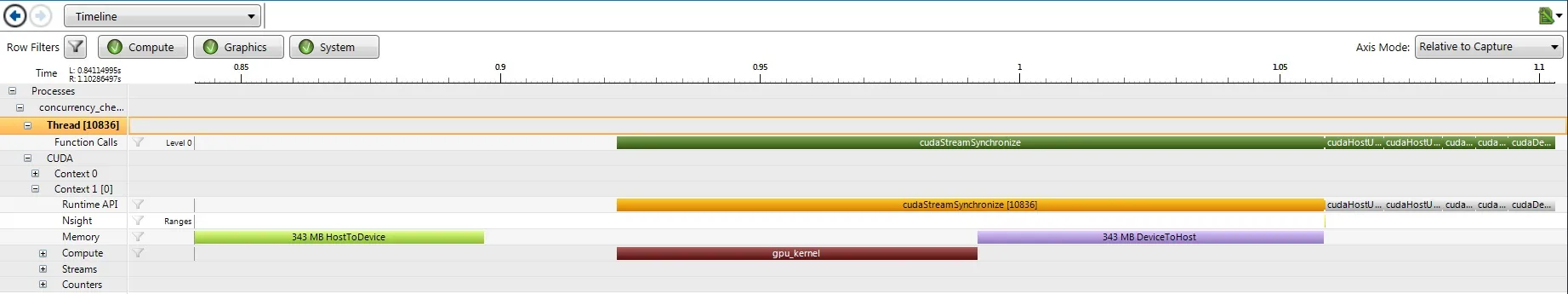

这里是Nsight的截图  :

:

cudaEventRecord()并没有解决任何问题。 - Vemulo