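I am trying to build a machine learning model, but I am having trouble understanding when the encoding should be applied. Below are the steps and functions that reproduce the process I have been following.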

First, I split the dataset into training and test sets:

```python
# Imports
import re
import string

import pandas as pd
from nltk.corpus import stopwords
from nltk.tokenize import RegexpTokenizer
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics import f1_score, precision_score, recall_score, accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.utils import resample  # the resampling utility

# Split into training and test sets
# Testing Count Vectorizer
X = df[['Text']]
y = df['Label']
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=40)

# Return to one DataFrame
training_set = pd.concat([X_train, y_train], axis=1)
```
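With imbalanced classes, passing `stratify=y` to `train_test_split` keeps the class ratio identical in the training and test sets, so the test set still reflects the real imbalance. A minimal sketch on hypothetical toy data (40 rows, one positive for every three negatives):

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical toy data standing in for df (10 positives, 30 negatives)
df = pd.DataFrame({
    "Text": [f"message {i}" for i in range(40)],
    "Label": [1] * 10 + [0] * 30,
})

X = df[["Text"]]
y = df["Label"]

# stratify=y keeps the 1:3 class ratio the same in both splits
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=40, stratify=y
)

print(y_train.mean(), y_test.mean())  # share of positives in each split
```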

Now I (under)sample:

```python
# Separate the classes
spam = training_set[training_set.Label == 1]
not_spam = training_set[training_set.Label == 0]

# Undersample the majority class (without replacement)
undersample = resample(not_spam,
                       replace=False,
                       n_samples=len(spam),  # match the number of samples in the minority class
                       random_state=40)

# Build the new training set
undersample_train = pd.concat([spam, undersample])
```
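For undersampling, `resample` should draw majority-class rows without replacement (`replace=False`); with `replace=True` it performs bootstrap sampling and can pick the same row more than once. A small sketch on a hypothetical training set, checking that the result is balanced and has no duplicated rows:

```python
import pandas as pd
from sklearn.utils import resample

# Hypothetical training set: 3 spam rows, 7 not-spam rows
training_set = pd.DataFrame({
    "Text": [f"text {i}" for i in range(10)],
    "Label": [1, 1, 1, 0, 0, 0, 0, 0, 0, 0],
})

spam = training_set[training_set.Label == 1]
not_spam = training_set[training_set.Label == 0]

# replace=False draws distinct majority rows (true undersampling)
undersample = resample(not_spam, replace=False, n_samples=len(spam), random_state=40)

undersample_train = pd.concat([spam, undersample])
print(undersample_train.Label.value_counts())
```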

Then I apply the chosen algorithm:

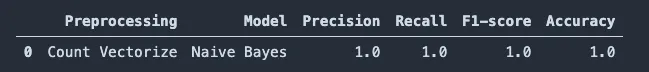

```python
full_result = pd.DataFrame(columns=['Preprocessing', 'Model', 'Precision', 'Recall', 'F1-score', 'Accuracy'])

X, y = BOW(undersample_train)

# DataFrame.append was removed in pandas 2.0; pd.concat does the same job
full_result = pd.concat([full_result, training_naive(X_train, X_test, y_train, y_test, 'Count Vectorize')],
                        ignore_index=True)
```

BOW is defined as follows:

```python
def BOW(data):
    df_temp = data.copy(deep=True)
    df_temp = basic_preprocessing(df_temp)

    count_vectorizer = CountVectorizer(analyzer=fun)
    count_vectorizer.fit(df_temp['Text'])

    list_corpus = df_temp["Text"].tolist()
    list_labels = df_temp["Label"].tolist()

    X = count_vectorizer.transform(list_corpus)
    return X, list_labels
```
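BOW fits a new CountVectorizer every time it is called, so running it once on the training data and again on the test data would give two different vocabularies, i.e. two matrices with different columns. A sketch on hypothetical toy texts contrasting that with fitting once on the training texts and only transforming the test texts:

```python
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical train/test texts
train_texts = ["cheap pills now", "meeting at noon", "cheap offer now"]
test_texts = ["noon offer", "brand new words"]

# Problematic: a separate vectorizer fitted on the test texts has its own vocabulary
X_test_separate = CountVectorizer().fit_transform(test_texts)

# Consistent: learn the vocabulary on the training texts only, then reuse it
vectorizer = CountVectorizer()
X_train = vectorizer.fit_transform(train_texts)
X_test = vectorizer.transform(test_texts)  # same columns as X_train; unseen words are ignored

print(X_train.shape, X_test.shape, X_test_separate.shape)
```

The classifier trained on `X_train` can only score matrices with the same columns, which is why `transform` (not `fit`) is used on the test side.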

basic_preprocessing is defined as follows:

```python
def basic_preprocessing(df):
    df_temp = df.copy(deep=True)
    df_temp = df_temp.rename(index=str, columns={'Clean_Titles_2': 'Text'})
    df_temp.loc[:, 'Text'] = [text_prepare(x) for x in df_temp['Text'].values]

    #le = LabelEncoder()
    #le.fit(df_temp['medical_specialty'])
    #df_temp.loc[:, 'class_label'] = le.transform(df_temp['medical_specialty'])

    tokenizer = RegexpTokenizer(r'\w+')
    df_temp["Tokens"] = df_temp["Text"].apply(tokenizer.tokenize)
    return df_temp
```

text_prepare is the following function:

```python
def text_prepare(text):
    REPLACE_BY_SPACE_RE = re.compile(r'[/(){}\[\]\|@,;]')
    BAD_SYMBOLS_RE = re.compile(r'[^0-9a-z #+_]')
    STOPWORDS = set(stopwords.words('english'))

    text = text.lower()
    text = REPLACE_BY_SPACE_RE.sub(' ', text)  # replace REPLACE_BY_SPACE_RE symbols by space in text
    text = BAD_SYMBOLS_RE.sub('', text)  # delete symbols which are in BAD_SYMBOLS_RE from text

    # delete stopwords from text
    words = text.split()
    i = 0
    while i < len(words):
        if words[i] in STOPWORDS:
            words.pop(i)
        else:
            i += 1
    text = ' '.join(map(str, words))
    return text
```
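A more compact variant of text_prepare could compile the regular expressions once at module level and replace the `while`/`pop` loop with a comprehension. Assumption: a tiny hypothetical stopword set stands in for NLTK's `stopwords.words('english')` so that the sketch runs without downloading NLTK data.

```python
import re

REPLACE_BY_SPACE_RE = re.compile(r"[/(){}\[\]\|@,;]")
BAD_SYMBOLS_RE = re.compile(r"[^0-9a-z #+_]")
# Hypothetical stopword set; the question uses set(stopwords.words('english')) instead
STOPWORDS = {"is", "an", "the", "this", "just", "does", "not"}


def text_prepare(text):
    text = text.lower()
    text = REPLACE_BY_SPACE_RE.sub(" ", text)  # turn separators into spaces
    text = BAD_SYMBOLS_RE.sub("", text)        # drop any remaining unwanted symbols
    # keep only non-stopwords, joined by single spaces
    return " ".join(w for w in text.split() if w not in STOPWORDS)


print(text_prepare("This is just an example/test, C++ #1!"))  # → example test c++ #1
```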

and training_naive:

```python
def training_naive(X_train_naive, X_test_naive, y_train_naive, y_test_naive, preproc):
    clf = MultinomialNB()  # Multinomial Naive Bayes
    clf.fit(X_train_naive, y_train_naive)
    y_pred = clf.predict(X_test_naive)

    # sklearn metrics expect (y_true, y_pred) in that order
    f1 = f1_score(y_test_naive, y_pred, average='weighted')
    pres = precision_score(y_test_naive, y_pred, average='weighted')
    rec = recall_score(y_test_naive, y_pred, average='weighted')
    acc = accuracy_score(y_test_naive, y_pred)

    # One-row result table (DataFrame.append was removed in pandas 2.0)
    res = pd.DataFrame([{'Preprocessing': preproc, 'Model': 'Naive Bayes', 'Precision': pres,
                         'Recall': rec, 'F1-score': f1, 'Accuracy': acc}])
    return res
```
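scikit-learn's metric functions take the true labels first and the predictions second. Passing them the other way round does not raise an error; it silently swaps precision and recall. A small sketch with hypothetical labels (binary averaging for clarity, whereas the question uses `average='weighted'`):

```python
from sklearn.metrics import precision_score, recall_score

# Hypothetical labels: 3 actual positives, the model finds only one of them
y_true = [1, 1, 1, 0]
y_pred = [1, 0, 0, 0]

p_correct = precision_score(y_true, y_pred)  # TP / predicted positives
r_correct = recall_score(y_true, y_pred)     # TP / actual positives

# Swapped arguments: the two numbers trade places
p_swapped = precision_score(y_pred, y_true)
r_swapped = recall_score(y_pred, y_true)

print(p_correct, r_correct)
print(p_swapped, r_swapped)
```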

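As you can see, the order is:

- define text_prepare for text cleaning;
- define basic_preprocessing;
- define BOW;
- split the dataset into training and test sets;
- apply sampling;
- apply the algorithm.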

What I don't understand is how to encode the text correctly so that the algorithm works. My dataset is called df and has these columns:

| Label | Text | Year |
|---|---|---|
| 1 | bla bla bla | 2000 |
| 0 | add some words | 2012 |
| 1 | this is just an example | 1998 |
| 0 | unfortunately the code does not work | 2018 |
| 0 | where should I apply the encoding? | 2000 |
| 0 | What am I missing here? | 2005 |

I must be applying BOW in the wrong place, because I get the following error:

```
ValueError: could not convert string to float: 'Expect a good results if ... '
```
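This error is what scikit-learn raises when a raw text column reaches an estimator that expects numeric features. In the algorithm step, BOW's output is stored in `X, y`, while training_naive is called with `X_train` and `X_test`, which appear to still be the unencoded text DataFrames returned by train_test_split. A minimal reproduction on hypothetical toy data:

```python
import pandas as pd
from sklearn.naive_bayes import MultinomialNB

# Raw text, like the X_train DataFrame returned by train_test_split(df[['Text']], ...)
X_raw = pd.DataFrame({"Text": ["bla bla bla", "add some words"]})
y = [1, 0]

raised = False
try:
    MultinomialNB().fit(X_raw, y)  # the estimator needs numeric features, not strings
except ValueError as err:
    raised = True
    print("ValueError:", err)
```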

I followed the steps (and code) from this link: kaggle.com/ruzarx/oversampling-smote-and-adasyn.

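However, the sampling part there is wrong: resampling should be applied only to the training set, i.e. after the split. The principle should be: (1) split into training and test sets; (2) resample the training set so that the model is trained on balanced data; (3) apply the model to the test set and evaluate it there. I'm happy to provide more information, data and/or code, but I think I have included all the most relevant steps.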
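The three-step principle above can be sketched end to end as follows, with the encoding placed between resampling and training. This is a minimal sketch on hypothetical toy data; it uses a plain `CountVectorizer()` in place of the question's `analyzer=fun` and text_prepare preprocessing so that it runs without NLTK data.

```python
import pandas as pd
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.utils import resample

# Hypothetical toy data with the question's column names (4 spam, 8 not spam)
df = pd.DataFrame({
    "Text": ["win cheap pills", "cheap pills now", "win a prize now", "free prize inside",
             "meeting at noon", "lunch tomorrow", "see you at noon", "project update",
             "notes from meeting", "call me tomorrow", "budget review", "team lunch"],
    "Label": [1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0],
})

# (1) Split first, so the test set is never touched by resampling or fitting
X_train, X_test, y_train, y_test = train_test_split(
    df["Text"], df["Label"], test_size=0.25, random_state=40, stratify=df["Label"]
)

# (2) Undersample the majority class in the training set only
train = pd.DataFrame({"Text": X_train, "Label": y_train})
spam = train[train.Label == 1]
not_spam = train[train.Label == 0]
not_spam_down = resample(not_spam, replace=False, n_samples=len(spam), random_state=40)
train_bal = pd.concat([spam, not_spam_down])

# Encoding: fit the vectorizer on the (resampled) training text only...
vectorizer = CountVectorizer()
X_train_vec = vectorizer.fit_transform(train_bal["Text"])
# ...and reuse the same vocabulary for the untouched test text
X_test_vec = vectorizer.transform(X_test)

# (3) Train on numeric features, evaluate on the test set
clf = MultinomialNB().fit(X_train_vec, train_bal["Label"])
y_pred = clf.predict(X_test_vec)
print(f1_score(y_test, y_pred, average="weighted"))
```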

Thank you very much.

Comment by LdM:

```python
def fun(text):
    remove_punc = [c for c in text if c not in string.punctuation]
    remove_punc = ''.join(remove_punc)
    cleaned = [w for w in remove_punc.split() if w.lower() not in stopwords.words('english')]
    return cleaned
```

Comment by Chandan: On count_vectorizer.fit(df_temp['Text']) you are passing text data to the algorithm, but it can only handle integer or float data. You should apply some kind of encoding before passing it to the fit function. You could try LabelEncoder or OneHotEncoder.
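CountVectorizer itself accepts raw strings (turning them into a numeric count matrix is its purpose), and a callable passed as `analyzer` receives each raw document and returns its tokens. One possible refinement of fun is sketched below: it builds the stopword set once instead of calling `stopwords.words('english')` for every word, and strips punctuation with `str.translate`. Assumption: scikit-learn's built-in `ENGLISH_STOP_WORDS` stands in for NLTK's list, so the set of removed words differs somewhat.

```python
import string

from sklearn.feature_extraction.text import ENGLISH_STOP_WORDS, CountVectorizer

# Built once; sklearn's list is used here as a stand-in for NLTK's English stopwords
STOPWORDS = set(ENGLISH_STOP_WORDS)
PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def fun(text):
    no_punc = text.translate(PUNCT_TABLE)  # strip punctuation characters
    # case-insensitive stopword check; surviving tokens keep their original case
    return [w for w in no_punc.split() if w.lower() not in STOPWORDS]


vectorizer = CountVectorizer(analyzer=fun)
X = vectorizer.fit_transform(["This is just an example!", "Where should I apply the encoding?"])
print(sorted(vectorizer.vocabulary_))
```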