It seems the StreamingContext class cannot be found when running the following code.

    import org.apache.spark.streaming.{Seconds, StreamingContext}
    import org.apache.spark.{SparkConf, SparkContext}

    object Exemple {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setMaster("local[*]").setAppName("Exemple")
        val sc = new SparkContext(conf)
        val ssc = new StreamingContext(sc, Seconds(2)) // this line throws error
      }
    }

Here is the error:

    Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/spark/streaming/StreamingContext
        at Exemple$.main(Exemple.scala:16)
        at Exemple.main(Exemple.scala)
    Caused by: java.lang.ClassNotFoundException: org.apache.spark.streaming.StreamingContext
        at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:338)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        ... 2 more

    Process finished with exit code 1

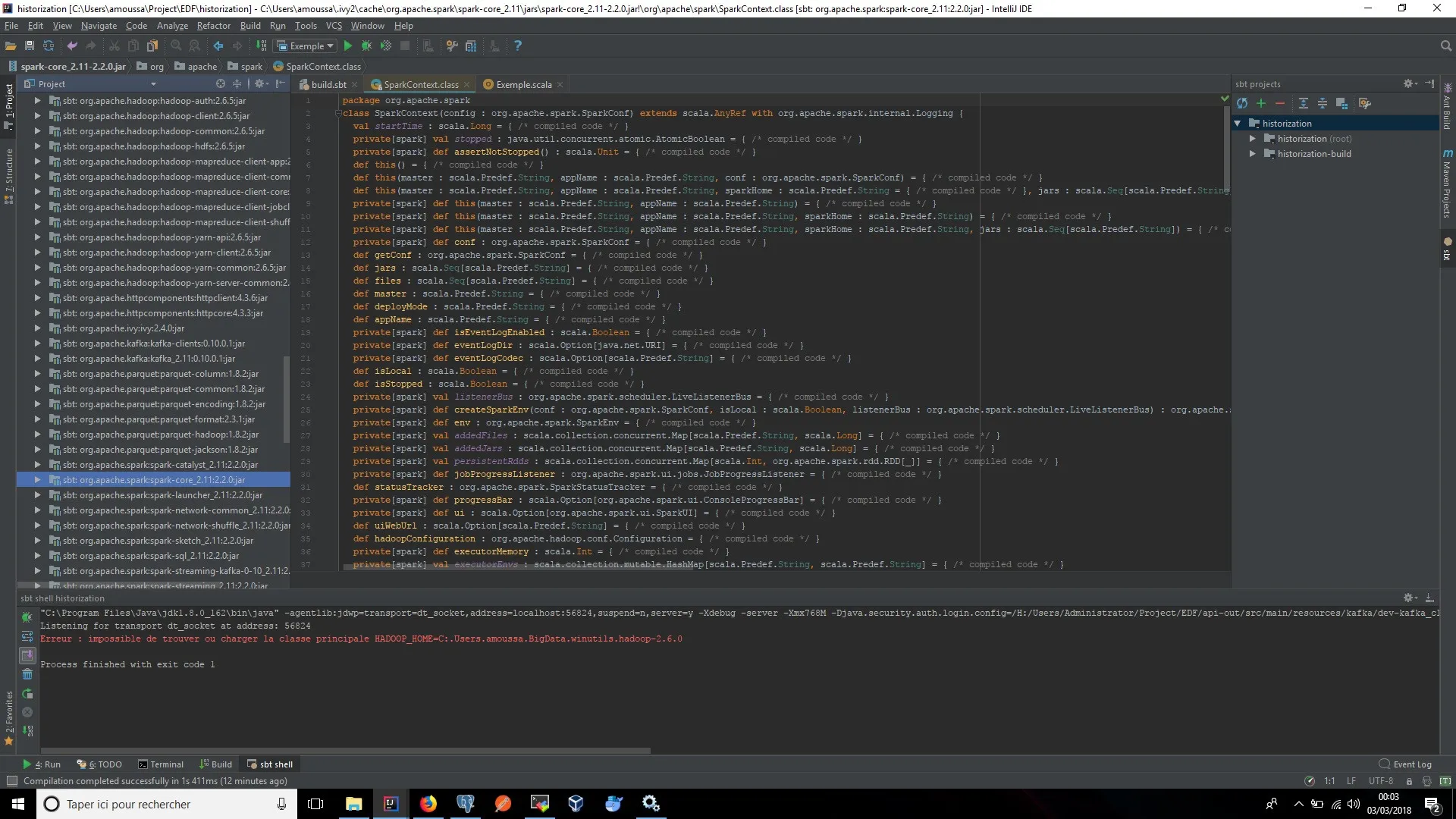

I use the following build.sbt file:

    name := "exemple"

    version := "1.0.0"

    scalaVersion := "2.11.11"

    // https://mvnrepository.com/artifact/org.apache.spark/spark-sql
    libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.2.0"

    // https://mvnrepository.com/artifact/org.apache.spark/spark-streaming
    libraryDependencies += "org.apache.spark" %% "spark-streaming" % "2.2.0" % "provided"

    // https://mvnrepository.com/artifact/org.apache.spark/spark-streaming-kafka-0-10
    libraryDependencies += "org.apache.spark" %% "spark-streaming-kafka-0-10" % "2.2.0"

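I run the Exemple class with IntelliJ's Run button and get the error above. In the sbt shell it works fine. I can find the Spark dependencies in the module dependencies, the code compiles in IntelliJ, and I can see the Spark dependencies under External Libraries (in the Project panel on the left). Do you have any idea what is wrong? It does not seem like it should be complicated.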
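
One thing that may be worth checking, offered as a guess rather than a confirmed diagnosis: in the build.sbt above, spark-streaming is the only Spark dependency marked `"provided"`. Dependencies in that scope are on the compile classpath but are normally left off the runtime classpath, which would match code that compiles yet fails with NoClassDefFoundError when launched. The sketch below is a possible variant of the dependency line; the `sparkProvided` system property and the `sparkScope` value are hypothetical names introduced only for illustration.

```scala
// build.sbt fragment (sketch, not a confirmed fix).
// "sparkProvided" is a hypothetical JVM system property: start sbt with
// -DsparkProvided=true when packaging for a cluster that already ships Spark.
// Without it, spark-streaming is resolved in the default "compile" scope,
// so it is also present on the runtime classpath for local runs.
val sparkScope =
  if (sys.props.getOrElse("sparkProvided", "false") == "true") "provided"
  else "compile"

libraryDependencies += "org.apache.spark" %% "spark-streaming" % "2.2.0" % sparkScope
```

If keeping `"provided"` is required, the alternative is to make the IDE run configuration include provided-scope dependencies on its classpath, assuming the IntelliJ version in use offers that option.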