我是一个keras新手。我成功构建了一个有两个输出的网络:

q_dot_P : <tf.Tensor 'concatenate_1/concat:0' shape=(?, 7) dtype=float32>

q_dot_N : <tf.Tensor 'concatenate_2/concat:0' shape=(?, 10) dtype=float32>

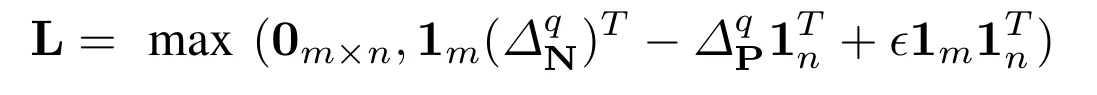

我希望计算上述表达式,其中q_dot_P是\delta^{q}_P,q_dot_N是\delta^{q}_P。

这是我的尝试:

nN = 10

nP = 7

__a = keras.layers.RepeatVector(nN)( q_dot_P ) #OK, same as 1 . q_dot_P

__b = keras.layers.RepeatVector(nP)( q_dot_N ) #OK, same as 1 . q_dot_N

minu = keras.layers.Subtract()( [keras.layers.Permute( (2,1) )( __b ), __a ] )

minu = keras.layers.Lambda( lambda x: x + 0.1)( minu )

minu = keras.layers.Maximum()( [ minu, K.zeros(nN, nP) ] ) #this fails

keras.layers.Maximum() 失败了。

Traceback (most recent call last):

File "noveou_train_netvlad.py", line 226, in <module>

minu = keras.layers.Maximum()( [ minu, K.zeros(nN, nP) ] )

File "/usr/local/lib/python2.7/dist-packages/keras/engine/base_layer.py", line 457, in __call__

output = self.call(inputs, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/keras/layers/merge.py", line 115, in call

return self._merge_function(reshaped_inputs)

File "/usr/local/lib/python2.7/dist-packages/keras/layers/merge.py", line 301, in _merge_function

output = K.maximum(output, inputs[i])

File "/usr/local/lib/python2.7/dist-packages/keras/backend/tensorflow_backend.py", line 1672, in maximum

return tf.maximum(x, y)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/ops/gen_math_ops.py", line 4707, in maximum

"Maximum", x=x, y=y, name=name)

File "/usr/local/lib/python2.7/dist-packages/tensorflow/python/framework/op_def_library.py", line 546, in _apply_op_helper

inferred_from[input_arg.type_attr]))

TypeError: Input 'y' of 'Maximum' Op has type string that does not match type float32 of argument 'x'.

这个目标最简单的实现方式是什么?

在遵循@rvinas的建议后,

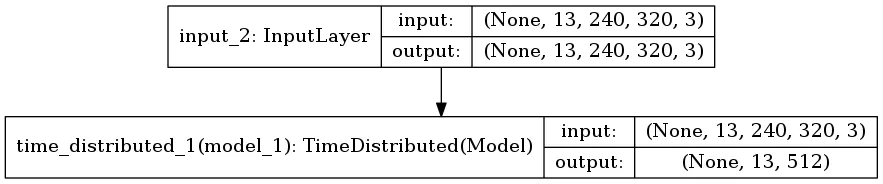

我在Keras中使用了一个分布式时间模型。请参见:Keras TimeDistributed layer without LSTM。

def custom_loss(y_true, y_pred):

nP = 2

nN = 2

# y_pred.shape = shape=(?, 5, 512)

q = y_pred[:,0:1,:] # shape=(?, 1, 512)

P = y_pred[:,1:1+nP,:] # shape=(?, 2, 512)

N = y_pred[:,1+nP:,:] # shape=(?, 2, 512)

q_dot_P = keras.layers.dot( [q,P], axes=-1 ) # shape=(?, 1, 2)

q_dot_N = keras.layers.dot( [q,N], axes=-1 ) # shape=(?, 1, 2)

epsilon = 0.1 # Your epsilon here

zeros = K.zeros((nP, nN), dtype='float32')

ones_m = K.ones(nP, dtype='float32')

ones_n = K.ones(nN, dtype='float32')

code.interact( local=locals() , banner='custom_loss')

aux = ones_m[None, :, None] * q_dot_N[:, None, :] \

- q_dot_P[:, :, None] * ones_n[None, None, :] \

+ epsilon * ones_m[:, None] * ones_n[None, :]

return K.maximum(zeros, aux)

以下是主要内容:

# In __main__

#---------------------------------------------------------------------------

# Setting Up core computation

#---------------------------------------------------------------------------

input_img = Input( shape=(image_nrows, image_ncols, image_nchnl ) )

cnn = make_vgg( input_img )

out = NetVLADLayer(num_clusters = 16)( cnn )

model = Model( inputs=input_img, outputs=out )

#--------------------------------------------------------------------------

# TimeDistributed

#--------------------------------------------------------------------------

t_input = Input( shape=(1+nP+nN, image_nrows, image_ncols, image_nchnl ) )

t_out = TimeDistributed( model )( t_input )

t_model = Model( inputs=t_input, outputs=t_out )

t_model.compile( loss=custom_loss, optimizer='sgd' )