我正在使用

我必须在我的页面上显示与订单相关的信息。通过左连接将三个表合并在一起。 表格:

以下是查询语句:

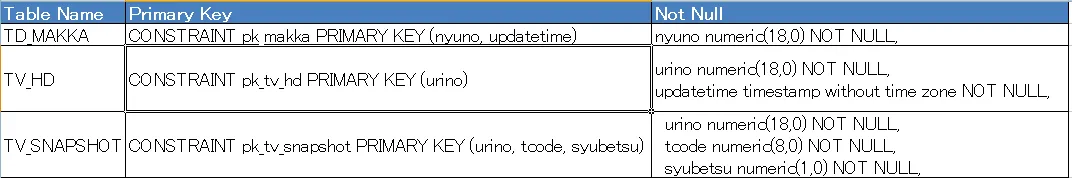

Postgresql-9.2版本,Windows 7 64位,RAM 6GB。这是一个Java企业项目。我必须在我的页面上显示与订单相关的信息。通过左连接将三个表合并在一起。 表格:

- TV_HD(389772行)

- TV_SNAPSHOT(1564756行)

- TD_MAKKA(419298行)

487252。它还会逐日增加。

表关系:

- TV_HD与TV_SNAPSHOT之间存在“一对多”的关系

- TV_HD与TD_MAKKA之间存在“一对多”的关系

为更好地理解,我现在提供了一个带有SQL查询的图片视图

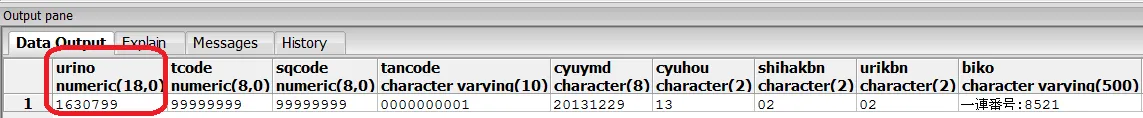

SELECT * FROM tv_hd where urino = 1630799

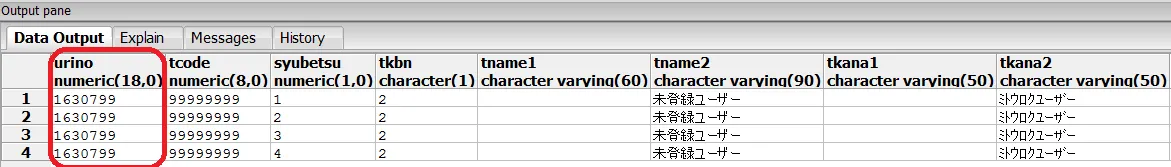

SELECT * FROM tv_snapshot where urino = 1630799

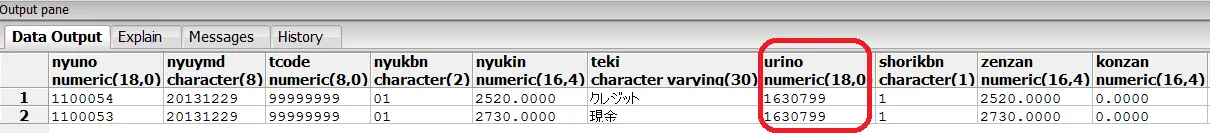

SELECT * FROM td_makka where urino = 1630799

此查询大约需要运行90秒。 我如何改善查询性能?

此查询大约需要运行90秒。 我如何改善查询性能?

以下是查询语句:

SELECT count(*)

FROM (SELECT HD.URINO

FROM

TV_HD HD

LEFT JOIN TV_SNAPSHOT T ON (HD.URINO = T.URINO AND HD.TCODE = T.TCODE AND T.DELFLG = 0 AND T.SYUBETSU = 1)

LEFT JOIN TV_SNAPSHOT T_SQ

ON (HD.URINO = T_SQ.URINO AND HD.SQCODE = T_SQ.TCODE AND T_SQ.DELFLG = 0 AND T_SQ.SYUBETSU = 3)

LEFT JOIN (SELECT N.URINO

FROM

TD_MAKKA N

WHERE

N.UPDATETIME IN (

SELECT MIN(NMIN.UPDATETIME)

FROM

TD_MAKKA NMIN

WHERE

N.URINO = NMIN.URINO

AND

NMIN.TORIKESHIFLG <> -1

)

) NYUMIN

ON (HD.URINO = NYUMIN.URINO)

LEFT JOIN

(

SELECT

NSUM.URINO,

SUM(COALESCE(NSUM.NYUKIN, 0)) NYUKIN,

SUM(COALESCE(NSUM.NYUKIN, 0)) + SUM(COALESCE(NSUM.TESU, 0)) + SUM(COALESCE(NSUM.SOTA, 0)) SUMNYUKIN

FROM

TD_MAKKA NSUM

GROUP BY

URINO

) NYUSUM

ON (HD.URINO = NYUSUM.URINO)

LEFT JOIN

(

SELECT N.URINO

FROM

TD_MAKKA N

WHERE

UPDATETIME = (

SELECT MAX(UPDATETIME)

FROM

TD_MAKKA NMAX

WHERE

N.URINO = NMAX.URINO

AND

NMAX.TORIKESHIFLG <> -1

)

) NYUMAX

ON (HD.URINO = NYUMAX.URINO)

WHERE ((HD.URIBRUI <> '1') OR (HD.URIBRUI = '1' AND T_SQ.NYUKOBEFLG = '-1'))

ORDER BY

HD.URINO DESC

) COUNT_

这是 EXPLAIN ANALYZE 的结果。

Aggregate (cost=7246861.21..7246861.22 rows=1 width=0) (actual time=69549.159..69549.159 rows=1 loops=1)

-> Merge Left Join (cost=7240188.92..7242117.36 rows=379508 width=6) (actual time=68602.689..69510.563 rows=487252 loops=1)

Merge Cond: (hd.urino = n.urino)

-> Sort (cost=3727299.33..3728248.10 rows=379508 width=6) (actual time=62160.072..62557.132 rows=420036 loops=1)

Sort Key: hd.urino

Sort Method: external merge Disk: 6984kB

-> Hash Right Join (cost=169264.26..3686940.26 rows=379508 width=6) (actual time=54796.930..60172.248 rows=420036 loops=1)

Hash Cond: (n.urino = hd.urino)

-> Seq Scan on td_makka n (cost=0.00..3511201.36 rows=209673 width=6) (actual time=24.326..4640.020 rows=419143 loops=1)

Filter: (SubPlan 1)

Rows Removed by Filter: 155

SubPlan 1

-> Aggregate (cost=8.33..8.34 rows=1 width=23) (actual time=0.009..0.009 rows=1 loops=419298)

-> Index Scan using idx_td_makka on td_makka nmin (cost=0.00..8.33 rows=1 width=23) (actual time=0.006..0.007 rows=1 loops=419298)

Index Cond: (n.urino = urino)

Filter: (torikeshiflg <> (-1)::numeric)

Rows Removed by Filter: 0

-> Hash (cost=163037.41..163037.41 rows=379508 width=6) (actual time=54771.078..54771.078 rows=386428 loops=1)

Buckets: 4096 Batches: 16 Memory Usage: 737kB

-> Hash Right Join (cost=75799.55..163037.41 rows=379508 width=6) (actual time=51599.167..54605.901 rows=386428 loops=1)

Hash Cond: ((t_sq.urino = hd.urino) AND (t_sq.tcode = hd.sqcode))

Filter: ((hd.uribrui <> '1'::bpchar) OR ((hd.uribrui = '1'::bpchar) AND (t_sq.nyukobeflg = (-1)::numeric)))

Rows Removed by Filter: 3344

-> Seq Scan on tv_snapshot t_sq (cost=0.00..73705.42 rows=385577 width=15) (actual time=0.053..2002.953 rows=389983 loops=1)

Filter: ((delflg = 0::numeric) AND (syubetsu = 3::numeric))

Rows Removed by Filter: 1174773

-> Hash (cost=68048.99..68048.99 rows=389771 width=14) (actual time=51596.055..51596.055 rows=389772 loops=1)

Buckets: 4096 Batches: 16 Memory Usage: 960kB

-> Hash Right Join (cost=21125.85..68048.99 rows=389771 width=14) (actual time=579.405..51348.270 rows=389772 loops=1)

Hash Cond: (nyusum.urino = hd.urino)

-> Subquery Scan on nyusum (cost=0.00..35839.52 rows=365638 width=6) (actual time=17.435..49996.674 rows=385537 loops=1)

-> GroupAggregate (cost=0.00..32183.14 rows=365638 width=34) (actual time=17.430..49871.702 rows=385537 loops=1)

-> Index Scan using idx_td_makka on td_makka nsum (cost=0.00..21456.76 rows=419345 width=34) (actual time=0.017..48357.702 rows=419298 loops=1)

-> Hash (cost=13969.71..13969.71 rows=389771 width=20) (actual time=491.549..491.549 rows=389772 loops=1)

Buckets: 4096 Batches: 32 Memory Usage: 567kB

-> Seq Scan on tv_hd hd (cost=0.00..13969.71 rows=389771 width=20) (actual time=0.052..242.415 rows=389772 loops=1)

-> Sort (cost=3512889.60..3512894.84 rows=2097 width=6) (actual time=6442.600..6541.728 rows=486359 loops=1)

Sort Key: n.urino

Sort Method: external sort Disk: 8600kB

-> Seq Scan on td_makka n (cost=0.00..3512773.90 rows=2097 width=6) (actual time=0.135..4053.116 rows=419143 loops=1)

Filter: ((updatetime)::text = (SubPlan 2))

Rows Removed by Filter: 155

SubPlan 2

-> Aggregate (cost=8.33..8.34 rows=1 width=23) (actual time=0.008..0.008 rows=1 loops=419298)

-> Index Scan using idx_td_makka on td_makka nmax (cost=0.00..8.33 rows=1 width=23) (actual time=0.005..0.006 rows=1 loops=419298)

Index Cond: (n.urino = urino)

Filter: (torikeshiflg <> (-1)::numeric)

Rows Removed by Filter: 0

Total runtime: 69575.139 ms

这里是解释分析结果的详细信息:

EXPLAIN ANALYZE <long_query_here>。告诉我们分析结果,然后我们可以讨论如何进行优化。如果不清楚需要修复什么,那就很难说了。 - Makotourino列将受益于索引。 - Makoto