I am using the CIFAR10 dataset to learn how to code with Keras and PyTorch.

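The environment is Python 3.6.7, Torch 1.0.0, Keras 2.2.4, and TensorFlow 1.14.0. I use the same batch size, number of epochs, learning rate, and optimizer in both frameworks, with DenseNet121 as the model.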

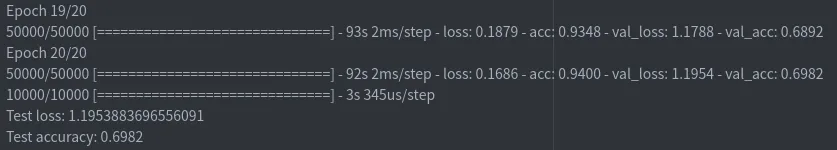

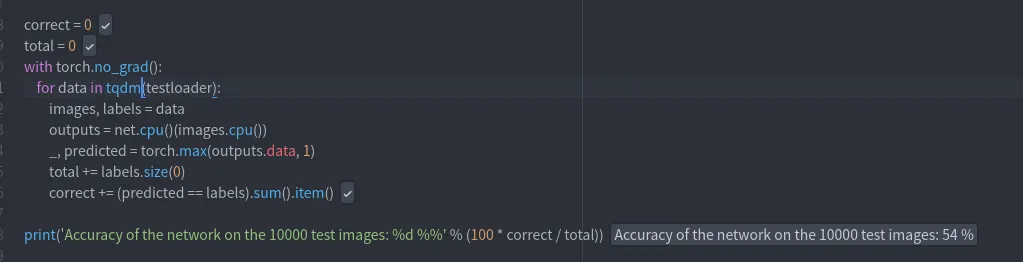

After training, Keras reaches 69% accuracy on the test data, while PyTorch reaches only 54%.

I know the results will not be identical, but why is the PyTorch result so much worse?

Here is the Keras code:

    import keras
    from keras.datasets import cifar10
    from keras.applications.densenet import DenseNet121

    batch_size = 32
    num_classes = 10
    epochs = 20

    # The data, split between train and test sets:
    (x_train, y_train), (x_test, y_test) = cifar10.load_data()
    print('x_train shape:', x_train.shape)
    print(x_train.shape[0], 'train samples')
    print(x_test.shape[0], 'test samples')

    # Convert class vectors to binary class matrices.
    y_train = keras.utils.to_categorical(y_train, num_classes)
    y_test = keras.utils.to_categorical(y_test, num_classes)

    # Model: DenseNet121 trained from scratch on 32x32 RGB inputs
    model = DenseNet121(include_top=True, weights=None, input_shape=(32, 32, 3), classes=10)

    # SGD optimizer with momentum
    opt = keras.optimizers.SGD(lr=0.001, momentum=0.9)

    model.compile(loss='categorical_crossentropy', optimizer=opt, metrics=['accuracy'])

    # Scale pixel values to [0, 1]
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')
    x_train /= 255
    x_test /= 255

    model.fit(x_train, y_train,
              batch_size=batch_size,
              epochs=epochs,
              validation_data=(x_test, y_test),
              shuffle=True)

    # Score trained model.
    scores = model.evaluate(x_test, y_test, verbose=1)
    print('Test loss:', scores[0])
    print('Test accuracy:', scores[1])

Here is the PyTorch code:

    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torchvision
    from torch import flatten
    from torch.nn import Linear, Module, Sequential, Softmax
    from torchvision import models, transforms
    from tqdm import tqdm

    classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

    # ToTensor converts images to float tensors scaled to [0, 1]
    transform = transforms.Compose([transforms.ToTensor()])

    trainset = torchvision.datasets.CIFAR10(root='./DataSet', train=True, download=True, transform=transform)
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=32, shuffle=True, num_workers=0)

    testset = torchvision.datasets.CIFAR10(root='./DataSet', train=False, download=True, transform=transform)
    testloader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False, num_workers=0)


    class Net(Module):
        def __init__(self):
            super(Net, self).__init__()
            # DenseNet121 without its final classifier layer
            self.funFeatExtra = Sequential(*list(models.densenet121().children())[:-1])
            self.funFlatten = flatten
            self.funOutputLayer = Linear(1024, 10)
            self.funSoftmax = Softmax(dim=1)

        def forward(self, x):
            x = self.funFeatExtra(x)
            x = self.funFlatten(x, 1)
            x = self.funOutputLayer(x)
            x = self.funSoftmax(x)
            return x


    net = Net()

    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

    for epoch in range(20):  # loop over the dataset multiple times
        running_loss = 0.0
        for i, data in tqdm(enumerate(trainloader, 0)):
            # get the inputs; data is a list of [inputs, labels]
            inputs, labels = data

            # zero the parameter gradients
            optimizer.zero_grad()

            # forward + backward + optimize
            outputs = net.cuda()(inputs.cuda())
            loss = criterion(outputs, labels.cuda())
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()
            # if i % 2000 == 1999:    # print every 2000 mini-batches
            #     print('[%d, %5d] loss: %.3f' % (epoch + 1, i + 1, running_loss / 2000))
            #     running_loss = 0.0

    print('Finished Training')

    # Measure accuracy on the whole test set.
    correct = 0
    total = 0
    with torch.no_grad():
        for data in tqdm(testloader):
            images, labels = data
            outputs = net.cpu()(images.cpu())
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

    print('Accuracy of the network on the 10000 test images: %d %%' % (100 * correct / total))
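
One thing that may contribute, offered as a guess rather than a confirmed diagnosis: `nn.CrossEntropyLoss` already applies log-softmax to its input, so with the `Softmax` layer at the end of `Net` the softmax is effectively applied twice. The sketch below illustrates the effect in plain Python rather than PyTorch. The helper names `softmax` and `cross_entropy` and the logit values are made up for illustration; they are not taken from the code above.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(scores, target):
    """Per-sample cross-entropy on raw scores: -log(softmax(scores)[target])."""
    return -math.log(softmax(scores)[target])


# Hypothetical raw scores for one 10-class sample; class 0 is the correct,
# confidently predicted class.
logits = [10.0] + [0.0] * 9
target = 0

loss_on_logits = cross_entropy(logits, target)          # loss fed raw scores
loss_on_probs = cross_entropy(softmax(logits), target)  # loss fed Softmax output

# Probabilities lie in [0, 1], so the second softmax can favor the target
# class by at most a factor of e; the loss cannot drop below log(1 + 9 / e).
floor = math.log(1 + 9 / math.e)

print(f"loss on logits:              {loss_on_logits:.4f}")
print(f"loss on probabilities:       {loss_on_probs:.4f}")
print(f"lower bound with 10 classes: {floor:.4f}")
```

Even for a confident, correct prediction, the loss computed on softmax outputs stays above roughly 1.46, which would keep gradients small and slow training. If this is the cause, returning the output of `funOutputLayer` directly from `forward` would hand the loss raw logits. Separately, the test loop never calls `net.eval()`, so the BatchNorm layers in DenseNet121 would still normalize with per-batch statistics (here with a test batch size of 4), which may also lower the measured accuracy.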