My app first records a video and then, after adding some effects, exports the result with AVAssetExportSession.

First, while recording, the video gravity was wrong. I fixed that by setting the videoGravity property of AVCaptureVideoPreviewLayer to AVLayerVideoGravityResizeAspectFill.

Second, playing back the recorded video had the same problem, which I fixed by setting the videoGravity property of AVPlayerLayer to AVLayerVideoGravityResizeAspectFill.
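The two gravity values differ only in which scale factor they pick. Here is a minimal pure-Swift sketch of that geometry, assuming nothing about AVFoundation internals. ResizeAspect scales by the smaller of the width and height ratios, so the whole frame stays visible with bars. ResizeAspectFill scales by the larger ratio, so the layer is filled and the overflowing edges are cropped. The Size, Rect and Gravity types are hypothetical stand-ins rather than AVFoundation or CoreGraphics types. The 1080x1920 video and 768x1024 view are assumed example sizes.

```swift
// Hypothetical stand-ins so the sketch runs without AVFoundation or CoreGraphics.
struct Size { var width: Double; var height: Double }
struct Rect { var x: Double; var y: Double; var width: Double; var height: Double }
enum Gravity { case resizeAspect, resizeAspectFill }

/// Returns the rectangle, in container coordinates, that the content occupies.
/// Aspect fit uses the smaller ratio (letterboxing, nothing cropped).
/// Aspect fill uses the larger ratio (container filled, edges cropped).
func placement(of content: Size, in container: Size, gravity: Gravity) -> Rect {
    let sx = container.width / content.width
    let sy = container.height / content.height
    let scale = gravity == .resizeAspect ? min(sx, sy) : max(sx, sy)
    let w = content.width * scale
    let h = content.height * scale
    // Center the scaled content; a negative offset means that edge is cropped.
    return Rect(x: (container.width - w) / 2, y: (container.height - h) / 2, width: w, height: h)
}

// Assumed example: a 1080x1920 portrait video shown in a 768x1024 view.
let video = Size(width: 1080, height: 1920)
let view = Size(width: 768, height: 1024)
let fit = placement(of: video, in: view, gravity: .resizeAspect)
let fill = placement(of: video, in: view, gravity: .resizeAspectFill)
print(fit)
print(fill)
```

With these numbers, aspect fit leaves a 96-point bar on each side. Aspect fill makes the frame about 1365.33 points tall, which crops about 170.67 points at both the top and the bottom.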

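Now, however, when I export the video with AVAssetExportSession after adding some effects, the gravity problem comes back. Even changing the contentsGravity property of the CALayer has no effect on the output. The problem is especially noticeable on iPad.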
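A CALayer's contentsGravity only controls how that layer draws its own contents. The working assumption here, which has not been verified, is that frames rendered through AVVideoCompositionCoreAnimationTool are placed by the layer instruction's transform and the composition's renderSize. If so, an aspect-fill result has to be built into that transform by hand.

The sketch below models such a transform in pure Swift. It applies the track's preferredTransform first, then one uniform scale (the larger ratio, as in aspect fill), then a translation that centers the frame in the render area. Affine is a hypothetical stand-in that follows CGAffineTransform's (a, b, c, d, tx, ty) layout and the apply-self-then-other order of concatenating(_:). The 90° preferredTransform for a 1920x1080 track is typical of a portrait recording, but it is an assumed value here.

```swift
// Hypothetical stand-in for CGAffineTransform, same (a, b, c, d, tx, ty) layout:
// x' = a*x + c*y + tx,  y' = b*x + d*y + ty
struct Affine {
    var a, b, c, d, tx, ty: Double

    static func scale(_ s: Double) -> Affine {
        return Affine(a: s, b: 0, c: 0, d: s, tx: 0, ty: 0)
    }

    static func translation(_ x: Double, _ y: Double) -> Affine {
        return Affine(a: 1, b: 0, c: 0, d: 1, tx: x, ty: y)
    }

    func apply(_ p: (x: Double, y: Double)) -> (x: Double, y: Double) {
        return (x: a * p.x + c * p.y + tx, y: b * p.x + d * p.y + ty)
    }

    /// Applies `self` first, then `other` (the order CGAffineTransform.concatenating uses).
    func then(_ other: Affine) -> Affine {
        return Affine(a: a * other.a + b * other.c,
                      b: a * other.b + b * other.d,
                      c: c * other.a + d * other.c,
                      d: c * other.b + d * other.d,
                      tx: tx * other.a + ty * other.c + other.tx,
                      ty: tx * other.b + ty * other.d + other.ty)
    }
}

/// preferredTransform, then a uniform aspect-fill scale, then a centering translation.
func aspectFillTransform(naturalSize: (w: Double, h: Double),
                         preferred: Affine,
                         renderSize: (w: Double, h: Double)) -> Affine {
    // Bounding box of the frame after the preferred (rotation) transform.
    let corners = [(x: 0.0, y: 0.0), (x: naturalSize.w, y: 0.0),
                   (x: 0.0, y: naturalSize.h), (x: naturalSize.w, y: naturalSize.h)]
        .map { preferred.apply($0) }
    let minX = corners.map { $0.x }.min()!, maxX = corners.map { $0.x }.max()!
    let minY = corners.map { $0.y }.min()!, maxY = corners.map { $0.y }.max()!
    let orientedW = maxX - minX, orientedH = maxY - minY

    // Aspect fill: take the larger ratio so both render dimensions are covered.
    let s = max(renderSize.w / orientedW, renderSize.h / orientedH)
    let offsetX = (renderSize.w - orientedW * s) / 2
    let offsetY = (renderSize.h - orientedH * s) / 2

    return preferred
        .then(.translation(-minX, -minY))   // move the rotated frame to the origin
        .then(.scale(s))
        .then(.translation(offsetX, offsetY))
}

// Assumed values: a 1920x1080 track rotated 90° for portrait, rendered at 768x1024.
let portrait = Affine(a: 0, b: 1, c: -1, d: 0, tx: 1080, ty: 0)
let fillTransform = aspectFillTransform(naturalSize: (w: 1920, h: 1080),
                                        preferred: portrait,
                                        renderSize: (w: 768, h: 1024))
print(fillTransform)
```

In AVFoundation code, the same order could presumably be written as videoTrack.preferredTransform.concatenating(...) using CGAffineTransform(scaleX:y:) and CGAffineTransform(translationX:y:), and then passed to the layer instruction's setTransform(_:at:). Treat that mapping as a sketch, not a tested fix.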

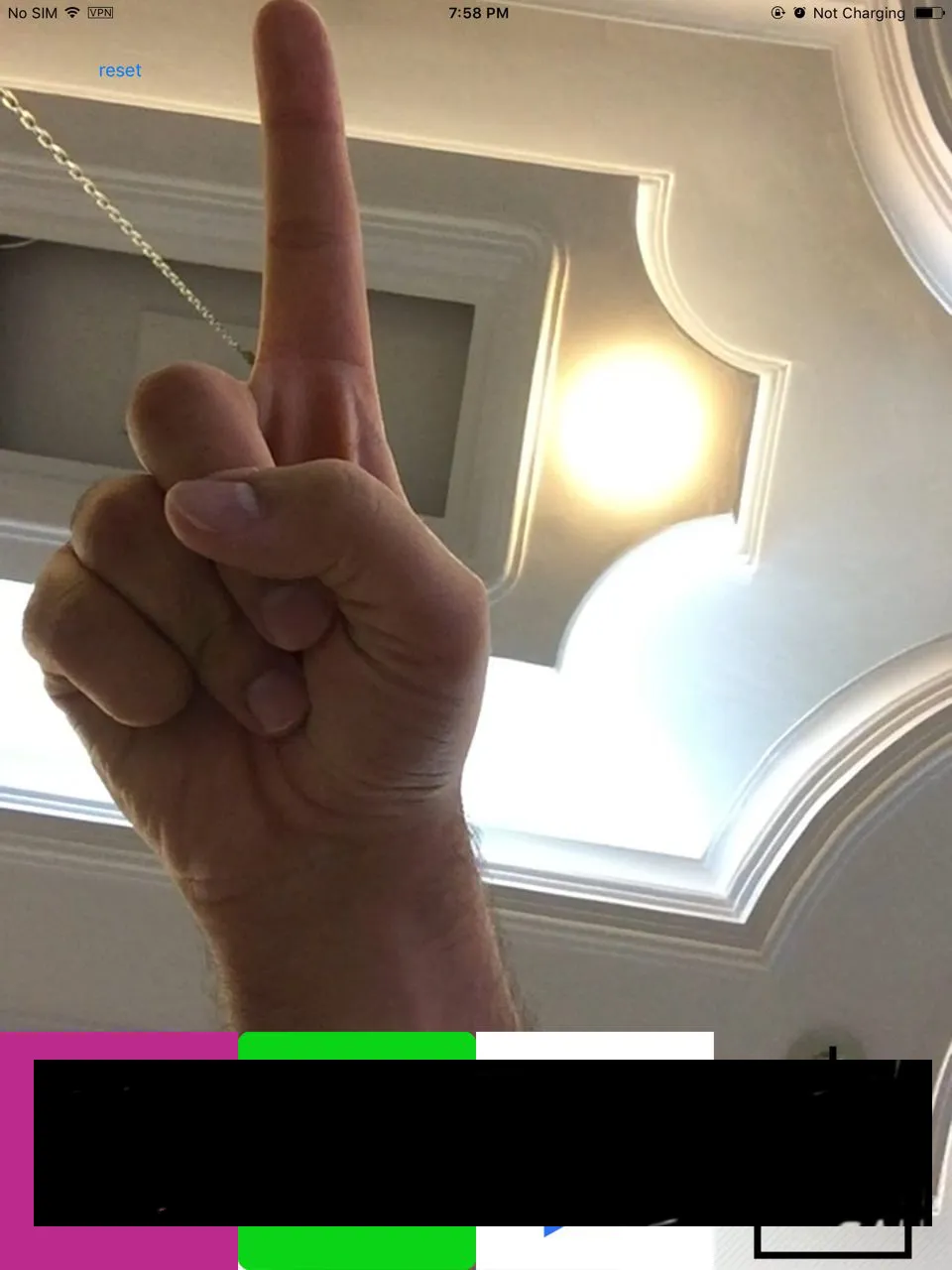

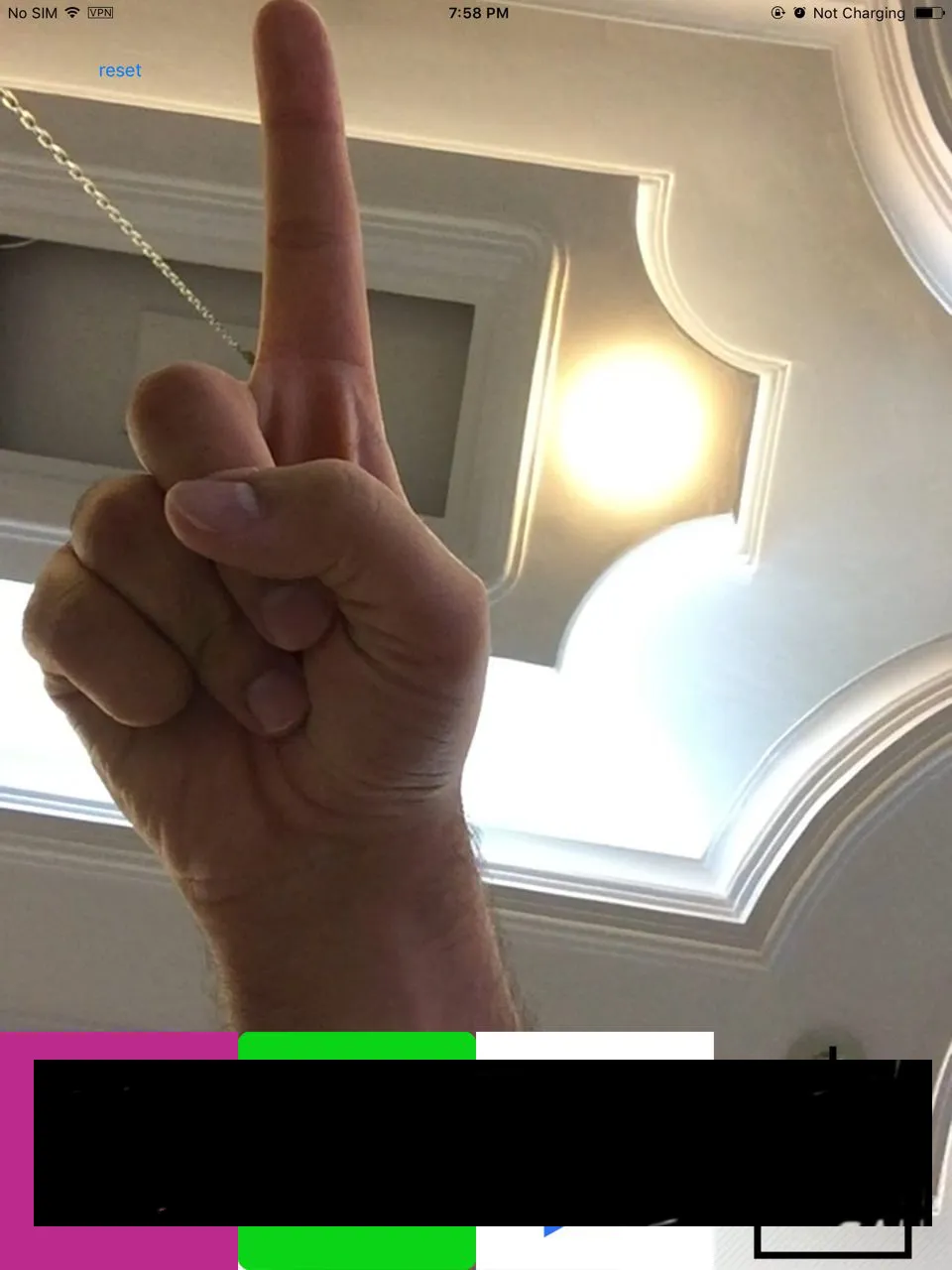

这是在添加一些效果之前想要显示视频时的图像: "As you can see, the tip of my finger is at the top of the screen (because I have fixed the issue with the gravity in the layer inside the app). However, after exporting and saving to the gallery, what I see is like this:

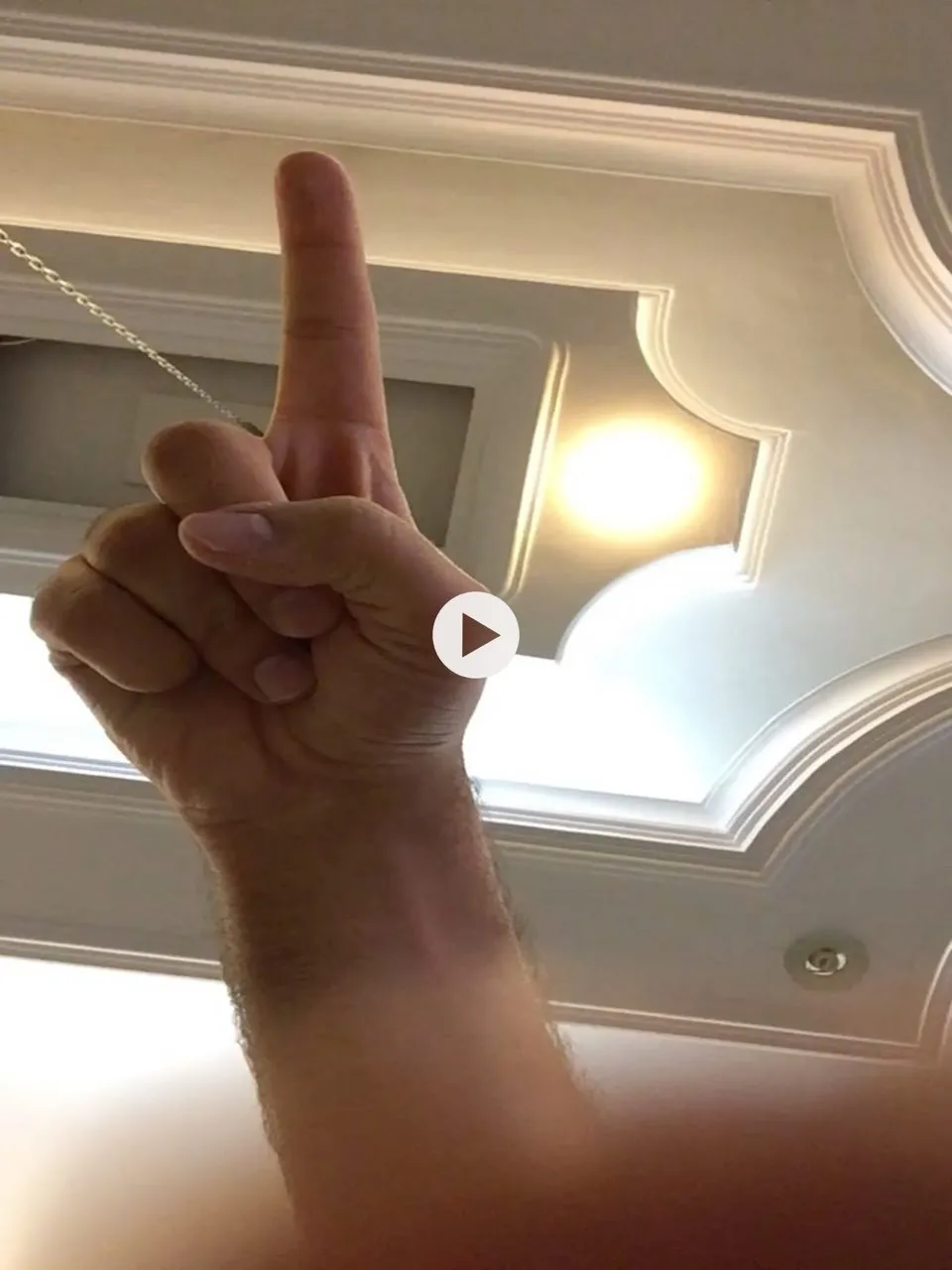

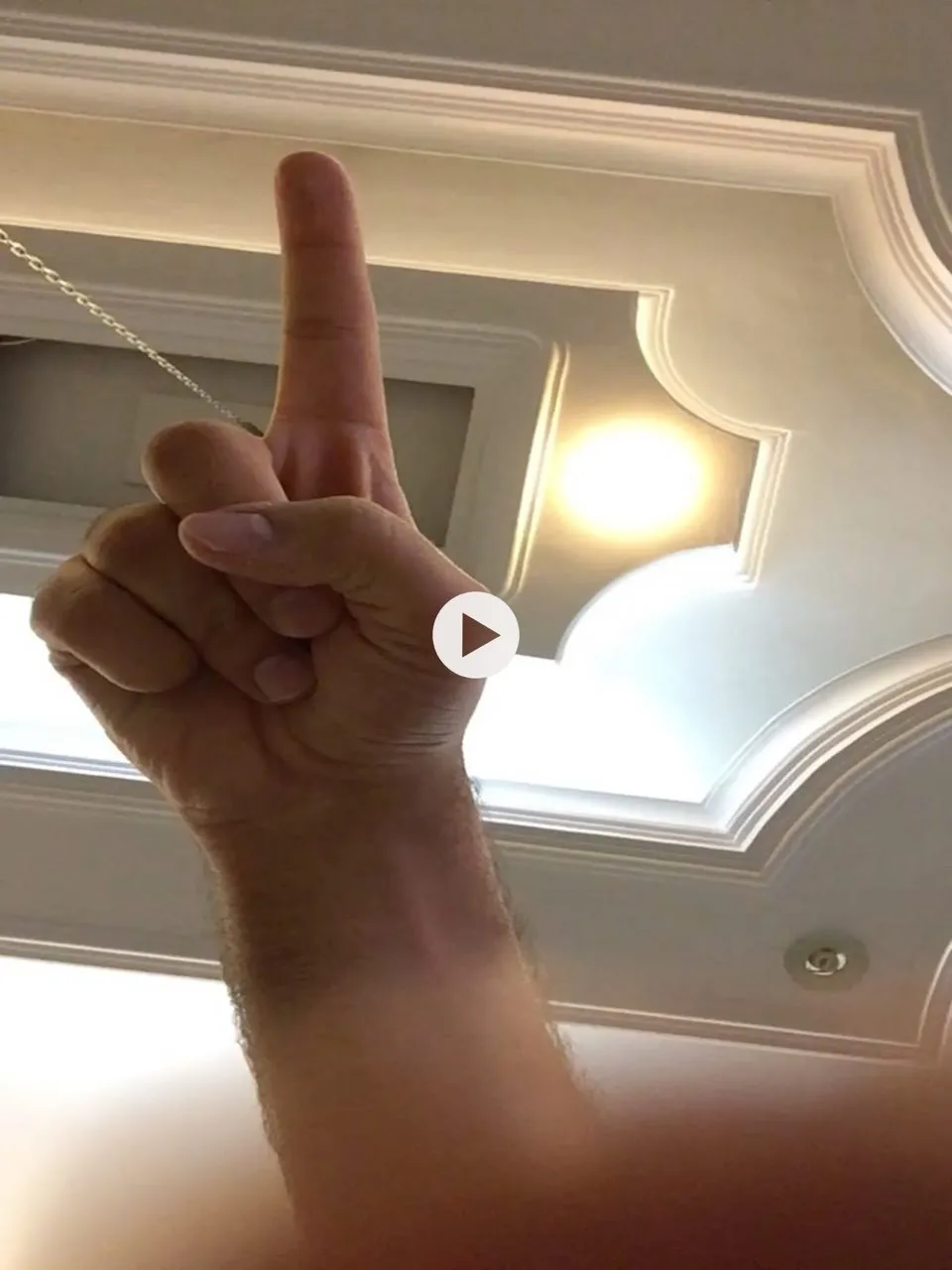

"As you can see, the tip of my finger is at the top of the screen (because I have fixed the issue with the gravity in the layer inside the app). However, after exporting and saving to the gallery, what I see is like this:  . I know that the problem is with the gravity, but I don't know how I can fix it. I don't know if any changes should be made to the video when recording or if there needs to be a change in the code below when exporting."

```swift
let composition = AVMutableComposition()
let asset = AVURLAsset(url: videoUrl, options: nil)
let tracks = asset.tracks(withMediaType: AVMediaTypeVideo)
let videoTrack: AVAssetTrack = tracks[0]
let timerange = CMTimeRangeMake(kCMTimeZero, asset.duration)
let viewSize = parentView.bounds.size
let trackSize = videoTrack.naturalSize

// Copy the recorded video track into the composition.
let compositionVideoTrack: AVMutableCompositionTrack = composition.addMutableTrack(withMediaType: AVMediaTypeVideo, preferredTrackID: CMPersistentTrackID())
do {
    try compositionVideoTrack.insertTimeRange(timerange, of: videoTrack, at: kCMTimeZero)
} catch {
    print(error)
}

// Copy the audio track(s) as well.
let compositionAudioTrack: AVMutableCompositionTrack = composition.addMutableTrack(withMediaType: AVMediaTypeAudio, preferredTrackID: CMPersistentTrackID())
for audioTrack in asset.tracks(withMediaType: AVMediaTypeAudio) {
    do {
        try compositionAudioTrack.insertTimeRange(audioTrack.timeRange, of: audioTrack, at: kCMTimeZero)
    } catch {
        print(error)
    }
}

// Layer tree used by the Core Animation tool to render the effects.
// Setting contentsGravity here has no visible effect on the exported video.
let videolayer = CALayer()
videolayer.frame.size = viewSize
videolayer.contentsGravity = kCAGravityResizeAspectFill

let parentlayer = CALayer()
parentlayer.frame.size = viewSize
parentlayer.contentsGravity = kCAGravityResizeAspectFill
parentlayer.addSublayer(videolayer)

let layercomposition = AVMutableVideoComposition()
layercomposition.frameDuration = CMTimeMake(1, 30)
layercomposition.renderSize = viewSize
layercomposition.animationTool = AVVideoCompositionCoreAnimationTool(postProcessingAsVideoLayer: videolayer, in: parentlayer)

let instruction = AVMutableVideoCompositionInstruction()
instruction.timeRange = CMTimeRangeMake(kCMTimeZero, asset.duration)

let videotrack = composition.tracks(withMediaType: AVMediaTypeVideo)[0]
let layerinstruction = AVMutableVideoCompositionLayerInstruction(assetTrack: videotrack)

// Transform that rotates and scales the recorded frame into renderSize.
let trackTransform = videoTrack.preferredTransform
let xScale = viewSize.height / trackSize.width
let yScale = viewSize.width / trackSize.height

let exportTransform: CGAffineTransform
// getVideoOrientation(transform:) is a helper defined elsewhere (not shown).
if getVideoOrientation(transform: videoTrack.preferredTransform).1 == .up {
    exportTransform = videoTrack.preferredTransform.translatedBy(x: trackTransform.ty * -1, y: 0).scaledBy(x: xScale, y: yScale)
} else {
    exportTransform = CGAffineTransform(translationX: viewSize.width, y: 0).rotated(by: .pi / 2).scaledBy(x: xScale, y: yScale)
}

layerinstruction.setTransform(exportTransform, at: kCMTimeZero)
instruction.layerInstructions = [layerinstruction]
layercomposition.instructions = [instruction]

let filePath = FileHelper.getVideoTimeStampName()
let exportedUrl = URL(fileURLWithPath: filePath)

guard let assetExport = AVAssetExportSession(asset: composition, presetName: AVAssetExportPresetHighestQuality) else {
    delegate?.exportFinished(status: .failed, outputUrl: exportedUrl)
    return
}
assetExport.videoComposition = layercomposition
assetExport.outputFileType = AVFileTypeMPEG4
assetExport.outputURL = exportedUrl

assetExport.exportAsynchronously(completionHandler: {
    switch assetExport.status {
    case .completed:
        print("video exported successfully")
        self.delegate?.exportFinished(status: .completed, outputUrl: exportedUrl)
    case .failed:
        self.delegate?.exportFinished(status: .failed, outputUrl: exportedUrl)
        print("exporting video failed: \(String(describing: assetExport.error))")
    default:
        print("the video export status is \(assetExport.status)")
        self.delegate?.exportFinished(status: assetExport.status, outputUrl: exportedUrl)
    }
})
```
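In the posted code, xScale and yScale are derived independently. scaledBy(x:y:) also applies them in the track's own coordinate space, before the rotation. Assuming the rotated (else) branch is taken, the track's width is mapped exactly onto the view's height and its height onto the view's width. Whenever the two aspect ratios differ, that is a non-uniform stretch, closer to AVLayerVideoGravityResize than to ResizeAspectFill.

The short pure-Swift check below only illustrates the arithmetic. The sizes are assumed examples (a 1920x1080 track and a 768x1024-point portrait iPad view), not values reported in the question.

```swift
// Assumed example sizes, not values reported in the question.
let trackSize = (width: 1920.0, height: 1080.0)  // stands in for videoTrack.naturalSize (landscape buffer)
let viewSize = (width: 768.0, height: 1024.0)    // stands in for parentView.bounds.size on a portrait iPad

// The same two formulas as the posted export code.
let xScale = viewSize.height / trackSize.width   // track width becomes the height after rotation
let yScale = viewSize.width / trackSize.height   // track height becomes the width after rotation

// Different factors per axis mean the frame is stretched to the view's aspect ratio.
print("xScale = \(xScale), yScale = \(yScale), stretched: \(xScale != yScale)")

// Aspect fill would use one factor for both axes: the larger one, so the view is fully covered.
let uniformScale = max(xScale, yScale)
print("uniform aspect-fill scale = \(uniformScale)")
```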

I'd be very grateful if anyone could help.