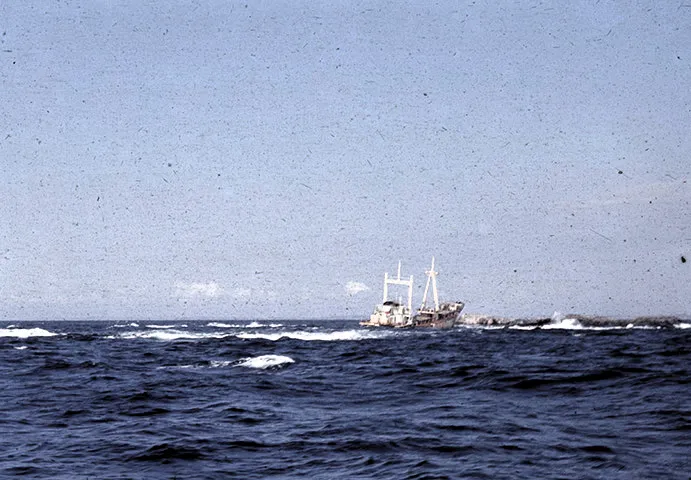

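I am developing a routine for automatically enhancing scanned 35 mm slides. I'm looking for a good algorithm for increasing contrast and removing color casts. The algorithm has to be completely automatic, since there will be thousands of images to process. Here are a couple of sample images straight from the scanner, only cropped and downsized for the web: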

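I'm using the AForge.NET library and have tried both the HistogramEqualization and ContrastStretch filters. HistogramEqualization is good at maximizing local contrast, but the overall result is not pleasing. ContrastStretch is much better, but because it stretches the histogram of each color channel individually, it sometimes produces a strong color cast: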
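To illustrate why stretching each channel on its own can shift colors, here is a minimal sketch that does not use AForge.NET at all. The class `StretchDemo`, its methods, and the channel ranges are hypothetical and chosen only for illustration; the real ContrastStretch filter may pick its ranges differently.

```csharp
using System;
using System.Linq;

static class StretchDemo
{
    // Linearly map a value from [min, max] to [0, 255], clamping the result.
    public static int Stretch(int value, int min, int max)
    {
        if (max <= min) return value; // degenerate range: leave the value alone
        double scaled = (value - min) * 255.0 / (max - min);
        return (int)Math.Round(Math.Max(0.0, Math.Min(255.0, scaled)));
    }

    // Each channel is stretched with its own [min, max] range.
    public static int[] PerChannel(int[] rgb, int[] mins, int[] maxs)
    {
        return rgb.Select((v, i) => Stretch(v, mins[i], maxs[i])).ToArray();
    }

    // All channels share one range: the overall min and the overall max.
    public static int[] Uniform(int[] rgb, int[] mins, int[] maxs)
    {
        int min = mins.Min();
        int max = maxs.Max();
        return rgb.Select(v => Stretch(v, min, max)).ToArray();
    }
}
```

With a neutral gray pixel (100, 100, 100) and assumed channel ranges of R 20..220, G 20..220, B 40..140, `PerChannel` yields (102, 102, 153), which is visibly blue, while `Uniform` yields (102, 102, 102) and stays neutral.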

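To reduce the color cast, I created my own UniformContrastStretch filter using the ImageStatistics and LevelsLinear classes. It uses the same range for all color channels, which preserves the colors at the expense of less contrast.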

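```csharp
using AForge;                  // IntRange
using AForge.Imaging;          // ImageStatistics
using AForge.Imaging.Filters;  // LevelsLinear

// Find the darkest and brightest values across all three channels.
ImageStatistics stats = new ImageStatistics(image);
int min = Math.Min(Math.Min(stats.Red.Min, stats.Green.Min), stats.Blue.Min);
int max = Math.Max(Math.Max(stats.Red.Max, stats.Green.Max), stats.Blue.Max);

// Stretch every channel with the same input range so the colors are preserved.
LevelsLinear levelsLinear = new LevelsLinear();
levelsLinear.Input = new IntRange(min, max);
Bitmap stretched = levelsLinear.Apply(image);
```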

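The image is still quite blue, though, so I created a ColorCorrection filter that first calculates the mean luminance of the image. A gamma correction value is then calculated for each color channel so that the mean value of each channel equals the mean luminance. The uniform contrast stretched image has mean values of R=70 G=64 B=93, so the mean luminance is (70 + 64 + 93) / 3 ≈ 76. The gamma values come out as R=1.09 G=1.18 B=0.80, and the resulting, very neutral, image has mean values of R=76 G=76 B=76, as expected: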
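The post does not show how the gamma values are derived, so the following is only one plausible sketch. It assumes the convention `out = 255 * (in / 255)^(1 / gamma)`, under which a gamma above 1 brightens a channel (consistent with red and green being raised and blue being lowered above), and it searches by bisection for the gamma that brings a channel's mean to the target. The class `GammaSolver`, its method names, and the 256-bin histogram input are assumptions made for illustration.

```csharp
using System;

static class GammaSolver
{
    // Mean of a channel after gamma correction, computed from a 256-bin histogram.
    // Assumed convention: out = 255 * (in / 255)^(1 / gamma).
    public static double MeanAfterGamma(int[] histogram, double gamma)
    {
        double sum = 0.0;
        long count = 0;
        for (int v = 0; v < 256; v++)
        {
            double mapped = 255.0 * Math.Pow(v / 255.0, 1.0 / gamma);
            sum += mapped * histogram[v];
            count += histogram[v];
        }
        return count == 0 ? 0.0 : sum / count;
    }

    // Bisection search: under the convention above, the corrected mean
    // does not decrease as gamma grows, so the bracket can be halved each step.
    public static double SolveGamma(int[] histogram, double targetMean)
    {
        double lo = 0.1, hi = 10.0;
        for (int i = 0; i < 50; i++)
        {
            double mid = 0.5 * (lo + hi);
            if (MeanAfterGamma(histogram, mid) < targetMean)
                lo = mid;   // still too dark: need a larger gamma
            else
                hi = mid;   // bright enough: try a smaller gamma
        }
        return 0.5 * (lo + hi);
    }
}
```

Calling `SolveGamma` once per channel with the mean luminance as `targetMean` gives one gamma per channel. Because the result depends on the whole histogram rather than on the mean alone, the values for a real image have to be computed from that image's data.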

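Now, on to the real problem... I suspect that correcting the mean color of the image to gray is a bit too drastic, and it makes some images look quite dull, like the second sample (the first image is uniformly stretched, the next is the same image after color correction):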

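One way to perform color correction manually in a photo editing program is to sample a color that is known to be neutral (white/gray/black) and adjust the rest of the image to match. But since this routine has to be completely automatic, that is not an option.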

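I guess I could add a strength setting to my ColorCorrection filter, so that a strength of 0.5 moves each channel's mean half the distance to the mean luminance. On the other hand, some images might be best left without any color correction at all.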
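The strength idea amounts to a linear blend between each channel's current mean and the mean luminance. A minimal sketch, with the hypothetical names `StrengthDemo` and `BlendTarget` chosen only for illustration:

```csharp
static class StrengthDemo
{
    // Target mean for one channel at a given correction strength.
    // strength = 0 leaves the channel unchanged; strength = 1 is full correction.
    public static double BlendTarget(double channelMean, double meanLuminance, double strength)
    {
        return channelMean + strength * (meanLuminance - channelMean);
    }
}
```

Using the figures from above (means R=70 G=64 B=93, mean luminance 76), a strength of 0.5 gives target means of R=73, G=70 and B=84.5, and the per-channel gamma would then be calculated against these targets instead of 76.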

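Any ideas for a better algorithm? Or is there some way to detect whether an image has a color cast or simply contains a lot of one color, like the second sample?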