3个回答

7

基准测试

- 我对4个算法进行了基准测试

- 在64位

.Net Framework 4.7.1上都进行了测试 - 每个测试运行1000次并取平均值

- 我缩放了每个数据集。

- 在每个测试之前,框架会执行

GC.Collect和GC.WaitForPendingFinalizers - 并进行了1次隔离测试以验证结果

我使用了4个输入数据集

输入

public static List<string> ListofSimpleString(int scale)

{

return Enumerable.Range(0, scale)

.Select(x => Enumerable.Range(0,10).Select(y => (char)_rand.Next(255)).ToString())

.ToList();

}

public static List<string> ListofSimpleString2(int scale)

{

return Enumerable.Range(0, scale)

.Select(x => Enumerable.Range(0, 10).Select(y => (char)_rand.Next(48,95)).ToString())

.ToList();

}

public static List<string> ListofSimpleString4(int scale)

{

return Enumerable.Range(0, scale)

.Select(x => _rand.Next(10).ToString())

.ToList();

}

public static List<string> ListofSimpleString5(int scale)

{

return Enumerable.Range(0, scale)

.Select(x => "duplicate value")

.ToList();

}

结果

Mode : Release (64Bit)

Test Framework : .NET Framework 4.7.1

Operating System : Microsoft Windows 10 Pro

Version : 10.0.17134

CPU Name : Intel(R) Core(TM) i7-2600 CPU @ 3.40GHz

Description : Intel64 Family 6 Model 42 Stepping 7

Cores (Threads) : 4 (8) : Architecture : x64

Clock Speed : 3401 MHz : Bus Speed : 100 MHz

L2Cache : 1 MB : L3Cache : 8 MB

Benchmarks Runs : Inputs (4) * Scales (5) * Benchmarks (4) * Runs (10) = 800

测试1

--- 10 Random ascii chars -----------------------------------------------------------------------

| Value | Average | Fastest | Cycles | Garbage | Test | Gain |

--- Scale 10,000 ----------------------------------------------------------------- Time 0.099 ---

| Dictionary | 1.298 ms | 1.040 ms | 4,180,070 | 8.000 KB | N/A | 43.71 % |

| GroupByCount | 1.315 ms | 1.128 ms | 4,473,950 | 264.242 KB | N/A | 42.98 % |

| GroupBySkipAny | 1.387 ms | 1.097 ms | 4,622,163 | 264.242 KB | N/A | 39.88 % |

| HashSet | 2.306 ms | 2.010 ms | 7,842,618 | 8.000 KB | Base | 0.00 % |

--- Scale 100,000 ---------------------------------------------------------------- Time 0.656 ---

| Dictionary | 11.599 ms | 11.090 ms | 39,514,786 | 8.000 KB | N/A | 48.72 % |

| GroupByCount | 12.469 ms | 12.172 ms | 42,491,474 | 2.008 MB | N/A | 44.88 % |

| GroupBySkipAny | 12.834 ms | 12.490 ms | 43,703,545 | 2.008 MB | N/A | 43.26 % |

| HashSet | 22.620 ms | 22.171 ms | 77,091,768 | 8.000 KB | Base | 0.00 % |

--- Scale 1,000,000 -------------------------------------------------------------- Time 6.502 ---

| Dictionary | 116.635 ms | 114.583 ms | 397,153,297 | 8.000 KB | N/A | 50.92 % |

| GroupBySkipAny | 130.604 ms | 128.622 ms | 444,500,775 | 16.008 MB | N/A | 45.04 % |

| GroupByCount | 133.642 ms | 128.416 ms | 455,399,304 | 16.008 MB | N/A | 43.76 % |

| HashSet | 237.646 ms | 231.029 ms | 809,489,660 | 8.000 KB | Base | 0.00 % |

--- Scale 10,000,000 ------------------------------------------------------------- Time 5.844 ---

| Dictionary | 1,169.275 ms | 1,163.115 ms | 3,984,243,078 | 8.000 KB | N/A | 50.76 % |

| GroupBySkipAny | 1,353.768 ms | 1,345.292 ms | 4,608,323,709 | 256.009 MB | N/A | 43.00 % |

| GroupByCount | 1,358.509 ms | 1,344.632 ms | 4,627,402,507 | 256.009 MB | N/A | 42.80 % |

| HashSet | 2,374.831 ms | 2,334.440 ms | 8,089,227,303 | 8.000 KB | Base | 0.00 % |

--- Scale 100,000,000 ------------------------------------------------------------ Time 8.679 ---

| Dictionary | 11,751.858 ms | 11,656.590 ms | 40,027,059,699 | 8.000 KB | N/A | 52.24 % |

| GroupBySkipAny | 13,585.036 ms | 13,346.376 ms | 46,230,547,515 | 2.000 GB | N/A | 44.79 % |

| GroupByCount | 13,891.448 ms | 13,664.273 ms | 47,215,273,015 | 2.000 GB | N/A | 43.54 % |

| HashSet | 24,605.782 ms | 24,440.468 ms | 83,658,042,598 | 8.000 KB | Base | 0.00 % |

-------------------------------------------------------------------------------------------------

测试 2

--- 10 Random printable ascii chars -------------------------------------------------------------

| Value | Average | Fastest | Cycles | Garbage | Test | Gain |

--- Scale 10,000 ----------------------------------------------------------------- Time 0.259 ---

| Dictionary | 1.087 ms | 1.052 ms | 3,654,669 | 8.000 KB | N/A | 50.38 % |

| GroupByCount | 1.182 ms | 1.133 ms | 4,020,552 | 264.211 KB | N/A | 46.07 % |

| GroupBySkipAny | 1.300 ms | 1.163 ms | 4,415,126 | 264.211 KB | N/A | 40.67 % |

| HashSet | 2.191 ms | 2.024 ms | 7,462,586 | 8.000 KB | Base | 0.00 % |

--- Scale 100,000 ---------------------------------------------------------------- Time 0.758 ---

| Dictionary | 12.033 ms | 11.333 ms | 40,839,598 | 8.000 KB | N/A | 48.66 % |

| GroupBySkipAny | 12.821 ms | 12.616 ms | 43,599,424 | 2.008 MB | N/A | 45.30 % |

| GroupByCount | 12.903 ms | 12.643 ms | 43,879,671 | 2.008 MB | N/A | 44.95 % |

| HashSet | 23.438 ms | 22.309 ms | 79,514,592 | 8.000 KB | Base | 0.00 % |

--- Scale 1,000,000 -------------------------------------------------------------- Time 6.687 ---

| Dictionary | 119.383 ms | 116.696 ms | 406,335,788 | 8.000 KB | N/A | 51.02 % |

| GroupBySkipAny | 134.819 ms | 131.747 ms | 458,589,071 | 16.008 MB | N/A | 44.68 % |

| GroupByCount | 139.834 ms | 132.961 ms | 476,092,342 | 16.008 MB | N/A | 42.63 % |

| HashSet | 243.722 ms | 239.580 ms | 829,409,546 | 8.000 KB | Base | 0.00 % |

--- Scale 10,000,000 ------------------------------------------------------------- Time 8.579 ---

| Dictionary | 1,237.373 ms | 1,213.387 ms | 4,203,352,967 | 8.000 KB | N/A | 49.48 % |

| GroupByCount | 1,404.209 ms | 1,385.300 ms | 4,778,762,566 | 256.009 MB | N/A | 42.67 % |

| GroupBySkipAny | 1,423.058 ms | 1,384.701 ms | 4,838,714,809 | 256.009 MB | N/A | 41.90 % |

| HashSet | 2,449.190 ms | 2,381.713 ms | 8,334,623,472 | 8.000 KB | Base | 0.00 % |

--- Scale 100,000,000 ----------------------------------------------------------- Time 59.573 ---

| Dictionary | 12,126.807 ms | 11,692.415 ms | 41,233,771,464 | 8.000 KB | N/A | 49.00 % |

| GroupByCount | 13,289.256 ms | 13,062.683 ms | 45,292,203,200 | 2.000 GB | N/A | 44.12 % |

| GroupBySkipAny | 13,760.635 ms | 13,261.366 ms | 46,825,002,767 | 2.000 GB | N/A | 42.13 % |

| HashSet | 23,780.270 ms | 22,785.622 ms | 80,971,187,805 | 8.000 KB | Base | 0.00 % |

-------------------------------------------------------------------------------------------------

测试 3

--- Random number between 0 and 10 ------------------------------------------------------------

| Value | Average | Fastest | Cycles | Garbage | Test | Gain |

--- Scale 10,000 --------------------------------------------------------------- Time 0.224 ---

| Dictionary | 0.397 ms | 0.387 ms | 1,349,447 | 8.000 KB | N/A | 50.59 % |

| GroupBySkipAny | 0.495 ms | 0.490 ms | 1,683,949 | 200.563 KB | N/A | 38.38 % |

| GroupByCount | 0.506 ms | 0.503 ms | 1,722,584 | 200.563 KB | N/A | 36.97 % |

| HashSet | 0.803 ms | 0.786 ms | 2,734,083 | 8.000 KB | Base | 0.00 % |

--- Scale 100,000 -------------------------------------------------------------- Time 0.459 ---

| Dictionary | 3.929 ms | 3.767 ms | 13,387,884 | 8.000 KB | N/A | 48.65 % |

| GroupBySkipAny | 5.195 ms | 4.873 ms | 17,699,816 | 2.510 MB | N/A | 32.09 % |

| GroupByCount | 5.233 ms | 4.904 ms | 17,825,215 | 2.510 MB | N/A | 31.60 % |

| HashSet | 7.650 ms | 7.444 ms | 26,081,151 | 8.000 KB | Base | 0.00 % |

--- Scale 1,000,000 ------------------------------------------------------------ Time 3.416 ---

| Dictionary | 39.934 ms | 38.031 ms | 136,107,565 | 8.000 KB | N/A | 47.61 % |

| GroupBySkipAny | 52.159 ms | 50.011 ms | 177,797,622 | 20.011 MB | N/A | 31.57 % |

| GroupByCount | 54.562 ms | 49.745 ms | 185,883,905 | 20.011 MB | N/A | 28.42 % |

| HashSet | 76.221 ms | 73.899 ms | 259,702,109 | 8.000 KB | Base | 0.00 % |

--- Scale 10,000,000 ---------------------------------------------------------- Time 33.643 ---

| Dictionary | 396.948 ms | 381.873 ms | 1,352,809,995 | 7.035 KB | N/A | 47.82 % |

| GroupByCount | 519.931 ms | 515.210 ms | 1,771,927,979 | 160.012 MB | N/A | 31.66 % |

| GroupBySkipAny | 537.953 ms | 516.127 ms | 1,833,424,578 | 160.013 MB | N/A | 29.29 % |

| HashSet | 760.781 ms | 751.582 ms | 2,592,592,185 | 8.000 KB | Base | 0.00 % |

--- Scale 100,000,000 --------------------------------------------------------- Time 43.701 ---

| Dictionary | 3,945.544 ms | 3,845.146 ms | 13,442,361,467 | 8.000 KB | N/A | 49.08 % |

| GroupByCount | 5,666.408 ms | 5,501.203 ms | 19,301,260,141 | 2.500 GB | N/A | 26.87 % |

| GroupBySkipAny | 5,688.156 ms | 5,536.729 ms | 19,370,101,611 | 2.500 GB | N/A | 26.59 % |

| HashSet | 7,748.656 ms | 7,605.495 ms | 26,399,373,179 | 7.315 KB | Base | 0.00 % |

-----------------------------------------------------------------------------------------------

测试 4

--- All Duplicates ----------------------------------------------------------------------------

| Value | Average | Fastest | Cycles | Garbage | Test | Gain |

--- Scale 10,000 --------------------------------------------------------------- Time 0.957 ---

| Dictionary | 0.444 ms | 0.425 ms | 1,508,780 | 8.000 KB | N/A | 50.55 % |

| GroupBySkipAny | 0.561 ms | 0.543 ms | 1,912,069 | 264.211 KB | N/A | 37.44 % |

| GroupByCount | 0.566 ms | 0.544 ms | 1,927,506 | 264.211 KB | N/A | 36.93 % |

| HashSet | 0.897 ms | 0.876 ms | 3,058,602 | 8.000 KB | Base | 0.00 % |

--- Scale 100,000 -------------------------------------------------------------- Time 0.341 ---

| Dictionary | 4.342 ms | 4.195 ms | 14,764,603 | 8.000 KB | N/A | 56.79 % |

| GroupBySkipAny | 6.090 ms | 5.437 ms | 20,636,444 | 2.008 MB | N/A | 39.38 % |

| GroupByCount | 6.327 ms | 5.812 ms | 21,478,886 | 2.008 MB | N/A | 37.03 % |

| HashSet | 10.047 ms | 8.627 ms | 34,243,915 | 8.000 KB | Base | 0.00 % |

--- Scale 1,000,000 ------------------------------------------------------------ Time 2.962 ---

| Dictionary | 45.242 ms | 42.814 ms | 154,054,415 | 8.000 KB | N/A | 54.02 % |

| GroupBySkipAny | 58.574 ms | 53.289 ms | 199,411,629 | 16.008 MB | N/A | 40.47 % |

| GroupByCount | 63.450 ms | 54.705 ms | 215,792,787 | 16.008 MB | N/A | 35.52 % |

| HashSet | 98.396 ms | 85.450 ms | 335,093,581 | 8.000 KB | Base | 0.00 % |

--- Scale 10,000,000 ---------------------------------------------------------- Time 28.309 ---

| Dictionary | 432.955 ms | 424.321 ms | 1,474,860,339 | 8.000 KB | N/A | 49.97 % |

| GroupByCount | 600.265 ms | 581.515 ms | 2,044,282,844 | 256.009 MB | N/A | 30.64 % |

| GroupBySkipAny | 603.112 ms | 581.099 ms | 2,054,976,446 | 256.009 MB | N/A | 30.31 % |

| HashSet | 865.449 ms | 854.386 ms | 2,949,024,388 | 8.000 KB | Base | 0.00 % |

--- Scale 100,000,000 --------------------------------------------------------- Time 38.508 ---

| Dictionary | 4,394.937 ms | 4,261.656 ms | 14,973,292,181 | 8.000 KB | N/A | 50.11 % |

| GroupBySkipAny | 5,799.055 ms | 5,718.249 ms | 19,758,314,574 | 2.000 GB | N/A | 34.16 % |

| GroupByCount | 5,909.234 ms | 5,781.676 ms | 20,126,526,198 | 2.000 GB | N/A | 32.91 % |

| HashSet | 8,808.441 ms | 8,617.298 ms | 30,010,947,763 | 8.000 KB | Base | 0.00 % |

-----------------------------------------------------------------------------------------------

算法

字典

public class Dictionary : Benchmark<List<string>, List<string>>

{

public static IEnumerable<string> GetDistinctDuplicates(IEnumerable<string> original)

{

var dict = new Dictionary<string, bool>();

foreach (var s in original)

if (dict.TryGetValue(s, out var isReturnedDupl))

{

if (isReturnedDupl)

continue;

dict[s] = true;

yield return s;

}

else

dict.Add(s, false);

}

protected override List<string> InternalRun()

{

return GetDistinctDuplicates(Input).ToList();

}

}

GroupByCount

public class GroupBy : Benchmark<List<string>, List<string>>

{

protected override List<string> InternalRun()

{

return Input.GroupBy(x => x)

.Where(g => g.Count() > 1)

.Select(y => y.Key)

.ToList();

}

}

GroupBySkipAny Enigmativity

public class GroupBy2 : Benchmark<List<string>, List<string>>

{

protected override List<string> InternalRun()

{

return Input.GroupBy(x => x)

.Where(g => g.Count() > 1)

.Select(y => y.Key)

.ToList();

}

}

摘要

Dictionary 是最快的,GroupBy 总是表现良好,Enigmativitys 版本的 Skip 和 Any 稍微快一些,而由于某种原因,Hashset 总是慢35-50%。然而,在较小的规模下情况就不那么确定了。尽管在给定的测试数据和测试中其它答案的基准测试结果中,这些结果看起来相当明显,但我有一种感觉,可能还有其他因素影响了测试结果。

总之,祝您搜索重复项愉快!

- TheGeneral

15

@galakt 基本上就像上面所述,不过这是我的自己的基准库,目前还没有公开。但是如果你想要进行自己的基准测试,可以使用 https://github.com/dotnet/BenchmarkDotNet 这个工具,它是一个非常好用的工具。 - TheGeneral

我指的不仅是结果,而且测试方法的代码也应该更加优美。 - galakt

我通过BenchmarkDotNet进行了测试,并得到了相反的结果。 - galakt

确保你在发布模式下运行 - TheGeneral

一切都很好。对于100到100000的列表,使用groupby在我的基准测试中都获得了胜利,这是在每次运行之前进行10个字符的随机字符串和100个测试以及Gc的64位版本中完成的。明天我有时间时,我会在相同的数据和设置上将其与Benchmark.Net进行比较测试,因为我非常感兴趣。 - TheGeneral

显示剩余10条评论

5

没有LINQ,纯代码

获取所有重复项

public static IEnumerable<string> GetDuplicates(IEnumerable<string> original)

{

var hs = new HashSet<string>();

foreach (var item in original)

{

if (!hs.Add(item))

{

yield return item;

}

}

}

获取不同的重复项

public static IEnumerable<string> GetDistinctDuplicates(IEnumerable<string> original)

{

var dict = new Dictionary<string, bool>();

foreach (var s in original)

{

// If found duplicate

if (dict.TryGetValue(s, out var isReturnedDupl))

{

// If already returned

if (isReturnedDupl)

{

continue;

}

dict[s] = true;

yield return s;

}

else

{

// First meet

dict.Add(s, false);

}

}

}

更新:添加bench

更新2:更新bench源码,bench结果

更新3:将MemoryDiagnoser添加到bench和其他算法中

TheGeneral bench和我的bench的区别

| TheGeneral | My bench |

---------- |-------------------- |----------------------- |

Used tool | Own benchmark lib | BenchmarkDotNet |

Input | Random string cases | Corner cases |

算法

- HashSet(GetDuplicates)

- Dict(GetDistinctDuplicates)

- GroupBy(TheGeneral 方法)

- GroupByWithSkip(Enigmativity 方法)

使用默认配置 BenchmarkDotNet 进行基准测试

输入

- AllDupl - 当集合只包含相同字符串时的边缘情况

- NoDupl - 当集合只包含唯一字符串时的边缘情况

Ini:

BenchmarkDotNet=v0.10.14, OS=Windows 10.0.17134

Intel Core i7-4710HQ CPU 2.50GHz (Haswell), 1 CPU, 8 logical and 4 physical cores

[Host] : .NET Framework 4.6.2 (CLR 4.0.30319.42000), 32bit LegacyJIT-v4.7.3101.0

DefaultJob : .NET Framework 4.6.2 (CLR 4.0.30319.42000), 32bit LegacyJIT-v4.7.3101.0

传说

input : Value of the 'input' parameter

description : Value of the 'description' parameter

Mean : Arithmetic mean of all measurements

Error : Half of 99.9% confidence interval

StdDev : Standard deviation of all measurements

Gen 0 : GC Generation 0 collects per 1k Operations

Gen 1 : GC Generation 1 collects per 1k Operations

Gen 2 : GC Generation 2 collects per 1k Operations

Allocated : Allocated memory per single operation (managed only, inclusive, 1KB = 1024B)

1 us : 1 Microsecond (0.000001 sec)

结果

Method | input | description | Mean | Error | StdDev | Gen 0 | Gen 1 | Gen 2 | Allocated |

---------------- |-------------- |-------------------------- |-------------:|------------:|------------:|----------:|----------:|---------:|-----------:|

--------------------------------------------------------------------------------------------------------------------------------------------------------

GroupBy | Array[1000] | AllDupl, Count=1000 | 43.62 us | 0.6882 us | 0.5747 us | 2.6855 | - | - | 8606 B |

GroupByWithSkip | Array[1000] | AllDupl, Count=1000 | 42.86 us | 0.3188 us | 0.2826 us | 2.7466 | - | - | 8669 B |

HashSet | Array[1000] | AllDupl, Count=1000 | 72.54 us | 0.3339 us | 0.3124 us | 0.1221 | - | - | 429 B |

Dict | Array[1000] | AllDupl, Count=1000 | 35.44 us | 0.1953 us | 0.1731 us | 0.0610 | - | - | 244 B |

GroupBy | Array[1000] | NoDupl, Count=1000 | 110.13 us | 0.2874 us | 0.2548 us | 17.8223 | - | - | 56408 B |

GroupByWithSkip | Array[1000] | NoDupl, Count=1000 | 153.37 us | 0.6419 us | 0.5690 us | 38.0859 | - | - | 120411 B |

HashSet | Array[1000] | NoDupl, Count=1000 | 49.50 us | 0.1833 us | 0.1715 us | 18.4937 | - | - | 58672 B |

Dict | Array[1000] | NoDupl, Count=1000 | 71.20 us | 0.1944 us | 0.1723 us | 23.0713 | - | - | 73027 B |

--------------------------------------------------------------------------------------------------------------------------------------------------------

GroupBy | Array[10000] | AllDupl, Count=10000 | 431.36 us | 1.8869 us | 1.7650 us | 41.5039 | - | - | 131580 B |

GroupByWithSkip | Array[10000] | AllDupl, Count=10000 | 431.19 us | 1.3593 us | 1.2050 us | 41.5039 | - | - | 131680 B |

HashSet | Array[10000] | AllDupl, Count=10000 | 721.68 us | 2.0894 us | 1.7448 us | - | - | - | 432 B |

Dict | Array[10000] | AllDupl, Count=10000 | 357.74 us | 1.3790 us | 1.2899 us | - | - | - | 248 B |

GroupBy | Array[10000] | NoDupl, Count=10000 | 1,316.20 us | 8.2301 us | 7.6984 us | 119.1406 | 29.2969 | - | 611337 B |

GroupByWithSkip | Array[10000] | NoDupl, Count=10000 | 1,908.77 us | 15.7756 us | 14.7565 us | 203.1250 | 97.6563 | - | 1251339 B |

HashSet | Array[10000] | NoDupl, Count=10000 | 696.26 us | 0.9738 us | 0.6441 us | 94.7266 | 94.7266 | 94.7266 | 538736 B |

Dict | Array[10000] | NoDupl, Count=10000 | 954.53 us | 1.9645 us | 1.8376 us | 124.0234 | 124.0234 | 124.0234 | 673164 B |

--------------------------------------------------------------------------------------------------------------------------------------------------------

GroupBy | Array[100000] | AllDupl, Count=100000 | 4,767.00 us | 17.4749 us | 16.3461 us | 281.2500 | 281.2500 | 281.2500 | 1051088 B |

GroupByWithSkip | Array[100000] | AllDupl, Count=100000 | 4,742.72 us | 22.8393 us | 21.3639 us | 281.2500 | 281.2500 | 281.2500 | 1051088 B |

HashSet | Array[100000] | AllDupl, Count=100000 | 7,247.34 us | 12.4036 us | 11.6023 us | - | - | - | 448 B |

Dict | Array[100000] | AllDupl, Count=100000 | 3,557.70 us | 14.2987 us | 11.1635 us | - | - | - | 256 B |

GroupBy | Array[100000] | NoDupl, Count=100000 | 31,044.51 us | 371.9730 us | 347.9438 us | 1125.0000 | 750.0000 | 312.5000 | 5849142 B |

GroupByWithSkip | Array[100000] | NoDupl, Count=100000 | 42,374.47 us | 306.7762 us | 286.9586 us | 2187.5000 | 1187.5000 | 500.0000 | 12249704 B |

HashSet | Array[100000] | NoDupl, Count=100000 | 7,497.73 us | 54.6210 us | 51.0925 us | 656.2500 | 593.7500 | 593.7500 | 4830548 B |

Dict | Array[100000] | NoDupl, Count=100000 | 9,825.25 us | 51.1668 us | 45.3581 us | 1062.5000 | 984.3750 | 984.3750 | 6042773 B |

摘要

对于 GroupBy , HashSet 和 Dict 的性能非常依赖于输入。如果输入只包含重复项- 字典更好。但是当输入仅包含唯一值时, HashSet 更好。对于随机输入(例如TheGeneral代码中的输入), GroupBy 比 HashSet 效果更好。还要注意,仅具有唯一值的输入真正是 GroupBy 最糟糕的情况,会严重影响性能。

分配和GC是结果中真正有趣的列...

因此,什么更好取决于输入中重复项的数量,输入的总计数以及您的目标

来源

class Program

{

static void Main(string[] args)

{

var conf = ManualConfig.Create(DefaultConfig.Instance);

conf.Add(MemoryDiagnoser.Default);

var summary = BenchmarkRunner.Run<DuplicateFindBench>(conf);

}

}

public class DuplicateFindBench

{

[Benchmark]

[ArgumentsSource(nameof(Data))]

public List<string> GroupBy(string[] input, string description)

{

return input.GroupBy(x => x)

.Where(g => g.Count() > 1)

.Select(y => y.Key)

.ToList();

}

[Benchmark]

[ArgumentsSource(nameof(Data))]

public List<string> GroupByWithSkip(string[] input, string description)

{

return input.GroupBy(x => x)

.Where(g => g.Skip(1).Any())

.Select(y => y.Key)

.ToList();

}

[Benchmark]

[ArgumentsSource(nameof(Data))]

public List<string> HashSet(string[] input, string description)

{

return GetDuplicates(input).Distinct().ToList();

}

[Benchmark]

[ArgumentsSource(nameof(Data))]

public List<string> Dict(string[] input, string description)

{

return GetDistinctDuplicates(input).ToList();

}

public static IEnumerable<string> GetDuplicates(IEnumerable<string> original)

{

var hs = new HashSet<string>();

foreach (var item in original)

{

if (!hs.Add(item))

{

yield return item;

}

}

}

public static IEnumerable<string> GetDistinctDuplicates(IEnumerable<string> original)

{

var dict = new Dictionary<string, bool>();

foreach (var s in original)

{

// If found duplicate

if (dict.TryGetValue(s, out var isReturnedDupl))

{

// If already returned

if (isReturnedDupl)

{

continue;

}

dict[s] = true;

yield return s;

}

else

{

// First meet

dict.Add(s, false);

}

}

}

public IEnumerable<object[]> Data()

{

const int count1 = 1000;

yield return new object[] { ArrayParam<string>.ForPrimitives(CreateNoDuplInput(count1).ToArray()), $"NoDupl, Count={count1}" };

yield return new object[] { ArrayParam<string>.ForPrimitives(CreateAllDuplInput(count1).ToArray()), $"AllDupl, Count={count1}" };

const int count2 = 10_000;

yield return new object[] { ArrayParam<string>.ForPrimitives(CreateNoDuplInput(count2).ToArray()), $"NoDupl, Count={count2}" };

yield return new object[] { ArrayParam<string>.ForPrimitives(CreateAllDuplInput(count2).ToArray()), $"AllDupl, Count={count2}" };

const int count3 = 100_000;

yield return new object[] { ArrayParam<string>.ForPrimitives(CreateNoDuplInput(count3).ToArray()), $"NoDupl, Count={count3}" };

yield return new object[] { ArrayParam<string>.ForPrimitives(CreateAllDuplInput(count3).ToArray()), $"AllDupl, Count={count3}" };

}

public List<string> CreateNoDuplInput(int inputSize)

{

var result = new List<string>();

for (int i = 0; i < inputSize; i++)

{

result.Add(i.ToString());

}

return result;

}

public List<string> CreateAllDuplInput(int inputSize)

{

var result = new List<string>();

for (int i = 0; i < inputSize; i++)

{

result.Add("duplicate value");

}

return result;

}

}

- galakt

2

结果从来没有讲得通,我唯一能够让哈希集在任何环境下都是最快的方法就是使用有限的数据集 https://dotnetfiddle.net/eqGjlg,如果你只是在你的电脑上运行 .net fiddle 代码,你会得到什么?抱歉,我正在摆弄代码。 - TheGeneral

@TheGeneral 看起来你完全忽略了主要的点 :)(取决于输入集的多样性)请检查这个稍微修改过的小提琴 https://dotnetfiddle.net/3YgUYC - galakt

2

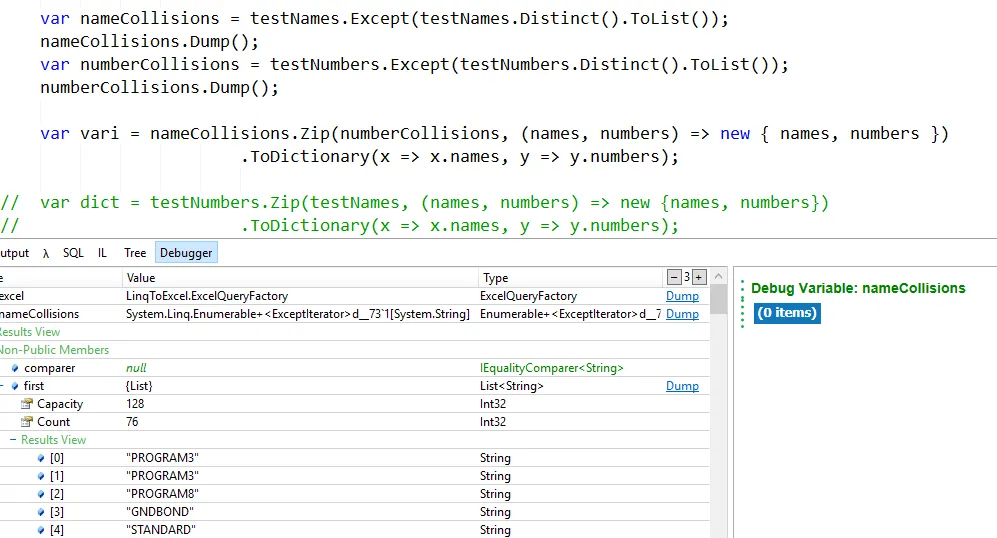

在linq或SQL中,您必须使用

但我不明白你想要的结果是什么,这个答案总是返回空列表。

EXCEPT而不是像下面这样的减号:var namesCollisions = test.Except(test.Distinct().ToList());

但我不明白你想要的结果是什么,这个答案总是返回空列表。

- Hasan Gholamali

7

编译没有问题,但是在名为namesCollisions的列表中有两个重复项...它似乎没有起作用 @hasangholamali - Kelly Kleinknecht

你能否提供你的数据和你想要得到的结果? - Hasan Gholamali

我将调试信息添加到原始问题中。请注意2x PROGRAM3和转储为空。@hasangholamali - Kelly Kleinknecht

1这将始终产生一个空枚举,因为这就像是在说“我想要从一到十的所有数字,除了从一到十的所有不同数字”。 - Streamline

1如果你想提供一个空列表(这就是它的全部作用),那么

new List<string>() 就会简单得多... - mjwills显示剩余2条评论

网页内容由stack overflow 提供, 点击上面的可以查看英文原文,

原文链接

原文链接