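I'm writing code that generates a slideshow video on an iOS device from multiple images and multiple videos. I've managed to do this with a single video and multiple images, but I can't figure out how to extend it to multiple videos.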

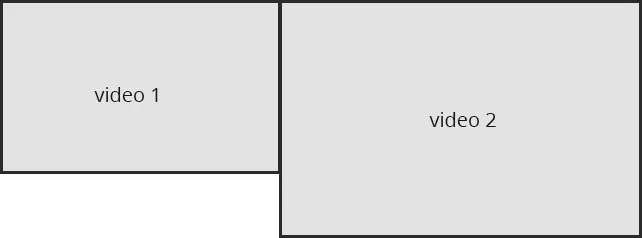

Here is a sample video I was able to generate with one video and two images.

Here is the main routine, which prepares the exporter.


```objc
// Prepare the temporary location to store generated video
NSURL * urlAsset = [NSURL fileURLWithPath:[StoryMaker tempFilePath:@"mov"]];

// Prepare composition and _exporter
AVMutableComposition *composition = [AVMutableComposition composition];
AVAssetExportSession* exporter = [[AVAssetExportSession alloc] initWithAsset:composition presetName:AVAssetExportPresetHighestQuality];
exporter.outputURL = urlAsset;
exporter.outputFileType = AVFileTypeQuickTimeMovie;
exporter.shouldOptimizeForNetworkUse = YES;
exporter.videoComposition = [self _addVideo:composition time:timeVideo];
```

Here is the `_addVideo:time:` method, which creates the videoLayer.

```objc
-(AVVideoComposition*) _addVideo:(AVMutableComposition*)composition time:(CMTime)timeVideo {
    AVMutableVideoComposition* videoComposition = [AVMutableVideoComposition videoComposition];
    videoComposition.renderSize = _sizeVideo;
    videoComposition.frameDuration = CMTimeMake(1,30); // 30fps

    AVMutableCompositionTrack *compositionVideoTrack = [composition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];
    [compositionVideoTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero,timeVideo) ofTrack:_baseVideoTrack atTime:kCMTimeZero error:nil];

    // Prepare the parent layer
    CALayer *parentLayer = [CALayer layer];
    parentLayer.backgroundColor = [UIColor blackColor].CGColor;
    parentLayer.frame = CGRectMake(0, 0, _sizeVideo.width, _sizeVideo.height);

    // Prepare images parent layer
    CALayer *imageParentLayer = [CALayer layer];
    imageParentLayer.frame = CGRectMake(0, 0, _sizeVideo.width, _sizeVideo.height);
    [parentLayer addSublayer:imageParentLayer];

    // Specify the perspective view
    CATransform3D perspective = CATransform3DIdentity;
    perspective.m34 = -1.0 / imageParentLayer.frame.size.height;
    imageParentLayer.sublayerTransform = perspective;

    // Animations
    // Core Animation treats a beginTime of exactly 0 as "now", so a tiny non-zero value marks the start of the video
    _beginTime = 1E-10;
    _endTime = CMTimeGetSeconds(timeVideo);
    CALayer* videoLayer = [self _addVideoLayer:imageParentLayer];
    [self _addAnimations:imageParentLayer time:timeVideo];
    videoComposition.animationTool = [AVVideoCompositionCoreAnimationTool videoCompositionCoreAnimationToolWithPostProcessingAsVideoLayer:videoLayer inLayer:parentLayer];

    // Prepare the instruction
    AVMutableVideoCompositionInstruction *instruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];
    {
        instruction.timeRange = CMTimeRangeMake(kCMTimeZero, timeVideo);
        AVAssetTrack *videoTrack = [[composition tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];
        AVMutableVideoCompositionLayerInstruction* layerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:videoTrack];
        [layerInstruction setTransform:_baseVideoTrack.preferredTransform atTime:kCMTimeZero];
        instruction.layerInstructions = @[layerInstruction];
    }
    videoComposition.instructions = @[instruction];

    return videoComposition;
}
```

The `_addAnimations:time:` method adds the image layers and schedules the animations of all layers, including `_videoLayer`.
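The `_beginTime = 1E-10` in `_addVideo:time:` matters for these animations. Core Animation replaces a `beginTime` of exactly 0 with the current host time, so an animation meant to start at the first frame of the exported video needs a tiny positive value instead; AVFoundation provides `AVCoreAnimationBeginTimeAtZero` for the same purpose. Below is a minimal plain-C sketch of that mapping, assuming times are tracked in seconds on the video timeline. `animation_begin_time` and `TIMELINE_ZERO_EPSILON` are hypothetical names used only for illustration.

```c
#include <assert.h>

/* Hypothetical constant mirroring _beginTime = 1E-10 in the original code.
   A beginTime of exactly 0 would be replaced by Core Animation with "now". */
#define TIMELINE_ZERO_EPSILON 1e-10

/* Maps a position on the video timeline (seconds) to a value that is safe
   to assign to CAAnimation.beginTime inside the layer tree handed to
   AVVideoCompositionCoreAnimationTool. Only an exact 0 is nudged. */
double animation_begin_time(double timeline_seconds)
{
    assert(timeline_seconds >= 0.0);   /* negative times have no meaning here */
    return timeline_seconds > 0.0 ? timeline_seconds : TIMELINE_ZERO_EPSILON;
}
```

Later start times pass through unchanged. Animations in such a layer tree typically also need `removedOnCompletion = NO` so they are not discarded before the export finishes rendering.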

Everything works fine so far.

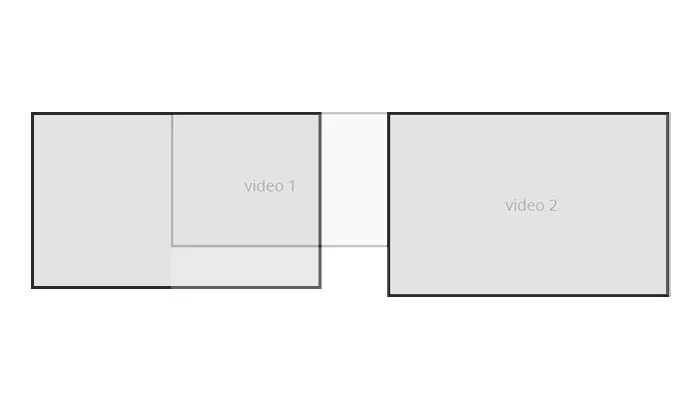

However, I can't figure out how to add a second video to this slideshow.

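The example in the AVFoundation Programming Guide combines two videos using multiple video composition instructions (`AVMutableVideoCompositionInstruction`), but it simply renders them into the single CALayer object specified in the `videoCompositionCoreAnimationToolWithPostProcessingAsVideoLayer:inLayer:` method of `AVVideoCompositionCoreAnimationTool`.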
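In the guide's approach, each clip gets its own time slot. The second clip is inserted where the first one ends, and each `AVMutableVideoCompositionInstruction` covers exactly that slot, so the instructions tile the timeline without gaps or overlaps. The bookkeeping is sketched below in plain C, which compiles unchanged in an Objective-C file. It uses seconds instead of CMTime to stay short. `ClipRange` and `layout_clips` are hypothetical names, not AVFoundation API.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical type: where one clip lands on the composition timeline. */
typedef struct {
    double start;     /* seconds; the atTime: argument of insertTimeRange:ofTrack:atTime:error: */
    double duration;  /* seconds; length of the inserted range and of its instruction */
} ClipRange;

/* Places clips back to back, writing one ClipRange per clip into out,
   and returns the total timeline length (the role timeVideo plays for
   the whole slideshow). */
double layout_clips(const double *durations, size_t count, ClipRange *out)
{
    double cursor = 0.0;
    for (size_t i = 0; i < count; i++) {
        assert(durations[i] > 0.0);   /* a zero-length clip would yield an empty instruction */
        out[i].start = cursor;
        out[i].duration = durations[i];
        cursor += durations[i];
    }
    return cursor;
}
```

Each `start`/`duration` pair would become both the insertion time and the instruction's `timeRange`, for example via `CMTimeRangeMake(CMTimeMakeWithSeconds(r.start, 600), CMTimeMakeWithSeconds(r.duration, 600))`. In real code, accumulating CMTime values with `CMTimeAdd` avoids floating-point drift.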

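I would like to render the two video tracks into two separate layers (layer1 and layer2) and animate each of them independently, just as I do with the layers associated with the images.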
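Scheduling layer1 and layer2 independently would work the same way as for the image layers. Each layer gets its own opacity animation spanning the full video duration. The helper below is a plain-C sketch, valid in an Objective-C file, that computes `keyTimes` and opacity `values` for one such `CAKeyframeAnimation`. `visibility_keyframes` and `VISIBILITY_KEYFRAMES` are hypothetical names. It only covers the scheduling half of the problem. As far as I can tell from the AVFoundation header, `videoCompositionCoreAnimationToolWithPostProcessingAsVideoLayers:inLayer:` (iOS 7+) duplicates the same composited frame into every layer in the array, so it does not by itself route track 1 to layer1 and track 2 to layer2.

```c
#include <assert.h>

/* Number of keyframes produced by visibility_keyframes(). */
enum { VISIBILITY_KEYFRAMES = 6 };

/* Computes keyTimes and opacity values for one CAKeyframeAnimation on the
   "opacity" key path whose duration equals the whole video (total seconds).
   The layer is transparent until start, fades in over `fade` seconds,
   stays opaque, and is fully transparent again at end.
   keyTimes are fractions in [0, 1] and come out non-decreasing, as
   CAKeyframeAnimation requires. Hypothetical helper, not an Apple API. */
void visibility_keyframes(double start, double end, double fade, double total,
                          double key_times[VISIBILITY_KEYFRAMES],
                          double opacities[VISIBILITY_KEYFRAMES])
{
    assert(total > 0.0);
    assert(fade >= 0.0 && start >= 0.0 && end <= total);
    assert(start + fade <= end - fade);   /* fade-in and fade-out must not overlap */

    const double seconds[VISIBILITY_KEYFRAMES] =
        { 0.0, start, start + fade, end - fade, end, total };
    const double alpha[VISIBILITY_KEYFRAMES] =
        { 0.0, 0.0,   1.0,          1.0,        0.0, 0.0 };

    for (int i = 0; i < VISIBILITY_KEYFRAMES; i++) {
        key_times[i] = seconds[i] / total;   /* normalize to the animation duration */
        opacities[i] = alpha[i];
    }
}
```

Assuming two 4-second clips in an 8-second video, layer1 would use `visibility_keyframes(0.0, 4.0, 0.5, 8.0, ...)` and layer2 `visibility_keyframes(4.0, 8.0, 0.5, 8.0, ...)`. The results are wrapped in NSNumber arrays for `keyTimes`/`values`, with `duration` set to the total, `beginTime` set to `AVCoreAnimationBeginTimeAtZero`, and `removedOnCompletion = NO`.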