In TensorFlow, I want to rotate an image by a random angle for data augmentation, but I can't find this transformation in the tf.image module.

TensorFlow: How to rotate an image for data augmentation?

16

- Mostafa

3

If a new op is needed, please file it as a TensorFlow issue and cross-link this question and the issue. - Guy Coder

You can use tf.transpose followed by tf.image.flip_left_right to rotate by 90 degrees. - mdaoust

As you found in the answer below, this is now implemented in TensorFlow. You may want to change the accepted answer to that one. - Honeybear
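mdaoust's 90-degree trick can be checked without a TensorFlow install. The sketch below uses NumPy as a stand-in: np.transpose(image, (1, 0, 2)) plays the role of tf.transpose(image, [1, 0, 2]) on an [height, width, channels] image, and np.flip(..., axis=1) that of tf.image.flip_left_right. The helper name rotate90_clockwise is hypothetical.

```python
import numpy as np

def rotate90_clockwise(image):
    """Rotate an HWC image by 90 degrees clockwise: swap height/width, then mirror left-right."""
    transposed = np.transpose(image, (1, 0, 2))  # like tf.transpose(image, [1, 0, 2])
    return np.flip(transposed, axis=1)           # like tf.image.flip_left_right

img = np.arange(6).reshape(2, 3, 1)  # 2 x 3 single-channel image
out = rotate90_clockwise(img)
print(out[..., 0].tolist())  # [[3, 0], [4, 1], [5, 2]]
```

Doing the left-right flip first and the transpose second gives the counter-clockwise rotation instead.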

8 answers

34

This can now be done in TensorFlow:

tf.contrib.image.rotate(images, degrees * math.pi / 180, interpolation='BILINEAR')

- Andrew Stromme

3

How can I apply a different angle to each image in a batch? - MarStarck

The tf.contrib package is no longer available in TensorFlow 2.0; this function has moved to tfa.image.rotate. - Pradeep Singh

"tfa" stands for tensorflow_addons. - problemofficer - n.f. Monica
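On MarStarck's question: tf.contrib.image.rotate and tfa.image.rotate both document that, for a 4-D batch, the angles argument may be a 1-D tensor holding one angle (in radians) per image, so a different angle per image only requires sampling a vector. The NumPy sketch below draws such a vector; the helper name random_rotation_angles and the ±30° range are illustrative assumptions.

```python
import math

import numpy as np

def random_rotation_angles(batch_size, max_degrees, seed=None):
    """Draw one angle per image, uniform in [-max_degrees, max_degrees), returned in radians."""
    rng = np.random.default_rng(seed)
    degrees = rng.uniform(-max_degrees, max_degrees, size=batch_size)
    # Same degree-to-radian conversion as in the answer: degrees * pi / 180
    return degrees * math.pi / 180

angles = random_rotation_angles(batch_size=4, max_degrees=30, seed=0)
print(angles.shape)  # (4,)
```

The resulting array (cast to float32) could then be passed as the angles argument, e.g. tfa.image.rotate(images, angles) for a batch of four images; that call is not executed here.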

11

Because I wanted to be able to rotate tensors, I came up with the following snippet, which rotates a [height, width, depth] tensor by a given angle:

    import numpy as np
    import tensorflow as tf

    # Written for TensorFlow < 0.12 (tf.pack, tf.split(split_dim, num_split, value)).
    def rotate_image_tensor(image, angle, mode='black'):
        """
        Rotates a 3D tensor (HWD), which represents an image by given radian angle.

        New image has the same size as the input image.

        mode controls what happens to border pixels.
        mode = 'black' results in black bars (value 0 in unknown areas)
        mode = 'white' results in value 255 in unknown areas
        mode = 'ones' results in value 1 in unknown areas
        mode = 'repeat' keeps repeating the closest pixel known
        """
        s = image.get_shape().as_list()
        assert len(s) == 3, "Input needs to be 3D."
        assert (mode == 'repeat') or (mode == 'black') or (mode == 'white') or (mode == 'ones'), "Unknown boundary mode."
        image_center = [np.floor(x/2) for x in s]

        # Coordinates of new image
        coord1 = tf.range(s[0])
        coord2 = tf.range(s[1])

        # Create vectors of those coordinates in order to vectorize the image
        coord1_vec = tf.tile(coord1, [s[1]])

        coord2_vec_unordered = tf.tile(coord2, [s[0]])
        coord2_vec_unordered = tf.reshape(coord2_vec_unordered, [s[0], s[1]])
        coord2_vec = tf.reshape(tf.transpose(coord2_vec_unordered, [1, 0]), [-1])

        # center coordinates since rotation center is supposed to be in the image center
        coord1_vec_centered = coord1_vec - image_center[0]
        coord2_vec_centered = coord2_vec - image_center[1]

        coord_new_centered = tf.cast(tf.pack([coord1_vec_centered, coord2_vec_centered]), tf.float32)

        # Perform backward transformation of the image coordinates
        rot_mat_inv = tf.dynamic_stitch([[0], [1], [2], [3]], [tf.cos(angle), tf.sin(angle), -tf.sin(angle), tf.cos(angle)])
        rot_mat_inv = tf.reshape(rot_mat_inv, shape=[2, 2])
        coord_old_centered = tf.matmul(rot_mat_inv, coord_new_centered)

        # Find nearest neighbor in old image
        coord1_old_nn = tf.cast(tf.round(coord_old_centered[0, :] + image_center[0]), tf.int32)
        coord2_old_nn = tf.cast(tf.round(coord_old_centered[1, :] + image_center[1]), tf.int32)

        # Clip values to stay inside image coordinates
        if mode == 'repeat':
            coord_old1_clipped = tf.minimum(tf.maximum(coord1_old_nn, 0), s[0]-1)
            coord_old2_clipped = tf.minimum(tf.maximum(coord2_old_nn, 0), s[1]-1)
        else:
            outside_ind1 = tf.logical_or(tf.greater(coord1_old_nn, s[0]-1), tf.less(coord1_old_nn, 0))
            outside_ind2 = tf.logical_or(tf.greater(coord2_old_nn, s[1]-1), tf.less(coord2_old_nn, 0))
            outside_ind = tf.logical_or(outside_ind1, outside_ind2)

            coord_old1_clipped = tf.boolean_mask(coord1_old_nn, tf.logical_not(outside_ind))
            coord_old2_clipped = tf.boolean_mask(coord2_old_nn, tf.logical_not(outside_ind))

            coord1_vec = tf.boolean_mask(coord1_vec, tf.logical_not(outside_ind))
            coord2_vec = tf.boolean_mask(coord2_vec, tf.logical_not(outside_ind))

        coord_old_clipped = tf.cast(tf.transpose(tf.pack([coord_old1_clipped, coord_old2_clipped]), [1, 0]), tf.int32)

        # Coordinates of the new image
        coord_new = tf.transpose(tf.cast(tf.pack([coord1_vec, coord2_vec]), tf.int32), [1, 0])

        image_channel_list = tf.split(2, s[2], image)

        image_rotated_channel_list = list()
        for image_channel in image_channel_list:
            image_chan_new_values = tf.gather_nd(tf.squeeze(image_channel), coord_old_clipped)

            if (mode == 'black') or (mode == 'repeat'):
                background_color = 0
            elif mode == 'ones':
                background_color = 1
            elif mode == 'white':
                background_color = 255

            image_rotated_channel_list.append(tf.sparse_to_dense(coord_new, [s[0], s[1]], image_chan_new_values,
                                                                 background_color, validate_indices=False))

        image_rotated = tf.transpose(tf.pack(image_rotated_channel_list), [1, 2, 0])

        return image_rotated

- zimmermc

3

Looks cool and interesting! Could I suggest you try submitting this as a pull request to TensorFlow itself? Also, there is a TensorFlow issue for exactly this problem: https://github.com/tensorflow/tensorflow/issues/781 - Andrew Hundt

Sure, why not! - zimmermc

How would I modify this to perform a 3D rotation? - Ray.R.Chua
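The backward-mapping idea in zimmermc's answer (send each output pixel through the inverse rotation [[cos, sin], [-sin, cos]] about the image centre and take the nearest source pixel) can be prototyped on small arrays without TensorFlow. The NumPy sketch below is an assumed re-implementation for checking the math, not the answer's code; rotate_nearest is a hypothetical name, and it covers only the constant-fill modes ('black', 'white', 'ones'), with fill standing in for the background value.

```python
import numpy as np

def rotate_nearest(image, angle, fill=0):
    """Rotate an H x W (or H x W x C) array by `angle` radians about its centre.

    Backward mapping: each output pixel is sent through the inverse rotation to
    find its nearest source pixel; pixels whose source lies outside the input
    get the constant `fill`.
    """
    h, w = image.shape[:2]
    cy, cx = h // 2, w // 2  # same centre as np.floor(x / 2) in the answer
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    r, c = rows - cy, cols - cx

    # Inverse rotation matrix [[cos, sin], [-sin, cos]] applied to (r, c)
    src_r = np.cos(angle) * r + np.sin(angle) * c
    src_c = -np.sin(angle) * r + np.cos(angle) * c

    # Nearest neighbour: round back to integer pixel indices
    src_r = np.round(src_r + cy).astype(int)
    src_c = np.round(src_c + cx).astype(int)

    inside = (src_r >= 0) & (src_r < h) & (src_c >= 0) & (src_c < w)
    out = np.full_like(image, fill)
    out[inside] = image[src_r[inside], src_c[inside]]
    return out

img = np.arange(9).reshape(3, 3)
print(rotate_nearest(img, np.pi / 2))
```

With this sign convention, angle=np.pi/2 on the 3×3 array reproduces np.rot90(img), i.e. a counter-clockwise turn as the array is displayed.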

9

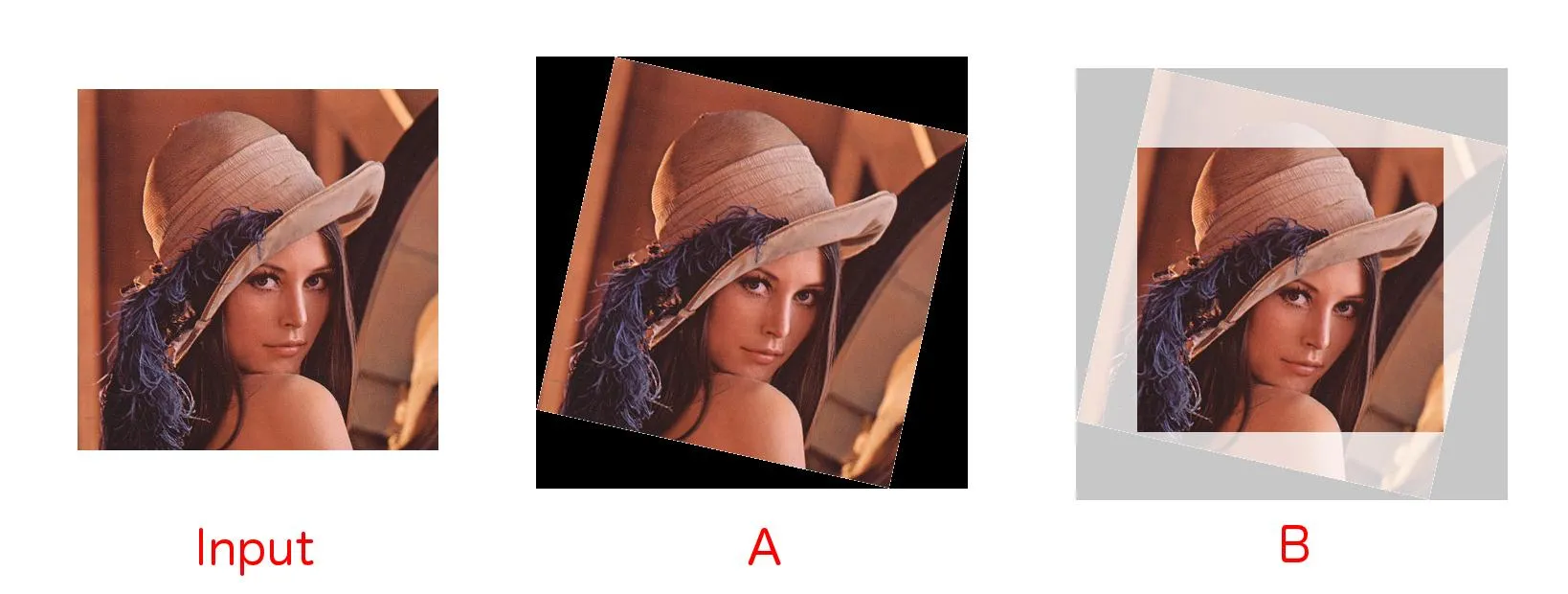

Rotation and cropping in TensorFlow

Personally, I needed a TensorFlow function that rotates an image and crops away the resulting black borders, as illustrated.

Here is how I implemented it.

    import math

    import tensorflow as tf

    def _rotate_and_crop(image, output_height, output_width, rotation_degree, do_crop):
        """Rotate the given image with the given rotation degree and crop for the black edges if necessary

        Args:
            image: A `Tensor` representing an image of arbitrary size.
            output_height: The height of the image after preprocessing.
            output_width: The width of the image after preprocessing.
            rotation_degree: The degree of rotation on the image.
            do_crop: Do cropping if it is True.

        Returns:
            A rotated image.
        """

        # Rotate the given image with the given rotation degree
        if rotation_degree != 0:
            image = tf.contrib.image.rotate(image, math.radians(rotation_degree), interpolation='BILINEAR')

        # Center crop to omit black noise on the edges
        if do_crop:
            # _largest_rotated_rect takes (w, h, angle) and returns (width, height)
            lrr_width, lrr_height = _largest_rotated_rect(output_width, output_height, math.radians(rotation_degree))
            resized_image = tf.image.central_crop(image, float(lrr_height)/output_height)
            image = tf.image.resize_images(resized_image, [output_height, output_width], method=tf.image.ResizeMethod.BILINEAR, align_corners=False)

        return image

    def _largest_rotated_rect(w, h, angle):
        """
        Given a rectangle of size wxh that has been rotated by 'angle' (in
        radians), computes the width and height of the largest possible
        axis-aligned rectangle within the rotated rectangle.

        Original JS code by 'Andri' and Magnus Hoff from Stack Overflow

        Converted to Python by Aaron Snoswell

        Source: https://dev59.com/wGQn5IYBdhLWcg3wmILY
        """
        quadrant = int(math.floor(angle / (math.pi / 2))) & 3
        sign_alpha = angle if ((quadrant & 1) == 0) else math.pi - angle
        alpha = (sign_alpha % math.pi + math.pi) % math.pi

        bb_w = w * math.cos(alpha) + h * math.sin(alpha)
        bb_h = w * math.sin(alpha) + h * math.cos(alpha)

        gamma = math.atan2(bb_w, bb_w) if (w < h) else math.atan2(bb_w, bb_w)

        delta = math.pi - alpha - gamma

        length = h if (w < h) else w

        d = length * math.cos(alpha)
        a = d * math.sin(alpha) / math.sin(delta)

        y = a * math.cos(gamma)
        x = y * math.tan(gamma)

        return (
            bb_w - 2 * x,
            bb_h - 2 * y
        )

If you need further examples and visualization in TensorFlow, you can use this repository. I hope this helps others.

- ByungSoo Ko
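For a square output (output_height == output_width), bb_w equals bb_h, so gamma is π/4 and the geometry in _largest_rotated_rect collapses to a closed form: the largest axis-aligned square inside a square of side s rotated by α has side s / (|cos α| + |sin α|). The sketch below assumes a square image and computes that ratio directly as the fraction for tf.image.central_crop; square_crop_fraction is a hypothetical helper, not part of the answer.

```python
import math

def square_crop_fraction(rotation_degree):
    """Fraction of a square image's side that survives rotation by
    `rotation_degree` followed by cropping to the largest axis-aligned square
    with no black corners: 1 / (|cos a| + |sin a|)."""
    a = math.radians(rotation_degree)
    return 1.0 / (abs(math.cos(a)) + abs(math.sin(a)))

for deg in (0, 15, 45):
    print(deg, round(square_crop_fraction(deg), 4))
```

For square images this value could replace float(lrr_height)/output_height in the central_crop call; the result always lies in [1/√2, 1], which is a valid central_fraction.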

9

tfa is deprecated; you can use the preprocessing layer [RandomRotation][1] instead.

tf.keras.layers.RandomRotation(factor)

import tensorflow_addons as tfa

tfa.image.transform_ops.rotate(image, radian)

- V.M

1

tfa is deprecated... is there any other alternative? - Cedric
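One point that is easy to miss with tf.keras.layers.RandomRotation: its factor is a fraction of a full turn (2π), not an angle in degrees or radians. A single float f gives a random rotation in [-f·2π, f·2π], and a pair (lower, upper) gives [lower·2π, upper·2π]. The conversion to degrees is plain arithmetic; factor_to_degree_range is a hypothetical helper for illustration, not a Keras API.

```python
def factor_to_degree_range(factor):
    """Rotation range, in degrees, implied by a Keras RandomRotation `factor`.

    `factor` is a fraction of a full turn (2*pi): a single non-negative float f
    gives [-f * 360, f * 360]; a pair (lower, upper) gives [lower * 360, upper * 360].
    """
    if isinstance(factor, (tuple, list)):
        lower, upper = factor
    else:
        lower, upper = -factor, factor
    return lower * 360.0, upper * 360.0

print(factor_to_degree_range(0.1))          # (-36.0, 36.0)
print(factor_to_degree_range((-0.2, 0.3)))  # (-72.0, 108.0)
```

So RandomRotation(0.1) rotates by up to ±36°. The layer only rotates when called with training=True (as inside model.fit); at inference it passes images through unchanged.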

4

Update: see @astromme's answer. TensorFlow now supports rotating images natively.

Back when TensorFlow had no native method, you could try something like this:

    import numpy as np
    import tensorflow as tf
    from PIL import Image

    sess = tf.InteractiveSession()

    # Pass the image tensor to a PIL image (image.eval() uses the default session)
    pil_image = Image.fromarray(image.eval())

    # Use PIL or a similar library to rotate; PIL rotates counter-clockwise by `degrees`
    rotated = pil_image.rotate(degrees)

    # Convert the rotated image back to a tensor
    rotated_tensor = tf.convert_to_tensor(np.array(rotated))

- mathetes

2

tf.contrib is not available in TensorFlow 2.

For TensorFlow >= 2.*, you can use the following:

tf.keras.preprocessing.image.random_rotation(x, rg, row_axis=1, col_axis=2, channel_axis=0, fill_mode='nearest', cval=0., interpolation_order=1)

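You can find the documentation here: https://www.tensorflow.org/api_docs/python/tf/keras/preprocessing/image/random_rotation. Note that rg is the rotation range in degrees, and that the defaults row_axis=1, col_axis=2, channel_axis=0 expect a channels-first image; for a [height, width, channels] image, pass row_axis=0, col_axis=1, channel_axis=2.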

- AliPrf

1

Here is @zimmermc's answer updated for TensorFlow v0.12.

Changes: pack() is now stack(), and the order of the split parameters is reversed.

    import numpy as np
    import tensorflow as tf

    def rotate_image_tensor(image, angle, mode='white'):
        """
        Rotates a 3D tensor (HWD), which represents an image by given radian angle.

        New image has the same size as the input image.

        mode controls what happens to border pixels.
        mode = 'black' results in black bars (value 0 in unknown areas)
        mode = 'white' results in value 255 in unknown areas
        mode = 'ones' results in value 1 in unknown areas
        mode = 'repeat' keeps repeating the closest pixel known
        """
        s = image.get_shape().as_list()
        assert len(s) == 3, "Input needs to be 3D."
        assert (mode == 'repeat') or (mode == 'black') or (mode == 'white') or (mode == 'ones'), "Unknown boundary mode."
        image_center = [np.floor(x/2) for x in s]

        # Coordinates of new image
        coord1 = tf.range(s[0])
        coord2 = tf.range(s[1])

        # Create vectors of those coordinates in order to vectorize the image
        coord1_vec = tf.tile(coord1, [s[1]])

        coord2_vec_unordered = tf.tile(coord2, [s[0]])
        coord2_vec_unordered = tf.reshape(coord2_vec_unordered, [s[0], s[1]])
        coord2_vec = tf.reshape(tf.transpose(coord2_vec_unordered, [1, 0]), [-1])

        # center coordinates since rotation center is supposed to be in the image center
        coord1_vec_centered = coord1_vec - image_center[0]
        coord2_vec_centered = coord2_vec - image_center[1]

        coord_new_centered = tf.cast(tf.stack([coord1_vec_centered, coord2_vec_centered]), tf.float32)

        # Perform backward transformation of the image coordinates
        rot_mat_inv = tf.dynamic_stitch([[0], [1], [2], [3]], [tf.cos(angle), tf.sin(angle), -tf.sin(angle), tf.cos(angle)])
        rot_mat_inv = tf.reshape(rot_mat_inv, shape=[2, 2])
        coord_old_centered = tf.matmul(rot_mat_inv, coord_new_centered)

        # Find nearest neighbor in old image
        coord1_old_nn = tf.cast(tf.round(coord_old_centered[0, :] + image_center[0]), tf.int32)
        coord2_old_nn = tf.cast(tf.round(coord_old_centered[1, :] + image_center[1]), tf.int32)

        # Clip values to stay inside image coordinates
        if mode == 'repeat':
            coord_old1_clipped = tf.minimum(tf.maximum(coord1_old_nn, 0), s[0]-1)
            coord_old2_clipped = tf.minimum(tf.maximum(coord2_old_nn, 0), s[1]-1)
        else:
            outside_ind1 = tf.logical_or(tf.greater(coord1_old_nn, s[0]-1), tf.less(coord1_old_nn, 0))
            outside_ind2 = tf.logical_or(tf.greater(coord2_old_nn, s[1]-1), tf.less(coord2_old_nn, 0))
            outside_ind = tf.logical_or(outside_ind1, outside_ind2)

            coord_old1_clipped = tf.boolean_mask(coord1_old_nn, tf.logical_not(outside_ind))
            coord_old2_clipped = tf.boolean_mask(coord2_old_nn, tf.logical_not(outside_ind))

            coord1_vec = tf.boolean_mask(coord1_vec, tf.logical_not(outside_ind))
            coord2_vec = tf.boolean_mask(coord2_vec, tf.logical_not(outside_ind))

        coord_old_clipped = tf.cast(tf.transpose(tf.stack([coord_old1_clipped, coord_old2_clipped]), [1, 0]), tf.int32)

        # Coordinates of the new image
        coord_new = tf.transpose(tf.cast(tf.stack([coord1_vec, coord2_vec]), tf.int32), [1, 0])

        image_channel_list = tf.split(image, s[2], 2)

        image_rotated_channel_list = list()
        for image_channel in image_channel_list:
            image_chan_new_values = tf.gather_nd(tf.squeeze(image_channel), coord_old_clipped)

            if (mode == 'black') or (mode == 'repeat'):
                background_color = 0
            elif mode == 'ones':
                background_color = 1
            elif mode == 'white':
                background_color = 255

            image_rotated_channel_list.append(tf.sparse_to_dense(coord_new, [s[0], s[1]], image_chan_new_values,
                                                                 background_color, validate_indices=False))

        image_rotated = tf.transpose(tf.stack(image_rotated_channel_list), [1, 2, 0])

        return image_rotated

- XCS

0

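To rotate an image or a batch of images counter-clockwise by a multiple of 90 degrees, you can use tf.image.rot90(image, k=1, name=None).

k is the number of 90-degree rotations to apply.

For a single image, image is a 3-D tensor of shape [height, width, channels]; for a batch of images, it is a 4-D tensor of shape [batch, height, width, channels].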

- Safwan
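To see what k does on concrete numbers without a TensorFlow install, here is a NumPy stand-in: np.rot90 with axes=(0, 1) for a single HWC image, or axes=(1, 2) for an NHWC batch, turns only the height/width axes counter-clockwise, the same layout handling described for tf.image.rot90. The helper name rot90_images is hypothetical.

```python
import numpy as np

def rot90_images(images, k=1):
    """Rotate an HWC image or an NHWC batch counter-clockwise by k * 90 degrees.

    Only the height and width axes are rotated; batch and channel axes are untouched.
    """
    axes = (0, 1) if images.ndim == 3 else (1, 2)
    return np.rot90(images, k=k, axes=axes)

batch = np.arange(2 * 2 * 3 * 1).reshape(2, 2, 3, 1)  # [batch, height, width, channels]
rotated = rot90_images(batch, k=1)
print(rotated.shape)  # (2, 3, 2, 1)
```

Height and width swap for odd k, and k=4 brings every image back to its original orientation.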

Page content provided by Stack Overflow; click the link above to view the original English version.

Original link