I'm running into an issue where the depth data for the .builtInDualCamera appears to be rotated 90 degrees when isFilteringEnabled = true.

Here is my code:

    fileprivate let session = AVCaptureSession()
    fileprivate let meta = AVCaptureMetadataOutput()
    fileprivate let video = AVCaptureVideoDataOutput()
    fileprivate let depth = AVCaptureDepthDataOutput()
    fileprivate let camera: AVCaptureDevice
    fileprivate let input: AVCaptureDeviceInput
    fileprivate let synchronizer: AVCaptureDataOutputSynchronizer

    init(delegate: CaptureSessionDelegate?) throws {
        self.delegate = delegate
        session.sessionPreset = .vga640x480

        // Setup Camera Input
        let discovery = AVCaptureDevice.DiscoverySession(deviceTypes: [.builtInDualCamera], mediaType: .video, position: .unspecified)
        if let device = discovery.devices.first {
            camera = device
        } else {
            throw SessionError.CameraNotAvailable("Unable to load camera")
        }
        input = try AVCaptureDeviceInput(device: camera)
        session.addInput(input)

        // Setup Metadata Output (Face)
        session.addOutput(meta)
        if meta.availableMetadataObjectTypes.contains(AVMetadataObject.ObjectType.face) {
            meta.metadataObjectTypes = [ AVMetadataObject.ObjectType.face ]
        } else {
            print("Can't Setup Metadata: \(meta.availableMetadataObjectTypes)")
        }

        // Setup Video Output
        video.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_32BGRA]
        session.addOutput(video)
        video.connection(with: .video)?.videoOrientation = .portrait

        // ****** THE ISSUE IS WITH THIS BLOCK HERE ******
        // Setup Depth Output
        depth.isFilteringEnabled = true
        session.addOutput(depth)
        depth.connection(with: .depthData)?.videoOrientation = .portrait

        // Setup Synchronizer
        synchronizer = AVCaptureDataOutputSynchronizer(dataOutputs: [depth, video, meta])
        let outputRect = CGRect(x: 0, y: 0, width: 1, height: 1)
        let videoRect = video.outputRectConverted(fromMetadataOutputRect: outputRect)
        let depthRect = depth.outputRectConverted(fromMetadataOutputRect: outputRect)

        // Ratio of the video size to the depth size (longest side of each)
        scale = max(videoRect.width, videoRect.height) / max(depthRect.width, depthRect.height)

        // Set the camera to the frame rate of the depth data collection
        try camera.lockForConfiguration()
        if let frameDuration = camera.activeDepthDataFormat?.videoSupportedFrameRateRanges.first?.minFrameDuration {
            camera.activeVideoMinFrameDuration = frameDuration
        }
        camera.unlockForConfiguration()

        super.init()
        synchronizer.setDelegate(self, queue: syncQueue)
    }

    func dataOutputSynchronizer(_ synchronizer: AVCaptureDataOutputSynchronizer, didOutput data: AVCaptureSynchronizedDataCollection) {
        guard let delegate = self.delegate else {
            return
        }

        // Check to see if all the data is actually here
        guard
            let videoSync = data.synchronizedData(for: video) as? AVCaptureSynchronizedSampleBufferData,
            !videoSync.sampleBufferWasDropped,
            let depthSync = data.synchronizedData(for: depth) as? AVCaptureSynchronizedDepthData,
            !depthSync.depthDataWasDropped
        else {
            return
        }

        // It's OK if the face isn't found.
        let face: AVMetadataFaceObject?
        if let metaSync = data.synchronizedData(for: meta) as? AVCaptureSynchronizedMetadataObjectData {
            face = (metaSync.metadataObjects.first { $0 is AVMetadataFaceObject }) as? AVMetadataFaceObject
        } else {
            face = nil
        }

        // Convert Buffers to CIImage
        let videoImage = convertVideoImage(fromBuffer: videoSync.sampleBuffer)
        let depthImage = convertDepthImage(fromData: depthSync.depthData, andFace: face)

        // Call Delegate
        delegate.captureImages(video: videoImage, depth: depthImage, face: face)
    }

    fileprivate func convertVideoImage(fromBuffer sampleBuffer: CMSampleBuffer) -> CIImage {
        // Convert from a Core Media sample buffer to a CIImage - fairly straightforward
        let pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer)
        let image = CIImage(cvPixelBuffer: pixelBuffer!)
        return image
    }

    fileprivate func convertDepthImage(fromData depthData: AVDepthData, andFace face: AVMetadataFaceObject?) -> CIImage {
        let convertedDepth: AVDepthData

        // Convert 16-bit floats up to 32-bit
        if depthData.depthDataType != kCVPixelFormatType_DisparityFloat32 {
            convertedDepth = depthData.converting(toDepthDataType: kCVPixelFormatType_DisparityFloat32)
        } else {
            convertedDepth = depthData
        }

        // Pixel buffer comes straight from depthData
        let pixelBuffer = convertedDepth.depthDataMap
        let image = CIImage(cvPixelBuffer: pixelBuffer)
        return image
    }
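As a side note on the `scale` value computed in the initializer, here is a minimal worked sketch of that ratio. The helper name `videoToDepthScale` and the 320x240 depth size are assumptions for illustration only; the real rectangles come from `outputRectConverted(fromMetadataOutputRect:)` at runtime.

```swift
import Foundation

/// Ratio of the longest video side to the longest depth side,
/// mirroring the expression used in the initializer.
/// (Hypothetical helper, not part of the original code.)
func videoToDepthScale(videoRect: CGRect, depthRect: CGRect) -> CGFloat {
    return max(videoRect.width, videoRect.height) / max(depthRect.width, depthRect.height)
}

// Assumed sizes: 640x480 video (the .vga640x480 preset) and a 320x240 depth map.
let exampleVideoRect = CGRect(x: 0, y: 0, width: 640, height: 480)
let exampleDepthRect = CGRect(x: 0, y: 0, width: 320, height: 240)
print(videoToDepthScale(videoRect: exampleVideoRect, depthRect: exampleDepthRect)) // 2.0
```

Because the expression takes the longer side of each rectangle, it gives the same result whether the buffers arrive in portrait or landscape, so `scale` on its own would not reveal a rotated depth map.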

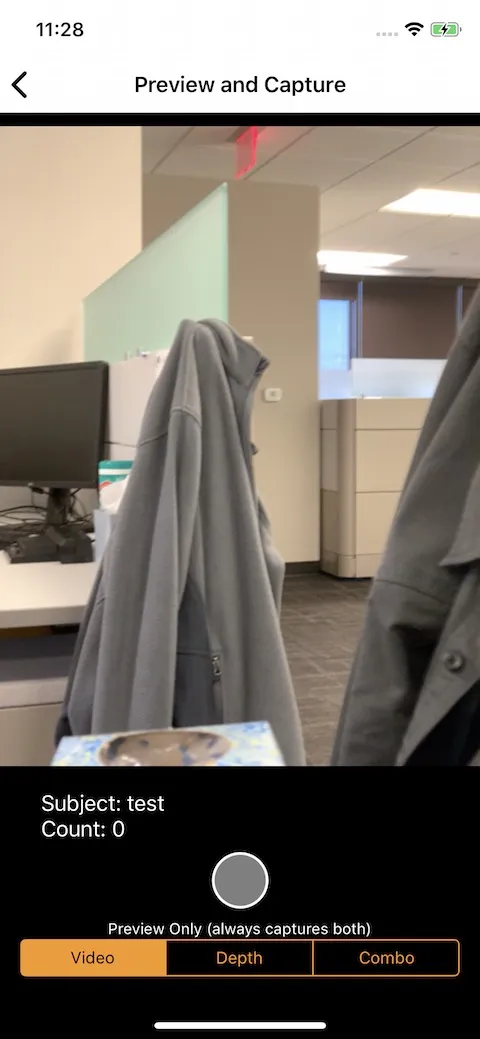

The raw video is shown above, for reference.

When the values are:

    // Setup Depth Output
    depth.isFilteringEnabled = false
    depth.connection(with: .depthData)?.videoOrientation = .portrait

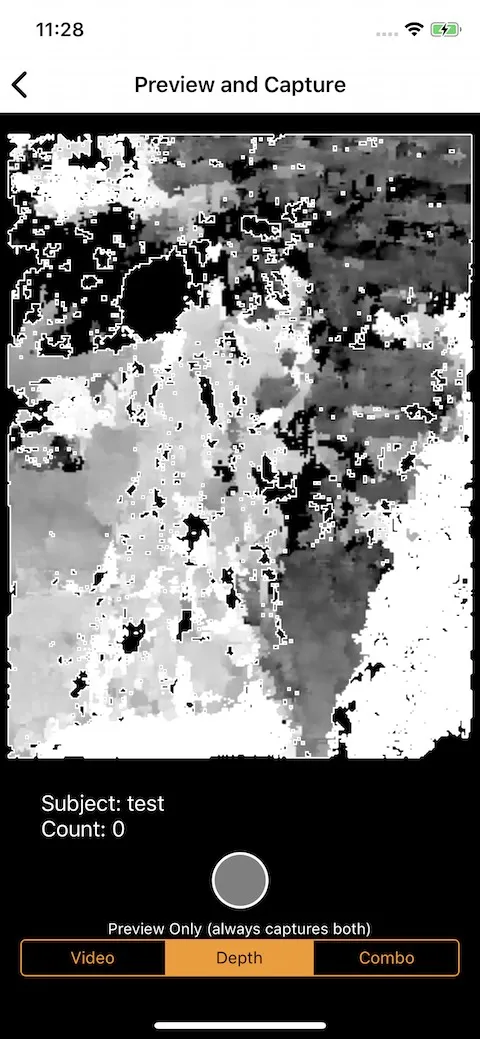

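the depth image looks like the one above. As expected, the closer jacket is white, the farther jacket is grey, and the distance is dark grey.

When the values are:

    // Setup Depth Output
    depth.isFilteringEnabled = true
    depth.connection(with: .depthData)?.videoOrientation = .portrait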

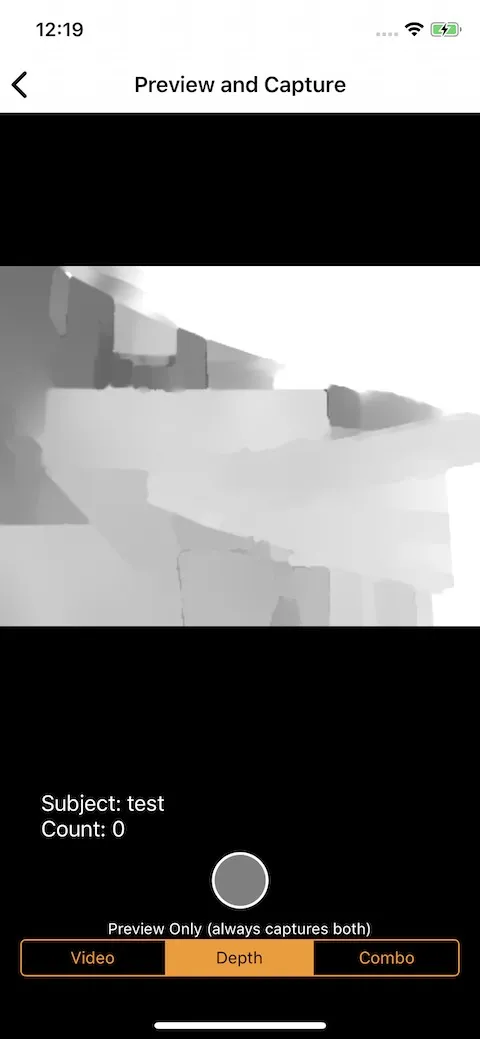

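the depth image looks like the one above. The color values appear to be in the right places, but the shapes produced by the smoothing filter appear to be rotated.

When the values are:

    // Setup Depth Output
    depth.isFilteringEnabled = true
    depth.connection(with: .depthData)?.videoOrientation = .landscapeRight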

the image looks like this: both the colors and the shapes are horizontal.
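One way to compare these configurations numerically rather than by eye would be to log the dimensions of the depth map in each case. The sketch below is only a diagnostic aid; the helper names `dimensions(of:)` and `isPortrait(_:)` are hypothetical and not part of the code above.

```swift
import CoreVideo

/// Returns "WIDTHxHEIGHT" for a pixel buffer, for example the
/// `depthDataMap` of an AVDepthData. (Hypothetical helper.)
func dimensions(of buffer: CVPixelBuffer) -> String {
    return "\(CVPixelBufferGetWidth(buffer))x\(CVPixelBufferGetHeight(buffer))"
}

/// True when the buffer is taller than it is wide, which is what a
/// connection set to .portrait would be expected to deliver.
func isPortrait(_ buffer: CVPixelBuffer) -> Bool {
    return CVPixelBufferGetHeight(buffer) > CVPixelBufferGetWidth(buffer)
}
```

Inside the synchronizer callback this could be called as `print(dimensions(of: depthSync.depthData.depthDataMap), depthSync.depthData.isDepthDataFiltered)`. If the reported dimensions are the same with filtering on and off, the buffer itself is not being rotated, which would point at the filter's output rather than at the connection's orientation.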

What am I doing wrong that is causing these incorrect values?

I've tried re-ordering the code:

    // Setup Depth Output
    depth.connection(with: .depthData)?.videoOrientation = .portrait
    depth.isFilteringEnabled = true

But that did nothing.

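I think this is an issue related to iOS 12, because I remember it working just fine under iOS 11 (although I don't have any saved images to prove it).

Any help is appreciated, thanks!

isFilteringEnabled is the flag you set when you want to see the filtered image. You can check that flag in convertVideoImage or convertDepthImage and rotate the CIImage so that it displays correctly in your app. - NFarrell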
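The suggestion in this comment could be sketched roughly as follows, using `CIImage.oriented(_:)`. The function name `displayDepthImage` is hypothetical, and the default correction of `.right` (90 degrees clockwise) is an assumption: the correct direction, if any, would have to be confirmed on a device.

```swift
import CoreImage
import ImageIO

/// Rotates the depth image only when filtering is enabled.
/// `correction` defaults to `.right`, which is an assumed direction
/// and not something confirmed by the question. (Hypothetical helper.)
func displayDepthImage(_ image: CIImage,
                       isFilteringEnabled: Bool,
                       correction: CGImagePropertyOrientation = .right) -> CIImage {
    guard isFilteringEnabled else { return image }
    return image.oriented(correction)
}
```

In `convertDepthImage` this would wrap the final image, for example `return displayDepthImage(image, isFilteringEnabled: depth.isFilteringEnabled)`. Note that the question reports the color values in the right places with only the smoothed shapes rotated, so rotating the whole image may not fully address the symptom.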